-

Notifications

You must be signed in to change notification settings - Fork 37

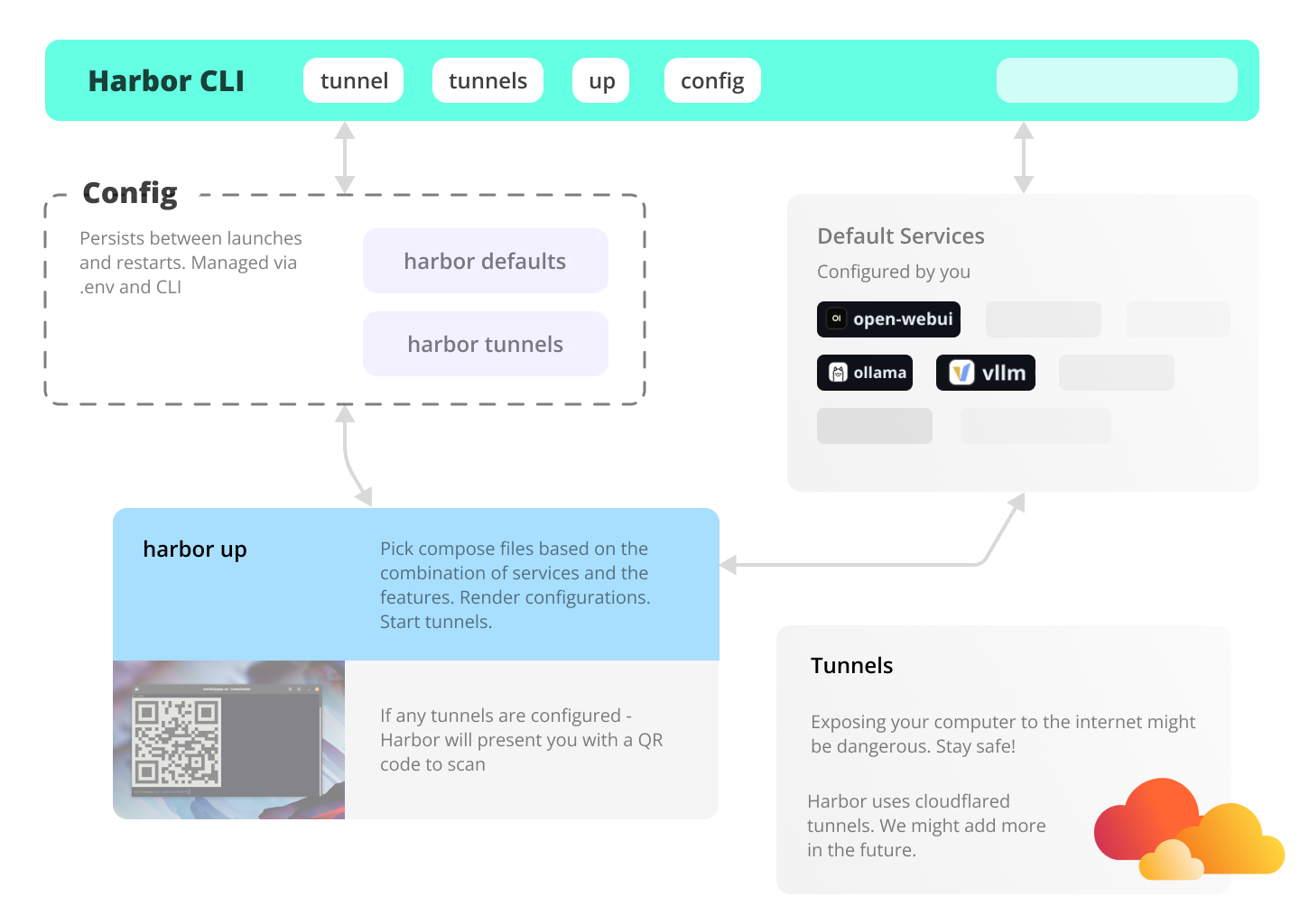

3. Harbor CLI Reference

Alias:

harbor u

Starts selected services. See the list of available services here. Run harbor defaults to see the default list of services that will be started. When starting additional services, you might need to run harbor down first, so that all the services can pick up the updated configuration. API-only services can be started without stopping the main stack.

# Start with default services

harbor up

# Start with additional services

# See service descriptions in the Services Overview section

harbor up searxng

# Start with multiple additional services

harbor up webui ollama searxng llamacpp tts tgi lmdeploy litellm

up supports a few additional behaviors, see below.

You can instruct Harbor to start tailing logs of the services that are started.

# Starts tailing logs as soon as "docker compose up" is done

harbor up webui --tail

# Alias

harbor up webui -t

You can instruct Harbor to also open the service that is started with up, once the docker compose up is done.

# Start default services + searxng and open

# searxng in the default browser

harbor up searxng --open

# Alias

harbor up searxng -o

You can also configure Harbor to automatically run harbor open for the current default UI service. This is useful if you always want to have the UI open when you start Harbor. The behavior can be enabled by setting the ui.autoopen config field to true.

# Enable auto-open

harbor config set ui.autoopen true

# Disable auto-open (default)

harbor config set ui.autoopen false

You can switch the default UI service with the ui.main config field.

# Set the default UI service

harbor config set ui.main hollama

You can instruct Harbor to only start the services you specify and skip the default ones.

# Start only the services you explicitly specify

harbor up --no-defaults searxng

You can configure Harbor to automatically start tunnels for given services when running up. This is managed by the harbor tunnels command.

# Add webui to the list of services that will be tunneled

# whenever `harbor up` is run

harbor tunnels add webui

[!WARN] Exposing your services to the internet is dangerous. Be safe! It's a bad idea to expose a service to the Internet without any authentication.

Alias:

harbor d

Stops all currently running services.

# Stop all services

harbor down

# Pass down options to docker-compose

harbor down --remove-orphans

Alias:

harbor r

Restarts Harbor stack. Very useful for adjusting the configuration on the fly.

# Restart everything

harbor restart

# Restart a specific service only

harbor restart tabbyapi

# 🚩 Restarting a single service might be

# finicky, if something doesn't look right

# try down/up cycle instead

Pulls the latest images for the selected services. Note that it works with Harbor service handles instead of Docker container names (those will match for the primary service container, however).

# Pull the latest images for the default services

harbor pull

# Pull the latest images for additional services

harbor pull searxng

# Pull everything (used or not)

# ⚠️ Warning: This will consume

# a few dozen gigabytes of bandwidth

harbor pull "*"

Builds the images for the selected services. Mostly relevant for services that have their Dockerfile local in the Harbor repository.

# HF Downloader is an example of a service that

# has a local Dockerfile

harbor build hfdownload

Proxy to docker-compose ps command. Displays the status of all services.

harbor ps

Alias:

harbor l

Tails logs for all or selected services.

harbor logs

# Show logs for a specific service

harbor logs webui

# Show logs for multiple services

harbor logs webui ollama

# Filter specific logs with grep

harbor logs webui | grep ERROR

# Start tailing logs after "harbor up"

harbor up llamacpp --tail

# Show last 1000 lines in the initial tail chunk

harbor logs -n 1000

Additionally, harbor logs accepts all the options that docker-compose logs does.

Allows executing arbitrary commands in the container running given service. Useful for inspecting service at runtime or performing some custom operations that aren't natively covered by Harbor CLI.

# This is the same folder as "harbor/open-webui"

harbor exec webui ls /app/backend/data

# Check the processes in searxng container

harbor exec searxng ps aux

exec offers plenty of flexibility. Some useful examples below.

Launch an interactive shell in the running container with one of the services.

# Launch "bash" in the ollama service

harbor exec ollama bash

# You are then landed in the interactive

# container shell

$ root@279a3a523a0b:/#

Access useful scripts and CLIs from the llamacpp.

# See .sh scripts from the llama.cpp

harbor exec llamacpp ls ./scripts

# Run one of the bundled CLI tools

harbor exec llamacpp ./llama-bench --help

Ensuring that the service is running might not be convenient. See harbor run and harbor cmd.

Runs (in the order of precedence):

- One of configured aliases

- A command in the Harbor services
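As a minimal sketch of the lookup order above, the function below checks a list of aliases before a list of service handles. The `ALIASES` and `SERVICES` variables and both function names are hypothetical stand-ins for illustration, not Harbor's actual implementation.

```shell
# Illustrative data only: "echo" is deliberately present in both lists
ALIASES="env echo"
SERVICES="litellm echo webui"

# contains <list> <name>: succeeds if <name> is a whole word in <list>
contains() {
  case " $1 " in
    *" $2 "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Aliases are checked first, so they win on a name conflict
resolve_run_target() {
  if contains "$ALIASES" "$1"; then
    echo "alias"
  elif contains "$SERVICES" "$1"; then
    echo "service"
  else
    echo "unknown"
  fi
}

resolve_run_target echo     # prints "alias"
resolve_run_target litellm  # prints "service"
```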

# Configure and run an alias to quickly edit

harbor alias set env 'code $(harbor home)/.env'

harbor run env

Aliases take precedence over services in case of a name conflict. See the harbor aliases reference for more details.

Unlike harbor exec, harbor run starts a new container with the given command. This is useful for running one-off commands or scripts that don't require the service to be running. Note that the command accepts the service handle, not the container name, main container for the service will be used.

# Run a one-off command in the litellm service

harbor run litellm --help

This command has a pretty rigid structure: it doesn't allow you to override the entrypoint or run an interactive shell. See harbor exec and harbor cmd for more flexibility.

harbor run litellm --help

# Will run the same command as

$(harbor cmd litellm) run litellm --help

Launch interactive shell in the service's container. Useful for debug and inspection.

harbor shell tabbyui

Prepares the same docker compose call that is used by Harbor itself. You can then use it to run arbitrary Docker commands.

# Will print docker compose command

# that is used to start these services

harbor cmd webui litellm vllm

It's most useful to be combined with eval of the returned command.

$(harbor cmd litellm) run litellm --help

# Unlike exec, this doesn't require service to be running

$(harbor cmd litellm) run -it --entrypoint bash litellm

# Note, this is not an equivalent of `harbor down`,

# It'll only shut down default services.

$(harbor cmd) down

# Harbor has a special wildcard notation for compose commands.

# Note the quotes around the wildcard (otherwise it'll be expanded by the shell)

$(harbor cmd "*") down

# And now, this is an equivalent of

harbor down

Renders Harbor's Docker Compose configuration into a standalone config that can be moved and used elsewhere. Accepts the same options as harbor up.

# Eject with default services

harbor eject

# Eject with additional services

harbor eject searxng

# Likely, you want the output to be saved in a file

harbor eject searxng llamacpp > docker-compose.harbor.yml

Runs Ollama CLI in the container against the Harbor configuration.

# All Ollama commands are available

harbor ollama --version

# Show currently cached models

harbor ollama list

# See for more commands

harbor ollama --help

# Configure ollama version, accepts a docker tag

harbor config set ollama.version 0.3.7-rc5-rocm

Runs CLI tasks specific to managing llamacpp service.

# Show the model currently configured to run

harbor llamacpp model

# Set a new model to run via a HuggingFace URL

# ⚠️ Note, other kinds of URLs are not supported

harbor llamacpp model https://huggingface.co/user/repo/blob/main/file.gguf

# Above command is an equivalent of

harbor config set llamacpp.model https://huggingface.co/user/repo/blob/main/file.gguf

# And will translate to a --hf-repo and --hf-file flags for the llama.cpp CLI runtime

Runs CLI tasks specific to managing text-generation-inference service.

# Show the model currently configured to run

harbor tgi model

# Unlike llama.cpp, a few more parameters are needed,

# example of setting them below

harbor tgi quant awq

harbor tgi revision 4.0bpw

# Alternatively, configure all in one go

harbor config set tgi.model.specifier '--model-id repo/model --quantize awq --revision 3_5'

Runs CLI tasks specific to managing litellm service.

# change default username and password to use litellm UI

harbor litellm username admin

harbor litellm password admin

# Open LiteLLM UI in the browser

harbor litellm ui

# Note that it's different from the main litellm endpoint

# that can be opened/accessed with general commands:

harbor open litellm

harbor url litellm

Runs HuggingFace CLI in the container against the host's HuggingFace cache.

# All HF commands are available

harbor hf --help

# Show current cache status

harbor hf scan-cache

Harbor's hf CLI is expanded with some additional commands for convenience.

Parses the HuggingFace URL and prints the repository and file names. Useful for setting the model in the llamacpp service.
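For illustration, the same split can be approximated with plain shell parameter expansion. This is only a sketch that assumes URLs of the form `https://huggingface.co/<user>/<repo>/blob/<branch>/<file>`; `parse_hf_url` is a hypothetical helper, not the code Harbor runs.

```shell
# parse_hf_url <url>: print the repository and file parts of a HuggingFace file URL
parse_hf_url() (
  path="${1#https://huggingface.co/}"  # user/repo/blob/main/file.gguf
  repo="${path%%/blob/*}"              # everything before "/blob/"
  rest="${path#*/blob/}"               # branch/file
  file="${rest#*/}"                    # drop the branch segment
  echo "Repository: $repo"
  echo "File: $file"
)

parse_hf_url "https://huggingface.co/user/repo/blob/main/file.gguf"
```

The subshell function body keeps the intermediate variables from leaking into the calling shell.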

# Get repository and file names from the HuggingFace URL

harbor hf parse-url https://huggingface.co/user/repo/blob/main/file.gguf

# > Repository: user/repo

# > File: file.gguf

Manage HF token for accessing private/gated models.

# Set the token

harbor hf token <token>

# Show the token

harbor hf token

This is a proxy for the awesome HuggingFaceModelDownloader CLI pre-configured to run in the same way as the other Harbor services.

# See the original help

harbor hf dl --help

# EXL2 example

#

# -s ./hf - Save the model to global HuggingFace cache (mounted to ./hf)

# -c 10 - make download go brr with 10 concurrent connections

# -m - model specifier in user/repo format

# -b - model revision/branch specifier (where applicable)

harbor hf dl -c 10 -m turboderp/TinyLlama-1B-exl2 -b 2.3bpw -s ./hf

# GGUF example

#

# -s ./llama.cpp - Save the model to global llama.cpp cache (mounted to ./llama.cpp)

# -c 10 - make download go brr with 10 concurrent connections

# -m - model specifier in user/repo format

# :Q2_K - file filter postfix - will only download files with this postfix

harbor hf dl -c 10 -m TheBloke/TinyLlama-1.1B-Chat-v1.0-GGUF:Q2_K -s ./llama.cpp

HuggingFace's own download utility. Works great when you want to download things for tgi, aphrodite, tabbyapi, vllm, etc.

# Download the model to the global HuggingFace cache

harbor hf download user/repo

# Set the token for private/gated models

harbor hf token <token>

harbor hf download user/private-repo

# Download a specific file

harbor hf download user/repo file

Tip

You can use harbor find to locate downloaded files on your system.

A shortcut from the terminal to the HuggingFace model search. It will open the search results in the default browser.
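Judging by the URLs shown in the examples in this section, the query words are joined with `%20` and appended to the model search page. A rough sketch of that construction follows; `hf_search_url` is a hypothetical helper that only encodes spaces, so other special characters would need proper URL encoding.

```shell
# hf_search_url <word>...: build a HuggingFace model search URL from query words
hf_search_url() (
  query="$*"                                          # join arguments with spaces
  encoded=$(printf '%s' "$query" | sed 's/ /%20/g')   # encode spaces only
  echo "https://huggingface.co/models?sort=trending&search=$encoded"
)

hf_search_url gguf gemma-2
```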

# Search for the models with the query

harbor hf find gguf gemma-2

# will open this URL

# https://huggingface.co/models?sort=trending&search=gguf%20gemma-2

# Search for the models with the query

harbor hf find exl2 gemma-2-2b

# will open this URL

# https://huggingface.co/models?sort=trending&search=exl2%20gemma-2-2b

Runs CLI tasks specific to managing vllm service.

Get/set the model currently configured to run.

# Show the model currently configured to run

harbor vllm model

# Set a new model to run via a repository specifier

harbor vllm model user/repo

Manage extra arguments to pass to the vllm engine.

# See the list of arguments in

# the official CLI

harbor run vllm --help

# Show the current arguments

harbor vllm args

# Set new arguments

harbor vllm args '--served-model-name vllm --device cpu'

Select one of the attention backends. See VLLM_ATTENTION_BACKEND in the official env var docs for reference.

# Show the current attention backend

harbor vllm attention

# Set a new attention backend

harbor vllm attention 'ROCM_FLASH'

Runs CLI tasks specific to managing webui service.

Get/set current version of the WebUI. Accepts a docker tag from the GHCR registry

# Show the current version

harbor webui version

# Set a new version

harbor webui version dev-cuda

Get/Set the secret JWT key for the webui service. Allows Open WebUI JWT tokens to remain valid between Harbor restarts.

# Show the current secret

harbor webui secret

# Set a new secret

harbor webui secret sk-203948

Get/Set the name of the service for Open WebUI (by default "Harbor").

# Show the current name

harbor webui name

# Set a new name

harbor webui name "Pirate Harbor"

Get/Set the log level for the webui service. Controls the verbosity of the logs. See the official logging documentation.

# INFO is the default log level

harbor webui log

# Set to DEBUG for more visibility

harbor webui log DEBUG

Manage OpenAI-related configurations for related services.

One unusual thing is that Harbor allows setting up multiple OpenAI APIs and keys. This is mostly useful for the services that support such a configuration, for example LiteLLM or Open WebUI.

When setting one or more Keys/URLs - the first one will be propagated to serve as "default" for services that require strictly one url/key pair.
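The "first entry becomes the default" rule can be pictured with a tiny helper. This is a sketch only: `default_entry` is a hypothetical function and the key values are placeholders, not real credentials or Harbor internals.

```shell
# default_entry <entry>...: print the first entry of a list, or nothing if it is empty
default_entry() {
  if [ "$#" -gt 0 ]; then
    printf '%s\n' "$1"
  fi
}

# With two keys configured, the first one serves as the default
default_entry sk-first sk-second
```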

Manage OpenAI API keys for the services that require them.

# Show the current API keys

harbor openai keys

harbor openai keys ls

# Add a new API key

harbor openai keys add <key>

# Remove an API key

harbor openai keys rm <key>

# Remove by index (zero-based)

harbor openai keys rm 0

# Underlying config option

harbor config get openai.keys

When setting API keys, the first one is also propagated to be the "default" one, for services that require strictly one key.

Manage OpenAI API URLs for the services that require them.

# Show the current URLs

harbor openai urls

harbor openai urls ls

# Add a new URL

harbor openai urls add <url>

# Remove a URL

harbor openai urls rm <url>

# Remove by index (zero-based)

harbor openai urls rm 0

# Underlying config option

harbor config get openai.urls

When setting API URLs, the first one is also propagated to be the "default" one, for services that require strictly one URL.

Manage TabbyAPI-related configurations for related services.

Get/Set the model currently configured to run.

# Show the model currently configured to run

harbor tabbyapi model

# Set a new model to run via a repository specifier

harbor tabbyapi model user/repo

# For example:

harbor tabbyapi model Annuvin/gemma-2-2b-it-abliterated-4.0bpw-exl2

Manage extra arguments to pass to the tabbyapi engine. See the arguments in the official Configuration Wiki.

# Show the current arguments

harbor tabbyapi args

# Set new arguments

harbor tabbyapi args --log-prompt true

You can find some other items not listed above by running the tabbyapi CLI with Harbor:

harbor run tabbyapi --help

When tabbyapi is running, opens the Docs Swagger UI in the default browser.

harbor tabbyapi docs

Tip

Similarly to the official Plandex CLI, also available with pdx alias.

Access Plandex CLI for interactions with the self-hosted Plandex instance.

See the service guide for some additional details on the Plandex service setup.

# Access Plandex own CLI

harbor pdx --help

Whenever you're running harbor pdx, the tool will have access to the current folder as if it was called directly in the terminal.

Pings the Plandex server to check if it's up and running, using the official /health endpoint.

# Check the Plandex server health

harbor pdx health # OK

Allows you to verify which specific folder will be mounted to the Plandex containers as the workspace.

# Show the folder that will be mounted to the Plandex CLI

# against the current location

harbor pdx pwd

A CLI to manage the mistralrs service.

Everything except the commands specified below is passed to the original mistralrs-server CLI.
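The passthrough behavior can be pictured as a simple dispatcher. This is a hedged sketch: `dispatch_mistralrs` is a hypothetical function, and the list of handled subcommands is taken from the ones documented in this section rather than from Harbor's source.

```shell
# dispatch_mistralrs <args>...: report whether Harbor handles the subcommand itself
# or would forward the arguments to mistralrs-server
dispatch_mistralrs() {
  case "$1" in
    health|docs|model|args|type|arch|isq)
      echo "harbor handles: $1" ;;
    *)
      echo "passed through to mistralrs-server: $*" ;;
  esac
}

dispatch_mistralrs model
dispatch_mistralrs --help
```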

Pings the MistralRS server to check if it's up and running, using the official /health endpoint.

# Check the MistralRS server health

harbor mistralrs health # OK

Open official service docs in the default browser (when the service is running).

# Open MistralRS docs in the browser

harbor mistralrs docs

Get/Set the model currently configured to run. See a more detailed guide in the mistralrs service guide.

# Show the model currently configured to run

harbor mistralrs model

# Set a new model to run via a repository specifier

# For "plain" models:

harbor mistralrs model user/repo

# For "gguf" models:

harbor mistralrs model "container/folder -f model.gguf"

# See the guide above for a more detailed overview

Manage extra arguments to pass to the mistralrs engine. See the full list with harbor mistralrs --help.

# Show the current arguments

harbor mistralrs args

# Set new arguments

harbor mistralrs args "--no-paged-attn --throughput"

# Reset the arguments to the default

harbor mistralrs args ""

Get/Set the model type currently configured to run.

# Show the model type currently configured to run

harbor mistralrs type

# Set a new model type to run

harbor mistralrs type gguf

harbor mistralrs type plain

# See the service guide for setup on both

For the plain type, sets the architecture of the model. See the official reference.

# Show the model architecture currently configured to run

harbor mistralrs arch

# Set a new model architecture to run

harbor mistralrs arch mistral

harbor mistralrs arch gemma2

For the plain type, sets the in situ quantization (ISQ).

# Show the ISQ status currently configured to run

harbor mistralrs isq

# Set a new ISQ status to run

harbor mistralrs isq Q2K

Configure and run Open Interpreter CLI. (Almost) everything except the commands specified below is passed to the original interpreter CLI.

Get/Set the model currently configured to run.

# Show the model currently configured to run

harbor opint model

# Set a new model to run

# must match the "id" of a model of a backend

# that'll be used to serve interpreter requests

harbor opint model <model>

# For example, for ollama

harbor opint model codestral

Manage extra arguments to pass to the Open Interpreter engine.

# Show the current arguments

harbor opint args

# Set new arguments

harbor opint args "--no-paged-attn --throughput"

Overrides the whole command that will be run in the Open Interpreter container. Useful for running something completely custom.

[!WARN] Resets "model" and "args" to empty strings.

# Set the command to run in the Open Interpreter container

harbor opint cmd "--profile agentic_code_expert.py"

Prints the directory that will be mounted to the Open Interpreter container as the workspace.

# Show the folder that will be mounted

# to the Open Interpreter CLI

harbor opint pwd

Alias:

harbor opint --profiles, harbor opint -p

Works identically (hopefully) to interpreter --profiles: opens the directory storing custom profiles for the Open Interpreter.

OS Mode is not supported as there's no established way to have full OS host control from within a container.

Opens the service URL in the default browser. In case of API services, you'll see the response from the service main endpoint.

# Without any arguments, will open

# the service from ui.main config field

harbor open

# `harbor open` will now open hollama

# by default

harbor config set ui.main hollama

# Open a specific service

# using its handle

harbor open ollama

Prints the URL of the service to the terminal.

# With default settings, this will print

# http://localhost:33831

harbor url llamacpp

Harbor will try to determine multiple additional URLs for the service:

# URL on local host

harbor url ollama

# URL on LAN

harbor url --lan ollama

harbor url --addressable ollama

harbor url -a ollama

# URL on Docker's intranet

harbor url -i ollama

harbor url --internal ollama

Generates a QR code for the service URL and prints it in the terminal.

# This service will open by default

harbor config get ui.main

# Generate a QR code for default UI

harbor qr

# Generate a QR code for a specific service

# Makes little sense for non-UI services.

harbor qr ollama

Alias:

harbor t

Opens a cloudflared tunnel to the local instance of the service. Useful for sharing the service with others or accessing it from a remote location.

[!WARN] Exposing your services to the internet is dangerous. Be safe! It's a bad idea to expose a service without any authentication whatsoever.

# Open a tunnel to the default UI service

harbor tunnel

# Open a tunnel to a specific service

harbor tunnel ollama

# Stop all running tunnels

harbor tunnel down

harbor tunnel stop

harbor t s

harbor t d

The command will print the URL of the tunnel as well as the QR code for it.

Let's say that you are absolutely certain that you want a tunnel to be available all the time you run Harbor. You can set up a list of services that will be tunneled automatically.

# See list config docs

harbor tunnels --help

# Show the current list of services

harbor tunnels

harbor tunnels ls

# Add a new service to the list

harbor tunnels add ollama

# Remove a service from the list

harbor tunnels rm ollama

# Remove by index (zero-based)

harbor tunnels rm 0

# Remove all services from the list

# Don't confuse with stopping the tunnels (see above)

harbor tunnels rm

harbor tunnels clear

# Stop all running tunnels

harbor tunnel down

harbor tunnel stop

harbor t s

harbor t d

You can also edit this setting directly in the .env:

HARBOR_SERVICES_TUNNELS="webui"

Whenever harbor up is run, these tunnels will be established, and Harbor will print their URLs as well as QR codes in the terminal.

Alias:

harbor ln

Creates a symlink to the harbor.sh script in the user's home bin directory. This allows you to run the script from any directory.

# Puts the script in the bin directory

harbor ln

If you're me and have to run harbor hundreds of times a day, ln comes with a --short option.

# Also links the short alias

harbor ln --short

You can adjust where harbor is linked and the names for the symlinks:

# Assuming it's not linked yet

# See the defaults

./harbor.sh config get cli.path

./harbor.sh config get cli.name

./harbor.sh config get cli.short

# Customize

./harbor.sh config set cli.path ~/bin

./harbor.sh config set cli.name ai

./harbor.sh config set cli.short ai

# Link

./harbor.sh ln --short

# Use

ai up

ai down

An antipode to harbor link. Removes previously added symlinks. Note that this uses the current links configuration, so if it was changed since the link was added, it might not work as expected.

# Removes the symlink(s)

harbor unlink

Displays or sets the list of default services that will be started when running harbor up. Will include one LLM backend and one LLM frontend out of the box.

# Show the current default services

harbor defaults

harbor defaults ls

# Add a new default service

harbor defaults add tts

# Remove a default service

harbor defaults rm tts

# Remove by index (zero-based)

harbor defaults rm 0

# Remove all services from the default list

harbor defaults rm

# This is an alias for the

# services.default config field

harbor config set services.default 'webui ollama searxng'

# You can also configure it

# via the .env file

cat $(harbor home)/.env | grep HARBOR_SERVICES_DEFAULT

Allows configuring additional aliases for the harbor run command. Any arbitrary shell command can be added as an alias. Aliases are managed in a key-value format, where the key is the alias name and the value is the command.

# Show the current list of aliases

harbor aliases

# Show aliases help

harbor aliases --help

# Same as above

harbor alias

harbor a

The alias is managed by harbor config internally, and is linked to the aliases config field.

# Will be empty, unless some aliases are configured

harbor config get aliases

# Placement in the `.env`:

cat $(harbor home)/.env | grep HARBOR_ALIASES

Lists all the currently set aliases.

harbor aliases ls

# Running without any args

# defaults to "ls" behavior

harbor aliases

harbor alias

harbor a

Adds a new alias to the list.

# Note the single quotes on the outside

# and double quotes on the inside

harbor alias set echo 'echo "I like $PWD!"'

You can then see the set alias:

harbor alias

echo: echo "I like $PWD!"

harbor alias get echo

# echo "I like $PWD!"

You can run aliases with harbor run:

harbor run echo

# I like /home/user/harbor

Obtain a command for a specific alias.

harbor alias get echo

Removes an alias from the list.

harbor alias rm echo

Print basic help information to the console.

harbor help

harbor --help

Prints the current version of the Harbor script.

harbor version

harbor --version

# Show the help for the config command

harbor config --help

Allows working with the harbor configuration via the CLI. Mostly useful for automation and scripting, as the configuration can also be managed via the .env file variables.

Translating CLI config fields to .env file variables:

# All three versions point to the same

# environment variable in the .env file

webui.host.port -> HARBOR_WEBUI_HOST_PORT

webui_host_port -> HARBOR_WEBUI_HOST_PORT

WEBUI_HOST_PORT -> HARBOR_WEBUI_HOST_PORT

Alias:

harbor config ls

# Show the current configuration

harbor config list

This will print all the configuration options and their values. The list can be quite long, so it's handy to pipe it to grep or less.

# Show the current configuration

harbor config list | grep WEBUI

You will see that configuration options have a namespace hierarchy. For example, everything related to the webui service will be under the WEBUI_ namespace.

Unprefixed variables will either be global or will be related to the Harbor CLI itself.
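The mapping between CLI config fields and .env variable names shown in this section can be sketched as a small function. This is an approximation based on the examples in this reference (uppercase, dots to underscores, `HARBOR_` prefix); `to_env_name` is a hypothetical helper, not Harbor's own code.

```shell
# to_env_name <field>: translate a CLI config field into its .env variable name
to_env_name() {
  # Uppercase the field, turn dots into underscores, then add the HARBOR_ prefix
  printf 'HARBOR_%s\n' "$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]' | tr '.' '_')"
}

# All three notations resolve to the same variable name
to_env_name webui.host.port
to_env_name webui_host_port
to_env_name WEBUI_HOST_PORT
```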

# Get a specific configuration value

# All versions below are equivalent and will return the same value

harbor config get webui.host.port

harbor config get webui.host_port

harbor config get WEBUI_HOST.PORT

harbor config get webui.HOST_PORT

# Set a new configuration value

harbor config set webui.host.port 8080

Resets the current .env configuration to its original form, based on the default.env file.

# You'll be asked to confirm the reset

harbor config reset

Will merge default.env with the current local .env in order to add new configuration options. Typically used after updating Harbor when new variables are added. Most likely, you won't need to run this manually, as it's done automatically after harbor update.

This process won't overwrite user-defined variables, only add new ones.
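A minimal sketch of such a non-destructive merge is shown here, assuming simple `KEY=value` lines. `merge_env` is a hypothetical helper and the variable names in the demo are placeholders; Harbor's real update logic may differ.

```shell
# merge_env <defaults-file> <current-file>: append keys missing from the current
# file, leaving every key the user already defined untouched
merge_env() (
  defaults="$1"
  current="$2"
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      ''|'#'*) continue ;;   # skip blank lines and comments
    esac
    key="${line%%=*}"
    if ! grep -q "^${key}=" "$current"; then
      printf '%s\n' "$line" >> "$current"
    fi
  done < "$defaults"
)

# Demo with throwaway files (placeholder variable names)
demo=$(mktemp -d)
printf 'EXAMPLE_PORT=1111\nEXAMPLE_NEW_OPTION=1\n' > "$demo/default.env"
printf 'EXAMPLE_PORT=8080\n' > "$demo/.env"
merge_env "$demo/default.env" "$demo/.env"
cat "$demo/.env"   # keeps EXAMPLE_PORT=8080 and gains EXAMPLE_NEW_OPTION=1
```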

# Merge the default.env with the current .env

harbor config update

Alias:

harbor profiles, harbor p

Allows creating and managing configuration profiles. It's attached to the .env file under the hood and allows you to switch between different configurations easily.

# Show the help for the profile command

harbor profile --help

Note

There are a few considerations when using profiles. Please read below.

- When the profile is loaded, modifications are not saved by default and will be lost when switching to another profile (or reloading the current one). Use harbor profile save <name> to persist the changes after making them

- Profiles are stored in the Harbor workspace and can be shared between different Harbor instances

- Profiles are not versioned and are not guaranteed to work between different Harbor versions

- You can also edit profiles as .env files in the workspace, it's not necessary to use the CLI
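To make the first consideration concrete, here is a sketch that models profiles as copies of the .env file. The `profiles/<name>.env` layout, the `WORKSPACE` variable, and both function names are assumptions made for illustration, not Harbor's documented storage format.

```shell
# Hypothetical workspace with a profiles directory next to .env
WORKSPACE=$(mktemp -d)
mkdir -p "$WORKSPACE/profiles"

# Saving copies the current .env into the profile; loading copies it back
profile_save() { cp "$WORKSPACE/.env" "$WORKSPACE/profiles/$1.env"; }
profile_load() { cp "$WORKSPACE/profiles/$1.env" "$WORKSPACE/.env"; }

echo 'HARBOR_UI_MAIN=webui' > "$WORKSPACE/.env"
profile_save dev
echo 'HARBOR_UI_MAIN=hollama' > "$WORKSPACE/.env"  # modification that is never saved
profile_load dev                                    # reloading discards it
cat "$WORKSPACE/.env"                               # prints HARBOR_UI_MAIN=webui
```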

Alias:

harbor profile ls

Lists currently saved profiles.

harbor profile list

harbor profile ls

Alias:

harbor profile save

Creates the new profile from the current configuration.

# Create a new profile named "dev"

harbor profile add dev

Alias:

harbor profile load, harbor profile set

Loads the profile with the given name.

# Load the "dev" profile

harbor profile use dev

Alias:

harbor profile rm

Removes the profile with the given name.

# Remove the "dev" profile

harbor profile remove dev

This is a helper command that brings a configuration experience similar to harbor config to the service-specific environment variables that are not directly managed by the Harbor CLI.

This command writes to the override.env file for a given service, you can also do that manually, if more convenient.
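Doing this by hand amounts to an "update or append" on a `KEY=value` file. The sketch below shows one way to do that, including the dot/underscore key normalization this command is documented to accept. `set_override` is a hypothetical helper and the demo uses a temporary file rather than a real override.env.

```shell
# set_override <file> <key> <value>: set KEY=value in an env file,
# replacing any previous definition of the same key
set_override() (
  file="$1"
  key=$(printf '%s' "$2" | tr '[:lower:]' '[:upper:]' | tr '.' '_')
  value="$3"
  touch "$file"
  # Keep every line except an older definition of the key, then append the new one
  grep -v "^${key}=" "$file" > "$file.tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$file.tmp"
  mv "$file.tmp" "$file"
)

override=$(mktemp)
set_override "$override" node.env development
set_override "$override" NODE_ENV production
cat "$override"   # prints NODE_ENV=production
```

Note that an updated key moves to the end of the file in this sketch, which is harmless for env files without duplicate keys.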

# List current override env vars

# Note, that it doesn't include the ones from main "harbor config"

harbor env <service>

# Get a specific env var

harbor env <service> <key>

# Set a new env var

harbor env <service> <key> <value>

The <key> supports the same naming convention as used by the harbor config command.

# All keys below are equivalent

# and will write to the same env var: "N8N_SECURE_COOKIE"

harbor env n8n N8N_SECURE_COOKIE # original notation

harbor env n8n n8n_secure_cookie # underscore notation

harbor env n8n n8n.secure_cookie # mixed dot/underscore notation

# Show the current environment variables for the "n8n" service

harbor env n8n

# Get a specific environment variable

# for the dify service (LOG_LEVEL under the hood)

harbor env dify log.level

# Set a brand new environment variable for the service

# All three are equivalent

harbor env cmdh NODE_ENV development

harbor env cmdh node_env development

harbor env cmdh node.env development

Harbor remembers a number of most recently executed CLI commands. You can search/re-run the commands via the harbor history command.

This is an addition to the native history in your shell, that'll persist longer and is specific to the Harbor CLI.

Use history.size config option to adjust the number of commands stored in the history.
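Conceptually, a size limit like this keeps only the most recent entries of the history file. A hedged sketch of that trimming follows; `trim_history` is a hypothetical helper, and whether Harbor trims exactly this way is an assumption.

```shell
# trim_history <file> <size>: keep only the last <size> lines of a history file
trim_history() (
  file="$1"
  size="$2"
  tail -n "$size" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
)

hist=$(mktemp)
printf 'harbor up\nharbor ps\nharbor logs\nharbor down\n' > "$hist"
trim_history "$hist" 2
cat "$hist"   # only the two most recent commands remain
```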

# Get/set current history size

harbor history size

harbor history size 50

# Same, but with harbor config

harbor config get history.size

harbor config set history.size 50

History is stored in the .history file in the Harbor workspace. You can also edit/access it manually.

# Using a built-in helper

harbor history ls | grep ollama

# Manually, using the file

cat $(harbor home)/.history | grep ollama

You can clear the history with the harbor history clear command.

# Clear the history

harbor history clear

# Empty

harbor history ls

Launches a Docker container with the Dive CLI to inspect the given image layers and sizes.

Might be integrated with service handles in the future.

# Dive into the latest image of the webui service

harbor dive ghcr.io/open-webui/open-webui

Pulls the latest version of the Harbor script from the repository.

# Pull the latest version of the Harbor script

harbor update

Note

The update implementation is likely to change in future Harbor versions.

Note

Harbor needs to be running with ollama backend to use the how command.

Harbor can actually tell you how to do things. It's a bit of a gimmick, but it's also surprisingly useful and fun.

# Ok, I'm cheesing a bit here, this is one of the examples

$ harbor how to ping a service from another service?

✔ Retrieving command... to ping a service from another service?

desired command: harbor exec webui curl $(harbor url -i ollama)

assistant message: The command 'harbor exec webui curl $(harbor url -i ollama)' will ping the Ollama service from within the WebUI service's container. This can be useful for checking network connectivity or testing service communication.

# But this is for real

$ harbor how to filter webui error logs with grep?

✔ Retrieving command... to filter webui error logs with grep?

setup commands: [ harbor logs webui -f ]

desired command: harbor logs webui | grep error

assistant message: You can filter webui error logs with grep like this. Note: the '-f' option is for follow and will start tailing new logs after current ones.

# And this is a bit of a joke

$ harbor how to make a sandwich?

✔ Retrieving command... to make a sandwich?

desired command: None (harbor is a CLI for managing LLM services, not making sandwiches)

assistant message: Harbor is specifically designed to manage and run Large Language Model services, not make physical objects like sandwiches. If you're hungry, consider opening your fridge or cooking an actual meal!

# And this is surprisingly useful

$ harbor how to run a command in the ollama container?

✔ Retrieving command... to run a command in the ollama container?

setup commands: [ docker exec -it ollama bash ]

desired command: harbor exec ollama <command>

assistant message: You can run any command in the running Ollama container. Make sure that command is valid and doesn't try to modify the container's state, because it might affect the behavior of Harbor services.

A simple wrapper around the find command that allows you to search for files in the service's cache directories. Uses a substring match on a file path.

# Find all GGUFs

harbor find .gguf

# Find all files from bartowski repos

harbor find bartowski
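A substring match on the file path can be reproduced with plain find and its `-path` test. The sketch below is an approximation: `find_in_caches` is a hypothetical helper, and the directories to search are passed explicitly because the real cache locations depend on your Harbor configuration.

```shell
# find_in_caches <substring> <dir>...: list files whose path contains <substring>
find_in_caches() (
  pattern="$1"
  shift
  find "$@" -type f -path "*${pattern}*"
)

# Demo against a throwaway directory standing in for a cache
cache=$(mktemp -d)
mkdir -p "$cache/bartowski/model"
touch "$cache/bartowski/model/weights.gguf" "$cache/notes.txt"

find_in_caches .gguf "$cache"       # matches on the file extension
find_in_caches bartowski "$cache"   # matches on a directory name in the path
```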