
2.3.28 Satellite: Promptfoo

Handle: `promptfoo`

URL: http://localhost:34233

promptfoo is a tool for testing, evaluating, and red-teaming LLM apps.

With promptfoo, you can:

- Build reliable prompts, models, and RAGs with benchmarks specific to your use case

- Secure your apps with automated red teaming and pentesting

- Speed up evaluations with caching, concurrency, and live reloading

- Score outputs automatically by defining metrics

- Use as a CLI, library, or in CI/CD

- Use OpenAI, Anthropic, Azure, Google, HuggingFace, open-source models like Llama, or integrate custom API providers for any LLM API

```bash
# [Optional] Pre-pull the image
harbor pull promptfoo
```

You'll be running the Promptfoo CLI most of the time. It's available as:

```bash
# Full name
harbor promptfoo --help

# Alias
harbor pf --help
```

Whenever the CLI is called, Harbor also automatically starts the local Promptfoo backend.

```bash
# Run a CLI command
harbor pf --help

# The Promptfoo backend is now running
harbor ps # harbor.promptfoo
```

The Promptfoo backend serves all recorded results in the web UI:

```bash
# Open the web UI
harbor open promptfoo
harbor promptfoo view
harbor pf o
```

Most of the time, your workflow will center on creating prompts and assets, writing an eval config, running the eval, and viewing the results.

Harbor runs the `pf` CLI in the directory you call the Harbor CLI from, so you can use it from any folder on your machine.

```bash
# Ensure a dedicated folder for the eval
cd /path/to/your/eval

# Init the eval (here)
harbor pf init

# Edit the configuration and prompts as needed

# Run the eval
harbor pf eval

# View the results
harbor pf view
```

> [!NOTE]
> If you see file system permission errors, ensure that files written from within the container are accessible to your user.
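For the "edit the configuration" step, here is a minimal sketch of a `promptfooconfig.yaml` that overwrites the one generated by `harbor pf init` and targets the Ollama model used in this page's examples. The prompt, questions, and expected answers are hypothetical. The `ollama:chat:<model>` provider id and the `icontains` assertion follow promptfoo's documented config format, but check the official Providers and Assertions references for your promptfoo version.

```bash
# Overwrite the promptfooconfig.yaml generated by `harbor pf init`
# with a minimal eval against a local Ollama model (hypothetical test cases).
cat > promptfooconfig.yaml <<'EOF'
description: Minimal Ollama sanity check

prompts:
  - "Answer in one short sentence: {{question}}"

providers:
  # Assumed provider id format: ollama:chat:<model>
  - ollama:chat:llama3.1:8b

tests:
  - vars:
      question: What is the capital of France?
    assert:
      # Case-insensitive substring check on the model output
      - type: icontains
        value: paris
  - vars:
      question: What color is a clear daytime sky?
    assert:
      - type: icontains
        value: blue
EOF
```

With Ollama running and the model pulled (`harbor ollama pull llama3.1:8b`), run `harbor pf eval` and `harbor pf view` as in the workflow above.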

Harbor pre-configures promptfoo to run against Ollama out of the box (Ollama must be started before `pf eval`). Other providers can be configured via:

- env vars (see `harbor env`)
- directly in `promptfooconfig` files (see the Providers reference in the official documentation)
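As a sketch of the second option, a config file can point promptfoo's OpenAI provider at any OpenAI-compatible server, such as vLLM. The model id, base URL, and API key below are hypothetical placeholders. The `apiBaseUrl` and `apiKey` config keys are assumed from promptfoo's OpenAI provider reference, so verify them against your promptfoo version. The file is written under its own name so the default `promptfooconfig.yaml` is left alone.

```bash
# Hypothetical config for an OpenAI-compatible vLLM endpoint.
# Replace the model id and base URL with your own values.
cat > vllm-eval.yaml <<'EOF'
prompts:
  - "Summarize in one sentence: {{text}}"

providers:
  - id: openai:chat:your-model-id              # hypothetical: the model name vLLM serves
    config:
      apiBaseUrl: http://your-vllm-host:8000/v1  # placeholder; may be dropped if OPENAI_BASE_URL is set via harbor env
      apiKey: placeholder-key                    # assumption: vLLM ignores it unless started with --api-key
      temperature: 0

tests:
  - vars:
      text: The quick brown fox jumps over the lazy dog.
    assert:
      - type: icontains
        value: fox
EOF
```

Run it with `harbor pf eval -c vllm-eval.yaml`. The `-c` flag selects a config file other than the default `promptfooconfig.yaml`.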

```bash
# For example, use the vLLM API
harbor env promptfoo OPENAI_BASE_URL $(harbor url -i vllm)
```

Promptfoo is a rich and extensive tool. We recommend reading through its excellent official documentation to get the most out of it.

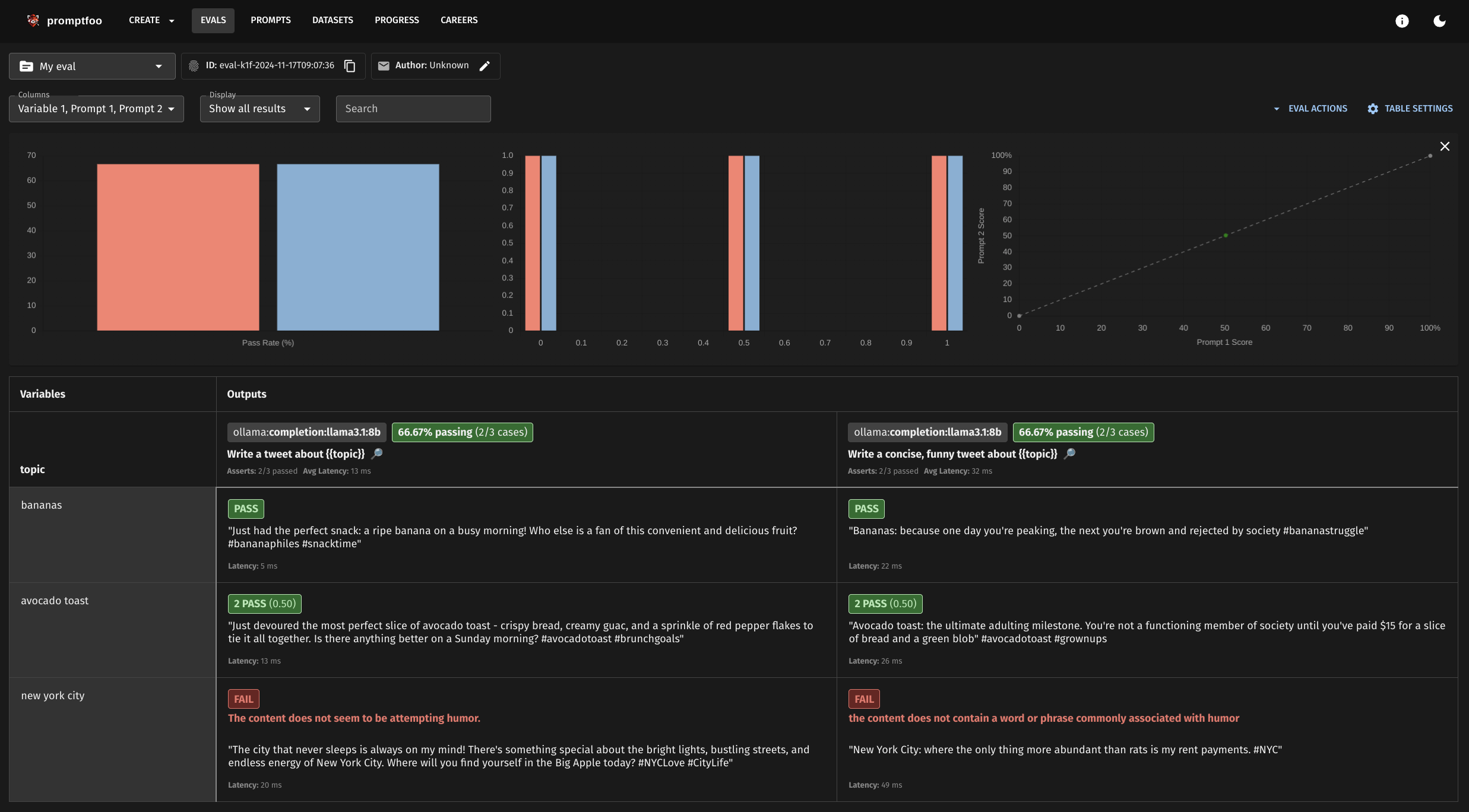

Harbor comes with two (basic) built-in examples.

```bash
# Navigate to eval folder
cd $(harbor home)/promptfoo/examples/hello-promptfoo

# Start ollama and pull the target model
harbor up ollama
harbor ollama pull llama3.1:8b

# Run the eval
harbor pf eval

# View the results
harbor pf view
```

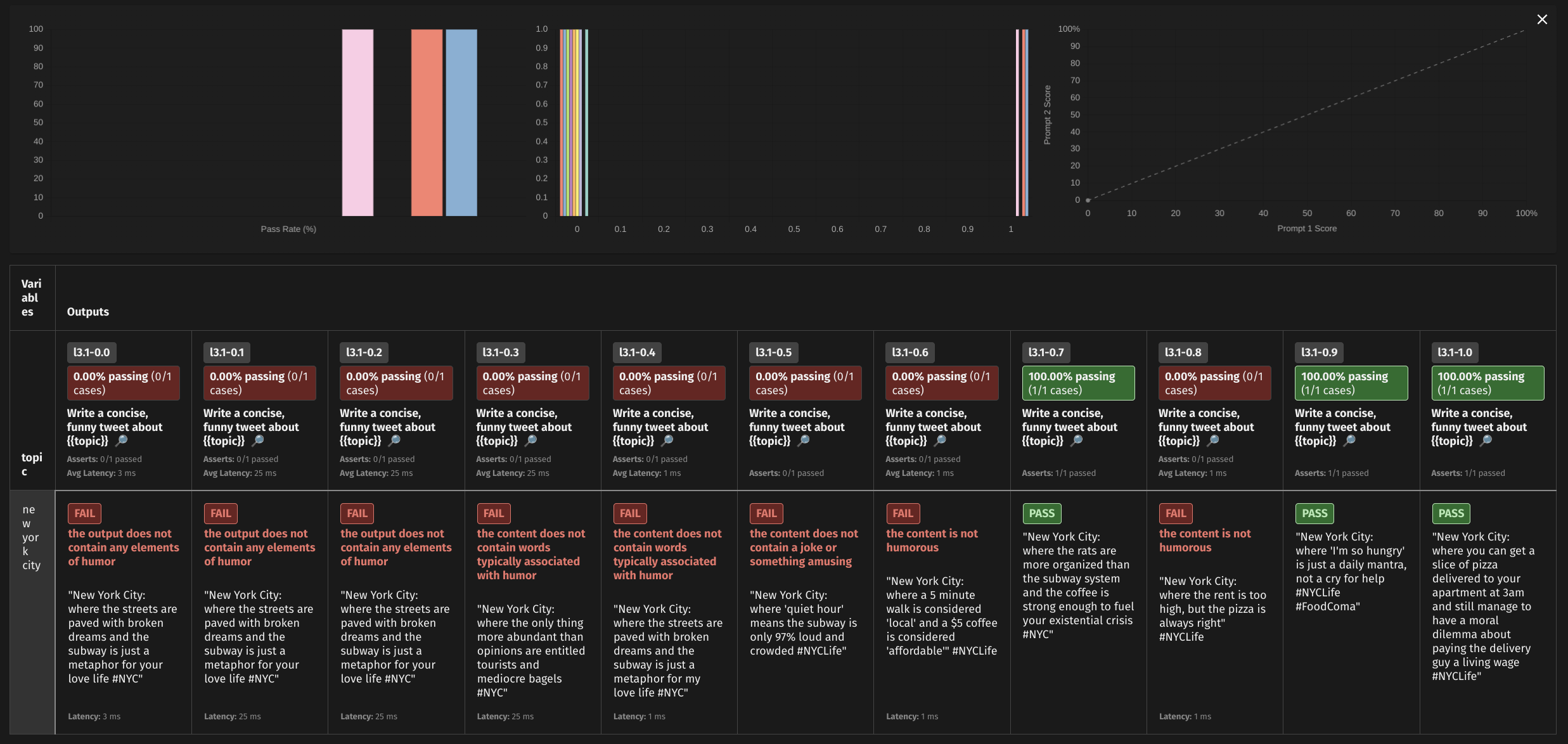

Evaluate a model across a range of temperatures to see if there's a sweet spot for a given prompt.

```bash
# Navigate to eval folder
cd $(harbor home)/promptfoo/examples/temp-test

# Start ollama and pull the target model
harbor up ollama
harbor ollama pull llama3.1:8b

# Run the eval
harbor pf eval

# View the results
harbor pf view
```
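To build a similar sweep of your own, you can generate one provider entry per temperature for the same model. The sketch below does that. The file name `temp-sweep.yaml`, the prompt, and the temperature values are hypothetical, and the bundled `temp-test` example may be structured differently. Each entry uses promptfoo's provider `label` so the otherwise identical entries can be told apart in the results view, and `config.temperature` is assumed to be passed through to Ollama. Run it in a folder of your own so the bundled example stays untouched.

```bash
# Hypothetical sweep: one provider entry per temperature, all for the same model.
MODEL="llama3.1:8b"
TEMPS="0.0 0.4 0.8 1.2"

{
  echo 'prompts:'
  echo '  - "Write a one-line slogan for a {{product}}."'
  echo 'providers:'
  # Word splitting on $TEMPS is intentional: one iteration per value
  for t in $TEMPS; do
    echo "  - id: ollama:chat:${MODEL}"
    echo "    label: temp-${t}"
    echo "    config:"
    echo "      temperature: ${t}"
  done
  # A test without assertions just records outputs for side-by-side comparison
  echo 'tests:'
  echo '  - vars:'
  echo '      product: coffee shop'
} > temp-sweep.yaml
```

Then run `harbor pf eval -c temp-sweep.yaml` followed by `harbor pf view` to compare the outputs per temperature.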