2.2.15 Backend: Nexa SDK

Handle: nexa

URL: http://localhost:34181

On-device Model Hub / Nexa SDK Documentation

Nexa SDK is a comprehensive toolkit for supporting ONNX and GGML models. It supports text generation, image generation, vision-language models (VLM), and text-to-speech (TTS) capabilities. Additionally, it offers an OpenAI-compatible API server with JSON schema mode for function calling and streaming support, and a user-friendly Streamlit UI. Users can run Nexa SDK on any device with a Python environment, and GPU acceleration is supported.

# [Optional] pre-build the image

harbor build nexa

# [Optional] Check Nexa CLI is working

harbor nexa --help

# Start the Nexa SDK

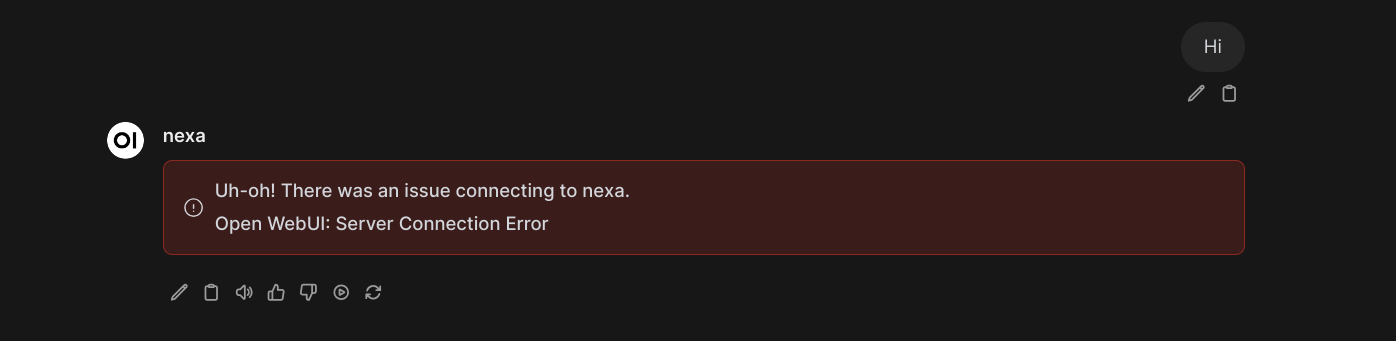

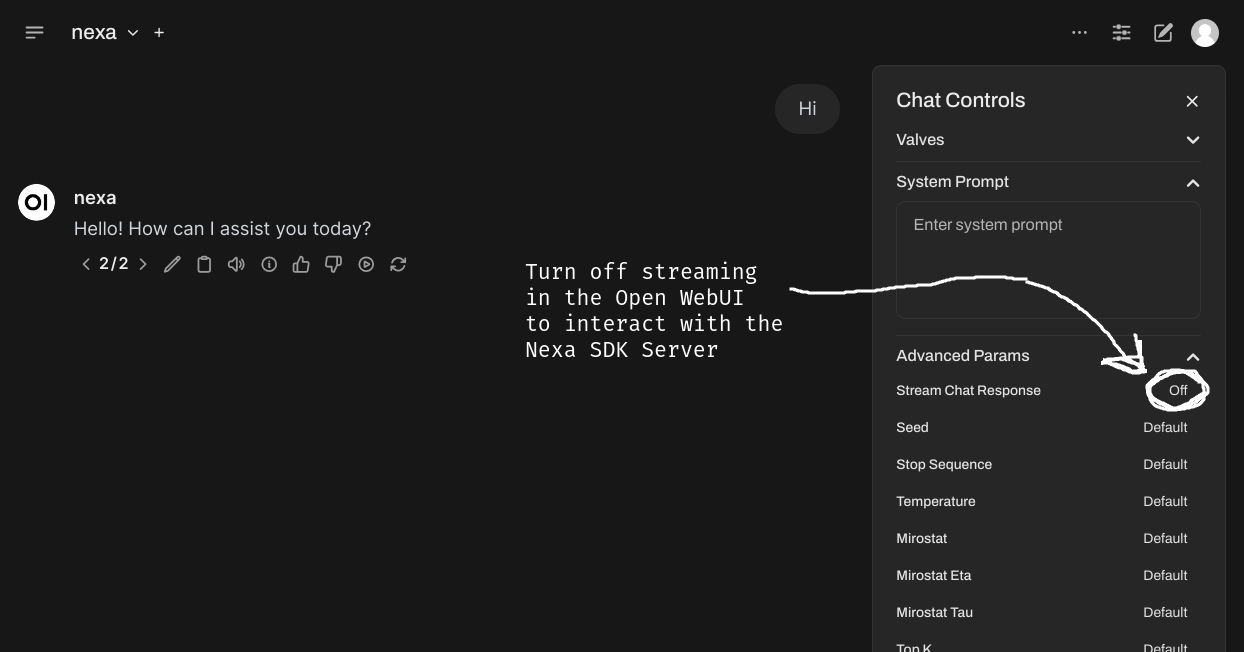

harbor up nexa[!WARN] At the time of writing these docs, (Oct 3rd, 2024) Nexa SDK server doesn't support streaming mode. Unfortunately that can't be fixed on Harbor's side. There's a chance that since then the SDK was updated with a fix, so your installation might already be fixed out of the box. GitHub issue to track.

So, if streaming doesn't work, try turning it off as a workaround (hopefully a temporary one).
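From the client side, the workaround amounts to sending requests with streaming explicitly disabled. The following is a minimal sketch, not an official client: it assumes the server exposes the usual OpenAI-style `/v1/chat/completions` route (the docs only say the API is OpenAI-compatible), and it reuses the URL and the `llama3.2` identifier from this page. The function names are hypothetical.

```python
import json
import urllib.request

# Base URL taken from this page; adjust if your Harbor config differs.
NEXA_URL = "http://localhost:34181"


def build_chat_request(prompt, model="llama3.2", base_url=NEXA_URL):
    """Build a non-streaming chat completion request (not sent yet)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Explicitly disable streaming as the workaround.
        "stream": False,
    }
    return urllib.request.Request(
        # Assumed path: the usual OpenAI-style chat completions route.
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=120):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the service running, `send_chat_request(build_chat_request("Hello"))` should return the parsed JSON response in a single piece rather than as a stream of chunks.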

Harbor supports running Nexa as a command or as a service. The two modes apply slightly different configurations.

When running as a CLI, just the nexa CLI is executed, without any extra arguments.

harbor nexa

When running as a service, the nexa CLI is executed with the server argument. It's bound to a port pre-configured by Harbor, so it's available on your host machine at the address reported by harbor url. You can also configure the model that the service will use.

# Start the service

harbor up nexa

You can configure which model the SDK will run in the service mode.

# See currently configured model

harbor nexa model

# Set a new model

# Must be one of the identifiers from the official list

harbor nexa model llama3.2

Harbor runs a custom version of the Nexa image that is enhanced in two ways:

- GPU support in Docker

  - Harbor builds a custom version of the Nexa SDK image that ensures the CUDA-enabled version is installed in Docker

- Partial compatibility with Open WebUI (except the streaming issue)

  - Harbor runs the Nexa SDK server behind a proxy that adds a /v1/models endpoint for compatibility with Open WebUI - GitHub issue to track
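To illustrate what adding a `/v1/models` endpoint involves, here is a rough sketch of the routing decision such a proxy could make. This is not Harbor's actual proxy code: the function names, the `forward` callable, and the `owned_by` value are all hypothetical, and the payload shape follows the common OpenAI-style model list.

```python
import time

MODELS_PATH = "/v1/models"


def build_models_payload(model_ids, owner="nexa"):
    """Return an OpenAI-style model list for the given identifiers."""
    created = int(time.time())
    return {
        "object": "list",
        "data": [
            {"id": model_id, "object": "model", "created": created, "owned_by": owner}
            for model_id in model_ids
        ],
    }


def handle_request(path, configured_model, forward):
    """Answer /v1/models locally; pass every other path to the upstream server.

    `forward` is a hypothetical callable that relays the request upstream.
    """
    if path.rstrip("/") == MODELS_PATH:
        return build_models_payload([configured_model])
    return forward(path)
```

A client such as Open WebUI typically queries the model list before sending chat requests, which is why answering this one path locally can be enough for partial compatibility.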

Harbor wasn't tested with vision or audio models in Nexa SDK - a contribution is welcome!