
Private Cluster

Execution environment(s):

- Vanilla GCP Project;

- Qwiklabs

Status: Working Draft 2/3

TODO:

- Certificates

- Firewall rule: traffic between public endpoint :443 and pods and services? ranges

- Snapshots and outputs

A private cluster is a must-have enterprise security requirement. There are a number of hardening options to apply while setting up your private Kubernetes cluster:

- private cluster, private nodes

- master authorized networks

- cluster service account with minimal privileges

- private container repository

- explicit egress control

- no external access to the cluster endpoint, access via a jumpbox only (overrides master authorized networks)

We will revisit them in turn, but let's start with the first two.

Recommended reading: Completely Private GKE Clusters with No Internet Connectivity by Andrey Kumarov.

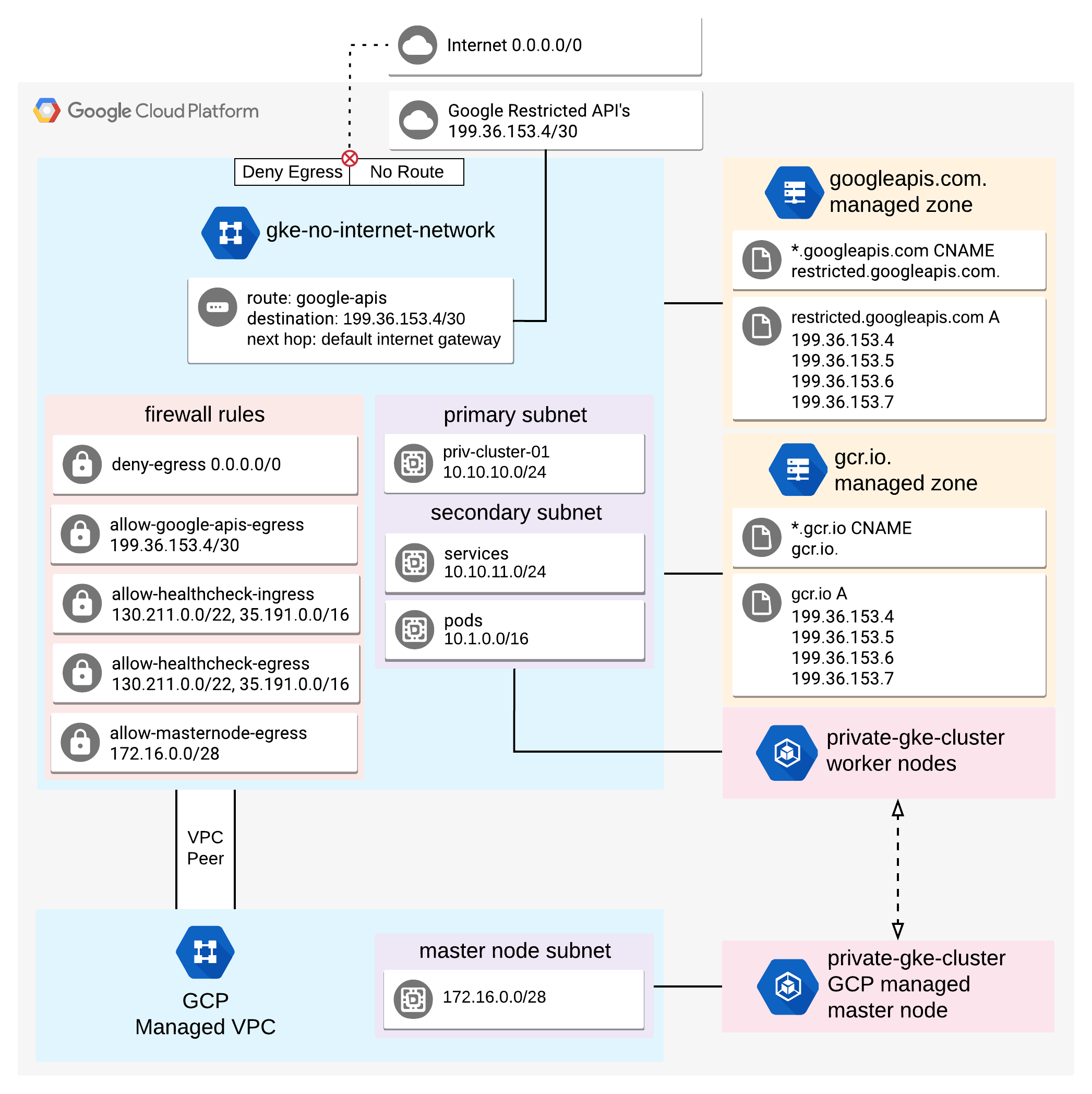

It is worth to keep his excellent diagram for private cluster networking right before your eyes:

?. <start-qwiklabs-gcp-project-and-so-on...>

?. Start Cloud Shell in a new window

?. Open it in a separate tab

?. Pin Compute Engine, Kubernetes Engine, IAM & Admin, Network Services, VPC Network in the hamburger menu.

NOTE: After you have configured the environment, if you want to initialize it in a different terminal window or after your Cloud Shell session has expired, use the following statements:

export AHR_HOME=~/apigee-hybrid/ahr
export PATH=$AHR_HOME/bin:$PATH
export HYBRID_HOME=~/apigee-hybrid/hybrid120-nin
source $AHR_HOME/bin/ahr-env
source $HYBRID_HOME/hybrid120-nin.sh
source <(ahr-runtime-ctl home)

?. In your home folder, create a working directory

mkdir ~/apigee-hybrid

?. Clone the ahr tool

cd ~/apigee-hybrid

git clone https://github.com/yuriylesyuk/ahr

?. Configure the AHR_HOME and PATH variables and ahr autocompletion

export AHR_HOME=~/apigee-hybrid/ahr

export PATH=$AHR_HOME/bin:$PATH

. $AHR_HOME/bin/ahr-completion.bash

?. Create the Hybrid project directory

export HYBRID_HOME=~/apigee-hybrid/hybrid120-nin

mkdir $HYBRID_HOME

?. Create the Apigee Hybrid environment file from the provided example

cp $AHR_HOME/examples/hybrid-demo6-sz-s-1.2.0.sh $HYBRID_HOME/hybrid120-nin.sh

?. Confirm that $GOOGLE_CLOUD_PROJECT contains the correct Qwiklabs student project ID.

NOTE: Do not rely on the GOOGLE_PROJECT value for the project ID; it is deprecated. Be vigilant: GOOGLE_CLOUD_PROJECT is not always safe either, as it might not be populated.

echo $GOOGLE_CLOUD_PROJECT
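Because GOOGLE_CLOUD_PROJECT might not be populated, a script can guard against an empty value by falling back to the project of the active gcloud configuration. This is a minimal sketch of that idea, not part of ahr; `resolve_project` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print a project id, preferring
# GOOGLE_CLOUD_PROJECT and falling back to the active gcloud configuration.
resolve_project() {
  local project="${GOOGLE_CLOUD_PROJECT:-}"
  if [ -z "$project" ]; then
    # Empty or unset: ask gcloud for the project of the active configuration.
    project="$(gcloud config get-value project 2>/dev/null)"
  fi
  if [ -z "$project" ]; then
    echo "ERROR: cannot determine the project id" >&2
    return 1
  fi
  printf '%s\n' "$project"
}
```

In the environment file, `export PROJECT=$(resolve_project)` could then replace `export PROJECT=$GOOGLE_CLOUD_PROJECT`; still check the printed value against the Qwiklabs student project ID.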

?. Configure environment variables

- We are going to create a custom network gke-nin and a subnetwork gke-nin-subnet.

- We are not populating the Hybrid organization name. We do not know it yet: the trial org will be given a unique name when we create the hybrid org later.

vi $HYBRID_HOME/hybrid120-nin.sh

Variables to configure:

export PROJECT=$GOOGLE_CLOUD_PROJECT

export NETWORK=gke-nin

export SUBNETWORK=gke-nin-subnet

?. Cluster location. We are going to create a single-zone GKE cluster. The variables that define the cluster region and zone are:

export REGION=europe-west1

export CLUSTER_ZONE=europe-west1-b

export CLUSTER_LOCATIONS='"europe-west1-b"'
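A mismatch between REGION and CLUSTER_ZONE is easy to introduce when editing the file by hand. Assuming the usual GCP naming, where a zone name is its region name plus a `-<letter>` suffix (europe-west1-b is in europe-west1), a quick sanity check can be sketched as follows; `zone_in_region` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): succeed if zone $1 belongs to region $2.
# Assumes GCP zone names are "<region>-<letter>", e.g. europe-west1-b.
zone_in_region() {
  local zone="$1" region="$2"
  # "${zone%-*}" drops the trailing "-<suffix>": europe-west1-b -> europe-west1
  [ -n "$zone" ] && [ "${zone%-*}" = "$region" ]
}

# Example check against the variables from the environment file.
if zone_in_region "${CLUSTER_ZONE:-}" "${REGION:-}"; then
  echo "OK: $CLUSTER_ZONE is in $REGION"
else
  echo "WARNING: CLUSTER_ZONE '${CLUSTER_ZONE:-}' is not in REGION '${REGION:-}'" >&2
fi
```

Run it after sourcing the environment file; CLUSTER_LOCATIONS should list the same zone.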

?. Save and exit the environment config file.

NOTE: Do not forget to source the environment file and to regenerate the hybrid cluster and runtime config files from their templates after you change relevant variable values.

source $HYBRID_HOME/hybrid120-nin.sh

TODO: [ ] describe network layout. secondary and primary ranges.

?. Create the custom network gke-nin

gcloud compute networks create $NETWORK --subnet-mode custom --bgp-routing-mode global

Output: TODO

?. Create the subnetwork gke-nin-subnet

gcloud compute networks subnets create $SUBNETWORK \
  --network $NETWORK \
  --range 10.10.10.0/24 \
  --region $REGION --enable-flow-logs \
  --enable-private-ip-google-access \
  --secondary-range services=10.10.11.0/24,pods=10.11.0.0/16

Output: TODO

?. Define a firewall rule for SSH (tcp:22), RDP (tcp:3389) and ICMP access to the nodes

gcloud compute firewall-rules create allow-access --network $NETWORK --allow tcp:22,tcp:3389,icmp

Output: TODO

?. Define a firewall rule that allows ingress health checks from the Google Cloud health-check ranges

gcloud compute firewall-rules create allow-healthcheck-ingress \
  --action ALLOW \
  --rules tcp:80,tcp:443 \
  --source-ranges 130.211.0.0/22,35.191.0.0/16 \
  --direction INGRESS \
  --network $NETWORK

Output: TODO

?. Generate the cluster JSON definition from a template

ahr-cluster-ctl template $AHR_HOME/templates/cluster-single-zone-one-nodepool-template.json > $CLUSTER_CONFIG

?. Enable Master Authorized Networks

cat <<< "$(jq .cluster.masterAuthorizedNetworksConfig='{"enabled": true}' < $CLUSTER_CONFIG)" > $CLUSTER_CONFIG

?. Enable Private Nodes and define the master IPv4 CIDR block

export CLUSTER_PRIVATE_CONFIG=$( cat << EOT
{
  "enablePrivateNodes": true,
  "enablePrivateEndpoint": false,
  "masterIpv4CidrBlock": "172.16.0.16/28"
}
EOT
)

cat <<< "$(jq .cluster.privateClusterConfig="$CLUSTER_PRIVATE_CONFIG" < $CLUSTER_CONFIG)" > $CLUSTER_CONFIG
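GKE expects the master (control plane) CIDR block to be a /28 that does not overlap other ranges in the VPC, including the subnet's primary and secondary ranges. The following pure-bash sketch (IPv4 only; all function names are hypothetical) checks 172.16.0.16/28 against the ranges used for gke-nin-subnet before the cluster is created.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers, IPv4 only): check that the master CIDR block
# is a /28 and does not overlap the subnet's primary and secondary ranges.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o=($1)
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Print the first and last address of a CIDR block as integers.
cidr_bounds() {
  local ip="${1%/*}" len="${1#*/}" size start
  size=$(( 1 << (32 - len) ))
  start=$(( $(ip_to_int "$ip") & ~(size - 1) ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed if the two CIDR blocks share at least one address.
cidrs_overlap() {
  local a_lo a_hi b_lo b_hi
  read -r a_lo a_hi <<< "$(cidr_bounds "$1")"
  read -r b_lo b_hi <<< "$(cidr_bounds "$2")"
  (( a_lo <= b_hi && b_lo <= a_hi ))
}

# Usage: check_master_cidr MASTER_CIDR RANGE... ; non-zero on any problem.
check_master_cidr() {
  local master="$1" range rc=0
  shift
  if [ "${master#*/}" != 28 ]; then
    echo "ERROR: master CIDR $master is not a /28" >&2
    rc=1
  fi
  for range in "$@"; do
    if cidrs_overlap "$master" "$range"; then
      echo "ERROR: $master overlaps $range" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For this walkthrough: `check_master_cidr 172.16.0.16/28 10.10.10.0/24 10.10.11.0/24 10.11.0.0/16 && echo "no overlap"`.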

?. Create the cluster

ahr-cluster-ctl create

?. Check the cluster creation progress on the Kubernetes Engine/Clusters page of the GCP Console.

?. While the cluster is being created, click the cluster name to see the cluster details and creation progress.

If cluster creation fails, the status details show an error instead, for example:

83% error: Status details

All cluster resources were brought up, but: only 0 nodes out of 3 have registered; this is likely due to Nodes failing to start correctly; try re-creating the cluster or contact support if that doesn't work.

25% error: Status details

Retry budget exhausted (80 attempts): Secondary range "pods" does not exist in network "default", subnetwork "default".

Nodes of a private cluster have no external IP addresses, so the cluster cannot access the Internet to download container images. To provide outbound Internet access, we use Cloud NAT.

?. Create a router

gcloud compute routers create gke-nin-router --network=$NETWORK --project=$PROJECT --region=$REGION

?. Create a NAT gateway

gcloud compute routers nats create gke-nin-nat --router=gke-nin-router --auto-allocate-nat-external-ips --nat-all-subnet-ip-ranges --project=$PROJECT --region=$REGION

?. Enable Apigee API

ahr-cluster-ctl enable apigee.googleapis.com

?. Create Apigee Hybrid organization

ahr-runtime-ctl org-create hybrid-org --ax-region europe-west1

Output:

{
  "name": "organizations/hybrid-org-trial-6r2re/operations/f2872f9f-2fa2-4bf8-818e-822edace8097",
  "metadata": {
    "@type": "type.googleapis.com/google.cloud.apigee.v1.OperationMetadata",
    "operationType": "INSERT",
    "targetResourceName": "organizations/hybrid-org-trial-6r2re",
    "state": "IN_PROGRESS"
  }
}

?. Note the value of the targetResourceName field; the part after organizations/ is your organization name.

Edit the ORG variable in the environment config file

vi $HYBRID_HOME/hybrid120-nin.sh

?. Change the ORG variable value

export ORG=hybrid-org-trial-6r2re
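Copying the org name by hand is error-prone in automated runs. A minimal sketch that extracts it from the org-create response and rewrites the `export ORG=` line of the environment file; it assumes the response contains a `"targetResourceName": "organizations/<org>"` line as in the output above and that the file already has an `export ORG=` line. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): extract the org name from the org-create
# response and write it into the environment file.

# Print <org> from a '"targetResourceName": "organizations/<org>"' field.
org_from_response() {
  local org
  org="$(sed -n 's|.*"targetResourceName": *"organizations/\([^"/]*\)".*|\1|p' <<< "$1")"
  [ -n "$org" ] || return 1
  printf '%s\n' "$org"
}

# Replace the "export ORG=..." line of env file $1 with value $2,
# keeping the file's other lines (and its permissions) unchanged.
set_org_in_env() {
  local file="$1" org="$2" tmp
  tmp="$(mktemp)" || return 1
  sed "s|^export ORG=.*|export ORG=$org|" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

If the response was captured in a (hypothetical) RESPONSE variable: `ORG=$(org_from_response "$RESPONSE") && set_org_in_env $HYBRID_HOME/hybrid120-nin.sh "$ORG"`. If the file has no `export ORG=` line, it is left unchanged.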

?. Re-source the env configuration file.

source $HYBRID_HOME/hybrid120-nin.sh

?. Check the value

echo $ORG

?. Validate Hybrid Organization status

ahr-runtime-ctl config

Check the values of the name, analyticsRegion and features.hybrid.enabled properties:

{
  "name": "hybrid-org-trial-6r2re",
  "displayName": "hybrid-org",
  "description": "organization_description",
  "createdAt": "1588155110758",
  "lastModifiedAt": "1588155110758",
  "properties": {
    "property": [
      {
        "name": "features.hybrid.enabled",
        "value": "true"
      }
    ]
  },
  "analyticsRegion": "europe-west1",
  "runtimeType": "HYBRID",
  "subscriptionType": "TRIAL"
}

Sync Authorization:

{
  "etag": "BwWkayzB9mo="
}

?. Enable the Apigee Connect API

ahr-cluster-ctl enable apigeeconnect.googleapis.com

TODO: WARNING: the command above will fail for the next two weeks (as of Apr 27)

?. Create Service Accounts

ahr-sa-ctl create all

?. Verify Service Accounts

ahr-sa-ctl config synchronizer

Service Account: apigee-synchronizer@qwiklabs-gcp-02-166af9561342.iam.gserviceaccount.com

ROLE
roles/apigee.synchronizerManager

GCP Project Permission:

{
  "permissions": [
    "apigee.environments.get",
    "apigee.environments.manageRuntime"
  ]
}

Apigee Hybrid Org/Env Permission:

{
  "permissions": [
    "apigee.environments.get",
    "apigee.environments.manageRuntime"
  ]
}

?. Generate the runtime config file

ahr-runtime-ctl template $AHR_HOME/templates/overrides-small-template.yaml > $RUNTIME_CONFIG

?. Review the generated runtime config file

less $RUNTIME_CONFIG

?. TODO: XXXXXX ahr-verify

?. Fetch hybrid installation sources

ahr-runtime-ctl get

?. Activate project configuration and cluster credentials

source $AHR_HOME/bin/ahr-env

source <(ahr-runtime-ctl home)

?. Try to get the list of pods. The command will hang, because the IP address of the Cloud Shell VM we are using is not in the cluster's master authorized networks.

k get pods --all-namespaces

Interrupt it by pressing Ctrl+C.
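A hanging call is awkward in scripts and CI jobs, where nobody presses Ctrl+C. A minimal fail-fast wrapper, assuming GNU coreutils `timeout` is available (it exits with status 124 when the time limit is hit); `probe` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): run a command with a time limit so that an
# unreachable control plane fails fast instead of hanging.
# Relies on coreutils `timeout`, which exits with status 124 on time-out.
probe() {
  local secs="$1" rc=0
  shift
  timeout "$secs" "$@" || rc=$?
  if [ "$rc" -eq 124 ]; then
    echo "TIMEOUT after ${secs}s: $*" >&2
  fi
  return "$rc"
}
```

Usage: `probe 15 kubectl get pods --all-namespaces`. Shell aliases such as `k` are not expanded by `timeout`, so call `kubectl` directly. For kubectl alone, its `--request-timeout=15s` flag is an alternative.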

?. Identify the public IP address of the Cloud Shell VM we are currently using.

export SHELL_IP=$(dig +short myip.opendns.com @resolver1.opendns.com)

?. Add this IP address to the master authorized networks

gcloud container clusters update $CLUSTER \
  --zone=$CLUSTER_ZONE \
  --enable-master-authorized-networks \
  --master-authorized-networks="$SHELL_IP/32"
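The `--master-authorized-networks` flag sets the complete list of authorized networks rather than adding to it, so re-running the command above with a new Cloud Shell IP drops the previous entry. That is fine for a single user, but on a shared cluster you may want to keep existing entries. A sketch that validates the IP and appends its /32 to an existing comma-separated list; the function names and the AUTH_NETS variable are hypothetical, and fetching the current list (for example from `gcloud container clusters describe`) is left out.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): build the value for
# --master-authorized-networks by adding "<ip>/32" to an existing
# comma-separated CIDR list, skipping duplicates.

# Succeed if $1 is a dotted-quad IPv4 address with octets 0-255.
is_ipv4() {
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$' IFS=. octet
  local -a parts
  [[ $1 =~ $re ]] || return 1
  parts=($1)
  for octet in "${parts[@]}"; do
    (( 10#$octet <= 255 )) || return 1
  done
}

# Print the list with "<ip>/32" appended, unless it is already present.
add_authorized_ip() {
  local existing="$1" ip="$2" cidr
  is_ipv4 "$ip" || { echo "ERROR: not an IPv4 address: '$ip'" >&2; return 1; }
  cidr="$ip/32"
  case ",$existing," in
    *",$cidr,"*) printf '%s\n' "$existing" ;;   # already authorized
    ",,")        printf '%s\n' "$cidr" ;;       # empty list
    *)           printf '%s\n' "$existing,$cidr" ;;
  esac
}
```

Usage: `AUTH_NETS=$(add_authorized_ip "$AUTH_NETS" "$SHELL_IP")`, then pass `--master-authorized-networks="$AUTH_NETS"` to the update command.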

?. Run kubernetes get pods command again. This time it finishes successfully.

k get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system event-exporter-v0.2.4-7c8896bbb8-qqmr4 2/2 Running 0 38m

kube-system fluentd-gcp-scaler-6965bb45c9-8257f 1/1 Running 0 38m

...

TODO: output

?. Initialize the hybrid runtime

ahr-runtime-ctl apigeectl init -f $RUNTIME_CONFIG

?. In CI/CD scenarios, it is important to make sure that all containers have been created successfully. Use the wait-for-ready command to ensure this.

ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG
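wait-for-ready covers the hybrid components. Other steps in a pipeline may need the same poll-until-ready pattern, for example waiting for the ping API used later to answer. A generic sketch based on bash's built-in SECONDS counter; `wait_until` is a hypothetical helper, not part of ahr or apigeectl.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): re-run a command every INTERVAL seconds until
# it succeeds, giving up after TIMEOUT seconds. Uses bash's SECONDS counter.
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( SECONDS + timeout ))
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "Timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

For example, `wait_until 600 15 my_readiness_check`, where `my_readiness_check` is a hypothetical command or function of your own that exits 0 once its condition holds.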

?. Check the status of the workloads on the Kubernetes Engine/Workloads page. TODO:

?. Set up Synchronizer Service Account.

ahr-runtime-ctl setsync $SYNCHRONIZER_SA_ID

?. Enable Apigee connect

ahr-runtime-ctl setproperty features.mart.apigee.connect.enabled true

?. Verify configuration

ahr-runtime-ctl config

?. Install hybrid components

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG

ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Identify the cluster IP address of the apigee-runtime service

k get svc|grep apigee-runtime|awk '{print $3}'
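`grep apigee-runtime` matches the string anywhere on a line, and `$3` is the CLUSTER-IP column of the default `kubectl get svc` table. A slightly stricter sketch that skips the header and matches only names that start with a given prefix; `svc_cluster_ips` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): read `kubectl get svc` table output on stdin
# and print the CLUSTER-IP (3rd column) of every service whose NAME starts
# with $1. The header line is skipped, and names that merely contain $1
# somewhere else are not matched.
svc_cluster_ips() {
  awk -v prefix="$1" 'NR > 1 && index($1, prefix) == 1 { print $3 }'
}
```

Usage: `k get svc | svc_cluster_ips apigee-runtime`. With `--all-namespaces`, kubectl adds a NAMESPACE column and the columns shift. When you know the exact service name, `kubectl get svc <name> -o jsonpath='{.spec.clusterIP}'` avoids text parsing altogether.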

?. Deploy a busyboxplus:curl container

kubectl run curl --generator=run-pod/v1 -it --image=radial/busyboxplus:curl

NOTE: To attach to it again later, use:

kubectl attach curl -it

?. Send a request to the ping API

curl https://10.10.11.89/ping -v -k

TODO: add and --resolve curl
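For the `--resolve` TODO: curl's `--resolve HOST:PORT:ADDRESS` option makes curl connect to ADDRESS for HOST:PORT while still sending HOST in the TLS SNI and the Host header, which matters when the runtime routes requests by host name. A sketch, where `ping_runtime` is a hypothetical helper and the host name you pass is a placeholder for the host name configured for your environment.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): call the ping API on a service IP while
# presenting a host name. curl's --resolve HOST:PORT:ADDRESS makes curl
# connect to ADDRESS, but still use HOST for TLS SNI and the Host header.
# -k skips certificate verification, as in the command above.
ping_runtime() {
  local host="$1" ip="$2" port="${3:-443}"
  curl -v -k --resolve "$host:$port:$ip" "https://$host:$port/ping"
}
```

For example, `ping_runtime api.example.com 10.10.11.89`, with api.example.com as a placeholder. Inside the curl pod you can type the equivalent command directly: `curl -v -k --resolve api.example.com:443:10.10.11.89 https://api.example.com/ping`.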

AHR-*-CTL
