
RozoFS User's Guide 1.2.x

This guide is intended to serve RozoFS's users as a definitive reference guide and handbook. This manual provides information for administrators who want to install and deploy RozoFS within their company. It describes the requirements for deployment, installation procedures, and general configuration. This manual contains the information one needs to consult before and during deployment.

This guide has been released to the RozoFS community, and its authors strive to improve it continuously. Feedback from readers is always welcome and encouraged. Please use the RozoFS public mailing list for enhancement suggestions and corrections. Copyright © 2010-2013 FIZIANS SAS. All rights reserved. FIZIANS, FIZIANS logo, ROZOFS and ROZOFS logo are trademarks of FIZIANS SAS. All other trademarks, icons and logos are the property of their respective owners. All other names of products and companies mentioned may be trademarks owned by their respective owners. The information contained in this document represents the view of FIZIANS SAS on the topics covered as of the date of publication. Because changes are inevitable, this information cannot be considered a commitment on the part of FIZIANS SAS, and FIZIANS SAS cannot guarantee the accuracy of any information presented after the date of publication. This document is for information only. FIZIANS makes no express or implied warranties in this document.


This guide assumes, throughout, that you are using RozoFS version 1.2.0 or later.

RozoFS is a scale-out NAS. This software solution offers high-performance storage while ensuring high availability of your business data. RozoFS can easily and economically store a large volume (up to petabytes) of data by aggregating storage resources from heterogeneous servers. RozoFS uses erasure coding to ensure high availability of data while optimizing the raw storage capacity used. RozoFS is free software licensed under the GNU General Public License version 2 (GNU GPL 2.0).

RozoFS provides a native POSIX file system. The particularity of RozoFS lies in how data are stored. Before being stored, data are cut into a multitude of smaller pieces of information. These chunks are transformed using erasure coding into encoded fragments we call projections. These projections are then distributed and stored on different available storage servers. The data can then be retrieved (decoded) even if several projections (servers) are unavailable. Note that this mechanism adds a level of confidentiality to the system: the projections are not usable individually.

The coding-based redundancy method used by RozoFS allows large storage savings compared to the traditional replication method.

The file system itself comprises three components:

- exportd: metadata servers that manage the location of chunks (ensuring the best capacity load balancing with respect to high availability), file access and the namespace (hierarchy). Multiple replicated metadata servers are used to provide failover.
- storaged: storage servers that store the chunks.
- rozofsmount: clients that communicate with both export servers and chunk servers. They are responsible for data transformation.

Beyond its scale-out architecture, RozoFS was designed to provide high performance and scalability using a single-process, event-driven architecture and non-blocking calls to perform asynchronous I/O operations. All RozoFS components can run on the same physical host. A RozoFS single-node setup can be useful for testing purposes. In a production environment, it is not unusual to have hosts running exportd, storaged and rozofsmount.

Each storage node hosts a storaged daemon, configured through a configuration file. This daemon receives requests and stores the transformed data files. A storaged can manage several storage locations. These storage locations are physical storage (e.g. disks, partitions or even remote file systems) accessed through a standard file system (e.g. ext4, btrfs, zfs…) where storaged can read and write projections.

An export node includes all the information needed to reconstruct all the data it holds. It runs an exportd daemon configured by a configuration file describing three fundamental concepts in RozoFS: volume, layout and export.

A volume in RozoFS can be seen as a usable capacity. It is defined by a pool of storage locations, which are themselves grouped into clusters. Clusters provide load balancing based on effective capacity: when RozoFS needs to store projections on a volume, it selects the cluster with the most free space, then selects the storage locations within this cluster using the same criterion. This ensures a good distribution of capacity, without space being lost because small nodes fill up first. This design was chosen with scalability in mind: the nodes you buy today have less capacity than the ones you will add in the future. The only requirement on a volume applies when it is created: it must contain at least one cluster holding enough storage locations of the same capacity, as required by the erasure coding parameters (see below). Even though a volume can be extended with as many storage locations as you need, a good practice is to scale while respecting the same requirements. Likewise, administrators should not create clusters with several storage locations on the same physical node, as this risks compromising data availability if that node fails.
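The placement rule described above (most free space first, at the cluster level and then among the storage locations of that cluster) can be modelled in a few lines. This is a minimal sketch assuming a simple in-memory model; the `Cluster` type, its fields and the `select_locations` helper are hypothetical and do not come from the RozoFS sources.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    cid: int
    # Hypothetical model: free bytes of each storage location, keyed by SID.
    free_by_sid: dict = field(default_factory=dict)

    @property
    def free(self) -> int:
        # Free space of a cluster = sum of the free space of its locations.
        return sum(self.free_by_sid.values())


def select_locations(clusters, count):
    """Pick the cluster with the most free space, then its `count`
    storage locations with the most free space (illustrative only)."""
    eligible = [c for c in clusters if len(c.free_by_sid) >= count]
    if not eligible:
        raise ValueError("no cluster has enough storage locations")
    best = max(eligible, key=lambda c: c.free)
    ranked = sorted(best.free_by_sid, key=best.free_by_sid.get, reverse=True)
    return best.cid, ranked[:count]


clusters = [
    Cluster(1, {1: 100, 2: 80, 3: 90, 4: 70}),
    Cluster(2, {1: 500, 2: 450, 3: 400, 4: 520}),
]
print(select_locations(clusters, 4))  # (2, [4, 1, 2, 3])
```

Ranking by free space rather than by raw size is what lets newer, larger nodes absorb more data while smaller ones are not filled first.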

An exportd can manage several volumes. Obviously, a storage node can hold storage locations belonging to different volumes.

As previously mentioned, RozoFS introduces redundancy to ensure high reliability. This reliability depends on the chosen configuration. The number of storage servers used and the reliability you need are the two criteria that determine the best configuration to use. While tuning the redundancy might be possible, the default redundancy level in RozoFS is 1.5: RozoFS generates n projections, and only m of them are required to rebuild the data, with n / m = 1.5. This redundancy level was chosen because its availability is equivalent to that of 3-way replication. Based on this, three redundancy configurations, called layouts, have been defined in RozoFS. A layout is defined by a tuple (m, n, s), where m is the number of projections required to rebuild the data, n the number of generated projections, and s the number of possible storage locations that can be used to store projections. The purpose of s is to ensure high availability for write operations: RozoFS considers a write safe only if it has been able to store all n projections. To do so, there must be enough storage locations available to distribute the projections while tolerating the failure of some of them. In the exportd configuration file, these layouts are identified by integers (from 0 to 2), and this guide calls them layout_0, layout_1 and layout_2:

- layout_0: (2, 3, 4)
- layout_1: (4, 6, 8)
- layout_2: (8, 12, 16)
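Under the definitions above (any m projections are enough to read, n must be stored for a write to be considered safe, s locations are candidates), a few properties of each layout follow by simple arithmetic. The sketch below is only a reading aid; `LAYOUTS` and `describe` are hypothetical names.

```python
# Layout tuples (m, n, s) as listed above.
LAYOUTS = {0: (2, 3, 4), 1: (4, 6, 8), 2: (8, 12, 16)}


def describe(layout_id):
    """Derive the properties implied by the (m, n, s) definition."""
    m, n, s = LAYOUTS[layout_id]
    return {
        "redundancy": n / m,        # raw bytes stored per byte of user data
        "read_tolerance": n - m,    # projections that can be lost while still decoding
        "write_tolerance": s - n,   # unavailable locations a write can still work around
    }


for lid in sorted(LAYOUTS):
    print(f"layout_{lid}: {describe(lid)}")
```

All three layouts share the same 1.5 redundancy; larger layouts tolerate more simultaneous failures but need more storage locations per cluster.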

Note: as explained in the previous section, a good practice is to organize storage locations of a cluster on different physical nodes, thus the layouts are linked with the physical infrastructure of the storage platform, especially with the number of nodes required to start and to scale.

Volumes are the raw storage space on which several file systems (called exports) can be created and exposed to clients. Exports can be declared or removed at any time. Each export shares the raw capacity offered by its volume, and its usage can be managed through resizable quotas (hard and soft).

rozofsmount allows users to mount an export of the RozoFS file system on a local directory using the FUSE library. Once the file system is mounted, RozoFS usage is transparent to the user. rozofsmount is responsible for data transformation and determines a set of storage servers for read and write operations.

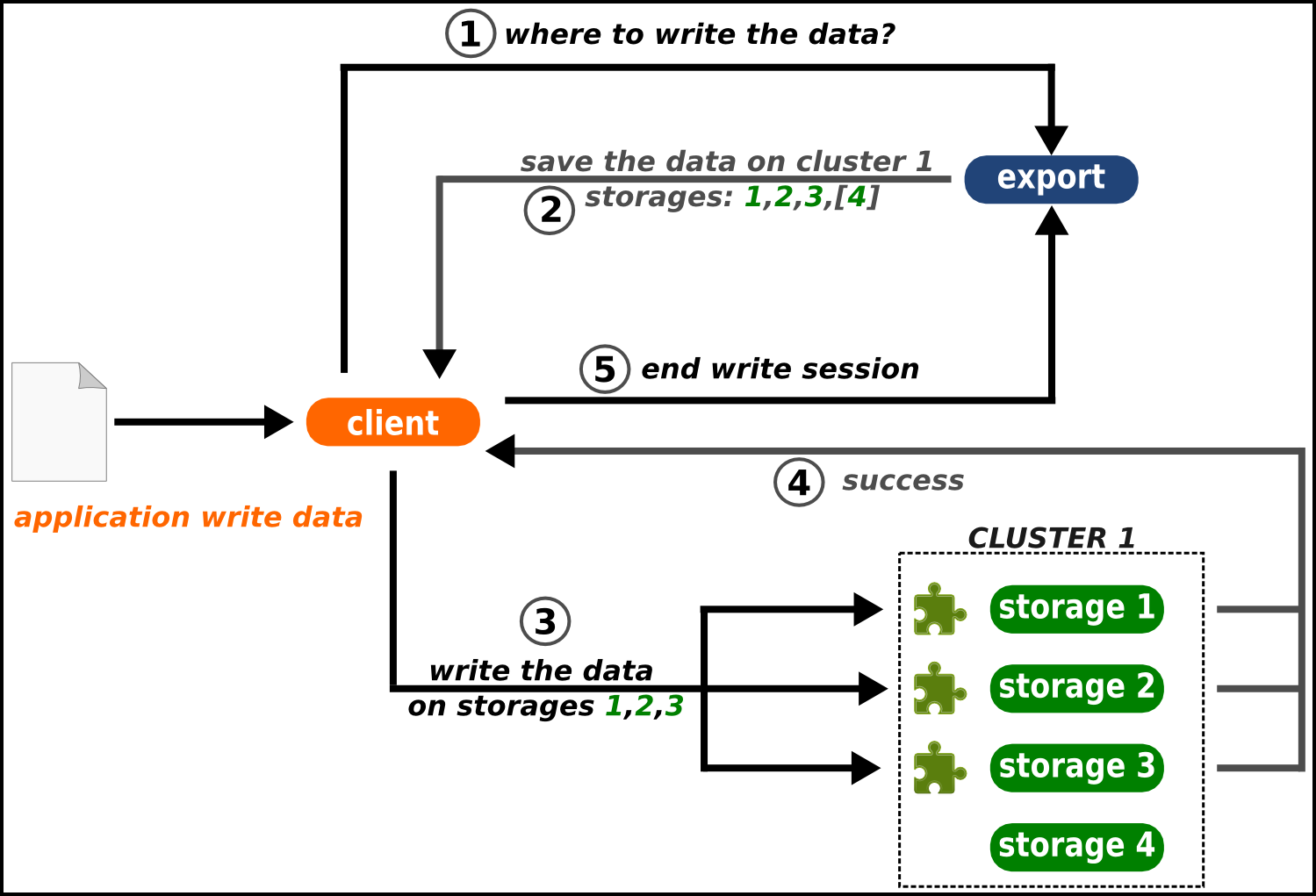

The following figures show the read and write process in RozoFS. A client that wants to store a file first asks the exportd for the list of storage locations (nodes, if good practices are respected) that must be used. rozofsmount then splits the file into a number of blocks (according to the file system block size, e.g. 4096 bytes); each block is encoded, and each resulting projection is sent to a storage location. During this write process, rozofsmount is responsible for choosing running storage locations among the possible ones to ensure data availability. Failures of storage locations (within the limits of the layout) are transparent to the upper level (OS).
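The client side of the write path can be sketched as follows: split the data into blocks, then pick n running storage locations among the s candidates. This is a simplified model, not the rozofsmount code: the helper names are hypothetical, the encoding of each block into n projections is omitted, and the selection policy (first running locations in list order) is an assumption.

```python
BLOCK_SIZE = 4096  # example block size quoted in the text


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Cut a byte string into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def choose_write_targets(locations, running, n):
    """Keep the first n reachable locations among the s candidates.

    `locations` stands for the ordered list returned by the export server,
    `running` for the set of locations currently reachable (both hypothetical).
    """
    targets = [loc for loc in locations if loc in running]
    if len(targets) < n:
        raise RuntimeError(f"only {len(targets)} locations up, {n} required")
    return targets[:n]


# layout_0: s = 4 candidates, n = 3 projections per block, sid2 is down.
candidates = ["sid1", "sid2", "sid3", "sid4"]
blocks = split_blocks(b"x" * 10000)
print(len(blocks), choose_write_targets(candidates, {"sid1", "sid3", "sid4"}, 3))
```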

During a read operation, the opposite process is performed. rozofsmount requests the list of storage locations used for each stored block of data and retrieves a sufficient set of projections to rebuild the block before passing it to the application level. The redundancy introduced during the write operation ensures reliable storage despite single or multiple storage node failures (depending on the layout chosen for redundancy).
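On the read side, the requirement stated above is to obtain a sufficient set of projections, i.e. any m of the n stored. A minimal sketch of that check, with a hypothetical `gather_for_decode` helper and projection payloads represented as plain bytes:

```python
def gather_for_decode(available, m):
    """Return m usable projections for one block, or fail if too few answered.

    `available` maps a projection index to its payload, with None for a
    storage location that did not answer; any m projections suffice.
    """
    usable = [(idx, data) for idx, data in sorted(available.items()) if data is not None]
    if len(usable) < m:
        raise RuntimeError(f"block cannot be rebuilt: {len(usable)} of {m} projections")
    return usable[:m]


# layout_0 (m = 2, n = 3): projection 1 is missing, the block is still readable.
print(gather_for_decode({0: b"p0", 1: None, 2: b"p2"}, 2))
```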

RozoFS is fully redundant. It manages both metadata and data with the same availability. However, availability is handled in two different ways: metadata are small, frequently accessed and highly structured (as in transactional systems), while data are huge and unstructured (rather an I/O problem).

This is why RozoFS uses well-known replication and active/standby cluster technologies for metadata, while it applies erasure coding to the bulk data.

The metadata server (exportd) is a potential single point of failure (SPOF). A high-availability strategy is necessary so that the failure of one or more servers hosting the exportd service is transparent.

The DRBD (Distributed Replicated Block Device) software synchronizes data, block by block, over the network between two servers. The replicated disk partition is used by only one server at a time (the elected master server), and the exportd service is active on this server. The cluster management software Pacemaker controls the different machines in the cluster and takes the necessary measures when a problem occurs on any of them (IP address failover, service start order, etc.).

A distributed system such as RozoFS must protect user data. Since this kind of system relies on many nodes (from tens to thousands), failures are common. Failure sources are manifold: hardware components might fail (network, disks, power management…) as well as software components (bugs, operating system, expected upgrades…). To protect data, RozoFS relies on an erasure code that uses the Mojette mathematical transform.

Traditionally, fault tolerance is managed by replicating data. Blocks of information are replicated into several copies: a 3-way replication produces 3 copies of each block of information, so the system is able to cope with 2 failures. These replicas are then distributed to the storage nodes of the system. When a user accesses a file, the system reads the blocks of information that correspond to the file. A failure occurs when a block is not accessible by the system; in this case, the system switches to another copy of the block, stored on another storage node. Now consider a system that holds 3 petabytes of data that need to be protected against failures. A system based on 3-way replication requires 3 times the amount of user data, which means that your protected system consumes 9 petabytes of raw storage!

There is an alternative, called erasure coding. Erasure coding aims to reduce the raw storage required for fault tolerance by up to 50% compared to replication. Suppose that k chunks of information are encoded into n fragments (k < n). These fragments are then distributed to the storage nodes of the system. When a user wants to access a file, the system needs to read any k fragments among the n it produced to rebuild the file: if a fragment is not accessible because of a failure, the system can pick another one. Going back to the previous example of a system tolerating 2 failures, erasure coding with n / k = 1.5 turns your 3 petabytes into 4.5 petabytes of raw storage. With the same reliability, erasure coding saves 4.5 petabytes compared to replication.
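The capacity figures in this example follow from one formula: storing data as n pieces of which any m suffice costs n / m times the user data, and replication is the special case m = 1. The comparison below uses layout_1 (any 4 of 6 projections), which, like 3-way replication, tolerates the loss of 2 pieces; the function name is illustrative.

```python
def raw_capacity_pb(user_pb, n, m):
    """Raw capacity when every m units of user data are stored as n units."""
    return user_pb * n / m


replication = raw_capacity_pb(3.0, n=3, m=1)   # 3 full copies, any 1 is enough
erasure = raw_capacity_pb(3.0, n=6, m=4)       # e.g. layout_1, any 4 of 6 projections
print(f"replication: {replication} PB, erasure coding: {erasure} PB, "
      f"saved: {replication - erasure} PB")
```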

Different kinds of erasure codes exist. The most popular ones are Reed-Solomon codes, but their implementations are often computationally expensive. RozoFS relies on an erasure code based on the Mojette transform, a discrete version of the Radon transform. This mathematical tool was originally designed for tomography, but it has applications in many domains, especially erasure coding.

Let us arrange our data set in a two-dimensional array, where each row represents a block and the array dimensions depend on the block size. The Mojette transform defines linear combinations of the data. When a user writes a file on RozoFS, the system encodes its information: the encoder simply computes additions between different bits of this array to produce redundant data. These additions follow a pattern that depends on the angles of the projections, and in RozoFS the layout defines the number of projections and their angles. We call these sums "bins"; they are the elements that compose a projection. After the projections are computed, RozoFS distributes these encoded blocks to the storage nodes.
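A toy version of the encoder makes the idea of bins concrete. The sketch below is illustrative, not RozoFS code: it uses ordinary integer addition on a small 2 x 4 array, directions of the form (p, 1), and the bin convention b = q*k - p*l for the element in column k and row l; the actual implementation may differ in element types and arithmetic.

```python
def bin_index(k, l, p, q):
    """Bin reached by the element in column k, row l, for direction (p, q)."""
    return q * k - p * l


def mojette_forward(grid, directions):
    """Toy Mojette transform: one list of bins (sums) per direction."""
    rows, cols = len(grid), len(grid[0])
    projections = {}
    for p, q in directions:
        indices = [bin_index(k, l, p, q) for l in range(rows) for k in range(cols)]
        offset = -min(indices)  # shift so that the first bin is numbered 0
        bins = [0] * (max(indices) + offset + 1)
        for l in range(rows):
            for k in range(cols):
                bins[bin_index(k, l, p, q) + offset] += grid[l][k]
        projections[(p, q)] = bins
    return projections


block = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
for direction, bins in mojette_forward(block, [(-1, 1), (0, 1), (1, 1)]).items():
    print(direction, bins)
```

Each element falls into exactly one bin per direction, so every projection of this block sums to 36, and a projection has (cols - 1)*|q| + (rows - 1)*|p| + 1 bins.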

Now consider a user who needs to access some information. The system reads a file by decoding the information. The transform can be inverted because the pattern is known, and only a subset of the projections is sufficient to retrieve the data (which is where the storage capacity gain described above comes from). If enough projections are accessible, the system is able to decode. Decoding is the process that fills an empty array from the bins of the projections, knowing the pattern used. The data is considered rebuilt once the array is completely filled.
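The decoding described above can be sketched with toy conventions (integer sums, directions (p, 1), bin b = q*k - p*l shifted to start at 0). The sketch repeatedly looks for a bin that still contains a single unknown element, solves it, and subtracts the solved value from every projection; this is one simple way to invert the Mojette transform, not necessarily the one RozoFS uses. The bins in the usage example are two of the three projections of the 2 x 4 array [[1, 2, 3, 4], [5, 6, 7, 8]] under these conventions.

```python
def mojette_inverse(projections, rows, cols):
    """Rebuild a rows x cols array from {(p, q): bins} (toy integer version)."""

    def index(k, l, p, q):
        return q * k - p * l  # bin convention shared with the encoder

    offsets = {
        (p, q): -min(index(k, l, p, q) for l in range(rows) for k in range(cols))
        for p, q in projections
    }
    residual = {d: list(bins) for d, bins in projections.items()}
    grid = [[None] * cols for _ in range(rows)]
    unknown = rows * cols
    progress = True
    while unknown and progress:
        progress = False
        for (p, q), bins in residual.items():
            # Group the still-unknown elements by the bin they fall into.
            members = {}
            for l in range(rows):
                for k in range(cols):
                    if grid[l][k] is None:
                        b = index(k, l, p, q) + offsets[(p, q)]
                        members.setdefault(b, []).append((k, l))
            for b, elements in members.items():
                if len(elements) == 1:  # a bin with a single unknown gives its value
                    k, l = elements[0]
                    value = bins[b]
                    grid[l][k] = value
                    unknown -= 1
                    progress = True
                    # Remove the solved element from every projection.
                    for (p2, q2), bins2 in residual.items():
                        bins2[index(k, l, p2, q2) + offsets[(p2, q2)]] -= value
    if unknown:
        raise ValueError("not enough projections to rebuild the block")
    return grid


projections = {(0, 1): [6, 8, 10, 12], (1, 1): [5, 7, 9, 11, 4]}
print(mojette_inverse(projections, rows=2, cols=4))
```

With a single projection every bin keeps two unknowns, so the loop stops without progress and the function reports that the block cannot be rebuilt.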

The Mojette transform is designed for high performance. First, it relies only on simple additions. Second, encoding and decoding computations are linear in the size of the array. This makes it a good tool for protecting data, since both the computational overhead of encoding and decoding and the storage overhead are low.

Fizians SAS provides binary packages for every component of RozoFS and for various GNU/Linux distributions, in both Debian (.deb) and Red Hat (.rpm) package formats. Using binary packages brings several benefits: you do not need a full development environment, and binary packages come with init scripts, easy dependency management, etc., which simplify deployment and management. See the help of your favorite GNU/Linux distribution's package manager for package installation. Depending on their roles, nodes should have at least one of these packages installed:

- rozofs-storaged_<version>_<arch>.<deb|rpm>
- rozofs-exportd_<version>_<arch>.<deb|rpm>
- rozofs-rozofsmount_<version>_<arch>.<deb|rpm>

To help and automate management, the following optional packages should be installed on each node involved in a RozoFS platform:

- rozofs-manager-lib_<version>_<arch>.<deb|rpm>
- rozofs-manager-cli_<version>_<arch>.<deb|rpm>
- rozofs-manager-agent_<version>_<arch>.<deb|rpm>
- rozofs-rprof_<version>_<arch>.<deb|rpm>
- rozofs-rozodebug_<version>_<arch>.<deb|rpm>

The latest stable release of RozoFS can be downloaded from http://github.com/rozofs/rozofs.

To build the RozoFS source code, it is necessary to install several libraries and tools. RozoFS uses the cross-platform build system cmake to get you started quickly. RozoFS dependencies are the following:

- cmake
- libattr1-dev
- uuid-dev
- libconfig-dev
- libfuse-dev
- libreadline-dev
- python2.6-dev
- libpthread
- libcrypt
- swig

Once the required packages are installed on your system, you can generate the build configuration with the following commands (with default values, RozoFS is compiled in Release mode and installed in /usr/local):

# cmake -G "Unix Makefiles" ..

-- The C compiler identification is GNU

-- Check for working C compiler: /usr/bin/gcc

-- Check for working C compiler: /usr/bin/gcc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Configuring done

-- Generating done

-- Build files have been written to: /root/rozofs/build

# make

# make install

If you use default values, make install will place the executables in /usr/local/bin. Build options (CMAKE_INSTALL_PREFIX, CMAKE_BUILD_TYPE...) of the generated build tree can be modified with the following command:

# make edit_cache

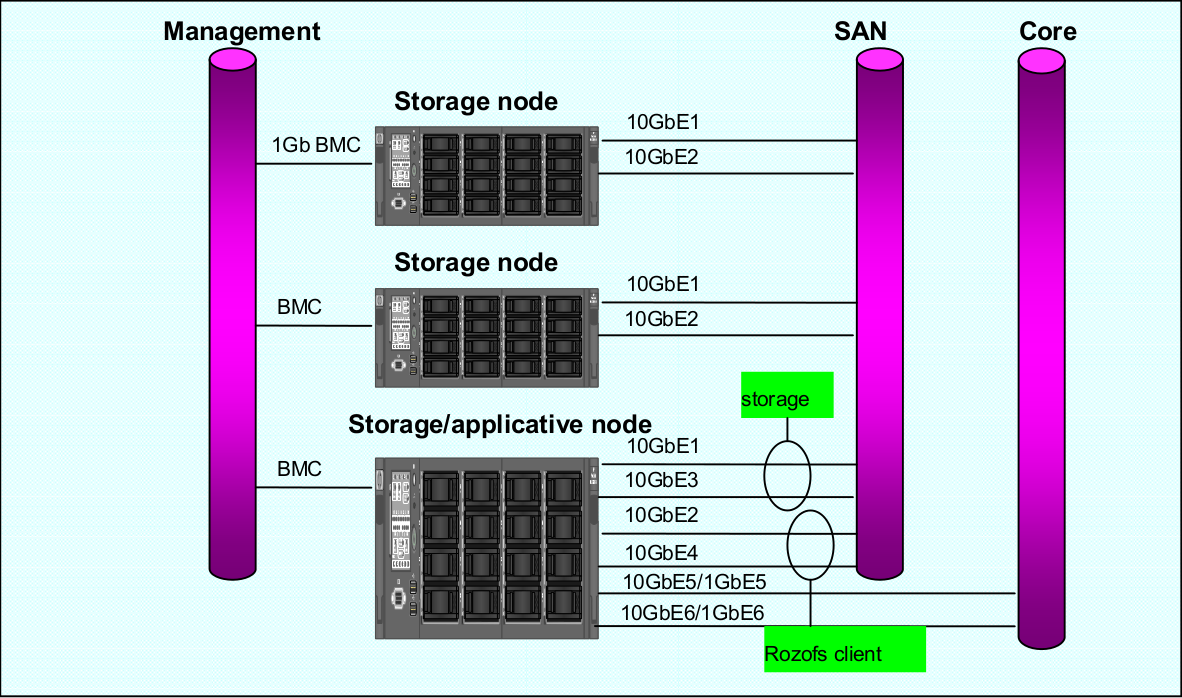

It is recommended to separate core traffic (application) from the SAN traffic (RozoFS/Storage) with VLANs. It is recommended to use separate ports for application and RozoFS/Client. When RozoFS and Storage are co-located, they can share the same ports. However, if there are enough available ports, it is better that each entity (RozoFS, Storage) has its own set of ports.

It is mandatory to enable Flow Control on the switch ports that handle RozoFS/Storage traffic. In addition, one must also enable Flow Control on the NICs used by RozoFS/Storage to obtain the performance benefit. On many networks, there can be an imbalance in the network traffic between the devices that send network traffic and the devices that receive the traffic. This is often the case in SAN configurations in which many hosts (initiators such as RozoFS) are communicating with storage devices. If senders transmit data simultaneously, they may exceed the throughput capacity of the receiver. When this occurs, the receiver may drop packets, forcing senders to retransmit the data after a delay. Although this will not result in any loss of data, latency will increase because of the retransmissions, and I/O performance will degrade.

It is recommended to disable the spanning-tree protocol (STP) on the switch ports that connect end nodes (RozoFS clients and storage array network interfaces). If you still decide to enable STP on those switch ports, check for a vendor STP feature, such as PortFast, which allows ports to transition immediately into the forwarding state.

RozoFS client and storage node connections to the SAN network switches are always in active-active mode. To make the best use of the Ethernet ports, load balancing among the ports is controlled by the application rather than by a bonding driver (a bonding driver is not needed on RozoFS storage nodes). When operating in the default mode of RozoFS (no LACP), it is recommended that each SAN port belong to a different VLAN. Configurations with 802.3ad (LACP) trunks are supported; however, Ethernet port usage will not be optimal, since the selection of a port depends on a hash applied at either the MAC or IP level.
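The drawback of LACP mentioned above is that a hash maps every packet of a given flow to the same link. The sketch below contrasts a simplified layer-2 hash (XOR of the last MAC bytes modulo the number of ports; real switches and the Linux bonding driver have their own configurable policies) with spreading done by the application. Round-robin is an assumption used for illustration, since the text only states that balancing is under application control; the MAC addresses are made up.

```python
from collections import Counter


def layer2_hash_port(src_mac: str, dst_mac: str, nports: int) -> int:
    """Simplified layer-2 hash: XOR of the last MAC bytes, modulo port count."""
    def last_byte(mac: str) -> int:
        return int(mac.split(":")[-1], 16)
    return (last_byte(src_mac) ^ last_byte(dst_mac)) % nports


def round_robin_ports(npackets: int, nports: int):
    """Application-level spreading over all ports (assumed round-robin)."""
    return [i % nports for i in range(npackets)]


client, storage = "52:54:00:00:00:0a", "52:54:00:00:00:1b"
hashed = Counter(layer2_hash_port(client, storage, 4) for _ in range(8))
spread = Counter(round_robin_ports(8, 4))
print("LACP-style hash:", dict(hashed))
print("application-level:", dict(spread))
```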

The recommended configuration for RozoFS uses one separate VLAN per physical port. The following diagram describes how storage nodes are connected to the ToR switches. It is assumed that the RozoFS clients reside on nodes connected to the northbound side of the ToR SAN switches.

With LACP, the ports dedicated to the SAN (RozoFS and Storage) are grouped into one or two LACP groups, depending on whether the RozoFS and Storage traffic should be separated. They can reside either on the same VLAN or on different VLANs.

DRBD replicates data from the primary device to the secondary device in a way which ensures that both copies of the data remain identical. Think of it as a networked RAID 1. It mirrors data in real-time, so its replication occurs continuously. Applications do not need to know that in fact their data is stored on different disks.

NOTE: You must set up the DRBD devices (used to store RozoFS metadata) before creating file systems on them.

To install the packages needed for DRBD, see the DRBD website. The following procedure uses two servers named node1 and node2, and the cluster resource name r0. It sets up node1 as the primary node. Be sure to adapt the instructions to your own nodes and file names.

To set up DRBD manually, proceed as follows. The DRBD configuration files are stored in the directory /etc/drbd.d/. Two configuration files are used:

- /etc/drbd.d/r0.res corresponds to the configuration for resource r0;
- /etc/drbd.d/global_common.conf corresponds to the global configuration of DRBD.

Create the file /etc/drbd.d/r0.res on node1, change the lines according to your parameters, and save it:

resource r0 {

protocol C;

on node1 {

device /dev/drbd0;

disk /dev/mapper/vg01-exports;

address 192.168.1.1:7788;

meta-disk internal;

}

on node2 {

device /dev/drbd0;

disk /dev/mapper/vg01-exports;

address 192.168.1.2:7788;

meta-disk internal;

}

}
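When several resources or node pairs have to be described, generating the file avoids inconsistencies between the two `on` sections. A minimal sketch in Python; the helper name and its parameters are hypothetical, and the output mirrors the DRBD 8.3 syntax of the r0.res example above.

```python
def render_drbd_resource(name, nodes, device="/dev/drbd0", port=7788, protocol="C"):
    """Render a resource file in the style of the r0.res example above.

    `nodes` maps each host name (which DRBD matches against `uname -n`)
    to a (backing disk, IP address) pair. This helper is hypothetical.
    """
    lines = [f"resource {name} {{", f"    protocol {protocol};"]
    for host, (disk, address) in nodes.items():
        lines += [
            f"    on {host} {{",
            f"        device {device};",
            f"        disk {disk};",
            f"        address {address}:{port};",
            "        meta-disk internal;",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


print(render_drbd_resource("r0", {
    "node1": ("/dev/mapper/vg01-exports", "192.168.1.1"),
    "node2": ("/dev/mapper/vg01-exports", "192.168.1.2"),
}))
```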

Copy the DRBD configuration files manually to the other node:

# scp /etc/drbd.d/r0.res /etc/drbd.d/global_common.conf node2:/etc/drbd.d/

Initialize the metadata on both systems by entering the following command on each node:

# drbdadm -- --ignore-sanity-checks create-md r0

Attach resource r0 to the backing device:

# drbdadm attach r0

Set the synchronization parameters for the DRBD resource:

# drbdadm syncer r0

Connect the DRBD resource with its counterpart on the peer node:

# drbdadm connect r0

Start the resync process on your intended primary node (node1 in this case):

# drbdadm -- --overwrite-data-of-peer primary r0

Set node1 as primary node:

# drbdadm primary r0

Create an ext4 file system on top of your DRBD device:

# mkfs.ext4 /dev/drbd0

If the installation and configuration procedures worked as expected, you are ready to run a basic test of the DRBD functionality. Create a mount point on node1, such as /srv/rozofs/exports:

# mkdir -p /srv/rozofs/exports

Mount the DRBD device:

# mount /dev/drbd0 /srv/rozofs/exports

Write a file:

# echo "helloworld" > /srv/rozofs/exports/test

Unmount the DRBD device:

# umount /srv/rozofs/exports

To verify that synchronization is performed:

# cat /proc/drbd

version: 8.3.11 (api:88/proto:86-96)

srcversion: 41C52C8CD882E47FB5AF767

0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns:3186507 nr:0 dw:3183477 dr:516201 al:4702 bm:163 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0
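The same check can be scripted, for instance in a monitoring probe. This sketch assumes the DRBD 8.3 /proc/drbd layout shown above (later DRBD versions report status differently); the function names are hypothetical. On a real node, pass it the content of /proc/drbd instead of the sample string.

```python
import re

# Matches resource lines such as " 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----"
RESOURCE_LINE = re.compile(r"^\s*(\d+): cs:(\S+) ro:(\S+) ds:(\S+)", re.MULTILINE)


def drbd_states(text):
    """Map each DRBD minor number to (connection state, roles, disk states)."""
    return {int(m.group(1)): m.group(2, 3, 4) for m in RESOURCE_LINE.finditer(text)}


def is_synchronized(text, minor=0):
    """True when the resource is connected and both disks are UpToDate."""
    cs, _roles, ds = drbd_states(text)[minor]
    return cs == "Connected" and ds == "UpToDate/UpToDate"


sample = """version: 8.3.11 (api:88/proto:86-96)
srcversion: 41C52C8CD882E47FB5AF767
 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----
    ns:3186507 nr:0 dw:3183477 dr:516201 al:4702 bm:163 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0
"""
print(drbd_states(sample), is_synchronized(sample))
```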

Both copies of the resource are now synchronized (UpToDate/UpToDate). Once the initial synchronization is done, stop the DRBD service and remove its init script links so that DRBD is not started automatically at boot: from now on, the service is controlled by Pacemaker.

Disable DRBD init script (depending on your distribution, here Debian example):

# /etc/init.d/drbd stop

# update-rc.d -f drbd remove

Pacemaker is an open-source high-availability resource management tool suitable for clusters of Linux machines. It can detect machine failures through a communication system based on the exchange of UDP packets, and migrate services (resources) from one server to another.

Pacemaker can be configured with the crm command. It allows you to manage the different resources and propagates changes to each server automatically. A resource is created with an entry named primitive in the configuration file. This primitive uses a script corresponding to the application to be protected.

On this platform, Pacemaker manages the following resources:

- the exportd daemon;
- the virtual IP address of the exportd service;
- the mount of the file system used to store metadata;
- the DRBD resource (r0) and its roles (master or slave);
- server connectivity.

The following diagram describes the different resources configured and controlled via Pacemaker. In this case, two servers are configured and node1 is the master server.

The first component to configure is Corosync. It manages the infrastructure of the cluster, i.e. the status of nodes and their operation. For this, an authentication key shared by all the machines in the cluster must be generated. The corosync-keygen utility can be used to generate this key, which is then copied to the other nodes.

Create key on node1:

# corosync-keygen

Copy the key manually to the other node:

# scp /etc/corosync/authkey root@node2:/etc/corosync/authkey

Besides copying the key, you also have to modify the Corosync configuration file, which is stored in /etc/corosync/corosync.conf.

Edit your corosync.conf with the following:

interface {

# The following values need to be set based on your environment

ringnumber: 1

bindnetaddr: 192.168.1.0

mcastaddr: 226.94.1.2

mcastport: 5407

ttl: 255

}
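bindnetaddr is normally the network address of the interface Corosync should bind to, not a host address, so both nodes end up with the same value. A quick way to compute it with Python's standard ipaddress module; the /24 prefix is an assumption matching the cidr_netmask used for the virtual IP in the Pacemaker configuration, and the addresses are those of node1 and node2 in the DRBD example.

```python
import ipaddress


def bindnetaddr_for(interface_cidr: str) -> str:
    """Network address of an interface, as expected by corosync's bindnetaddr."""
    return str(ipaddress.ip_interface(interface_cidr).network.network_address)


# node1 and node2 addresses from the DRBD example, assumed to be on a /24.
print(bindnetaddr_for("192.168.1.1/24"), bindnetaddr_for("192.168.1.2/24"))
```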

Copy the corosync.conf manually to the other node:

# scp /etc/corosync/corosync.conf root@node2:/etc/corosync/corosync.conf

Corosync is started as a regular system service. Depending on your distribution, it may ship with an LSB init script, an upstart job, or a systemd unit file. Either way, the service is usually named corosync:

# /etc/init.d/corosync start

or:

# service corosync start

or:

# start corosync

or:

# systemctl start corosync

You can now check the Corosync connectivity by typing the following command:

# crm_mon

============

Last updated: Tue May 2 03:54:44 2013

Last change: Tue May 2 02:27:14 2013 via crmd on node1

Stack: openais

Current DC: node1 - partition with quorum

Version: 1.1.7-ee0730e13d124c3d58f00016c3376a1de5323cff

4 Nodes configured, 4 expected votes

0 Resources configured.

============

Online: [ node1 node2 ]

Once the Pacemaker cluster is set up, and before configuring the resources and constraints of the cluster, copy the exportd OCF script to each server. This script is able to start, stop and monitor the exportd daemon.

Copy the OCF script manually to each node:

# scp exportd root@node1:/usr/lib/ocf/resource.d/heartbeat/exportd

# scp exportd root@node2:/usr/lib/ocf/resource.d/heartbeat/exportd

To set the cluster properties, start the crm shell and enter the following commands:

configure property stonith-enabled=false

configure property no-quorum-policy=ignore

configure primitive p_ping ocf:pacemaker:ping params host_list="192.168.1.254" multiplier="100" dampen="5s" op monitor interval="5s"

configure clone c_ping p_ping meta interleave="true"

configure primitive p_drbd_r0 ocf:linbit:drbd params drbd_resource="r0" op start timeout="240" op stop timeout="100" op notify interval="0" timeout="90" op monitor interval="10" timeout="20" role="Master" op monitor interval="20" timeout="20" role="Slave"

configure ms ms_drbd_r0 p_drbd_r0 meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true" globally-unique="false"

configure location loc_ms_drbd_r0_needs_ping ms_drbd_r0 rule -inf: not_defined pingd or pingd lte 0

configure primitive p_vip_exportd ocf:heartbeat:IPaddr2 params ip="192.168.1.10" nic="eth0" cidr_netmask=24 op monitor interval="30s"

configure primitive p_fs_exportd ocf:heartbeat:Filesystem params device="/dev/drbd0" directory="/srv/rozofs/exports" fstype="ext4" options="user_xattr,acl,noatime" op start timeout="60" op stop timeout="60"

configure primitive exportd_rozofs ocf:heartbeat:exportd params conffile="/etc/rozofs/export.conf" op monitor interval="30s"

configure group grp_exportd p_fs_exportd p_vip_exportd exportd_rozofs

configure colocation c_grp_exportd_on_drbd_rU inf: grp_exportd ms_drbd_r0:Master

configure order o_drbd_rU_before_grp_exportd inf: ms_drbd_r0:promote grp_exportd:start

configure location loc_prefer_grp_exportd_on_node1 grp_exportd 100: node1

Once all the primitives and constraints are loaded, you can check that the cluster operates correctly with the following command:

# crm_mon -1

============

Last updated: Wed May 2 02:44:21 2013

Last change: Wed May 2 02:43:27 2013 via cibadmin on node1

Stack: openais

Current DC: node1 - partition with quorum

Version: 1.1.7-ee0730e13d124c3d58f00016c3376a1de5323cff

2 Nodes configured, 2 expected votes

5 Resources configured.

============

Online: [ node1 node2 ]

Master/Slave Set: ms_drbd_r0 [p_drbd_r0]

Masters: [ node1 ]

Slaves: [ node2 ]

Resource Group: grp_exportd

p_fs_exportd (ocf::heartbeat:Filesystem): Started node1

p_vip_exportd (ocf::heartbeat:IPaddr2): Started node1

exportd_rozofs (ocf::heartbeat:exportd): Started node1

Clone Set: c_ping [p_ping]

Started: [ node1 node2 ]

Storaged nodes should have appropriate free space on their disks. The storaged service stores transformed data as files on a common file system (ext4). It is important to dedicate the file systems used by the storaged service exclusively to it (use a logical volume or a dedicated partition), and to manage free space properly.
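Free space can be monitored with a small script run on each storage node. This is a sketch using Python's shutil.disk_usage; the helper names and the 10% threshold are arbitrary examples, and the roots listed are the paths from this guide's storage.conf example, to be adapted to your node.

```python
import shutil


def free_ratio(path: str) -> float:
    """Fraction of the file system holding `path` that is still free."""
    usage = shutil.disk_usage(path)
    return usage.free / usage.total


def low_space_roots(roots, min_free=0.10):
    """Return the storage roots whose file system is below `min_free`."""
    return [root for root in roots if free_ratio(root) < min_free]


# Hypothetical roots taken from the storage.conf example; run on a storage node.
roots = ["/srv/rozofs/storages/storage_1-1", "/srv/rozofs/storages/storage_2-1"]
print(f"{free_ratio('/'):.1%} free on /")
```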

The exportd configuration file (export.conf) contains 3 types of information:

- the chosen redundancy configuration (layout);
- the list of storage volumes used to store data (volumes);
- the list of exported file systems (exports).

Redundancy configuration (layout): the layout specifies the redundancy configuration of RozoFS. There are 3 possible redundancy configurations:

- layout=0: cluster(s) of 4 storage locations, 3 are used for each write and 2 for each read;
- layout=1: cluster(s) of 8 storage locations, 6 are used for each write and 4 for each read;
- layout=2: cluster(s) of 16 storage locations, 12 are used for each write and 8 for each read.

List of storage volumes (volumes): The list of all the storage volumes used by exportd is grouped under the volumes list. A volume in the list is identified by a unique identification number (VID) and contains one or more clusters identified by a unique identification number (CID) consisting of 4, 8 or 16 storage locations according to the layout you have chosen. Each storage location in a cluster is defined with the SID (the storage unique identifier within the cluster) and its network name (or IP address).

List of exported file systems (exports): The exportd daemon can export one or more file systems. Each exported file system is defined by the absolute path to the local directory that contains specific metadata for this file system.

Here is an example of a configuration file (export.conf) for the exportd daemon:

# rozofs export daemon configuration file

layout = 0 ; # (inverse = 2, forward = 3, safe = 4)

volumes = # List of volumes
(
    {
        # First volume
        vid = 1 ; # Volume identifier = 1
        cids= # List of clusters for the volume 1
        (
            {
                # First cluster of volume 1
                cid = 1; # Cluster identifier = 1
                sids = # List of storages for the cluster 1
                (
                    {sid = 01; host = "storage-node-1-1";},
                    {sid = 02; host = "storage-node-1-2";},
                    {sid = 03; host = "storage-node-1-3";},
                    {sid = 04; host = "storage-node-1-4";}
                );
            },
            {
                # Second cluster of volume 1
                cid = 2; # Cluster identifier = 2
                sids = # List of storages for the cluster 2
                (
                    {sid = 01; host = "storage-node-2-1";},
                    {sid = 02; host = "storage-node-2-2";},
                    {sid = 03; host = "storage-node-2-3";},
                    {sid = 04; host = "storage-node-2-4";}
                );
            }
        );
    },
    {
        # Second volume
        vid = 2; # Volume identifier = 2
        cids = # List of clusters for the volume 2
        (
            {
                # First cluster of volume 2
                cid = 3; # Cluster identifier = 3
                sids = # List of storages for the cluster 3
                (
                    {sid = 01; host = "storage-node-3-1";},
                    {sid = 02; host = "storage-node-3-2";},
                    {sid = 03; host = "storage-node-3-3";},
                    {sid = 04; host = "storage-node-3-4";}
                );
            }
        );
    }
);

# List of exported filesystem
exports = (
    # First filesystem exported
    {eid = 1; root = "/srv/rozofs/exports/export_1"; md5="AyBvjVmNoKAkLQwNa2c";
     squota="128G"; hquota="256G"; vid=1;},
    # Second filesystem exported
    {eid = 2; root = "/srv/rozofs/exports/export_2"; md5="";
     squota=""; hquota = ""; vid=2;}
);
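The rules described above (unique VIDs and CIDs, a number of storage locations per cluster fixed by the layout, unique SIDs within a cluster) can be checked before (re)loading exportd. The Python sketch below works on a configuration already loaded into dictionaries that mirror export.conf; parsing the file itself is out of scope. The function names are hypothetical, and the layout-to-size table is an assumption: layout 0 needs 4 storage locations (matching the `safe = 4` comment above), and 8 and 16 are assumed for layouts 1 and 2. CIDs are also assumed to be unique across all volumes, as in the example.

```python
# Hypothetical pre-flight check for an export.conf-like structure.
# Assumption: layout 0 -> 4, layout 1 -> 8, layout 2 -> 16 storages per cluster.
LAYOUT_SIZES = {0: 4, 1: 8, 2: 16}


def check_volumes(layout, volumes):
    """Return a list of problems found in the volume definitions (empty if none)."""
    problems = []
    expected = LAYOUT_SIZES[layout]
    seen_vids, seen_cids = set(), set()
    for vol in volumes:
        if vol["vid"] in seen_vids:
            problems.append(f"duplicate vid {vol['vid']}")
        seen_vids.add(vol["vid"])
        for cluster in vol["cids"]:
            cid = cluster["cid"]
            if cid in seen_cids:  # assumed unique across all volumes
                problems.append(f"duplicate cid {cid}")
            seen_cids.add(cid)
            sids = [s["sid"] for s in cluster["sids"]]
            if len(sids) != expected:
                problems.append(
                    f"cid {cid}: {len(sids)} storages, layout {layout} needs {expected}")
            if len(set(sids)) != len(sids):
                problems.append(f"cid {cid}: duplicate sid")
    return problems


def make_cluster(cid, size):
    """Build a cluster entry with hosts named like the example above (hypothetical helper)."""
    return {"cid": cid,
            "sids": [{"sid": i, "host": f"storage-node-{cid}-{i}"}
                     for i in range(1, size + 1)]}


# The two volumes of the example export.conf.
volumes = [
    {"vid": 1, "cids": [make_cluster(1, 4), make_cluster(2, 4)]},
    {"vid": 2, "cids": [make_cluster(3, 4)]},
]
print(check_volumes(0, volumes))  # → []
```

An empty list means the structure matches the assumed rules; any other entry names the offending VID or CID.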

The configuration file of the storaged daemon (storage.conf) must be completed on each physical storage server where the storaged daemon runs. It contains two pieces of information:

- ports: list of TCP ports used to receive read and write requests from clients (rozofsmount)
- storages: list of local storage locations used to store the transformed data (projections)

List of local storage locations (storages): All the storage locations used by the storaged daemon on a physical server are grouped under the storages list. The storages list consists of one or more storage locations. Each storage location is defined by its CID (unique identification number of the cluster to which it belongs), its SID (the storage unique identifier within the cluster) and the absolute path to the local directory that will contain the specific encoded data for this storage.

Configuration file example (storage.conf) for one storaged daemon:

# rozofs storage daemon configuration file.

# ports:
#   It's a list of TCP ports used to receive write and read requests
#   from clients (rozofsmount).
ports = [40001, 40002, 40003, 40004];

# storages:
#   It's the list of local storages managed by this storaged.
storages = (
    {cid = 1; sid = 1; root = "/srv/rozofs/storages/storage_1-1";},
    {cid = 2; sid = 1; root = "/srv/rozofs/storages/storage_2-1";}
);
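The (CID, SID) pairs are what tie storage.conf to export.conf: export.conf maps each pair to a host, and the storaged running on that host maps the same pair to a local root directory. A minimal Python sketch of a consistency check for one node follows, assuming both files have already been loaded into dictionaries; the helper names and the node names `node-a`/`node-b` are hypothetical.

```python
# Hypothetical cross-check between export.conf (volumes) and one node's storage.conf.

def expected_pairs(volumes, host):
    """(cid, sid) pairs that export.conf assigns to `host`."""
    return {
        (cluster["cid"], s["sid"])
        for vol in volumes
        for cluster in vol["cids"]
        for s in cluster["sids"]
        if s["host"] == host
    }


def check_storages(volumes, host, storages):
    """Compare the `storages` list of one storage.conf with export.conf."""
    declared = {(st["cid"], st["sid"]) for st in storages}
    roots = [st["root"] for st in storages]
    expected = expected_pairs(volumes, host)
    return {
        "missing": sorted(expected - declared),  # assigned by exportd, not served here
        "unknown": sorted(declared - expected),  # served here, unknown to exportd
        "duplicate_roots": len(roots) != len(set(roots)),
    }


# Clusters shortened to two storages for brevity; node-a holds sid 1 of both.
volumes = [{"vid": 1, "cids": [
    {"cid": 1, "sids": [{"sid": 1, "host": "node-a"}, {"sid": 2, "host": "node-b"}]},
    {"cid": 2, "sids": [{"sid": 1, "host": "node-a"}, {"sid": 2, "host": "node-b"}]},
]}]
storages = [
    {"cid": 1, "sid": 1, "root": "/srv/rozofs/storages/storage_1-1"},
    {"cid": 2, "sid": 1, "root": "/srv/rozofs/storages/storage_2-1"},
]
print(check_storages(volumes, "node-a", storages))
# → {'missing': [], 'unknown': [], 'duplicate_roots': False}
```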

The storaged daemon is started with the following command:

# /etc/init.d/rozofs-storaged start

To stop the daemon, the following command is used:

# /etc/init.d/rozofs-storaged stop

To get the current status of the daemon, the following command is used:

# /etc/init.d/rozofs-storaged status

To reload the storaged configuration file (storage.conf) after a configuration change, the following command is used:

# /etc/init.d/rozofs-storaged reload

To automatically start the storaged daemon every time the system boots, enter one of the following command lines.

For Red Hat-based systems:

# chkconfig rozofs-storaged on

For Debian-based systems:

# update-rc.d rozofs-storaged defaults

Systems other than Red Hat and Debian:

# echo "storaged" >> /etc/rc.local

The exportd daemon is started with the following command:

# /etc/init.d/rozofs-exportd start

To stop the daemon, the following command is used:

# /etc/init.d/rozofs-exportd stop

To get the current status of the daemon, the following command is used:

# /etc/init.d/rozofs-exportd status

To reload the exportd configuration file (export.conf) after a configuration change, the following command is used:

# /etc/init.d/rozofs-exportd reload

To automatically start the exportd daemon every time the system boots, enter one of the following command lines.

For Red Hat-based systems:

# chkconfig rozofs-exportd on

For Debian-based systems:

# update-rc.d rozofs-exportd defaults

Systems other than Red Hat and Debian:

# echo "exportd" >> /etc/rc.local
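When one provisioning script targets several distributions, the boot-time command can be picked automatically. A Python sketch under stated assumptions: the family names are hypothetical labels, the detection relies on the ID and ID_LIKE keys of /etc/os-release (which older systems may not provide), and the returned strings are simply the commands listed above.

```python
# Hypothetical helper: choose the boot-time activation command for storaged/exportd.
BOOT_COMMANDS = {
    "redhat": "chkconfig rozofs-{daemon} on",
    "debian": "update-rc.d rozofs-{daemon} defaults",
    "other": 'echo "{daemon}" >> /etc/rc.local',
}


def detect_family(os_release_text):
    """Guess the system family from the ID and ID_LIKE keys of /etc/os-release."""
    ids = set()
    for line in os_release_text.splitlines():
        key, _, value = line.partition("=")
        if key.strip() in ("ID", "ID_LIKE"):
            ids.update(value.strip().strip('"').split())
    if ids & {"rhel", "centos", "fedora"}:
        return "redhat"
    if ids & {"debian", "ubuntu"}:
        return "debian"
    return "other"


def boot_enable_command(daemon, family):
    """Return the command enabling `daemon` ("storaged" or "exportd") at boot."""
    if daemon not in ("storaged", "exportd"):
        raise ValueError(f"unknown daemon: {daemon}")
    return BOOT_COMMANDS.get(family, BOOT_COMMANDS["other"]).format(daemon=daemon)


family = detect_family("ID=ubuntu\nID_LIKE=debian\n")
print(boot_enable_command("exportd", family))  # → update-rc.d rozofs-exportd defaults
```

On a real host, the contents of /etc/os-release would be passed in and the resulting command run as root.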

After installing rozofsmount (the RozoFS client), you have to mount the exported RozoFS file system to access the data. Two methods are possible: manual or automatic mounting.

To mount a RozoFS file system manually, use the following command:

# rozofsmount -H EXPORT_IP -E EXPORT_PATH MOUNTDIR

For example, if the exported file system is /srv/rozofs/exports/export_1 and the IP address of the export server is 192.168.1.100:

$ rozofsmount -H 192.168.1.100 -E /srv/rozofs/exports/export_1 /mnt/rozofs/fs-1
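Scripts that mount exports manually can build the argument list rather than a shell string, which avoids quoting problems with paths. A minimal Python sketch; `rozofsmount_argv` and `mount_export` are hypothetical helpers, and only the -H and -E options shown above are used.

```python
import subprocess


def rozofsmount_argv(export_ip, export_path, mountdir):
    """Hypothetical helper: argv for `rozofsmount -H EXPORT_IP -E EXPORT_PATH MOUNTDIR`."""
    return ["rozofsmount", "-H", export_ip, "-E", export_path, mountdir]


def mount_export(export_ip, export_path, mountdir, dry_run=True):
    """Run rozofsmount (needs root and an installed client) unless dry_run is set."""
    argv = rozofsmount_argv(export_ip, export_path, mountdir)
    if not dry_run:
        subprocess.run(argv, check=True)  # raises CalledProcessError on failure
    return argv


print(mount_export("192.168.1.100", "/srv/rozofs/exports/export_1", "/mnt/rozofs/fs-1"))
```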

To unmount the file system:

$ umount /mnt/rozofs/fs-1

To automatically mount a RozoFS file system, edit the /etc/fstab file and add the following line:

rozofsmount MOUNTDIR rozofs exporthost=EXPORT_IP,exportpath=EXPORT_PATH,_netdev 0 0

For example, if the exported file system is /srv/rozofs/exports/export_1 and the IP address of the export server is 192.168.1.100:

rozofsmount /mnt/rozofs/fs1 rozofs exporthost=192.168.1.100,exportpath=/srv/rozofs/exports/export_1,_netdev 0 0
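The fstab entry uses the usual six fields (device, mount point, type, options, dump, pass), with the export server and path carried as mount options. A Python sketch that builds the entry and reads its options back, for instance to audit an existing /etc/fstab; both function names are hypothetical.

```python
def fstab_entry(mountdir, export_ip, export_path):
    """Hypothetical helper: build the /etc/fstab line for a RozoFS mount."""
    options = f"exporthost={export_ip},exportpath={export_path},_netdev"
    return f"rozofsmount {mountdir} rozofs {options} 0 0"


def parse_fstab_options(line):
    """Return the options field (4th column) as a dict; bare flags map to True."""
    fields = line.split()
    options = {}
    for item in fields[3].split(","):
        key, sep, value = item.partition("=")
        options[key] = value if sep else True
    return options


line = fstab_entry("/mnt/rozofs/fs1", "192.168.1.100", "/srv/rozofs/exports/export_1")
print(line)
print(parse_fstab_options(line)["exportpath"])  # → /srv/rozofs/exports/export_1
```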

RozoFS comes with a command line utility called rozo that aims to automate the management of a RozoFS platform. Its main purpose is to chain the operations required on the remote nodes involved in a high-level management task, such as stopping and starting the whole platform, adding new nodes to extend the capacity, adding new exports on a volume, etc.

Rozo is fully independent of the RozoFS daemons and processes and is not required for a fully functional system. However, when installed alongside RozoFS on each involved node, it greatly facilitates configuration, as it takes care of generating all the unique IDs of storage locations, clusters and so on. Although it is not a monitoring tool, rozo can also be used to easily get a description of the platform, its status and its configuration.

Rozo uses the configuration file of the running exportd as its base knowledge of the platform, so you can use rozo on any node (even one not involved in the platform).

You can get an overview of rozo capabilities and the help you need from the rozo manual:

# man rozo

Below are examples of rozo usage for common management tasks on an 8-node platform. Each command is launched on the node running exportd.

To get information about all the nodes in the platform and their roles:

root@fec4cloud-01:~# rozo nodes -E 172.19.34.221

NODE ROLES

172.19.34.208 ['storaged', 'rozofsmount']

172.19.34.201 ['storaged', 'rozofsmount']

172.19.34.202 ['storaged', 'rozofsmount']

172.19.34.203 ['storaged', 'rozofsmount']

172.19.34.204 ['storaged', 'rozofsmount']

172.19.34.205 ['storaged', 'rozofsmount']

172.19.34.206 ['storaged', 'rozofsmount']

172.19.34.207 ['storaged', 'rozofsmount']

fec4cloud-01 ['exportd']

You can easily list nodes according to their roles (exportd, storaged or rozofsmount) using the -r option.
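The `rozo nodes` output is also easy to consume from a script. The sketch below assumes that the two-column format shown above (node name, then a Python-style list of roles) stays stable, which is not guaranteed between versions. The helpers are hypothetical and only mimic, on the client side, the kind of filtering the -r option offers.

```python
import ast

SAMPLE_NODES = """NODE ROLES
172.19.34.208 ['storaged', 'rozofsmount']
172.19.34.201 ['storaged', 'rozofsmount']
fec4cloud-01 ['exportd']"""


def parse_rozo_nodes(text):
    """Hypothetical parser: map each node name to its list of roles."""
    nodes = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.split() == ["NODE", "ROLES"]:
            continue  # skip blank lines and the header
        name, _, roles = line.partition(" ")
        nodes[name] = ast.literal_eval(roles.strip())  # "['storaged', ...]" -> list
    return nodes


def nodes_with_role(nodes, role):
    """Sorted names of the nodes that carry `role`."""
    return sorted(name for name, roles in nodes.items() if role in roles)


nodes = parse_rozo_nodes(SAMPLE_NODES)
print(nodes_with_role(nodes, "storaged"))  # → ['172.19.34.201', '172.19.34.208']
```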

To get an overview of the status of the RozoFS processes on each node:

root@fec4cloud-01:~# rozo status -E 172.19.34.221

NODE: 172.19.34.208 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.201 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.202 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.203 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.204 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.205 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.206 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: 172.19.34.207 - UP

ROLE STATUS

storaged running

rozofsmount running

NODE: fec4cloud-01 - UP

ROLE STATUS

exportd running

You can easily filter node statuses by role using the -r option, or get the status of a specific node using the -n option.
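The per-node blocks of `rozo status` can likewise be turned into a small health check. The sketch assumes the layout shown above; this guide only shows the UP and running values, so any other value is simply reported rather than interpreted. The function names are hypothetical.

```python
SAMPLE_STATUS = """NODE: 172.19.34.208 - UP
ROLE STATUS
storaged running
rozofsmount running
NODE: fec4cloud-01 - UP
ROLE STATUS
exportd running"""


def parse_rozo_status(text):
    """Hypothetical parser: {node: {"state": ..., "roles": {role: status}}}."""
    result, current = {}, None
    for line in text.splitlines():
        line = line.strip()
        if not line or line == "ROLE STATUS":
            continue  # blank lines and per-node table headers
        if line.startswith("NODE:"):
            name, _, state = line[len("NODE:"):].strip().rpartition(" - ")
            current = result.setdefault(name, {"state": state, "roles": {}})
        elif current is not None:
            role, _, status = line.partition(" ")
            current["roles"][role] = status.strip()
    return result


def health_problems(status):
    """List nodes that are not UP and roles that are not running."""
    problems = []
    for node, info in status.items():
        if info["state"] != "UP":
            problems.append(f"{node}: node {info['state']}")
        for role, state in info["roles"].items():
            if state != "running":
                problems.append(f"{node}: {role} {state}")
    return problems


status = parse_rozo_status(SAMPLE_STATUS)
print(health_problems(status))  # → []
```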

root@fec4cloud-01:~# rozo stop -E 172.19.34.221

platform stopped

root@fec4cloud-01:~# rozo start -E 172.19.34.221

platform started

On the platform described by the rozo nodes command above, the stop and start operations stop/start the exportd and storaged daemons and unmount/mount the exports configured on every node.

root@fec4cloud-01:~# rozo config -E 172.19.34.221

NODE: 172.19.34.208 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 8 /srv/rozofs/storages/storage_1_8

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.201 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 1 /srv/rozofs/storages/storage_1_1

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.202 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 2 /srv/rozofs/storages/storage_1_2

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.203 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 3 /srv/rozofs/storages/storage_1_3

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.204 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 4 /srv/rozofs/storages/storage_1_4

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.205 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 5 /srv/rozofs/storages/storage_1_5

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.206 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 6 /srv/rozofs/storages/storage_1_6

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: 172.19.34.207 - UP

ROLE: storaged

PORTS: [40001, 40002, 40003, 40004]

CID SID ROOT

1 7 /srv/rozofs/storages/storage_1_7

ROLE: rozofsmount

NODE EXPORT

172.19.34.221 /srv/rozofs/exports/export_1

NODE: fec4cloud-01 - UP

ROLE: exportd

LAYOUT: 1

VOLUME: 1

CLUSTER: 1

NODE SID

172.19.34.201 1

172.19.34.202 2

172.19.34.203 3

172.19.34.204 4

172.19.34.205 5

172.19.34.206 6

172.19.34.207 7

172.19.34.208 8

EID VID ROOT MD5 SQUOTA HQUOTA

1 1 /srv/rozofs/exports/export_1

The output of rozo config shows the configuration of each node according to its role. In particular, this platform has one volume with one export relying on it.

Extending the platform (adding nodes) is easy with the rozo expand command. For example purposes, we will add all the nodes already involved in volume 1:

root@fec4cloud-01:~# rozo expand -E 172.19.34.221 172.19.34.201 172.19.34.202 172.19.34.203 172.19.34.204 172.19.34.205 172.19.34.206 172.19.34.207 172.19.34.208

As we added nodes without specifying the volume to expand, rozo has created a new volume (with id 2), as illustrated in the rozo config output extract below:

NODE: fec4cloud-01 - UP

ROLE: exportd

LAYOUT: 1

VOLUME: 1

CLUSTER: 1

NODE SID

172.19.34.201 1

172.19.34.202 2

172.19.34.203 3

172.19.34.204 4

172.19.34.205 5

172.19.34.206 6

172.19.34.207 7

172.19.34.208 8

VOLUME: 2

CLUSTER: 2

NODE SID

172.19.34.201 1

172.19.34.202 2

172.19.34.203 3

172.19.34.204 4

172.19.34.205 5

172.19.34.206 6

172.19.34.207 7

172.19.34.208 8

EID VID ROOT MD5 SQUOTA HQUOTA

1 1 /srv/rozofs/exports/export_1

Specifying a volume id (e.g. 1) would have resulted in the creation of a new cluster in that volume.
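The effect of rozo expand on the volume layout can be pictured with a toy model. This is not rozo's code: the allocation rule (the next VID or CID is the highest existing one plus one, and SIDs are numbered from 1 in the order the nodes are given) is an assumption that merely reproduces the output above.

```python
# Toy model of `rozo expand`; the id allocation rule is an assumption.
def expand(volumes, nodes, vid=None):
    """Add a cluster made of `nodes` to volume `vid`, or to a new volume if vid is None."""
    next_cid = max((c["cid"] for v in volumes for c in v["cids"]), default=0) + 1
    cluster = {"cid": next_cid,
               "sids": [{"sid": i, "host": h} for i, h in enumerate(nodes, start=1)]}
    if vid is None:
        next_vid = max((v["vid"] for v in volumes), default=0) + 1
        volumes.append({"vid": next_vid, "cids": [cluster]})
    else:
        target = next((v for v in volumes if v["vid"] == vid), None)
        if target is None:
            raise ValueError(f"no volume with vid {vid}")
        target["cids"].append(cluster)
    return volumes


nodes = [f"172.19.34.{n}" for n in range(201, 209)]
platform = [{"vid": 1, "cids": [{"cid": 1, "sids": [{"sid": i, "host": h}
                                                    for i, h in enumerate(nodes, 1)]}]}]
expand(platform, nodes)  # no volume given: new volume 2 holding cluster 2
print([(v["vid"], [c["cid"] for c in v["cids"]]) for v in platform])  # → [(1, [1]), (2, [2])]
```

Calling `expand(platform, nodes, vid=1)` instead would append a new cluster to volume 1, mirroring the alternative described above.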

The rozo export and unexport commands manage the creation (and deletion) of exports:

root@fec4cloud-01:~# rozo export -E 172.19.34.221 1

This creates a new export on volume 1 and configures all nodes with a rozofsmount role to mount this new export, as illustrated by the df output on one of the nodes:

root@fec4cloud-01:~# df

Filesystem 1K-blocks Used Available Use% Mounted on

rootfs 329233 163343 148892 53% /

udev 10240 0 10240 0% /dev

tmpfs 1639012 328 1638684 1% /run

/dev/mapper/fec4cloud--01-root 329233 163343 148892 53% /

tmpfs 5120 8 5112 1% /run/lock

tmpfs 3278020 16416 3261604 1% /run/shm

/dev/mapper/fec4cloud--01-home 4805760 140636 4421004 4% /home

/dev/mapper/fec4cloud--01-storages 884414828 204964 839284120 1% /srv/rozofs/storages

/dev/mapper/fec4cloud--01-tmp 376807 10254 347097 3% /tmp

/dev/mapper/fec4cloud--01-usr 8647944 573772 7634876 7% /usr

/dev/mapper/fec4cloud--01-var 2882592 307728 2428432 12% /var

/dev/drbd10 54045328 38259 51123665 1% /srv/rozofs/exports

rozofs 4867164832 0 4867164832 0% /mnt/rozofs@fec4cloud-01/export_1

rozofs 4867164832 0 4867164832 0% /mnt/rozofs@fec4cloud-01/export_2
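A script can confirm that a new export is mounted by looking for the entries whose file system column reads rozofs, as in the output above. A Python sketch with hypothetical helper names; running `df -P` (POSIX output format) under the C locale avoids wrapped lines and localized headers.

```python
import os
import subprocess


def rozofs_mounts(df_text):
    """Hypothetical helper: {mount_point: (size_kb, used_kb, available_kb)} for rozofs rows."""
    mounts = {}
    for line in df_text.splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) >= 6 and fields[0] == "rozofs":
            size, used, avail = (int(x) for x in fields[1:4])
            mounts[" ".join(fields[5:])] = (size, used, avail)
    return mounts


def current_df():
    """Run `df -P` with the C locale so that the format is predictable."""
    env = dict(os.environ, LC_ALL="C")
    return subprocess.run(["df", "-P"], capture_output=True, text=True,
                          check=True, env=env).stdout


SAMPLE_DF = """Filesystem 1K-blocks Used Available Use% Mounted on
/dev/drbd10 54045328 38259 51123665 1% /srv/rozofs/exports
rozofs 4867164832 0 4867164832 0% /mnt/rozofs@fec4cloud-01/export_1
rozofs 4867164832 0 4867164832 0% /mnt/rozofs@fec4cloud-01/export_2"""

print(sorted(rozofs_mounts(SAMPLE_DF)))
```

On a live node, `rozofs_mounts(current_df())` would give the same view of the mounted exports.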

RozoFS comes with a command line tool called rozodebug that gives the RozoFS administrator access to a large amount of statistics. Although these statistics are very helpful for troubleshooting and diagnostics (see section below), they might be far too detailed for everyday use. For that purpose, Fizians provides a simplified approach: Nagios modules. The Nagios scripts use rozodebug to provide high-level information about the system health. rozodebug communicates with the RozoFS components over TCP.

Each component of RozoFS (exportd, rozofsmount, storcli, storaged and storio) has an associated debug entity whose role is to provide:

- per-application statistics (profiler command):
  - metadata statistics (exportd, rozofsmount)
  - read/write performance counters (storio)
- CPU load of internal software entities
- information related to connectivity between components:
  - TCP connection status
  - availability of the load balancing groups associated with storage nodes, …
- version of the running software
- …

Here is the usage of rozodebug. An external application can communicate with any RozoFS component by providing the IP address and the well-known rozodebug port associated with that component.

rozodebug [-i <hostname>] -p <port> [-c <cmd>] [-f <cmd file>] [-period <seconds>] [-t <seconds>]
-i <hostname>       destination IP address or hostname of the debug server
                    default is 127.0.0.1
-p <port>           destination port number of the debug server
                    mandatory parameter
-c <cmd|all>        command to run in one shot or periodically (-period)
                    several -c options can be set
-f <cmd file>       command file to run in one shot or periodically (-period)
                    several -f options can be set
-period <seconds>   periodicity for running commands using -c or/and -f options
-t <seconds>        timeout value to wait for a response (default 4 seconds)
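Monitoring scripts (such as the Nagios modules mentioned above) typically wrap rozodebug rather than speak its protocol directly. A Python sketch that assembles a command line from the options listed in the usage; the function name is hypothetical, and port 50003 is the value this guide gives as the rozofsmount default.

```python
def rozodebug_argv(port, host=None, cmds=(), cmd_files=(), period=None, timeout=None):
    """Hypothetical helper: build a rozodebug command line from the documented options."""
    argv = ["rozodebug"]
    if host is not None:
        argv += ["-i", host]          # tool default is 127.0.0.1
    argv += ["-p", str(port)]         # mandatory
    for cmd in cmds:
        argv += ["-c", cmd]           # several -c options are allowed
    for path in cmd_files:
        argv += ["-f", path]          # several -f options are allowed
    if period is not None:
        argv += ["-period", str(period)]
    if timeout is not None:
        argv += ["-t", str(timeout)]  # tool default is 4 seconds
    return argv


print(rozodebug_argv(50003, host="localhost", cmds=["cpu", "version"]))
```

The list can be handed to `subprocess.run(argv, capture_output=True, text=True)` for one-shot queries; with -period, rozodebug presumably keeps running, so its output would have to be read incrementally instead.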

The cpu command displays CPU information for each function (callback) attached to the socket controller: the function name, the socket reference, the average CPU time, etc.

rozofsmount 0> cpu

...............................................

select max cpu time : 238 us

application sock last cumulated activation average

name nb cpu cpu times cpu

TMR_SOCK_XMIT 9 0 0 0 0

TMR_SOCK_RECV 10 14 420 18 23

DBG SERVER 11 0 0 0 0

rozofs_fuse 5 876 0 0 0

C:EXPORTD/127.0.0.1:683 12 745 0 0 0

C:STORCLI_1 6 0 0 0 0

C:STORCLI_0 13 0 0 0 0

DBG 192.168.2.1 14 108 108 1 108

scheduler 0 0 0 0 0

The cpu command operates in read-and-clear mode: once the statistics have been displayed, they are cleared. Fields:

- Sock nb: socket value within the process.
- Last cpu: duration of the last activation of the application, in microseconds.
- Cumulated cpu: cumulative CPU time of the application, in microseconds.
- Activation times: number of times the application has been called during the observation period.
- Average cpu: average CPU time of the application, in microseconds.
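Because the name column can contain spaces (DBG SERVER, DBG 192.168.2.1), the cpu output is easiest to parse from the right: the last five columns are integers. The Python sketch below does that on the sample above and checks the relation average = cumulated / activations using integer division (420 // 18 = 23 matches the TMR_SOCK_RECV row). That relation is inferred from the sample, not documented, and the function names are hypothetical. Since the command is read-and-clear, a poller would have to accumulate successive samples itself.

```python
SAMPLE_CPU = """select max cpu time : 238 us
application sock last cumulated activation average
name nb cpu cpu times cpu
TMR_SOCK_XMIT 9 0 0 0 0
TMR_SOCK_RECV 10 14 420 18 23
DBG SERVER 11 0 0 0 0
rozofs_fuse 5 876 0 0 0
C:EXPORTD/127.0.0.1:683 12 745 0 0 0
C:STORCLI_1 6 0 0 0 0
C:STORCLI_0 13 0 0 0 0
DBG 192.168.2.1 14 108 108 1 108
scheduler 0 0 0 0 0"""


def parse_cpu_rows(text):
    """Hypothetical parser for the cpu topic; the last five columns are integers."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 6 or not all(p.isdigit() for p in parts[-5:]):
            continue  # headers, separators and prompts
        sock, last, cumulated, activations, average = map(int, parts[-5:])
        rows.append({"name": " ".join(parts[:-5]), "sock": sock, "last": last,
                     "cumulated": cumulated, "activations": activations,
                     "average": average})
    return rows


def average_consistent(row):
    """Assumed relation: average == cumulated // activations (0 if never activated)."""
    expected = row["cumulated"] // row["activations"] if row["activations"] else 0
    return row["average"] == expected


rows = parse_cpu_rows(SAMPLE_CPU)
print([r["name"] for r in rows if r["activations"]])  # → ['TMR_SOCK_RECV', 'DBG 192.168.2.1']
```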

The default port of a rozofsmount process is 50003.

[root@localhost tests]# rozodebug -i localhost1 -p 50003

...............................................

system : rozofsmount 0

_________________________________________________________

rozofsmount 0> <enter>

...............................................

List of available topics :

af_unix

cpu

lbg

lbg_entries

profiler

tcp_info

tmr_default

tmr_set

tmr_show

trx

version

who

xmalloc

exit / quit / q

_________________________________________________________

rozofsmount 0>

The output of the cpu command represents the list of lightweight threads known by the socket controller of the system:

rozofsmount 0> cpu

...............................................

select max cpu time : 238 us

application sock last cumulated activation average

name nb cpu cpu times cpu

TMR_SOCK_XMIT 9 0 0 0 0

TMR_SOCK_RECV 10 14 420 18 23

DBG SERVER 11 0 0 0 0

rozofs_fuse 5 876 0 0 0

C:EXPORTD/127.0.0.1:683 12 745 0 0 0

C:STORCLI_1 6 0 0 0 0

C:STORCLI_0 13 0 0 0 0

DBG 192.168.2.1 14 108 108 1 108

scheduler 0 0 0 0 0

Here are the roles of the specific socket controller entities of a rozofsmount process:

- rozofs_fuse: this entity is responsible for processing the FUSE low-level requests: file system metadata operations, file read/write, etc.
- C:EXPORTD/127.0.0.1:683: this represents the TCP connection that rozofsmount establishes towards its associated exportd. The attached function processes the replies to the RPC requests submitted by rozofsmount.
- C:STORCLI_0 / C:STORCLI_1: these represent the AF_UNIX stream sockets of the local storage I/O clients owned by rozofsmount. The attached function processes the replies to the read, write and truncate requests submitted by rozofsmount.