usfxr is a C# library used to generate and play game-like procedural audio effects inside Unity. With usfxr, one can easily design and synthesize original sounds in real time for actions such as item pickups, jumps, lasers, hits, explosions, and more, without ever leaving the Unity editor.

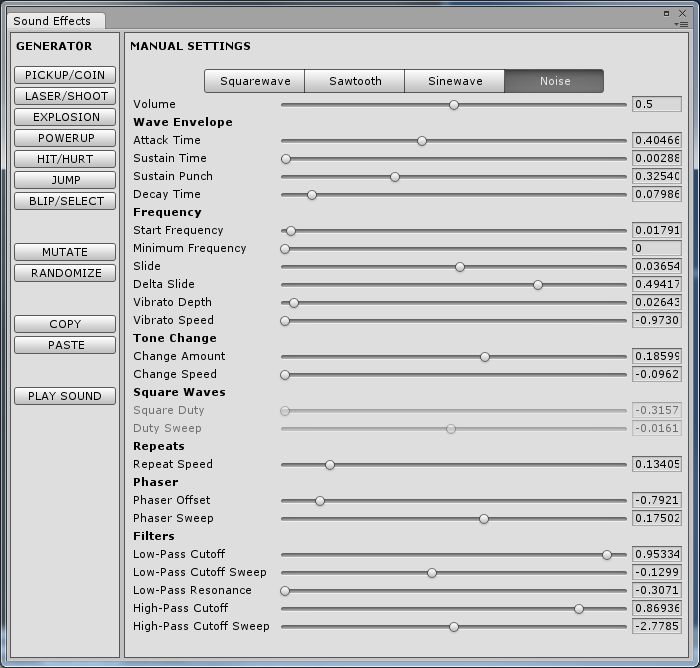

usfxr comes with code that allows for real-time audio synthesis in games, as well as an in-editor interface for creating and testing effects before you use them in your code.

usfxr is a port of Thomas Vian's as3sfxr, which is itself an ActionScript 3 port of Tomas Pettersson's sfxr. And as if that collection of acronyms weren't enough, usfxr also supports additional features first introduced by BFXR, such as new waveform types and filters.

This video explains the ideas behind as3sfxr, which are also the ideas supported by usfxr.

First things first: if you're just looking for a few good (but static) 16-bit-style sound effects to use in your games, you can use the sound files generated by the original sfxr or by the online version of as3sfxr as-is, since both applications export ready-to-use audio files.

However, by using a runtime library like usfxr, you can generate the same audio samples in real time, or re-synthesize effects generated in any of those tools by using a small list of parameters (encoded as a short string). The advantages of this approach are twofold:

- Audio is generated in real time, so there's no need to store audio files as assets, which makes compiled projects smaller

- It's easy to play a slightly different variation each time a sound is played, which adds more flavor to the gameplay experience
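The parameter string is just a comma-separated list of numbers. As a minimal sketch of reading one, the hypothetical SettingsStringSketch class below (not part of usfxr) splits the string and converts each field to a float. Two assumptions are worth flagging: an empty field is read as 0, and parsing uses the invariant culture so that the "." decimal point is understood regardless of the system locale.

```csharp
using System;
using System.Globalization;

// Hypothetical helper, not part of usfxr: turns an sfxr-style settings
// string into one float per comma-separated field.
public static class SettingsStringSketch
{
    public static float[] Parse(string settings)
    {
        string[] fields = settings.Split(',');
        var values = new float[fields.Length];
        for (int i = 0; i < fields.Length; i++)
        {
            // Assumption: an empty field stands for 0.
            // InvariantCulture keeps "0.5" from being misread in locales
            // that use "," as the decimal separator.
            values[i] = fields[i].Length == 0
                ? 0f
                : float.Parse(fields[i], CultureInfo.InvariantCulture);
        }
        return values;
    }
}
```

Parsing the example string from the workflow further down in this document returns 24 floats; empty fields, such as the second one, come back as 0.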

And while the library was meant to be used in real time, without static assets, the editor can also generate static WAV files for use in your games. In that case, nothing is synthesized at runtime, but you still get the advantage of an interface for designing original audio effects.
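A WAV file is a small header followed by raw samples. The sketch below, a hypothetical WavSketch helper rather than usfxr's actual exporter, shows the standard layout for 16-bit mono PCM: a RIFF header, a "fmt " chunk, and a "data" chunk. It assumes the synthesized samples are floats in [-1, 1] and clamps anything outside that range.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helper, not usfxr's exporter: encodes mono float samples
// in [-1, 1] as an in-memory 16-bit PCM WAV file.
public static class WavSketch
{
    public static byte[] Encode(float[] samples, int sampleRate)
    {
        const short channels = 1;
        const short bitsPerSample = 16;
        const short blockAlign = channels * bitsPerSample / 8; // bytes per sample frame
        int dataSize = samples.Length * blockAlign;

        using (var stream = new MemoryStream())
        {
            using (var w = new BinaryWriter(stream, Encoding.ASCII, leaveOpen: true))
            {
                // RIFF header; the size field excludes the first 8 bytes.
                w.Write(Encoding.ASCII.GetBytes("RIFF"));
                w.Write(36 + dataSize);
                w.Write(Encoding.ASCII.GetBytes("WAVE"));

                // "fmt " chunk describing uncompressed PCM.
                w.Write(Encoding.ASCII.GetBytes("fmt "));
                w.Write(16);                      // fmt chunk size for PCM
                w.Write((short)1);                // audio format 1 = PCM
                w.Write(channels);
                w.Write(sampleRate);
                w.Write(sampleRate * blockAlign); // byte rate
                w.Write(blockAlign);
                w.Write(bitsPerSample);

                // "data" chunk: clamp each sample, then scale to 16 bits.
                w.Write(Encoding.ASCII.GetBytes("data"));
                w.Write(dataSize);
                foreach (float s in samples)
                {
                    float clamped = Math.Max(-1f, Math.Min(1f, s));
                    w.Write((short)(clamped * short.MaxValue));
                }
            }
            return stream.ToArray();
        }
    }
}
```

Saving the result is then a matter of File.WriteAllBytes("effect.wav", WavSketch.Encode(samples, 44100)), where the file name and the 44,100 Hz sample rate are only placeholders.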

I claim no credit for the source code or the interface, since they were simply adapted from Thomas Vian's own code and (elegant) as3sfxr interface, as well as from Stephen Lavelle's additional BFXR features. As such, usfxr offers the same features as these two ports, such as caching of generated audio and the ability to play sounds with variations. But because the code was adapted to a different platform (Unity), it also has advantages of its own:

- Fast audio synthesis

- Ability to cache sounds the first time they're played

- Completely asynchronous caching and playback: sound is generated on a separate, non-blocking thread with minimal impact on gameplay

- Minimal setup: as a full code-based solution, no drag-and-drop or additional game object placement is necessary

- In-editor interface to test and generate audio parameters

Download the latest "usfxr" zip file from the "/build" folder of the GitHub repository and extract its contents into the "Scripts" (or equivalent) folder of your Unity project. Alternatively, you can download and install usfxr from the Unity Asset Store.

After doing that, the usfxr interface should be available inside Unity, and you should be able to instantiate and play SfxrSynth objects in your project.

Typically, the workflow for using usfxr inside a project is as follows:

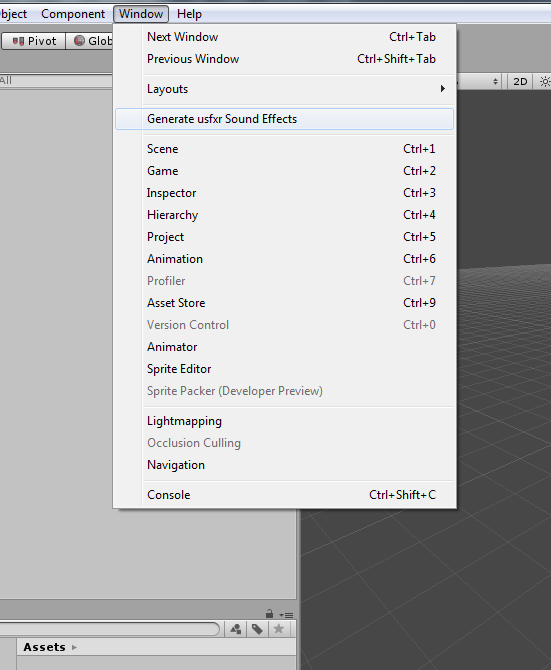

- Use the menu "Window" > "Generate usfxr Sound Effects" to open the sound effects window

- Play around with the sound parameters until you generate a sound effect that you want to use

- Click "COPY" to copy the effect parameters to the clipboard (as a string)

- Write some code that stores your sound effect, pasting in the copied string:

```csharp
SfxrSynth synth = new SfxrSynth();
synth.parameters.SetSettingsString("0,,0.032,0.4138,0.4365,0.834,,,,,,0.3117,0.6925,,,,,,1,,,,,0.5");
```

Finally, to play your audio effect, you call its Play() method where appropriate:

```csharp
synth.Play();
```

With usfxr, all audio data is generated and cached internally the first time an effect is played, in real time. That way, any potential heavy load in generating audio doesn't have to be repeated the next time the sound is played, since it will already be in memory.

Because of that, while it's possible to create new SfxrSynth instances every time they need to be played, it's usually a better idea to keep them around and reuse them as needed.
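One way to follow this advice is to build each synth once per settings string and hand back the same instance afterwards. The generic InstanceCache class below is a hypothetical helper, not part of usfxr; it only assumes some factory that builds an object from a settings string. It is not thread-safe, so it is meant to be used from the main thread.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical pattern, not a usfxr class: create one instance per
// settings string on first use, then always return that same instance.
public sealed class InstanceCache<T>
{
    private readonly Dictionary<string, T> instances = new Dictionary<string, T>();
    private readonly Func<string, T> factory;

    public InstanceCache(Func<string, T> factory)
    {
        this.factory = factory;
    }

    public T Get(string settings)
    {
        if (!instances.TryGetValue(settings, out T instance))
        {
            // First request for this effect: build it and remember it.
            instance = factory(settings);
            instances[settings] = instance;
        }
        return instance;
    }
}
```

In a Unity project, the factory could create a new SfxrSynth, call parameters.SetSettingsString() with the key, and return the synth, so repeated requests for the same effect reuse its already-cached audio. That wiring is an untested assumption.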

It's also important to note that audio data is not generated all at once. This is a good thing: the data is generated as needed, in batches, before playback of each chunk of roughly 20 ms, so long audio effects don't need a long up-front generation step. Audio is also generated on a separate thread, so the impact on actual game execution should be minimal. See OnAudioFilterRead for more details on how this happens.
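To make the batching idea concrete: at 44,100 Hz, a 20 ms chunk is 0.02 s × 44,100 samples/s = 882 samples. The hypothetical ChunkedSampleCache class below sketches the general technique, not usfxr's implementation. Each Read call (which in Unity might be driven by OnAudioFilterRead) generates only the samples that haven't been cached yet, so the first playback fills the cache gradually and later playbacks just copy from memory. A Func<int, float> stands in for the synthesizer, and the lock is there on the assumption that the audio thread and the main thread may both touch the cache.

```csharp
using System;

// Hypothetical sketch, not usfxr's implementation: samples are generated
// in batches on first playback and served from memory afterwards.
public sealed class ChunkedSampleCache
{
    private readonly Func<int, float> generateSample; // stand-in for the synth
    private readonly float[] cache;
    private readonly object gate = new object();      // guards cross-thread access
    private int generatedCount;                        // samples [0, generatedCount) are cached

    public ChunkedSampleCache(int totalSamples, Func<int, float> generateSample)
    {
        cache = new float[totalSamples];
        this.generateSample = generateSample;
    }

    public bool IsFullyCached
    {
        get { lock (gate) return generatedCount == cache.Length; }
    }

    // Copies samples starting at 'start' into 'buffer', generating only
    // the ones not cached yet. Returns how many samples were written.
    public int Read(int start, float[] buffer)
    {
        if (start < 0) throw new ArgumentOutOfRangeException(nameof(start));
        lock (gate)
        {
            int end = Math.Min(start + buffer.Length, cache.Length);
            for (int i = generatedCount; i < end; i++)
                cache[i] = generateSample(i);
            if (end > generatedCount) generatedCount = end;

            int count = end - start;
            if (count <= 0) return 0;
            Array.Copy(cache, start, buffer, 0, count);
            return count;
        }
    }
}
```

Reading a 10-sample effect with a 4-sample buffer returns 4, 4, and then 2 samples; a second pass over the same range copies from the cache without calling the generator again.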

Alternatively, you can also use the online version of as3sfxr to generate the parameter string used when creating SfxrSynth instances. Strings generated by as3sfxr use the same format as usfxr, so they're interchangeable.

Although this is rarely needed, with long or numerous audio effects it may make sense to cache them before they are allowed to be played. This is done by calling the CacheSound() method first, as in:

```csharp
SfxrSynth synth = new SfxrSynth();
synth.parameters.SetSettingsString("0,,0.032,0.4138,0.4365,0.834,,,,,,0.3117,0.6925,,,,,,1,,,,,0.5");
synth.CacheSound();

// ...

synth.Play();
```

This caches the audio synchronously; that is, code execution is blocked until synth.CacheSound() returns. It's also possible to cache asynchronously by providing a callback function, like so:

```csharp
SfxrSynth synth = new SfxrSynth();
synth.parameters.SetSettingsString("0,,0.032,0.4138,0.4365,0.834,,,,,,0.3117,0.6925,,,,,,1,,,,,0.5");
synth.CacheSound(() => synth.Play());
```

In this case, CacheSound() returns immediately, and the audio starts being generated alongside other code, spread over several frames (as a coroutine). When the audio is cached, the callback (here, a lambda that calls Play()) is invoked.
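A coroutine doesn't run on another thread; it does a slice of work, yields, and is resumed later (in Unity, typically on the next frame). The hypothetical CacheInSlices method below sketches that pattern, assuming a Func<int, float> produces each sample: it fills the cache one slice at a time and invokes the callback once everything is done, which mirrors the role of the callback passed to CacheSound(). It is an illustration of the technique, not usfxr's code.

```csharp
using System;
using System.Collections;

// Hypothetical sketch of coroutine-style caching: fill a slice, yield,
// resume later, and invoke the callback when the whole cache is filled.
public static class CoroutineCacheSketch
{
    public static IEnumerator CacheInSlices(float[] cache, Func<int, float> generateSample,
                                            int samplesPerSlice, Action onCached)
    {
        if (samplesPerSlice <= 0) throw new ArgumentOutOfRangeException(nameof(samplesPerSlice));

        for (int start = 0; start < cache.Length; start += samplesPerSlice)
        {
            int end = Math.Min(start + samplesPerSlice, cache.Length);
            for (int i = start; i < end; i++)
                cache[i] = generateSample(i);
            yield return null; // hand control back until the next resume
        }
        onCached?.Invoke(); // e.g. start playback once caching is complete
    }
}
```

In Unity, such an enumerator would be handed to StartCoroutine(); outside Unity, calling MoveNext() in a loop drives it the same way, one slice per call.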

For reference, it typically takes around 7 to 70 ms to cache an audio effect on a desktop computer, depending on the effect's length and complexity. Depending on the game, it can therefore be better to let audio be cached as it's played, or to cache all audio up front at the beginning of gameplay, such as before a level starts.

An important note when comparing with as3sfxr: the ActionScript 3 virtual machine doesn't normally support multi-threading, or parallel execution of code. Because of this, as3sfxr's asynchronous caching methods differ from the ones adopted by usfxr, since Unity can spread work across frames (using coroutines) or run code on a separate thread entirely (as with OnAudioFilterRead). As such, your caching strategy in Unity normally won't have to be as elaborate as in an ActionScript 3 project; in the vast majority of cases, it's better to ignore caching altogether and let the library handle it by simply calling Play().

By default, all audio is attached to the first main camera available (that is, Camera.main). If you want to attach your audio playback to a different game object, and thus produce positional audio, call SetParentTransform() on your SfxrSynth instance, as in:

```csharp
synth.SetParentTransform(gameObject.transform);
```

This attaches the audio to a specific game object. See the documentation for more details.

Besides the usfxr source code, the Git repository contains a few example projects that use the library. They are meant to show how to use usfxr properly by solving common patterns that emerge in game development when it comes to playing audio. The sample projects currently available are as follows:

This is a C# project that serves as a benchmark for the audio generation code. Run this example on different platforms to get an idea of how long each system takes to generate each sound effect. Because audio is generated in real time the first time it's played, heavy audio generation can have a negative impact on game performance, so keep the results of this test in mind when deciding on a procedural audio solution for a game. A performance hit in audio generation may mean that you have to cache audio before a level starts or, in the worst case, abandon the idea of using procedural audio altogether.
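If you want to run a similar measurement inside your own project, a simple approach is to time the generation work with System.Diagnostics.Stopwatch. The GenerationBenchmark helper below is hypothetical and not the benchmark sample's code; it does one untimed warm-up run so that JIT compilation isn't counted, then reports the average time per run.

```csharp
using System;
using System.Diagnostics;

// Hypothetical timing helper: averages the wall-clock time of a
// generation function over several runs, after one warm-up run.
public static class GenerationBenchmark
{
    public static double AverageMilliseconds(Action generate, int runs)
    {
        if (runs <= 0) throw new ArgumentOutOfRangeException(nameof(runs));

        generate(); // warm-up: keeps JIT compilation out of the measurement

        var stopwatch = Stopwatch.StartNew();
        for (int i = 0; i < runs; i++)
            generate();
        stopwatch.Stop();

        return stopwatch.Elapsed.TotalMilliseconds / runs;
    }
}
```

Since an effect is only generated the first time it's played, each run should do fresh generation work (for instance, on a new instance); otherwise later runs would only measure reading from the cache.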

A simple example of audio generation and playback. Press keys A-E to generate audio in different ways, and check Unity's console log for more information on what was generated.

A very simple game with basic objects: use the W, A, S and D (or arrow) keys to move around a cube in space. Press SPACE to shoot bullets. Enemy circles will spawn on the right of the screen; hit them with bullets to destroy them.

In this game prototype, all audio (bullets, enemy spawns, and enemy explosions) is handled by usfxr. Since all audio effects are played in their "mutated" form, this example shows how the same effect can be played with slight variations, adding some flavor to the lo-fi audio emitted by the game.

Press the Z key to toggle mutated audio on and off. When it's off, the original audio is played. Try playing the game in both modes and notice how much more repetitive the audio sounds when mutated mode is off.

Play this sample online here.
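The "mutated" playback described above boils down to randomly perturbing an effect's parameters before synthesizing it. The hypothetical MutationSketch below shows one simple way to do that, not necessarily usfxr's exact rule: each parameter has a 50% chance of being shifted by a random amount in [-amount, +amount). Passing in a seeded System.Random makes the variations reproducible.

```csharp
using System;

// Hypothetical mutation sketch, not usfxr's code: each parameter has a
// 50% chance of being nudged by a random offset in [-amount, +amount).
public static class MutationSketch
{
    public static float[] Mutate(float[] parameters, float amount, Random random)
    {
        var mutated = (float[])parameters.Clone(); // leave the original untouched
        for (int i = 0; i < mutated.Length; i++)
        {
            if (random.NextDouble() < 0.5)
            {
                // NextDouble() is in [0, 1), so the offset is in [-amount, amount).
                mutated[i] += (float)(random.NextDouble() * 2.0 - 1.0) * amount;
            }
        }
        // A fuller version would clamp each parameter to its own valid range
        // and skip discrete ones such as the waveform type; this sketch doesn't.
        return mutated;
    }
}
```

A small amount such as 0.05 (an arbitrary choice here) keeps each variation close to the original sound while still breaking up obvious repetition.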

- Create button to copy code

- Test in JavaScript/create example

- Create button to export to Unity sound clip/game object

- Show waveform in GUI

- Add stats such as memory and build time to GUI

- Show effect duration in GUI

- Undo/Redo

- Add option to "lock" GUI items like BFXR

- Automatic static caching?

- Allow clip/game objects to be edited/re-synthesized?

- Add more presets like SFMaker: http://www.leshylabs.com/apps/sfMaker/

- Tomas Pettersson created the original sfxr, where all the concepts for usfxr come from.

- Thomas Vian created as3sfxr, the original code that was ported to C# for usfxr.

- Tiaan Geldenhuys contributed to usfxr by cleaning the code and creating the original version of the in-editor window that I bastardized later.

- Stephen Lavelle created BFXR, an AS3 port of SFXR with several new features of its own, many of which have been adopted by usfxr.

- Michael Bailey contributed bug fixes.

- Owen Roberts contributed bug reports.

- Enrique J. Gil created the sound list saving/loading feature (SfxrSound) and its UI.

- If you want a C#/.Net port of sfxr that is not heavily coupled with Unity, nsfxr by Nathan Chere is what you're looking for.

2014-12-17 (1.4)

- Editor: Audio plays correctly when using the editor window in non-play mode in Unity 5 beta

2014-08-02 (1.3)

- Editor: SfxrGameObject instances are properly removed when switching between play and edit mode (no more leftover objects)

- Editor: The same SfxrSynth instance is reused when generating test sounds, rather than creating new instances on every change (better memory use)

- Fixed showstopping error that prevented publishing in non-PC platforms

- Added option to export WAV file to SfxrSynth (and the GUI)

2014-07-12 (1.2)

- Added support for BFXR's new filters: compression, harmonics, and bitcrusher

- Added support for BFXR's expanded pitch jump effects

- Added support for BFXR's parameter strings (standard SFXR parameter strings still work)

- Added support for BFXR's new waveform types: triangle, breaker, tan, whistle, and pink noise

2014-06-10 (1.1)

- Small internal optimizations: generates audio samples in about 9% less time

- Modified SfxrSynth and SfxrAudioPlayer to properly allow sound playback when running the editor in edit mode

- Added an editor window to generate sound parameters right inside Unity

- Modified SfxrSynth to use the (safer) system-based random number generator (fixes errors thrown when attempting to generate noise from separate threads)

- Samples: "SpaceGame" has enemies that collide with bullets (taking damage and making bullets disappear) and explode when health reaches 0

- Samples: "SpaceGame" has a toggle for mutated audio mode

- Added movable enemies (spheres) to SpaceGame

- Added asynchronous generation (with callbacks) for CacheSound() and CacheMutations()

- Code cleanup and removal of useless files

- Samples: "Main" is a bit more user-friendly

- Samples: Added initial SpaceGame

- Users can now set the parent transform of the audio (for proper audio positioning) with SetParentTransform()

- Replaced Random.value calls with a more correct getRandom() function

- Added SfxrSynth.CacheMutations() (fixes #12)

- Removed legacy code for WAV generation (closes #16 and fixes #17, #18)

For a list of things that need to be done, check the issues tab.