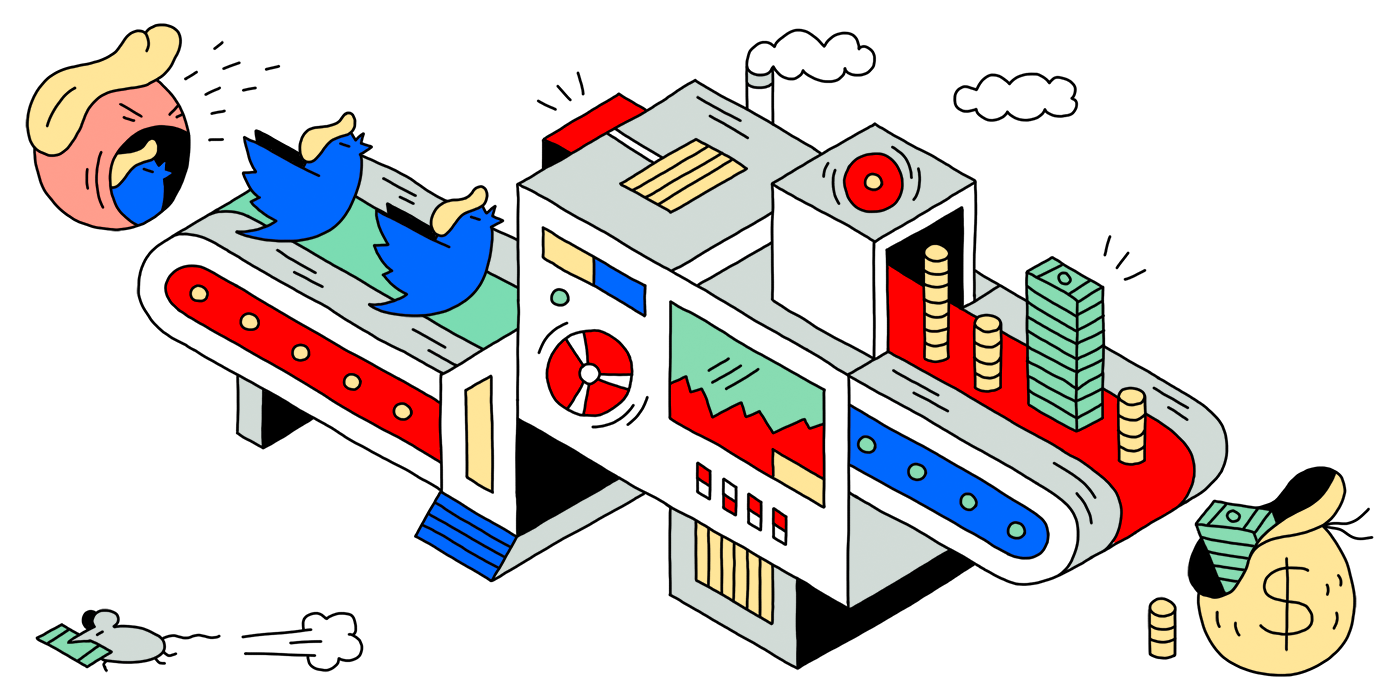

This bot watches Donald Trump's tweets and waits for him to mention any publicly traded companies. When he does, it uses sentiment analysis to determine whether his opinions are positive or negative toward those companies. The bot then automatically executes trades on the relevant stocks according to the expected market reaction. It also tweets out a summary of its findings in real time at @Trump2Cash.

You can read more about the background story here.

The code is written in Python and is meant to run on a Google Compute Engine instance. It uses the Twitter Streaming APIs to get notified whenever Trump tweets. The entity detection and sentiment analysis are done using Google's Cloud Natural Language API, and the Wikidata Query Service provides the company data. The TradeKing API does the stock trading.

The `main` module defines a callback where incoming tweets are handled and starts streaming Trump's feed:

```python
def twitter_callback(tweet):
    companies = analysis.find_companies(tweet)
    if companies:
        trading.make_trades(companies)
        twitter.tweet(companies, tweet)

if __name__ == "__main__":
    twitter.start_streaming(twitter_callback)
```

The core algorithms are implemented in the `analysis` and `trading` modules. The former finds mentions of companies in the text of the tweet, figures out what their ticker symbol is, and assigns a sentiment score to them. The latter chooses a trading strategy, which is either buy now and sell at close or sell short now and buy to cover at close. The `twitter` module deals with streaming and tweeting out the summary.
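The strategy choice described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual `trading` module: the `choose_strategy` function, the strategy labels, the sample tickers, and the rule that a positive score is bullish and a negative score is bearish are all assumptions made for the example.

```python
def choose_strategy(sentiment):
    """Map a sentiment score to one of the two strategies described above.

    Hypothetical helper: assumes positive scores mean favorable sentiment
    and negative scores mean unfavorable sentiment.
    """
    if sentiment > 0:
        # Expect the price to rise: buy now and sell at close.
        return "buy now, sell at close"
    if sentiment < 0:
        # Expect the price to fall: sell short now and buy to cover at close.
        return "sell short now, buy to cover at close"
    # Neutral sentiment gives no signal, so this sketch places no trade.
    return None


# Hypothetical input shaped like what an analysis step might produce.
companies = [
    {"ticker": "AAA", "sentiment": 0.4},
    {"ticker": "BBB", "sentiment": -0.6},
]
for company in companies:
    print(company["ticker"], "->", choose_strategy(company["sentiment"]))
```

Returning `None` for a neutral score is a design choice of this sketch: it keeps the bot out of the market when the tweet carries no clear opinion.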

Follow these steps to run the code yourself:

Check out the quickstart to create a Cloud Platform project and a Linux VM instance with Compute Engine, then SSH into it for the steps below. Pick a predefined machine type matching your preferred price and performance.

Alternatively, you can use the `Dockerfile` to build a Docker container and run it on Compute Engine or other platforms.

```shell
docker build -t trump2cash .
docker tag trump2cash gcr.io/<YOUR_GCP_PROJECT_NAME>/trump2cash
docker push gcr.io/<YOUR_GCP_PROJECT_NAME>/trump2cash:latest
```

The authentication keys for the different APIs are read from shell environment variables. Each service has different steps to obtain them.
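Because every key comes from the environment, a quick check before starting the bot can catch a missing export early. The sketch below is not part of the project; `REQUIRED_VARIABLES` and `missing_variables` are hypothetical names, and the list simply mirrors the variables exported in the steps that follow.

```python
import os

# Variable names taken from the export commands in this README.
# POLYGON_API_KEY is left out because only the benchmark needs it.
REQUIRED_VARIABLES = [
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
    "GOOGLE_APPLICATION_CREDENTIALS",
    "TRADEKING_CONSUMER_KEY",
    "TRADEKING_CONSUMER_SECRET",
    "TRADEKING_ACCESS_TOKEN",
    "TRADEKING_ACCESS_TOKEN_SECRET",
    "TRADEKING_ACCOUNT_NUMBER",
]


def missing_variables(environ=os.environ):
    """Return the required variable names that are unset or empty."""
    return [name for name in REQUIRED_VARIABLES if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_variables()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All required environment variables are set.")
```

Passing the environment in as a parameter keeps the helper easy to exercise with a plain dictionary instead of the real shell environment.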

Log in to your Twitter account and create a new application. Under the Keys and Access Tokens tab for your app you'll find the Consumer Key and Consumer Secret. Export both to environment variables:

```shell
export TWITTER_CONSUMER_KEY="<YOUR_CONSUMER_KEY>"
export TWITTER_CONSUMER_SECRET="<YOUR_CONSUMER_SECRET>"
```

If you want the tweets to come from the same account that owns the application, simply use the Access Token and Access Token Secret on the same page. If you want to tweet from a different account, follow the steps to obtain an access token. Then export both to environment variables:

```shell
export TWITTER_ACCESS_TOKEN="<YOUR_ACCESS_TOKEN>"
export TWITTER_ACCESS_TOKEN_SECRET="<YOUR_ACCESS_TOKEN_SECRET>"
```

Follow the Google Application Default Credentials instructions to create, download, and export a service account key.

```shell
export GOOGLE_APPLICATION_CREDENTIALS="/path/to/credentials-file.json"
```

You also need to enable the Cloud Natural Language API for your Google Cloud Platform project.

Log in to your TradeKing account and create a new application. Behind the Details button for your application you'll find the Consumer Key, Consumer Secret, OAuth (Access) Token, and OAuth (Access) Token Secret. Export them all to environment variables:

```shell
export TRADEKING_CONSUMER_KEY="<YOUR_CONSUMER_KEY>"
export TRADEKING_CONSUMER_SECRET="<YOUR_CONSUMER_SECRET>"
export TRADEKING_ACCESS_TOKEN="<YOUR_ACCESS_TOKEN>"
export TRADEKING_ACCESS_TOKEN_SECRET="<YOUR_ACCESS_TOKEN_SECRET>"
```

Also export your TradeKing account number, which you'll find under My Accounts:

```shell
export TRADEKING_ACCOUNT_NUMBER="<YOUR_ACCOUNT_NUMBER>"
```

There are a few library dependencies, which you can install using pip:

```shell
$ pip install -r requirements.txt
```

Verify that everything is working as intended by running the tests with pytest using this command:

```shell
$ export USE_REAL_MONEY=NO && pytest *.py -vv
```

The benchmark report shows how the current implementation of the analysis and trading algorithms would have performed against historical data. You can run it again to benchmark any changes you may have made. You'll need a Polygon account:

```shell
$ export POLYGON_API_KEY="<YOUR_POLYGON_API_KEY>"
$ python benchmark.py > benchmark.md
```

Enable real orders that use your money:

```shell
$ export USE_REAL_MONEY=YES
```

Have the code start running in the background with this command:

```shell
$ nohup python main.py &
```
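A switch such as `USE_REAL_MONEY` is typically read from the environment and checked before any order is placed. The sketch below shows one way that could look; the `real_money_enabled` and `place_order` helpers and the `AAA` ticker are hypothetical and do not reflect the project's actual trading code.

```python
import os


def real_money_enabled(environ=os.environ):
    """Return True only when USE_REAL_MONEY is exactly "YES"."""
    return environ.get("USE_REAL_MONEY") == "YES"


def place_order(ticker, quantity, environ=os.environ):
    """Hypothetical order function that only simulates unless enabled."""
    if not real_money_enabled(environ):
        # Default to the safe path: anything other than "YES" is a dry run.
        return "SIMULATED order: %d shares of %s" % (quantity, ticker)
    # A real implementation would call the brokerage API here.
    return "REAL order: %d shares of %s" % (quantity, ticker)


print(place_order("AAA", 10, {"USE_REAL_MONEY": "NO"}))
```

Treating every value other than the exact string `YES` as a dry run means a typo or an unset variable cannot accidentally trigger real trades.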