Genoss is a pioneering open-source initiative that aims to offer a seamless alternative to OpenAI models such as GPT 3.5 & 4, using open-source models like GPT4ALL.

Project bootstrapped using Sicarator

- Open-Source: Genoss is built on top of open-source models like GPT4ALL.

- One Line Replacement: Genoss is a one-line replacement for OpenAI ChatGPT API.

Chat Completion and Embedding with GPT4ALL


- GPT4ALL Model & Embeddings

- More models coming soon!

Before you embark, ensure Python 3.11 or higher is installed on your machine.
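If you are unsure which interpreter will be used, the version requirement can be checked from Python itself. This is only a minimal sketch: the helper name `meets_minimum` is hypothetical and not part of Genoss.

```python
import sys

# Minimum version stated in this README.
MINIMUM = (3, 11)


def meets_minimum(version=None, minimum=MINIMUM):
    """Return True if `version` (default: the running interpreter) is at least `minimum`."""
    if version is None:
        version = sys.version_info
    # Compare only (major, minor), e.g. (3, 11) >= (3, 11).
    return tuple(version[:2]) >= tuple(minimum)


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    status = "OK" if meets_minimum() else "too old for Genoss"
    print(f"Python {current}: {status}")
```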

    pip install genoss
    pip install git+https://github.com/OpenGenerativeAI/GenossGPT.git@main\#egg\=genoss
    genoss-server
    # To know more
    genoss-server --help

Access the API docs at http://localhost:4321/docs.

Install GPT4ALL Model

The first step is to install GPT4ALL, which is the only supported model at the moment. You can do this by following these steps:

- Clone the repository:

      git clone --recurse-submodules git@github.com:nomic-ai/gpt4all.git

- Navigate to the backend directory:

      cd gpt4all/gpt4all-backend/

- Create a new build directory and navigate into it:

      mkdir build && cd build

- Configure and build the project using cmake:

      cmake ..
      cmake --build . --parallel

- Verify that `libllmodel.*` exists in `gpt4all-backend/build`.

- Navigate back to the root and install the Python package:

      cd ../../gpt4all-bindings/python
      pip3 install -e .

- Download the model to your local machine and put it in the `local_models` directory as `local_models/ggml-gpt4all-j-v1.3-groovy.bin`.
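The two checks in the steps above (the built `libllmodel.*` library and the model file location) can also be scripted. The sketch below is not part of Genoss. The helper names are hypothetical, and the roots are assumptions: it assumes `gpt4all` was cloned into the current directory and that `local_models` sits in the directory you run it from.

```python
from pathlib import Path

# Paths taken from the steps above; adjust the roots to your own checkout.
MODEL_RELATIVE_PATH = Path("local_models") / "ggml-gpt4all-j-v1.3-groovy.bin"
BACKEND_BUILD_DIR = Path("gpt4all") / "gpt4all-backend" / "build"


def find_backend_libraries(build_dir):
    """Return the libllmodel.* files found directly in the build directory."""
    return sorted(Path(build_dir).glob("libllmodel.*"))


def model_is_present(project_root="."):
    """Return True if the model file sits where the instructions place it."""
    return (Path(project_root) / MODEL_RELATIVE_PATH).is_file()


if __name__ == "__main__":
    libraries = find_backend_libraries(BACKEND_BUILD_DIR)
    print("backend libraries:", [lib.name for lib in libraries] or "none found")
    print("model present:", model_is_present())
```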

You need to install Poetry and a valid Python version (3.11*).

    poetry install

For a complete development install, see CONTRIBUTING.md. If you simply want to start the server, install only the corresponding Poetry groups:

    poetry install --only main,llms

After the Python package has been installed, you can run the application with the Uvicorn ASGI server:

    uvicorn main:app --host 0.0.0.0 --port 4321

This command launches the Genoss application on port 4321 of your machine.
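Once the server has been started, a plain TCP connection attempt is a quick way to confirm that something is listening on port 4321. This is a sketch using only the standard library. The helper `is_port_open` is hypothetical, and a successful connection only shows that the port is open, not that Genoss itself is healthy.

```python
import socket


def is_port_open(host="127.0.0.1", port=4321, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    state = "accepting connections" if is_port_open() else "not reachable"
    print(f"Port 4321 is {state}")
```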

In the `demo/` directory:

    cp .env.example .env

Replace the values, then run:

    PYTHONPATH=. streamlit run demo/main.py

The Genoss API is a one-line replacement for the OpenAI ChatGPT API. It supports the same parameters and returns the same response format as the OpenAI API.

Simply replace the OpenAI API endpoint with the Genoss API endpoint and you're good to go!

You also need to set the model to one from the list of supported models.
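To illustrate what swapping the endpoint might look like, the sketch below builds an OpenAI-style chat completion request aimed at a local Genoss server, without sending it. Several names here are assumptions rather than confirmed Genoss behavior: the base URL reuses port 4321 from the instructions above, while the route `/chat/completions` and the model name `gpt4all` are hypothetical. Check the `/docs` page of your running server for the actual routes and model identifiers.

```python
import json
import urllib.request

# Assumptions for illustration only: the route and the model name are
# hypothetical and should be checked against the server's /docs page.
GENOSS_BASE_URL = "http://localhost:4321"
CHAT_ROUTE = "/chat/completions"
MODEL_NAME = "gpt4all"


def build_chat_request(prompt, base_url=GENOSS_BASE_URL, model=MODEL_NAME):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + CHAT_ROUTE,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("Hello!")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # To send it to a running server:
    # with urllib.request.urlopen(request) as response:
    #     print(json.load(response))
```

Because the payload keeps the OpenAI shape, switching between the two services in this sketch comes down to the `base_url` and `model` arguments.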

You can find the API documentation at /docs or /redoc.

While GPT4ALL is the only model currently supported, we are planning to add more models in the future. So, stay tuned for more exciting updates.

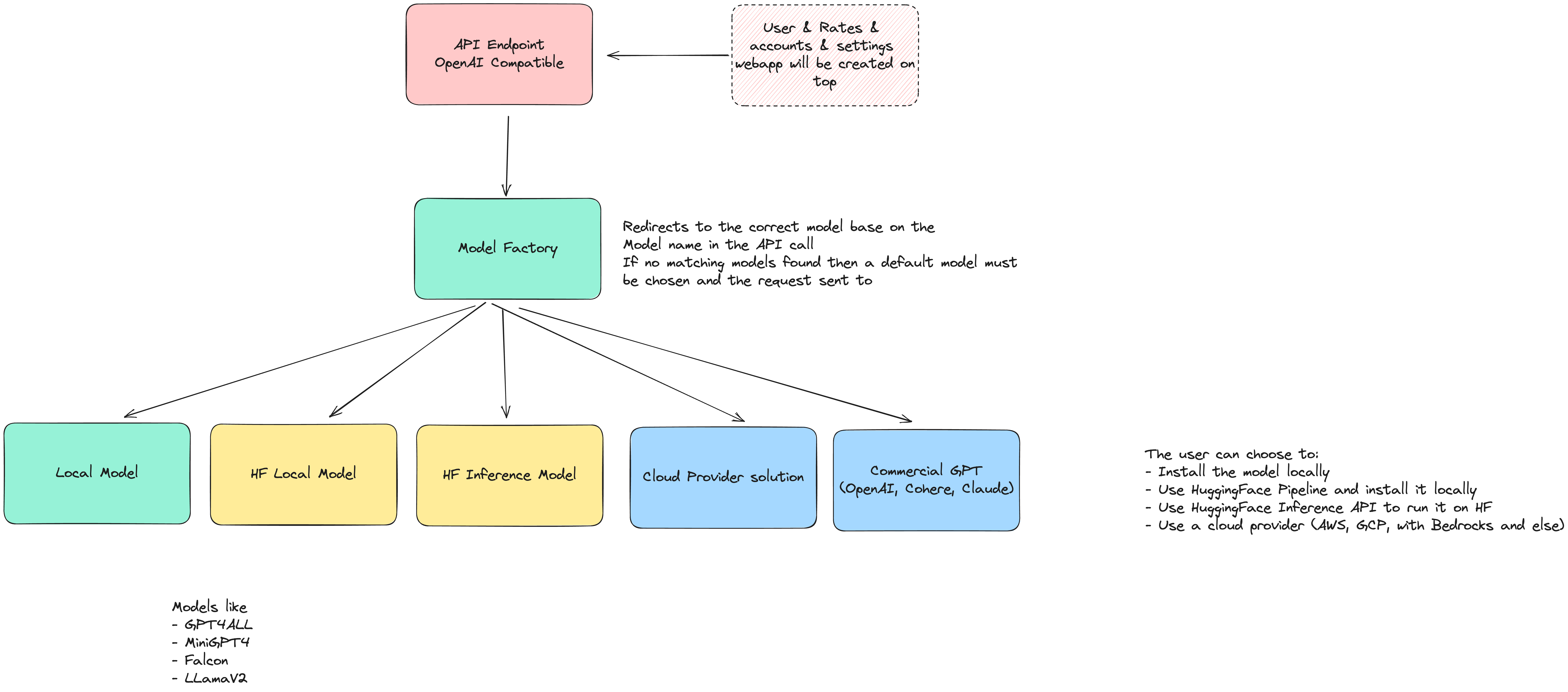

The vision:

- Allow LLMs to be run locally

- Allow LLMs to be run locally using HuggingFace

- Allow LLMs to be run on HuggingFace, with Genoss acting as a thin wrapper around the inference API

- Allow easy installation of LLMs locally

- Allow users to use cloud provider solutions such as GCP, AWS, Azure, etc.

- Allow user management with API keys

- Have all kinds of models available for use (text to text, text to image, text to audio, audio to text, etc.)

- Be compatible with OpenAI API for models that are compatible with OpenAI API

Genoss was imagined by Stan Girard when a feature of Quivr became too big and complicated to maintain.

The idea was to create a simple API that would make it possible to use any model through the same interface as OpenAI's ChatGPT API.

Then @mattzcarey, @MaximeThoonsen, @Wirg and @StanGirard started working on the project and it became a reality.

Your contributions to Genoss are immensely appreciated! Feel free to submit any issues or pull requests.

Thanks go to these wonderful people:

This project would not be possible without the support of our sponsors. Thank you for your support!

Genoss is licensed under the Apache 2.0 License. For more details, refer to the LICENSE file.