Rendering engine overview

This page will describe how the rendering engine of FSO renders one frame of gameplay. There are other sections of the game that are rendered differently (e.g. the main hall) which will not be described in detail here.

FSO supports both OpenGL debug groups and the JSON trace format used by Chrome. In a debug build, FSO automatically uses the GL_KHR_debug extension to pass debug groups to the OpenGL implementation. Tools such as apitrace or RenderDoc use these groups to organize OpenGL calls, which makes it much easier to see which OpenGL function call belongs to which part of the engine. The JSON tracing output can be enabled with a command line switch. The resulting file can be loaded into the chrome://tracing/ page of a Chromium-based browser or into another tool that supports this format (e.g. Trace Compass). It gives detailed statistics about how long each part of the engine takes to execute on the CPU and the GPU.
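The following minimal C++ sketch shows what a JSON trace file contains. It implements a scoped CPU timer that emits Chrome "complete" events (`"ph":"X"`, with timestamps and durations in microseconds) inside a `traceEvents` array. `TraceLog` and `TraceScope` are hypothetical names. This is not FSO's tracing code, and it does not measure GPU time.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// One Chrome "complete" event: a named span with a start and a duration.
struct TraceEvent {
    std::string name;
    std::int64_t ts_us;   // start timestamp in microseconds
    std::int64_t dur_us;  // duration in microseconds
};

// Hypothetical collector that serializes events in the JSON object format.
class TraceLog {
public:
    void add(TraceEvent ev) { events_.push_back(std::move(ev)); }
    std::size_t size() const { return events_.size(); }

    std::string to_json() const {
        std::ostringstream out;
        out << "{\"traceEvents\":[";
        for (std::size_t i = 0; i < events_.size(); ++i) {
            const TraceEvent& e = events_[i];
            if (i != 0) out << ',';
            out << "{\"name\":\"" << e.name << "\",\"ph\":\"X\",\"ts\":" << e.ts_us
                << ",\"dur\":" << e.dur_us << ",\"pid\":1,\"tid\":1}";
        }
        out << "]}";
        return out.str();
    }

private:
    std::vector<TraceEvent> events_;
};

// RAII scope: records one complete event when it goes out of scope.
class TraceScope {
    using Clock = std::chrono::steady_clock;

public:
    TraceScope(TraceLog& log, std::string name)
        : log_(log), name_(std::move(name)), start_(Clock::now()) {}

    ~TraceScope() {
        log_.add({name_, to_us(start_), to_us(Clock::now()) - to_us(start_)});
    }

private:
    static std::int64_t to_us(Clock::time_point t) {
        return std::chrono::duration_cast<std::chrono::microseconds>(t.time_since_epoch()).count();
    }

    TraceLog& log_;
    std::string name_;
    Clock::time_point start_;
};
```

Nesting `TraceScope` objects produces nested spans in the trace viewer, because each inner span starts later and ends earlier than its parent.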

The FSO rendering engine uses deferred shading for rendering opaque objects and standard forward rendering for transparent objects. Shadow mapping is done using cascaded variance shadow maps, which produce soft shadows. Only the first directional light in the scene casts shadows.
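Variance shadow maps store the first two depth moments per texel instead of a single depth value. The sketch below uses the textbook Chebyshev upper bound to turn those moments into a soft visibility value. It is a generic illustration of the technique, not FSO's shader code, which may add extras such as light-bleeding reduction. The `min_variance` clamp is an assumed value.

```cpp
#include <algorithm>
#include <cassert>

// Moments stored in one variance shadow map texel.
struct ShadowMoments {
    float mean;     // E[d]
    float mean_sq;  // E[d^2]
};

// Visibility of a receiver at light-space depth t: 1 = fully lit,
// values near 0 = shadowed. Uses the one-sided Chebyshev inequality.
float vsm_visibility(ShadowMoments m, float t, float min_variance = 1e-5f) {
    if (t <= m.mean) {
        return 1.0f;  // receiver is not behind the average occluder depth
    }
    // Clamp the variance to avoid numerical problems on flat surfaces.
    float variance = std::max(m.mean_sq - m.mean * m.mean, min_variance);
    float d = t - m.mean;
    return variance / (variance + d * d);
}
```

A larger depth variance in the filtered texel (typically at shadow edges) yields intermediate visibility values, which is where the soft shadow edges come from.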

The internal rendering API of FSO is implemented using function pointers, so in theory it could be backed by more than one graphics API. Currently OpenGL is the only backend, and the OpenGL 3.2 Core profile is the minimum version required by FSO.
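The function-pointer design can be illustrated with a small table of backend entry points that engine code calls without knowing which graphics API sits behind it. `RenderBackend`, `opengl_stub` and `render_frame` are hypothetical names for this sketch. FSO's real function pointer set is much larger and named differently.

```cpp
#include <cassert>
#include <string>

// Hypothetical backend table: engine code only calls through these pointers.
struct RenderBackend {
    void (*clear)(float r, float g, float b);
    void (*flip)();
    const char* name;
};

// Stub "OpenGL" implementation that just counts calls.
namespace opengl_stub {
int clear_calls = 0;
int flip_calls = 0;
void clear(float, float, float) { ++clear_calls; }
void flip() { ++flip_calls; }
}  // namespace opengl_stub

RenderBackend make_opengl_backend() {
    return RenderBackend{&opengl_stub::clear, &opengl_stub::flip, "OpenGL"};
}

// Backend-agnostic engine code.
void render_frame(const RenderBackend& gr) {
    gr.clear(0.0f, 0.0f, 0.0f);
    gr.flip();
}
```

A second backend would only need to provide another function that fills the same table with its own implementations.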

FSO uses 5 color attachments for storing the material information of the individual objects:

- The color buffer. Stores the color information of the surface.

- The position buffer. The fragment position in view space.

- The normal buffer. The fragment normal in view space. The alpha channel stores the gloss factor of the surface.

- The specular buffer. The alpha channel stores the Fresnel factor of the surface.

- The emissive buffer. Stores color information that is not affected by lighting, such as the background of the scene and glow map information.
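The five attachments listed above can be summarized as one RGBA value per slot, which is roughly what a lighting pass reads back for each pixel. The slot order and the `GBufferSlot` and `GBufferTexel` names are assumptions made for this sketch, not FSO's actual attachment bindings.

```cpp
#include <cassert>
#include <cstddef>

// Assumed slot order of the deferred color attachments.
enum GBufferSlot : std::size_t {
    GBUF_COLOR = 0,  // surface color
    GBUF_POSITION,   // view-space fragment position
    GBUF_NORMAL,     // view-space normal (rgb), gloss (alpha)
    GBUF_SPECULAR,   // specular data, Fresnel factor (alpha)
    GBUF_EMISSIVE,   // unlit color: background and glow maps
    GBUF_COUNT
};

struct Vec4 { float r, g, b, a; };

// The values a lighting pass could fetch for one pixel.
struct GBufferTexel {
    Vec4 slot[GBUF_COUNT];

    float gloss() const { return slot[GBUF_NORMAL].a; }
    float fresnel() const { return slot[GBUF_SPECULAR].a; }
};
```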

To optimize the rendering of a large number of objects, the engine uses the model_draw_list class, which stores information about the draw calls needed to render models. First, the draw calls are added to the list together with their material information. The list then sorts the draws to reduce the number of state changes. Next, a uniform buffer is used to send the uniform data to the GPU. Finally, the class dispatches the draw calls to the API by binding the correct uniform buffer range and issuing each draw call.
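The add, sort and dispatch flow can be sketched in a few lines. In this hypothetical `DrawList`, each draw is keyed by a shader and texture id and remembers the offset of its uniform data in one shared array. Sorting by that key groups draws that share state. The names and the key layout are assumptions, and FSO's model_draw_list tracks far more material state.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <tuple>
#include <vector>

struct UniformData {
    float model_matrix[16];
    float color[4];
};

struct DrawCall {
    std::uint32_t shader_id;
    std::uint32_t texture_id;
    std::size_t uniform_offset;  // index of this draw's data in the uniform array
};

class DrawList {
public:
    void add(std::uint32_t shader, std::uint32_t texture, const UniformData& u) {
        draws_.push_back({shader, texture, uniforms_.size()});
        uniforms_.push_back(u);
    }

    // Group draws by shader, then texture, so shared state is bound only once.
    void sort() {
        std::stable_sort(draws_.begin(), draws_.end(), [](const DrawCall& a, const DrawCall& b) {
            return std::tie(a.shader_id, a.texture_id) < std::tie(b.shader_id, b.texture_id);
        });
    }

    // Counts the shader/texture binds a dispatch in the current order would need.
    // A real renderer would upload uniforms_ once, then bind the range at
    // uniform_offset before issuing each draw.
    std::size_t count_state_changes() const {
        std::size_t changes = 0;
        const DrawCall* prev = nullptr;
        for (const DrawCall& d : draws_) {
            if (!prev || prev->shader_id != d.shader_id) ++changes;
            if (!prev || prev->texture_id != d.texture_id) ++changes;
            prev = &d;
        }
        return changes;
    }

    const std::vector<DrawCall>& draws() const { return draws_; }
    const std::vector<UniformData>& uniforms() const { return uniforms_; }

private:
    std::vector<DrawCall> draws_;
    std::vector<UniformData> uniforms_;
};
```

Because the uniform data stays in insertion order and each draw only stores an offset, sorting never has to move the (larger) uniform blocks.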

The code for rendering a single gameplay frame is located in game_render_frame(). The FRED renderer works somewhat differently and is not discussed here. The names of the following sections are loosely based on the names of the tracing scopes, in case you want to cross-reference this page with the tracing output.

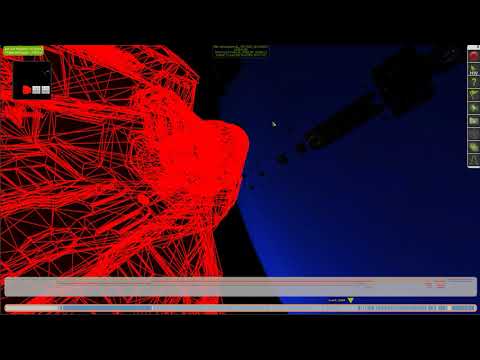

A visual demonstration of how a single frame is rendered is available here:

Another demonstration shows how the lighting works. NOTE: This version is based on an older version of the rendering engine, in which the directional part of the lighting was done in the main shader.

This binds and sets up the frame buffer object (FBO) into which the scene will be rendered. It also clears the deferred buffers to their default values (see gr_opengl_scene_texture_begin() and deferred-clear-f.sdr).

This draws the background of the scene which includes sun bitmaps, stars and the skybox model if present. This will also render the environment cube map if it has changed since the last frame.

This renders the shadow map into a framebuffer with 4 layers, where each layer stores the depth information of one cascade. The draw calls are set up using a model draw list for better CPU and GPU performance.
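How the view distance is divided among the 4 cascades is not described here. One widely used approach is the "practical split scheme", which blends logarithmic and uniform splits with a weight `lambda`. The sketch below shows that scheme only as an example. It is an assumption, not necessarily how FSO chooses its splits.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

constexpr std::size_t kNumCascades = 4;  // one framebuffer layer per cascade

// Far distance of each cascade. lambda = 1 gives purely logarithmic splits,
// lambda = 0 gives uniform splits.
std::array<float, kNumCascades> cascade_splits(float near_z, float far_z, float lambda) {
    std::array<float, kNumCascades> splits{};
    for (std::size_t i = 1; i <= kNumCascades; ++i) {
        float p = static_cast<float>(i) / static_cast<float>(kNumCascades);
        float log_split = near_z * std::pow(far_z / near_z, p);
        float uniform_split = near_z + (far_z - near_z) * p;
        splits[i - 1] = lambda * log_split + (1.0f - lambda) * uniform_split;
    }
    return splits;
}
```

Logarithmic splits give nearby cascades more shadow map resolution per unit of distance, which is usually where shadow detail is most visible.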

After the shadow maps are rendered, the scene FBO is used again to render the main view of the scene. Deferred shading is started by configuring the draw buffers and copying the existing color buffer into the emissive buffer. This is required because everything rendered before this point, such as the background, must not be affected by lighting (see gr_opengl_deferred_lighting_begin()).

Then another model draw list is used to build the draw list of the main view. First, only the opaque meshes are drawn, which stores their material information in the deferred shading buffers.

When there are decals in the scene they will be rendered here. The decal renderer basically uses the position information of the buffers to map a texture to the surface of an object and change the surface properties. See decals.cpp, decal_draw_list and decals-f.sdr for more information.
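The idea of mapping a texture onto a surface using the stored positions can be sketched on the CPU. The sketch uses the common deferred-decal approach. It transforms the G-buffer position into the decal's local unit box, skips positions outside the box (a shader would discard the fragment), and uses the local x/y as texture coordinates. `view_to_decal` is an assumed matrix computed from the decal's transform. This is a generic illustration, not the contents of decals-f.sdr.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct DecalUV { float u, v; };

// Row-major 4x4 affine matrix.
using Mat4 = std::array<float, 16>;

Vec3 transform_point(const Mat4& m, Vec3 p) {
    return {m[0] * p.x + m[1] * p.y + m[2] * p.z + m[3],
            m[4] * p.x + m[5] * p.y + m[6] * p.z + m[7],
            m[8] * p.x + m[9] * p.y + m[10] * p.z + m[11]};
}

// Returns false when the view-space position lies outside the decal box.
bool project_decal(const Mat4& view_to_decal, Vec3 view_pos, DecalUV& out_uv) {
    Vec3 local = transform_point(view_to_decal, view_pos);
    if (std::fabs(local.x) > 0.5f || std::fabs(local.y) > 0.5f || std::fabs(local.z) > 0.5f) {
        return false;
    }
    // Map the box range [-0.5, 0.5] to texture coordinates [0, 1].
    out_uv = {local.x + 0.5f, local.y + 0.5f};
    return true;
}
```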

Then all the lights in the scene are rendered using the standard bounding mesh technique, and the lighting contributions of all lights are combined with additive blending. The first directional light in the scene also uses the rendered shadow map to handle shadows.
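The additive accumulation step can be emulated on the CPU. The sketch assumes the blend state behaves like `glBlendFunc(GL_ONE, GL_ONE)` and models a light's bounding mesh as a per-pixel coverage mask, since only covered pixels are shaded for that light. `LightContribution` and `accumulate_lights` are hypothetical names, and in the engine the per-light color comes from a shader reading the G-buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Rgb { float r, g, b; };

// Hypothetical per-light result: which pixels its bounding mesh covers and
// the color it adds there.
struct LightContribution {
    std::vector<bool> covered;
    Rgb color;
};

// Emulates additive blending (dst = src + dst) into a floating point target,
// so the accumulated value may exceed 1.0.
void accumulate_lights(std::vector<Rgb>& target, const std::vector<LightContribution>& lights) {
    for (const LightContribution& light : lights) {
        for (std::size_t i = 0; i < target.size(); ++i) {
            if (!light.covered[i]) continue;  // outside the light volume
            target[i].r += light.color.r;
            target[i].g += light.color.g;
            target[i].b += light.color.b;
        }
    }
}
```

Restricting each light to its bounding mesh keeps the cost proportional to the screen area a light actually affects, instead of shading every pixel for every light.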

After that, the remaining meshes that use transparent textures are rendered with standard forward rendering and only a limited number of lights.

This handles drawing the trails in the scene. A trail is essentially a long screen-aligned quad that is used to render the trail texture.
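A screen-aligned quad for one trail segment can be built by offsetting both projected end points along the segment's screen-space perpendicular. `trail_quad` is a hypothetical helper, not FSO's trail code. A full trail would chain such segments.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Four corners of a quad between projected points a and b, widened by
// half_width on each side of the segment.
std::array<Vec2, 4> trail_quad(Vec2 a, Vec2 b, float half_width) {
    float dx = b.x - a.x;
    float dy = b.y - a.y;
    float len = std::sqrt(dx * dx + dy * dy);
    // Unit perpendicular of the segment (zero if both points coincide).
    Vec2 n = len > 0.0f ? Vec2{-dy / len, dx / len} : Vec2{0.0f, 0.0f};
    return {{
        {a.x + n.x * half_width, a.y + n.y * half_width},
        {a.x - n.x * half_width, a.y - n.y * half_width},
        {b.x + n.x * half_width, b.y + n.y * half_width},
        {b.x - n.x * half_width, b.y - n.y * half_width},
    }};
}
```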

Particles are rendered using a batching mechanism that stores the vertices of the individual particles in one large buffer and uploads them to the GPU all at once. This allows multiple particles to be grouped into one draw call, which reduces CPU overhead. Texture arrays are also used so that particles that share an animation but show different frames of it can be grouped together.
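The batching idea can be sketched as follows. Particles are sorted by the animation (texture array) they use and written into one contiguous vertex array that would be uploaded in a single update. One draw range is recorded per texture array, and the animation frame is stored per vertex as the array layer. The types, the one-vertex-per-particle layout and `build_particle_batches` are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Particle {
    float x, y, z;
    float radius;
    std::uint32_t animation_id;  // which texture array
    std::uint32_t frame;         // layer inside that texture array
};

// Assumed layout: one vertex per particle, expanded to a quad later.
struct ParticleVertex {
    float x, y, z;
    float radius;
    float layer;  // texture array layer sampled by the fragment shader
};

struct DrawRange {
    std::uint32_t animation_id;
    std::size_t first;  // first vertex in the shared buffer
    std::size_t count;  // number of vertices in this draw call
};

void build_particle_batches(const std::vector<Particle>& particles,
                            std::vector<ParticleVertex>& vertices,
                            std::vector<DrawRange>& ranges) {
    std::vector<Particle> sorted = particles;
    std::stable_sort(sorted.begin(), sorted.end(), [](const Particle& a, const Particle& b) {
        return a.animation_id < b.animation_id;
    });
    vertices.clear();
    ranges.clear();
    for (const Particle& p : sorted) {
        // Start a new draw call whenever the texture array changes.
        if (ranges.empty() || ranges.back().animation_id != p.animation_id) {
            ranges.push_back({p.animation_id, vertices.size(), 0});
        }
        vertices.push_back({p.x, p.y, p.z, p.radius, static_cast<float>(p.frame)});
        ++ranges.back().count;
    }
}
```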

This renders effects such as weapon bitmaps, beams, 2D shockwaves, thrusters and some other effects. Some effects distort the image behind them, so they need access to the current view of the scene. Since the scene is currently being rendered into that texture, it has to be copied before the distortion effects are applied. This is done by gr_opengl_copy_effect_texture().

This resolves the rendered scene textures into the final image. First, bloom is applied to the bright parts of the scene. A fragment is considered "bright" if its color value is above 1.0, which is possible because floating point textures are used for all color attachments.
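A bright-pass filter of this kind can be sketched as follows. Pixels whose largest color channel exceeds 1.0 are kept, and all others are zeroed. In a typical bloom implementation, the result is then blurred and added back onto the scene. Testing the maximum channel, rather than luminance, is an assumption about how FSO applies the threshold.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Hdr { float r, g, b; };

// Keeps only HDR pixels brighter than 1.0 in at least one channel.
std::vector<Hdr> bright_pass(const std::vector<Hdr>& scene) {
    std::vector<Hdr> out;
    out.reserve(scene.size());
    for (const Hdr& c : scene) {
        bool bright = std::max({c.r, c.g, c.b}) > 1.0f;
        out.push_back(bright ? c : Hdr{0.0f, 0.0f, 0.0f});
    }
    return out;
}
```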

After that, the HDR scene is converted to the standard LDR color range using a tone mapping algorithm (see tonemapping-f.sdr for details on the algorithm used).
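To illustrate what HDR to LDR conversion means, the sketch applies the simple Reinhard operator `c / (1 + c)` per channel after an exposure scale. This operator is only an example. FSO's actual algorithm is the one in tonemapping-f.sdr and may be different, and the `exposure` parameter is an assumption.

```cpp
#include <cassert>
#include <cmath>

struct Color { float r, g, b; };

// Reinhard operator: maps [0, infinity) into [0, 1).
float reinhard(float c) { return c / (1.0f + c); }

Color tonemap(Color hdr, float exposure = 1.0f) {
    return {reinhard(hdr.r * exposure), reinhard(hdr.g * exposure), reinhard(hdr.b * exposure)};
}
```

Any input value, however bright, ends up below 1.0, so the result fits a standard 8-bit display range.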

Then FXAA is applied to the image and finally the post processing effects are applied to the final image.

This concludes the overview of the FSO rendering engine. The final step is the page flip, which presents the current back buffer to the user.