
VOLD

VOLD stands for VOLume management Daemon.

One VOLD process runs on each node.

A VOLD has several roles:

- It manages the lifecycle of the VVRs (Versioned Volume Repositories): creation, start, update, deletion, and so on.

- It enables the client to access the volumes as 'block devices'.

- It handles the connections with the other nodes and synchronizes with them.

- It handles the different states and distributed transactions on all resources.

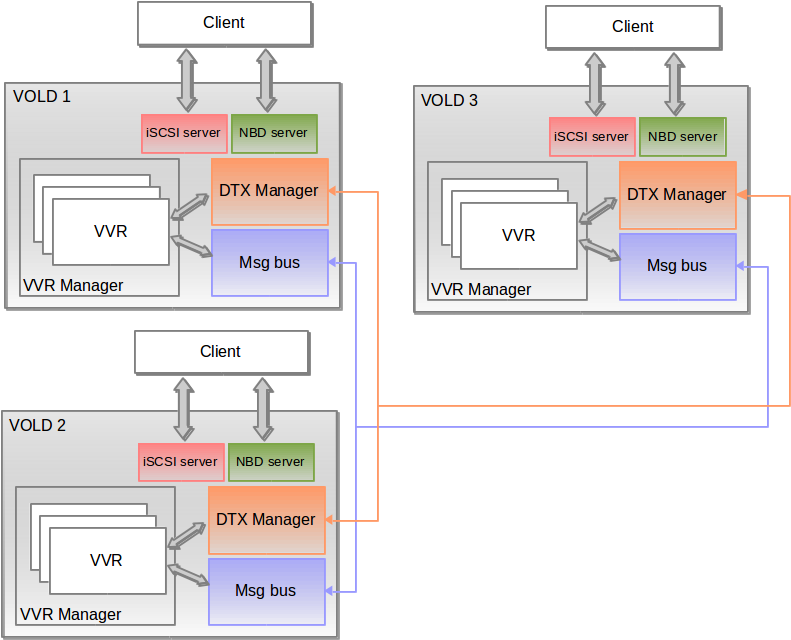

The VOLD includes the following modules:

- a VVR Manager to handle one or more VVRs' lifecycle,

- a DTX (Distributed Transaction eXecution) manager to handle distributed transactions between nodes,

- an internal bus for exchanging messages between nodes (MSG bus),

- an iSCSI server and an NBD server for exporting volumes as block devices, and

- a JMX server for monitoring the VOLD.

Interactions between 3 VOLD


Each node contains a VVR Manager that can create and delete VVRs. A VVR is replicated to all VOLDs in a cluster. Lifecycle operations such as creating or deleting a VVR are synchronized between nodes using transactions, while other operations such as starting a VVR are applied only locally. The data stored in each volume is propagated between nodes over the internal message bus.

Storage volumes are exposed via the iSCSI and/or NBD server to the client.

The main class is named Vold.

It starts, in order, the VVR manager, the iSCSI and NBD servers, the JMX server, the MSG client/server, and finally the DTX Manager.
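The startup sequence described above can be pictured with the following minimal sketch. It is not the actual `Vold` class: the `Service` interface, the `named` helper, and the `startAll` method are hypothetical names introduced only to illustrate that the services come up in a fixed order, with the DTX Manager last.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of an ordered startup; not the real Vold main class. */
class StartupOrderSketch {

    /** Assumed minimal service abstraction (illustrative only). */
    interface Service {
        String name();
        void start();
    }

    /** Builds a placeholder service that does nothing when started. */
    static Service named(String name) {
        return new Service() {
            public String name() { return name; }
            public void start() { /* a real service would open sockets, load state, ... */ }
        };
    }

    /** The services in the order given in the text above. */
    static List<Service> voldServices() {
        return List.of(
                named("VVR manager"),
                named("iSCSI server"),
                named("NBD server"),
                named("JMX server"),
                named("MSG client/server"),
                named("DTX manager"));
    }

    /** Starts each service in list order and returns the names actually started. */
    static List<String> startAll(List<Service> services) {
        List<String> started = new ArrayList<>();
        for (Service s : services) {
            s.start();
            started.add(s.name());
        }
        return started;
    }
}
```

Calling `StartupOrderSketch.startAll(StartupOrderSketch.voldServices())` returns the service names in startup order.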

VOLD, VVR manager and VVRs are resource managers as defined by The Open Group's XA distributed transaction specification.

- VOLD manages the list of peers in a cluster.

- VVR manager controls a set of VVRs.

- VVR manages volumes and their snapshots.

All operations are logged in a journal. When a node is stopped for maintenance, or disconnected for any other reason, it must replay the transactions missing from its journal once it comes back online.

Each resource manager is brought up to date with the missing transactions through a multi-state discovery and synchronization mechanism handled by the DTX Manager. See DTX for more information on discovery and synchronization.
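As a rough illustration of journal replay, the sketch below models a journal as an append-only list of increasing transaction IDs and lets a returning node copy the entries it lacks from a peer. This is a simplification under stated assumptions: the `Journal` class, the numeric IDs, and the `catchUp` method are hypothetical, and the real discovery and synchronization logic lives in the DTX Manager.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of journal catch-up; not the DTX implementation. */
class JournalReplaySketch {

    /** Assumed journal model: append-only, with strictly increasing transaction IDs. */
    static class Journal {
        private final List<Long> txIds = new ArrayList<>();

        void append(long txId) {
            txIds.add(txId);
        }

        /** Returns the last logged transaction ID, or 0 if the journal is empty. */
        long lastTxId() {
            return txIds.isEmpty() ? 0L : txIds.get(txIds.size() - 1);
        }

        /** Returns every logged transaction ID strictly greater than the given one. */
        List<Long> entriesAfter(long txId) {
            List<Long> out = new ArrayList<>();
            for (long id : txIds) {
                if (id > txId) {
                    out.add(id);
                }
            }
            return out;
        }
    }

    /**
     * Replays onto the local journal every transaction the peer has logged
     * after the local journal's last entry, and returns the replayed IDs.
     */
    static List<Long> catchUp(Journal local, Journal peer) {
        List<Long> missing = peer.entriesAfter(local.lastTxId());
        for (long id : missing) {
            local.append(id); // a real node would also apply the transaction here
        }
        return missing;
    }
}
```

A node that stopped after transaction 2 and rejoins a peer holding transactions 1 to 5 would replay transactions 3, 4, and 5 in this model.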

A resource manager registers with the DTX manager and, depending on the discovery results, enters one of the following synchronization states:

- UNDETERMINED

- LATE

- SYNCHRONIZING

- UP_TO_DATE

The state of the VVRs depends on the state of the VVR manager: VVRs can be registered with the DTX manager only once the VVR manager is considered UP_TO_DATE. If the VVR manager becomes LATE, all its VVRs are unregistered.
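The dependency between the VVR manager state and the registration of its VVRs can be sketched as follows. The class and method names (`DtxRegistry`, `VvrManagerSketch`, `onStateChange`) are hypothetical, and the sketch assumes that VVRs are registered as soon as the manager becomes UP_TO_DATE, which the text above permits but does not mandate.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical sketch of the state rule above; names are not the VOLD API. */
class SyncStateSketch {

    /** The four synchronization states listed above. */
    enum SyncState { UNDETERMINED, LATE, SYNCHRONIZING, UP_TO_DATE }

    /** Stand-in for the DTX manager: it only tracks registered resource names. */
    static class DtxRegistry {
        final Set<String> registered = new LinkedHashSet<>();
        void register(String name) { registered.add(name); }
        void unregister(String name) { registered.remove(name); }
    }

    /** Stand-in for the VVR manager and the VVRs it controls. */
    static class VvrManagerSketch {
        private final DtxRegistry dtx;
        private final List<String> vvrs = new ArrayList<>();
        private SyncState state = SyncState.UNDETERMINED;

        VvrManagerSketch(DtxRegistry dtx, List<String> vvrNames) {
            this.dtx = dtx;
            this.vvrs.addAll(vvrNames);
            dtx.register("vvr-manager"); // the manager itself registers first
        }

        /** Applies a new manager state and adjusts the VVR registrations. */
        void onStateChange(SyncState newState) {
            state = newState;
            if (newState == SyncState.UP_TO_DATE) {
                vvrs.forEach(dtx::register);   // VVRs may register only now
            } else if (newState == SyncState.LATE) {
                vvrs.forEach(dtx::unregister); // a late manager drops all its VVRs
            }
        }

        SyncState state() { return state; }
    }
}
```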

| Event received | Actions |
|---|---|
| INIT | |
| UP TO DATE | |
| LATE | |

Table summarizing the actions performed after receiving events for the VVR Manager resource

| Event received | Actions |
|---|---|
| UP TO DATE | |
| LATE | |

Table summarizing the actions performed after receiving events for a VVR resource

The VOLD module contains the MXBeans used to monitor and administer the VOLD, the VVR manager, and each VVR with its hierarchy of snapshots and devices.

Most operations are available in two variants: a blocking one, and one that returns immediately with a task ID whose status can be monitored by polling an MXBean.
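The two calling styles can be illustrated with the sketch below. It does not use the actual VOLD MXBeans: `TaskApiSketch`, `createVolume`, `createVolumeNoWait`, `getStatus`, and the `TaskStatus` values are hypothetical names, and an in-memory map stands in for the MXBean that exposes task status.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical sketch of a blocking call and a task-ID variant; not the VOLD MXBean API. */
class TaskApiSketch {

    enum TaskStatus { PENDING, DONE, FAILED }

    private final ExecutorService executor = Executors.newSingleThreadExecutor();
    private final Map<String, TaskStatus> tasks = new ConcurrentHashMap<>();

    /** Blocking variant: returns only once the operation has finished. */
    void createVolume(String name) {
        doCreate(name);
    }

    /** Non-blocking variant: returns a task ID immediately. */
    String createVolumeNoWait(String name) {
        String taskId = UUID.randomUUID().toString();
        tasks.put(taskId, TaskStatus.PENDING);
        executor.submit(() -> {
            try {
                doCreate(name);
                tasks.put(taskId, TaskStatus.DONE);
            } catch (RuntimeException e) {
                tasks.put(taskId, TaskStatus.FAILED);
            }
        });
        return taskId;
    }

    /** Status lookup, standing in for the MXBean that a client would poll. */
    TaskStatus getStatus(String taskId) {
        return tasks.get(taskId);
    }

    /** Polls the status until the task leaves PENDING or the timeout expires. */
    TaskStatus await(String taskId, long timeoutMillis) {
        long deadline = System.currentTimeMillis() + timeoutMillis;
        TaskStatus status = getStatus(taskId);
        while (status == TaskStatus.PENDING && System.currentTimeMillis() < deadline) {
            try {
                Thread.sleep(10);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            status = getStatus(taskId);
        }
        return status;
    }

    void shutdown() {
        executor.shutdown();
    }

    /** Placeholder for the real work; rejects empty names so a failure can be observed. */
    private void doCreate(String name) {
        if (name == null || name.isEmpty()) {
            throw new IllegalArgumentException("name required");
        }
    }
}
```

The polling loop is the simplest client-side option; a client that needs many concurrent operations would typically prefer the task-ID variant so that it is not tied up in one blocking call.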

For more information, see VOLD administration.

The Internet Small Computer System Interface (iSCSI) gives access to networked data storage systems by sending SCSI commands over IP networks.

The iSCSI server is based on jSCSI (see https://github.com/disy/jSCSI), with some modifications.

An activated device is exported as an iSCSI target by the server and can be accessed by the client, called the initiator.

Using an iSCSI target with Linux:

To obtain the target list:

iscsiadm --mode discovery --type sendtargets --portal <host>

To list the active sessions:

iscsiadm --mode session

To log in to a target:

iscsiadm --mode node --targetname <iqn> --portal <host> --login

To log out:

iscsiadm --mode node --targetname <iqn> --portal <host> --logout

To identify the device /dev/sdX to which the iSCSI target was mapped:

dmesg | grep sd

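To create a primary partition on the target, run fdisk and answer its prompts with n (new), p (primary), Enter to accept the defaults, and w (write):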

fdisk /dev/sdX

n

p

enter

w

To format the partition as ext4:

mkfs.ext4 /dev/sdX1

To mount it:

mount /dev/sdX1 /mnt

The Network Block Device (NBD) protocol lets a client use a block device that is stored on a remote machine and accessed over the network.

An activated device is exported as an NBD export by the NBD server and can be accessed by the client.

The Linux NBD client comes in two parts: a kernel module and a user-space daemon. Install the nbd-client package and use lsmod to check that the NBD module is loaded.

To obtain the export list (nbd-client version 3.4 or higher):

nbd-client <host> <port> -l

To connect to an export:

nbd-client <host> <port> /dev/nbdX -N <device> [-b <blocksize>]

To disconnect from an export:

nbd-client -d /dev/nbdX

To create a primary partition, run fdisk and answer its prompts as for the iSCSI device:

fdisk /dev/nbdX

n

p

enter

w

To format the partition:

mkfs.ext4 /dev/nbdXp1

To mount the partition:

mount /dev/nbdXp1 /mnt