Sammy is a smart media player that helps visually impaired users understand videos and lets everyone navigate them faster.

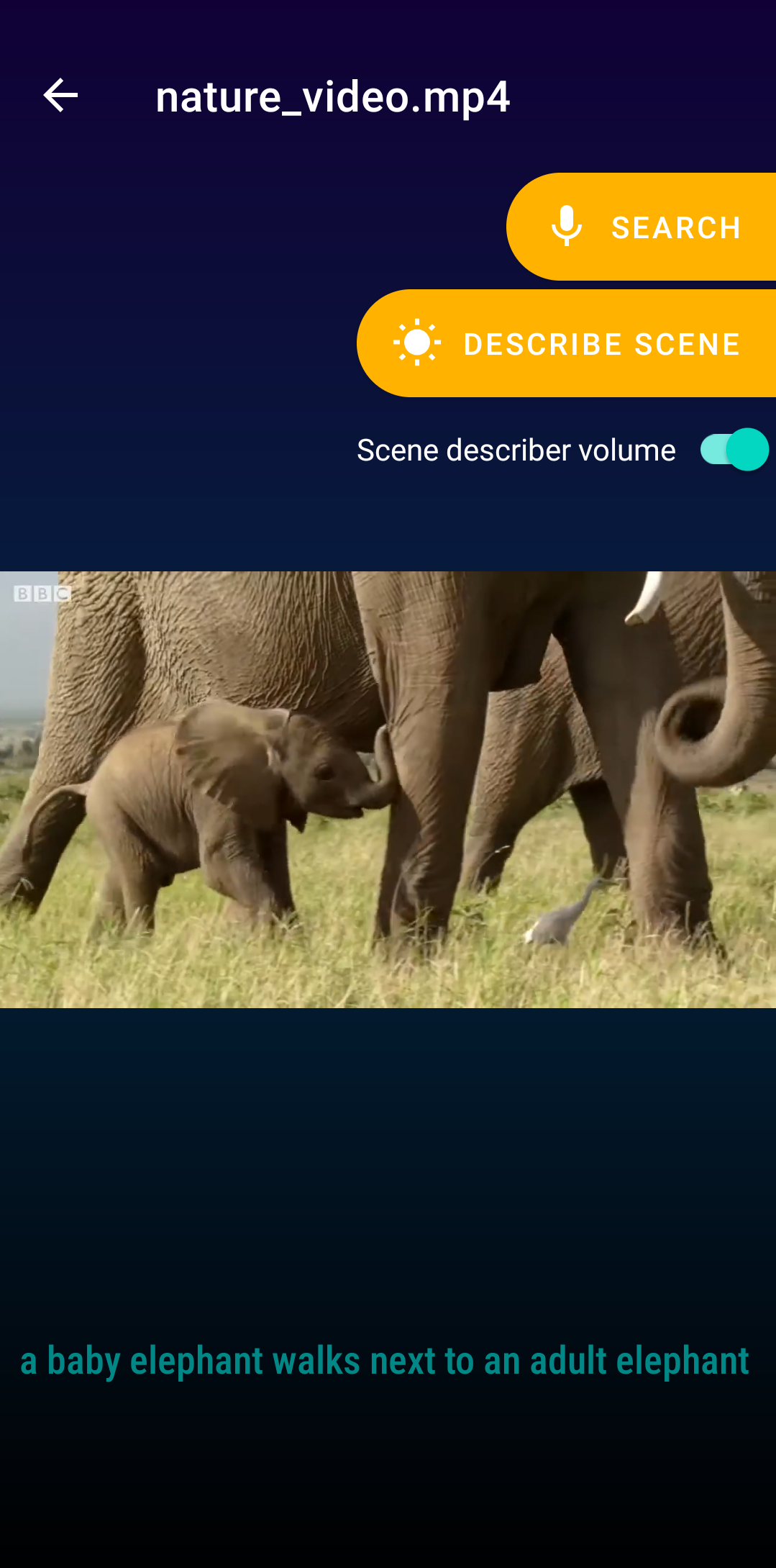

- ✨ Sammy is a multimedia player that can help you find, in a video, anything from a simple phrase that was spoken to a scene that you can describe in words.

- ✨ We combined OCR (Optical Character Recognition), ASR (Automatic Speech Recognition) and Scene Understanding (SDD) to describe the scene taking place in the video and to make this information searchable.
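One way to picture how the three sources become searchable is a small inverted index over timestamped text segments. The sketch below is illustrative only and is not Sammy's actual implementation: the segment shape (`source`, `startSec`, `text`), the sample data, and the functions `tokenize`, `buildIndex`, and `search` are all assumptions made for this example.

```javascript
// Hypothetical shape: each analyzer (OCR, ASR, scene description) emits
// timestamped text segments for the same video. The data is made up.
const segments = [
  { source: "asr", startSec: 12, text: "Welcome to the cooking show" },
  { source: "ocr", startSec: 15, text: "Chef Maria" },
  { source: "scene", startSec: 15, text: "a woman chopping vegetables in a kitchen" },
  { source: "asr", startSec: 48, text: "Now add the chopped vegetables to the pan" },
];

// Lowercase the text and split on anything that is not a letter or digit.
function tokenize(text) {
  return text.toLowerCase().split(/[^a-z0-9]+/).filter(Boolean);
}

// Build an inverted index: word -> set of positions of segments containing it.
function buildIndex(segs) {
  const index = new Map();
  segs.forEach((seg, i) => {
    for (const word of tokenize(seg.text)) {
      if (!index.has(word)) index.set(word, new Set());
      index.get(word).add(i);
    }
  });
  return index;
}

// Return the segments that contain every query word, ordered by timestamp.
function search(index, segs, query) {
  const words = tokenize(query);
  if (words.length === 0) return [];
  let hits = null;
  for (const word of words) {
    const ids = index.get(word) || new Set();
    hits = hits === null
      ? new Set(ids)
      : new Set([...hits].filter((i) => ids.has(i)));
  }
  return [...hits].map((i) => segs[i]).sort((a, b) => a.startSec - b.startSec);
}

const index = buildIndex(segments);
const results = search(index, segments, "chopped vegetables");
for (const hit of results) {
  console.log(`${hit.startSec}s [${hit.source}] ${hit.text}`);
}
```

Because every segment carries a timestamp, a player could jump straight to `startSec` for any hit, whether the match came from speech, on-screen text, or a scene description.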

Imagine a world where visually impaired people can understand and navigate video content, and where nobody has to spend ages searching for a single line in an hour-long video. Today, we don’t have a system that makes the content inside videos searchable and describable. Videos and movies for visually impaired audiences have to be professionally voiced through an expensive process, which keeps most of them inaccessible. The same gap is a problem when you are trying to pick out a small piece of information from a long video.

- Users can search for a particular phrase using their voice

- Sammy can describe the video with voice and text

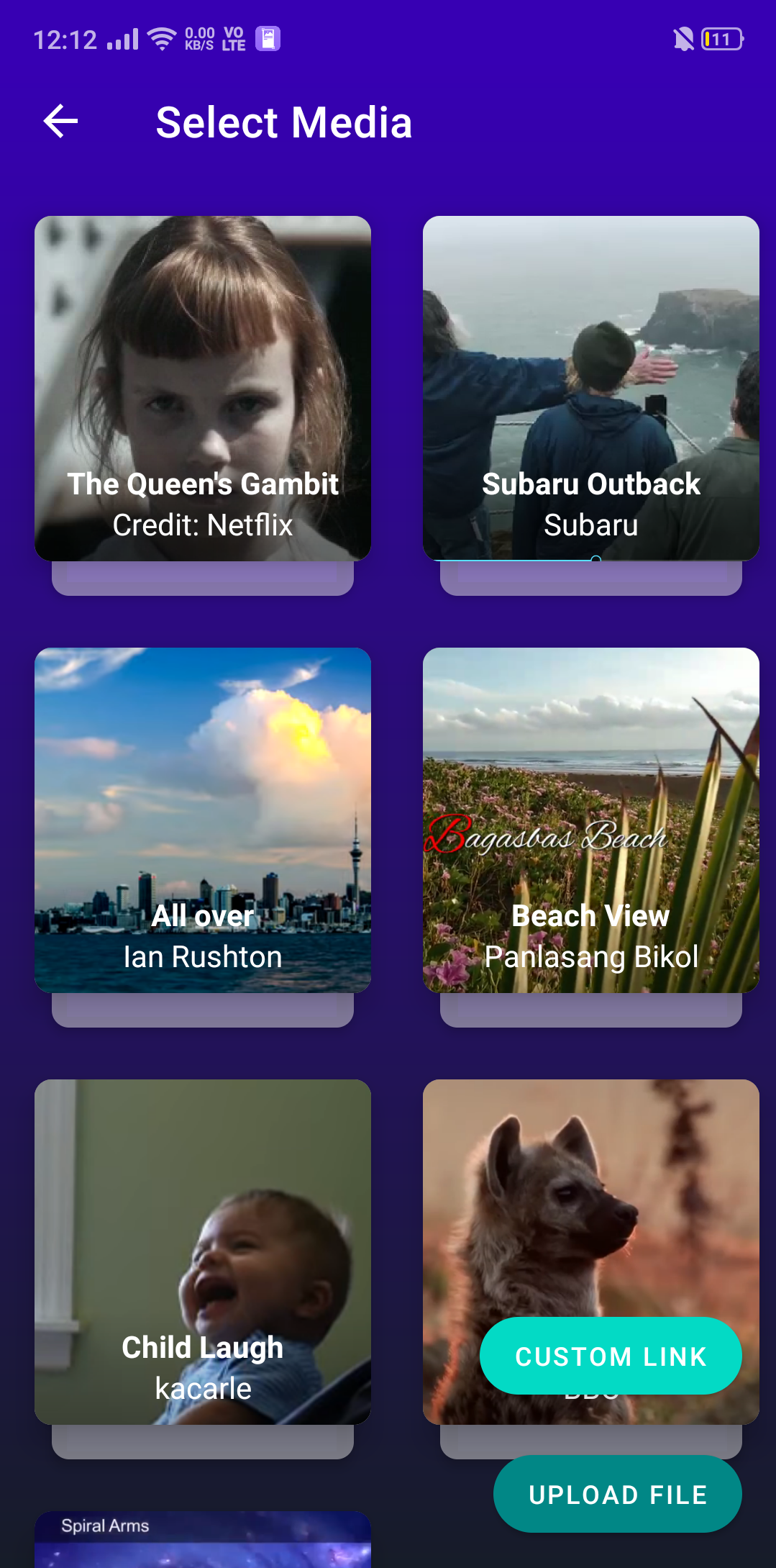

- Users can upload a video of their choice

Sammy relies on several open-source projects and cloud services:

-

- Java

- XML

- ExoPlayer

- Retrofit - for REST API

- Text-to-Speech by Google

- Speech-to-Text by Google

- MVP architecture

-

- NodeJS

- Web/Worker processes

- Packages that internally use Redis - for job queues

- Video API

- Packages that internally use ffmpeg - Detect scenes, extract frames

- Azure Computer Vision

- Tesseract - for OCR

- Sharp

- Express

- Audio API

- Packages that internally use ffmpeg - to extract audio

- Azure Cognitive Services Speech / DeepSpeech
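The web/worker split listed above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the project's code: `JobQueue` is a hypothetical in-memory stand-in for a Redis-backed queue package, the handlers are stubs in place of ffmpeg, Tesseract, and the vision and speech services, and everything runs synchronously to keep the example short (real handlers would be asynchronous).

```javascript
// Hypothetical in-memory stand-in for a Redis-backed job queue.
class JobQueue {
  constructor() {
    this.jobs = [];
    this.handlers = new Map();
  }

  // Worker side: register the function that handles one job type.
  process(type, handler) {
    this.handlers.set(type, handler);
  }

  // Web side: enqueue a job and return immediately.
  add(type, payload) {
    this.jobs.push({ type, payload });
  }

  // Run every pending job in order and collect the handler results.
  drain() {
    const results = [];
    while (this.jobs.length > 0) {
      const job = this.jobs.shift();
      const handler = this.handlers.get(job.type);
      if (!handler) throw new Error(`No handler for job type: ${job.type}`);
      results.push(handler(job.payload));
    }
    return results;
  }
}

const queue = new JobQueue();

// Video job: stubbed output standing in for scene detection, OCR, and vision.
queue.process("video", ({ videoId }) => [
  { videoId, source: "ocr", startSec: 0, text: "stub on-screen text" },
  { videoId, source: "scene", startSec: 0, text: "stub scene description" },
]);

// Audio job: stubbed output standing in for audio extraction and ASR.
queue.process("audio", ({ videoId }) => [
  { videoId, source: "asr", startSec: 0, text: "stub transcript" },
]);

// An upload enqueues one job per API instead of doing the work inline.
function onUpload(videoId) {
  queue.add("video", { videoId });
  queue.add("audio", { videoId });
}

onUpload("demo-video");
const allSegments = queue.drain().flat();
console.log(allSegments.map((s) => s.source).join(", "));
```

Keeping the heavy analysis in worker jobs means the upload request can return quickly while frames and audio are processed in the background.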