Step by step usage guide

- Introduction

- Limitations, good to know

- Prerequisites

- Accessing the scale unit deployment tool

- Editing the configuration file for your hub and scale unit environments

- Automatic deployment

- Manual deployment

- Workload management

- Additional functionality

- Re-enabling the spoke environment

- Resetting the hub and spoke environment

The multi-node supply chain topology for Dynamics 365 with Cloud and Edge Scale Units enables companies to execute mission critical manufacturing and distribution processes without interruptions.

This tool helps partners with access to self-hosted one-box development environments for Dynamics 365 Supply Chain Management to configure two environments for the cloud and edge preview. The tool supports both LCS-hosted and VHD-image-based environments.

In a cloud and edge development configuration, one environment acts as the hub and the second as a scale unit.

The tool has several functions:

- Configure up to two "Tier 1" one-box environments to take the roles of a hub and a scale unit. The tool also supports using a single "Tier 1" one-box environment to host both the hub and the scale unit.

- Declare one of the two environments to become the hub.

- Declare the other environment to become the scale unit, which is used as a subordinate to the hub.

- Assign the scale unit to the hub.

- Create workloads by configuring both hub and scale unit environments.

The tool is provided as a command line interface to initiate configuration activities. It expects a parameter file in XML format that you need to edit in the editor of your choice and provide parameter information for the following aspects of your development environments:

- Configuration information of an AAD application needed for the configuration tool to control the hub and scale unit environments.

- Information for the Hub environment for managing the configuration changes on the hub.

- Information for the scale unit environment.

- Workload definitions.

The following limitations apply to the preview of scale units. Knowing them helps you plan for the best experience.

- Once enabled, the scale unit feature and deployed workloads cannot be removed. The removal of workloads and the disassociation or repurposing of scale units will be added in an upcoming release of the preview. To prepare for reconfiguration, we recommend taking a database snapshot before enabling scale units and deploying workloads, so that it is easy to revert. When you revert the scale unit configuration using a database backup, you should also remove the content of the Azure Storage accounts that LCS created when deploying the environments. That content is used for the communication between hub and scale units, so content from the previous configuration should be removed before reconfiguring.

- There are a few limitations in application functionality that apply when using scale units. One of those is the use of manual number sequences. Please refer to the documentation for more details.

This section states which prerequisites must be fulfilled before using the tool.

If you deploy the hub and the scale unit in separate developer boxes, you will need two clean LCS cloud-hosted developer boxes or VHDs (10.0.16 or higher). Otherwise, you will only need one clean LCS cloud-hosted developer box or VHD (10.0.16 or higher).

One (the Hub) should be deployed with Demo Data, the other (Scale Unit) must be deployed with Empty DB. If the Scale Unit does not have an empty database, workload installation will fail by getting stuck during installation (see "Upload Sessions" Menu Item).

In case you use a single developer box to host both the hub and the scale unit environment, the OneBox environment should be deployed with Demo Data, then you need to manually restore the Empty DB. You can find the .bak file for the Empty DB under your Build drive (E:\AppRing3\10.0.689.10004\retail\Services\AOSService\Data) with the name AxBootstrapDB_Empty.bak.

Please note: Starting with tool version V2.2.0, the feature toggle "SysWorkloadTypeMES" will automatically be enabled on both environments. This is relevant for 10.0.17 and later application versions until MES workloads are generally available. Please find information on feature toggles here.

Create at least two Applications in Azure Active Directory.

The first will provide the authorization for the data flow between the hub and the scale unit (InterAOSAADConfiguration). The other ones will control the access of the configuration tool to the development environments. You can use the same application for multiple scale unit instances. However, in some cases, such as when one of your scale units is an LBD environment, you need to use a different application for authorization (ADFS).

The following procedure shows one way to complete this task. For detailed information and alternatives, see the links after the procedure.

- In a web browser, go to https://portal.azure.com.

- Enter the name and password of the user who has access to the Azure subscription.

- In the Azure portal, in the left navigation pane, select Azure Active Directory.

- Make sure that you're working with the instance of Azure AD that is used by Supply Chain Management.

- In the Manage list, select App registrations.

- On the toolbar, select New registration to open the Register an application wizard.

- Enter a name for the application, select the Accounts in this organizational directory only option, and then select Register.

- Your new app registration is opened. Make a note of the Application (client) ID value, because you will need it later. This ID will be referred to later in this topic as the 'AppId'.

- In the Manage list, select Certificates & secrets. Use the option New client secret and create a key by entering a key description and a duration in the Passwords section, and then select Add. Make a copy of the key value, which you need to enter in the configuration file. (Please note, the configuration tool does not support certificates yet.)

- For each of your applications, take a copy of both the secret value and the ID and store them securely. You can only access the secret value at the time the key is created.

Open the AD FS management console and under application groups open up the existing application group:

Create a new Server application and ensure you take note of the client identifier.

Additionally, you need to set up the redirect URI for this application. If your environment URL is ax.contoso.com the entries you would create are:

When configuring Application Credentials, choose Generate a shared secret and make sure you save it, as it will only be available at this point.

Once the server application has been created, you need to add it to the Web API. Under the Web API section, select the application called Microsoft Dynamics 365 for Operation On-premises - Web API.

Navigate to the client Permissions tab and click on Add...

Find the client application you previously created and click on Add.

The default scope of openid is the only one required.

Then click Ok and then Ok again.

Navigate to https://github.com/microsoft/SCMScaleUnitDevTools and download the latest release. Alternatively, you can clone the repository and build the release yourself.

Note that the release details can contain information about which application versions the tool works with. Please check the release details before downloading to ensure that the tool works with your current versions.

The tool comes with an example configuration file, "UserConfig.sample.xml". Take a copy of the file for editing and place it, with the name "UserConfig.xml", in the same directory as the tool CLI.exe. The configuration file contains a number of configuration sections where you need to specify, among other things, the App Id (from above) and information on your one-box environments for hub and scale unit. Once you have edited the configuration for your environments, you can use the tool with the same configuration for both the hub and the scale unit environment.

Perform the next steps with the tool placed in a directory on, for example, the machine of the hub environment, accessed through remote desktop. Edit the configuration file as described below.

Take a copy of the configuration file "UserConfig.sample.xml" and save it in the same directory as the configuration tool CLI.exe with the name "UserConfig.xml". Open the created file for editing in your favorite XML or text editor.

You need to fill in the following pieces of information:

- Set the value under section UseSingleOneBox to `true` if you want to use the same OneBox to host both the hub and the scale unit; otherwise leave the value as `false`.

  - This will create two IIS sites, one for the hub and one for the scale unit. They will both point to the same `PackagesLocalDirectory` folder and share code.

  - On code changes, manually restart the scale unit batch service and IIS site; it will not be done automatically by the Visual Studio extensions.

  - If any tables/entities/queries/views are updated, make sure to also run DbSync against the scale unit DB; it cannot be done from Visual Studio.

- Under section InterAOSAADConfiguration you need to enter information that enables communication between the hub and scale units.

  - In field AppId enter the application ID of the Azure application that authorizes data flow between hub and scale unit.

  - In field AppSecret enter the application secret of the Azure application that authorizes data flow between hub and scale unit.

  - The field Authority needs to carry the URL specifying the security authority for your tenant. It should be in the following format for a tenant called CONTOSO: https://login.windows.net/CONTOSO.onmicrosoft.com

- Under the section ScaleUnitConfiguration you need to have a record of `ScaleUnitInstance` for each of the environments (one for the hub environment and one for the scale unit environment). Each `ScaleUnitInstance` record needs to have the following information defined:

  - In the field Domain enter the FQDN of the environment in the format 'Contoso.cloudax.dynamics.com', not including protocol or trailing '/'.

    Note that all records need to have different domains. If `UseSingleOneBox` is set to `true` then the hub can have the environment domain, in which case the scale unit records should take a different domain. As an example, if the hub's domain is `hub.onebox.com` then the scale unit domain can be set to `scaleUnit.oneBox.com`. You will be able to access the scale unit rich client using the domain provided here.

  - The field IpAddress can be ignored except in the cases below:

    - For the hub, if the environment type is `VHD` you need to set the IP address of the hub environment.

    - If `UseSingleOneBox` has been set to `true` then local IP addresses of the form 127.0.0.x should be defined on all the `ScaleUnitInstance` records.

    Note that different IP addresses should be used for each record. For example, if the hub is set to `127.0.0.10` then the scale unit could be `127.0.0.11`.

  - The field AzureStorageConnectionString is used for VHD-based one-box environments and for `LCSHosted` environments when `UseSingleOneBox` has been set to true.

    - For `VHD`: set this to an Azure storage account's connection string (this is needed to let the scale unit(s) download data packages from the hub).

    - In case the `UseSingleOneBox` setting is true, this field should be set on all scale unit `ScaleUnitInstance` records.

    You can find instructions on how to create a compatible Azure storage account here.

  - The field AxDbName should be set to the name of the AX DB. In the template file the value is prefilled with the default database name 'AxDB'.

    Note that when `UseSingleOneBox` is set to true, this value should be different for each `ScaleUnitInstance`. For the hub it should point to the DB with Demo Data, and for the scale unit it should point to an Empty DB.

  - The field EnvironmentType indicates the type of the one-box environment and needs to be set. For VHD-based environments use value `VHD`, for LCS-hosted environments use value `LCSHosted`, and for LBD environments use value `LBD`.

  - The field AuthConfiguration contains information that grants the configuration tool access to this scale unit instance. The same information can be used for multiple scale unit instances if they belong to the same tenant.

    - In field AppId enter the application ID of the Azure application that grants the configuration tool access to this scale unit instance.

    - In field AppSecret enter the application secret of the Azure application that grants the configuration tool access to this scale unit instance.

    - The field Authority needs to carry the URL specifying the security authority for your tenant. It should be in the following format for a tenant called CONTOSO: https://login.windows.net/CONTOSO.onmicrosoft.com

- Under the section Workloads you need to specify the attributes that define which workloads you will run in the scale unit. The template file has a definition for one MES and one WES workload that match one production site and one warehouse in the demo database. Workload definitions are composed of a unique Id, a name specifying the kind of workload (must be one of the identifiers 'MES' or 'WES'), and a number of parameters called ConfiguredDynamicConstraintValue that specify the responsibility context of the workload.
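The rules stated above for `ScaleUnitInstance` records (distinct domains without protocol or trailing '/', and, for a single OneBox, distinct 127.0.0.x addresses and distinct database names) can be checked before running the tool. The following is a minimal sketch in Python and is not part of the tool. The dictionary layout, the function name `check_instances`, and the database name `AxDB_Empty` are assumptions made for illustration; only the field names and the rules come from the text above.

```python
def check_instances(instances, use_single_one_box):
    """Return a list of problems found in ScaleUnitInstance-like records.

    Each record is a plain dict keyed by field names from the guide
    (Domain, IpAddress, AxDbName). This layout is an assumption and
    does not mirror the real XML structure of UserConfig.xml.
    """
    problems = []

    domains = [rec["Domain"] for rec in instances]
    for domain in domains:
        # The FQDN must not carry a protocol or a trailing '/'.
        if "://" in domain or domain.endswith("/"):
            problems.append(f"Domain '{domain}' includes a protocol or trailing '/'")
    # Host names are case-insensitive, so compare them lowercased.
    if len({d.lower() for d in domains}) != len(domains):
        problems.append("ScaleUnitInstance records must have different domains")

    if use_single_one_box:
        ips = [rec.get("IpAddress", "") for rec in instances]
        for ip in ips:
            if not ip.startswith("127.0.0."):
                problems.append(f"IpAddress '{ip}' is not of the form 127.0.0.x")
        if len(set(ips)) != len(ips):
            problems.append("Each record needs a different IpAddress")
        db_names = [rec["AxDbName"] for rec in instances]
        if len(set(db_names)) != len(db_names):
            problems.append("Each record needs a different AxDbName")

    return problems


if __name__ == "__main__":
    records = [
        {"Domain": "hub.onebox.com", "IpAddress": "127.0.0.10", "AxDbName": "AxDB"},
        # "AxDB_Empty" is a hypothetical name for the restored Empty DB.
        {"Domain": "scaleUnit.oneBox.com", "IpAddress": "127.0.0.11", "AxDbName": "AxDB_Empty"},
    ]
    for problem in check_instances(records, use_single_one_box=True):
        print(problem)
```

An empty result means none of the rules covered by the sketch are violated; it does not validate credentials or connection strings.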

Manufacturing execution workloads are always configured per site. To create a manufacturing execution workload provide the following configuration elements:

- The field WorkloadInstanceId must carry a unique GUID for the workload.

- Set the field Name to the value MES.

- The enumeration ConfiguredDynamicConstraintValues needs to contain two attribute groups of type ConfiguredDynamicConstraintValue.

- In the first attribute group, set the field DomainName to the value LegalEntity and the field Value to the legal entity.

- In the second ConfiguredDynamicConstraintValue attribute group use DomainName with value Site and for the field Value use the Id of the production site.

Warehouse execution workloads are always configured per warehouse. To create a warehouse execution workload provide the following configuration elements:

- The field WorkloadInstanceId must carry a unique GUID for the workload.

- Set the field Name to the value WES.

- The enumeration ConfiguredDynamicConstraintValues needs to contain three attribute groups of type ConfiguredDynamicConstraintValue.

- In the first attribute group, set the field DomainName to the value LegalEntity and the field Value to the legal entity.

- In the second ConfiguredDynamicConstraintValue attribute group use DomainName with value Site and for the field Value use the Id of the warehouse site.

- In the third ConfiguredDynamicConstraintValue attribute group use DomainName with value Warehouse and for the field Value use the Id of the warehouse.
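The two workload shapes described above differ only in the number of constraint values: MES carries LegalEntity and Site, WES additionally carries Warehouse. The sketch below, in Python, assembles such definitions as plain dictionaries and generates the required GUID. It is an illustration only: the helper `make_workload`, the dictionary layout, and the example values "USMF", "1", "2" and "24" are assumptions, and the output still has to be transferred by hand into the Workloads section of UserConfig.xml.

```python
import uuid


def make_workload(name, legal_entity, site, warehouse=None):
    """Build one workload definition using the field names from the guide."""
    if name not in ("MES", "WES"):
        raise ValueError("Name must be 'MES' or 'WES'")

    # Both workload kinds start with LegalEntity and Site.
    constraints = [
        {"DomainName": "LegalEntity", "Value": legal_entity},
        {"DomainName": "Site", "Value": site},
    ]
    if name == "WES":
        # Warehouse execution workloads are configured per warehouse.
        if warehouse is None:
            raise ValueError("A WES workload needs a warehouse")
        constraints.append({"DomainName": "Warehouse", "Value": warehouse})
    elif warehouse is not None:
        raise ValueError("An MES workload is configured per site, not per warehouse")

    return {
        "WorkloadInstanceId": str(uuid.uuid4()),  # unique GUID per workload
        "Name": name,
        "ConfiguredDynamicConstraintValues": constraints,
    }


if __name__ == "__main__":
    # Placeholder values; replace them with your own legal entity, site and warehouse.
    print(make_workload("MES", "USMF", "1"))
    print(make_workload("WES", "USMF", "2", warehouse="24"))
```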

Once you have created the configuration file you need to copy the tool and configuration file to both the hub and the scale unit OneBox environments. Make sure your configuration (UserConfig.xml) is in the same directory as CLI.exe.

Open a Command Prompt with administrative permissions, navigate to the directory of the tool, and start CLI.exe.

Automatic deployment is used to run through all the steps required to set up the hub and spoke environment and install workloads. When using automatic deployment, no user input is required. The program will inform the user when the deployment is done or when an error occurs.

Note: If this is not the first time the spoke is deployed, the AX DB used for the spoke must be restored to the emptyDB before deploying.

In version 3.14 and forward of the tool it is possible to run the steps above automatically from the command line on a single box environment.

Navigate to the directory where the CLI.exe file is. From here run the following command:

$ ./CLI.exe --single-box-deploy

This will run all the initialization, configuration and installation steps described above without requiring user input. The program will terminate when all workloads are installed.

Two separate operations must be performed in order to deploy on a multi box environment. First, the hub must be deployed. This is done by running the following command on the hub:

$ ./CLI.exe --hub-deploy

After the hub has been deployed, the spoke can be deployed. It is very important that the first command is completely finished before the spoke is deployed. To deploy the spoke, run the following command on the spoke:

$ ./CLI.exe --spoke-deploy

Once this command is finished, the hub and spoke environments are deployed.
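If you script the automatic deployment, the important point is that each invocation blocks until CLI.exe has exited, so the spoke deployment is never started while the hub deployment is still running. The following Python sketch wraps the three commands shown above. The function names are hypothetical, and it assumes that CLI.exe signals failure through a non-zero exit code, which the text above does not confirm.

```python
import subprocess

# Flags taken from the commands shown above.
DEPLOY_FLAGS = {
    "single-box": "--single-box-deploy",
    "hub": "--hub-deploy",
    "spoke": "--spoke-deploy",
}


def deploy_command(mode, cli_path="./CLI.exe"):
    """Build the argument list for one deployment mode."""
    if mode not in DEPLOY_FLAGS:
        raise ValueError(f"unknown deployment mode: {mode!r}")
    return [cli_path, DEPLOY_FLAGS[mode]]


def run_deploy(mode, cli_path="./CLI.exe"):
    """Run one deployment and block until CLI.exe exits.

    Assumption: CLI.exe returns a non-zero exit code on failure, in which
    case check=True raises subprocess.CalledProcessError.
    """
    subprocess.run(deploy_command(mode, cli_path), check=True)
```

In a multi-box setup you would call `run_deploy("hub")` on the hub machine and, only after it has returned, `run_deploy("spoke")` on the spoke machine. The sketch does not coordinate the two machines for you.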

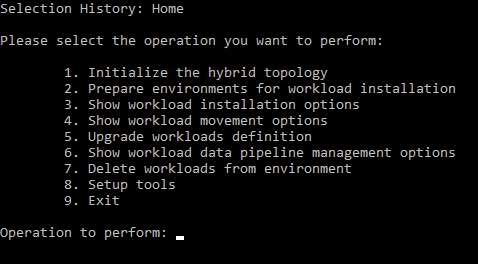

If you are not using the automatic deployment, the tool provides a command interface where you select the different operations using the keyboard.

In case of an error, follow the guidance presented by the tool to solve the issue(s) and go through the steps in the section once more. All actions are idempotent, but they still take time.

If you find issues with using the tool that are not showing self-explanatory error messages, please feel free to open an issue here.

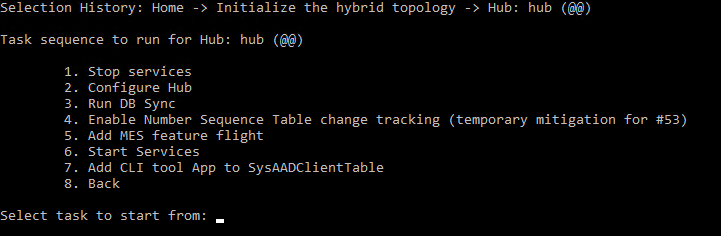

On the hub OneBox environment run the tool and run the following steps:

- Select "1. Initialize the hybrid topology"

- Select "1. Hub: hubName (@@)"

- Press Enter to run all steps.

Please note: This is a task sequence! You typically initiate the sequence with the first step ("Stop services") and then the follow up steps in the screen will run automatically.

If no error has occurred, this environment is now ready to be configured to take the role of a Supply Chain Management hub.

In case of an error, you may also start with the first step that failed from the list of steps.

On the scale unit environment run the tool and run the following steps:

- Select "1. Initialize the hybrid topology"

- Select "2. Scale Unit: scaleUnitName (@A)"

- Press Enter to run all steps.

The command will stop the service and then run the remaining commands to complete the action.

If no error has occurred, this environment is now ready to be configured to take the role of a Supply Chain Management scale unit.

In case of an error, you may also start with the first step that failed from the list of steps.

On either the hub or the scale unit environment commence the following steps in the tool:

- Select "2. Prepare environments for workload installation"

- Select "1. Hub: hubName (@@)"

If no error has occurred, your hub environment is now configured as the hub in your Scale Unit setup.

On either the hub or the scale unit environment run the tool with the following steps:

- Select "2. Prepare environments for workload installation"

- Select "2. Scale unit: scaleUnitName (@A)"

If no error has occurred, your scale unit environment is now configured as the spoke in your Scale Unit setup.

On either the hub or the scale unit environment you can create the workload information on the hub using the tool:

- Select "3. Show workload installation options"

- Select "1. Install workloads"

- Select "1. Hub: hubName (@@)"

On either the hub or the scale unit environment you can create the workloads on the scale unit using the tool:

- Select "3. Show workload installation options"

- Select "1. Install workloads"

- Select "2. Scale unit: scaleUnitName (@A)"

If no error has occurred, your scale unit environment is now either initializing or already running the workloads and ownership of the respective business processes has been transferred from the hub environment to the scale unit.

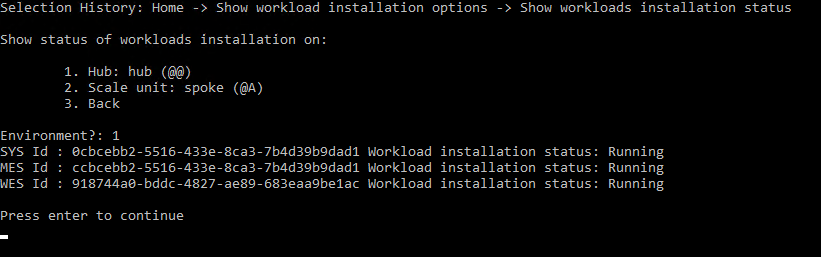

You can check that the workloads are running correctly on both the hub and scale unit environment.

On the hub or scale unit environment run the tool with the following commands to see the workload status on the hub:

- Select "3. Show workload installation options"

- Select "2. Show workloads installation status"

- Select "1. Hub: HubName (@@)"

On the hub or scale unit environment run the tool with the following commands to see the workload installation status on the scale unit:

- Select "3. Show workload installation options"

- Select "2. Show workloads installation status"

- Select "2. Scale unit: scaleUnitName(@A)"

The command will return all running workloads with their status.

When all workloads are in "Running" status both on hub and scale unit, the deployment is completed, and you can test the workload functionality in the actual environments.
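The completion rule above can be written down as a small check. The Python sketch below assumes you have copied the status of every workload from the tool output into two lists of strings; the tool's actual output format is not described here, so the function name and the input shape are hypothetical.

```python
def deployment_complete(hub_statuses, scale_unit_statuses):
    """Return True only if every workload on both environments is "Running"."""
    statuses = list(hub_statuses) + list(scale_unit_statuses)
    # An empty status list means nothing has been installed yet.
    return bool(statuses) and all(status == "Running" for status in statuses)


if __name__ == "__main__":
    print(deployment_complete(["Running", "Running"], ["Running", "NotInstalled"]))
```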

In the tool it is possible to move workloads from the spoke to the hub. This step will take all workloads on every scale unit and move them to the hub. This will effectively disable the spoke, so all the work is done on the hub. To re-enable the spoke, see the section below on re-enabling the spoke environments.

To move all workloads to the hub, use the following steps:

- Select "4. Show workload movement options"

- Select "1. Move all workloads to the hub"

This will initiate the movement of all workloads from all scale units to the hub.

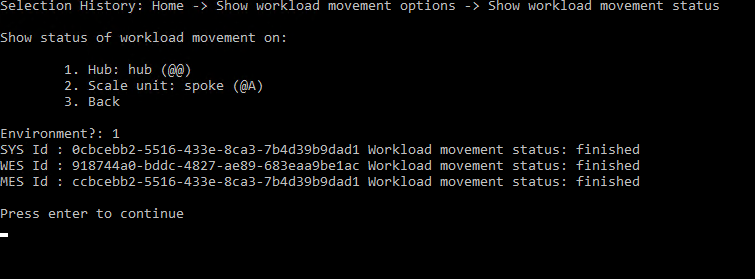

You can check the status of the movement on the hub using the tool. This will tell you whether a movement on a scale unit is "not started", "in progress" or "finished". To do this on the hub, follow these steps:

- Select "4. Show workload movement options"

- Select "2. Show workload movement status"

- Select "1. Hub: HubName (@@)"

This will return a list of workloads with status "in progress" until it is done moving, at which point it will say "finished".

The same thing can be done on the spoke:

- Select "4. Show workload movement options"

- Select "2. Show workload movement status"

- Select "2. Scale unit: scaleUnitName(@A)"

When the workload movements are finished on both the hub and the spoke, then the movements have been successfully completed.

The hub and the spoke communicate by sending packages back and forth. Sometimes it can be necessary to stop this communication, while ensuring that no packages are lost in the process. This is done by draining the workload data pipelines, which is supported by the devTools.

Draining and starting workload pipelines can be done by running the tool with command line arguments. It may take a few minutes before all the pipelines are drained. If a workload does not have status "Running", an error will occur when draining the workloads. To drain all pipelines on all scale units, run the following command:

$ ./CLI.exe --drain-pipelines

If the pipelines have been drained and the user wants to resume the communication, this can be done by starting the workloads. To start all pipelines on all environments run:

$ ./CLI.exe --start-pipelines

Just like when the workloads are drained there will be an error if the program tries to start a workload that is already running.
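Both commands have a precondition on the workload status: draining expects every workload to be "Running", and starting fails for a workload that is already running. A wrapper script could check these conditions before calling CLI.exe. The Python sketch below illustrates this under the assumption that the statuses are available as a mapping from a workload identifier to its status string; how you obtain that mapping is not covered, and the function names are hypothetical.

```python
def can_drain(statuses):
    """Draining is only safe when every workload has status "Running"."""
    return all(status == "Running" for status in statuses.values())


def can_start(statuses):
    """Starting is only safe when no workload is already "Running"."""
    return all(status != "Running" for status in statuses.values())


def next_command(action, statuses, cli_path="./CLI.exe"):
    """Return the CLI.exe argument list for the action, or raise if it would fail."""
    if action == "drain":
        if not can_drain(statuses):
            raise RuntimeError("not all workloads are Running; draining would fail")
        return [cli_path, "--drain-pipelines"]
    if action == "start":
        if not can_start(statuses):
            raise RuntimeError("a workload is already Running; starting would fail")
        return [cli_path, "--start-pipelines"]
    raise ValueError(f"unknown action: {action!r}")
```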

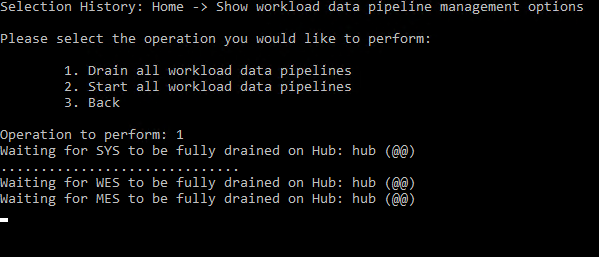

The actions can also be performed through the UI. All workload data pipelines are drained by following these steps:

- Select "6. Show workload data pipeline management options"

- Select "1. Drain workload data pipelines"

- Press Enter to drain on all environments.

Follow these steps to start workload pipelines:

- Select "6. Show workload data pipeline management options"

- Select "1. Start workload data pipelines"

- Press Enter to start on all environments.

The user may want to upgrade the definitions of the workloads running on the spoke so it matches the definitions stored on the hub. To be able to do this, make sure the workloads are drained. See the section above on draining/starting workloads for reference. Then do the following:

- Select "5. Upgrade workloads definition"

- Press Enter to upgrade all environments

This will take the definition of the workloads from the hub and create new workload instances on the spoke with these definitions. After the workloads have been upgraded all the data pipelines are started automatically, so it is not necessary to start them in the dev tools.

The user can delete all workloads from an environment. These workloads will then no longer be installed on that environment. The user can select the environment to delete workloads on; for example, the following steps delete all workloads on the hub.

- Select "7. Delete workloads from environment"

- Select "1. Hub: HubName (@@)"

The workloads will change their status to "NotInstalled".

As of version 3.13 of the tool, the user can select a subset of the initialization steps to run. This allows the user to run SyncDB in isolation, as well as restarting IIS services through the tool.

If a database needs to be synced but you do not wish to run the other initialization steps, you can run DB Sync on its own. To run it, e.g. on the hub, follow these steps:

- Select "1. Initialize the hybrid topology"

- Select "1. Hub: HubName (@@)"

- Select "3. Run DB Sync"

The tool allows the user to clean up the Azure storage account connected to a specific scale unit. This is required if for example the spoke environment needs to be reset. Cleaning up the storage accounts, e.g. on the spoke, can be done through the tool by running the executable with the following argument:

$ ./CLI.exe --clean-storage

This will clean everything stored in the Azure storage for both hub and spoke. This argument can be combined with other arguments, in which case the storage is cleaned first.

Cleaning the storage accounts can also be done through the UI. This is done by following these steps:

- Select "8. Setup tools"

- Select "4. Clean up Azure storage account for a scale unit"

- Select "2. scale unit: scaleUnitName (@A)"

When the storage accounts have been cleaned the batch job services running on the environment must be restarted for the change to take effect. This can be done through the tool by using the initialization steps. For the spoke, this would be:

- Select "1. Initialize the hybrid topology"

- Select "2. Scale unit: scaleUnitName(@A)"

- Enter "1,8" to select "Stop services" and "Start services"

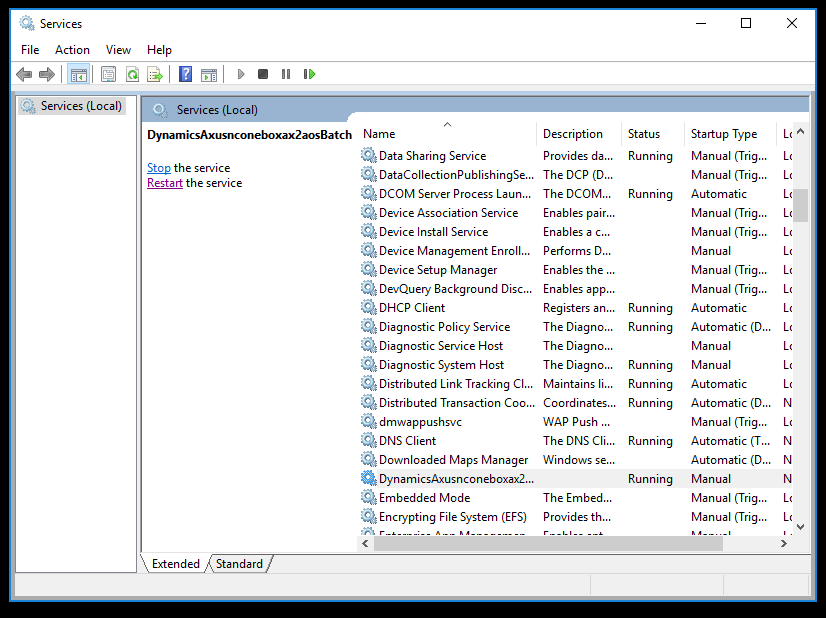

The services can also be restarted manually. To do this, open the search bar on the machine and type in "Services". Opening the program with this name should bring up the window shown in the image. If the hub and spoke are on different environments, they will each have a batch service called "Microsoft Dynamics 365 Unified Operations: Batch Management Service". If the hub and spoke are on a single OneBox environment, there will be a service called "Microsoft Dynamics 365 Unified Operations: Batch Management Service" and another service called "DynamicsAx" followed by the spoke URL, followed by "Batch". Find these services and click the button saying "Restart the service".

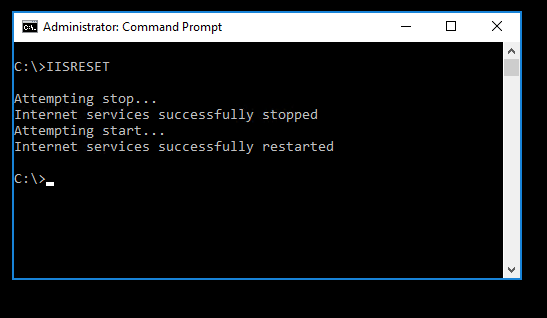

When the services have been restarted open the Command Prompt and run "IISRESET" to reset the internet services on the machine. Once this is done, the storage accounts are effectively cleaned.

If the user wants to disable the spoke, this can be done by disabling the scale unit feature through the tool. To do this, follow the steps:

- Select "8. Setup tools"

- Select "4. Clean up Azure storage account for a scale unit"

- Select "2. scale unit: scaleUnitName (@A)"

These steps will include running SyncDb and can take some time.

In case the scale unit ID needs to be changed, the webconfig will need to be updated. After updating the configuration file accordingly, run the following steps:

- Select "8. Setup tools"

- Select "3. Update scale unit id"

- Select "1. scale unit: scaleUnitName (@A)"

Workloads can be imported from one Azure Storage to another using a SAS token. A SAS token can be obtained either from a Geneva action or directly from the Azure Portal. The SAS token should point to the container that has the workloads in it. This will be the one named "sysworkloadinstancesharedserviceunitstorage". Once the user has the SAS token, follow these steps:

- Select "8. Setup tools"

- Select "5. Import workloads from Azure storage blob with SAS url"

- Select "2. scale unit: scaleUnitName (@A)" (Or another scale unit)

- Paste the SAS token and press Enter

This will copy the workload blob to the new Azure storage. If this storage already had a blob with workloads, this blob will be overwritten.

If the user has a hub that has been set up and is running, but wants to re-enable the spoke environment, these are the required steps. First, if any movements have been made recently, make sure that they have been completed. Then do the following:

- Clean the Azure Storage account for the spoke.

- Restore the emptyDB for the spoke.

- Update the scale unit id for the spoke with a new id (e.g. @B) in UserConfig file. Update the workload instances definitions in the same file accordingly.

- Delete workload instances only on the hub, as described in the section on deleting workloads above.

- Run the "initialize hybrid topology" step for the spoke.

- Prepare the spoke for workload installation.

- Proceed to install workloads on the hub, and then on the spoke.

How to do these steps is described in the sections above. After all the steps have been completed, the workloads should be installed on the hub and the spoke.

If the user wants to reset both the hub and spoke to their initial state after having installed workloads, the following steps must be applied to clean the environments.

- Clean the Azure Storage account for the spoke and for the hub.

- Restore the initial DB of the hub and restore the emptyDB of the spoke. If the user has DB snapshots, these can be used. This cannot be done in the devTools.

- If the user does not have a DB snapshot with the synced DB, the user might need to run the "initialize hybrid topology" step for the spoke and for the hub.

- Prepare the hub and the spoke for workload installation.

- Proceed to install workloads on the hub, and then on the spoke.

How to do these steps is described in the sections above. After completing the steps the spoke should be re-enabled and running.