What Else Can Fool Deep Learning? Addressing Color Constancy Errors on Deep Neural Network Performance

Mahmoud Afifi¹ and Michael S. Brown¹,²

¹York University ²Samsung AI Center (SAIC) - Toronto

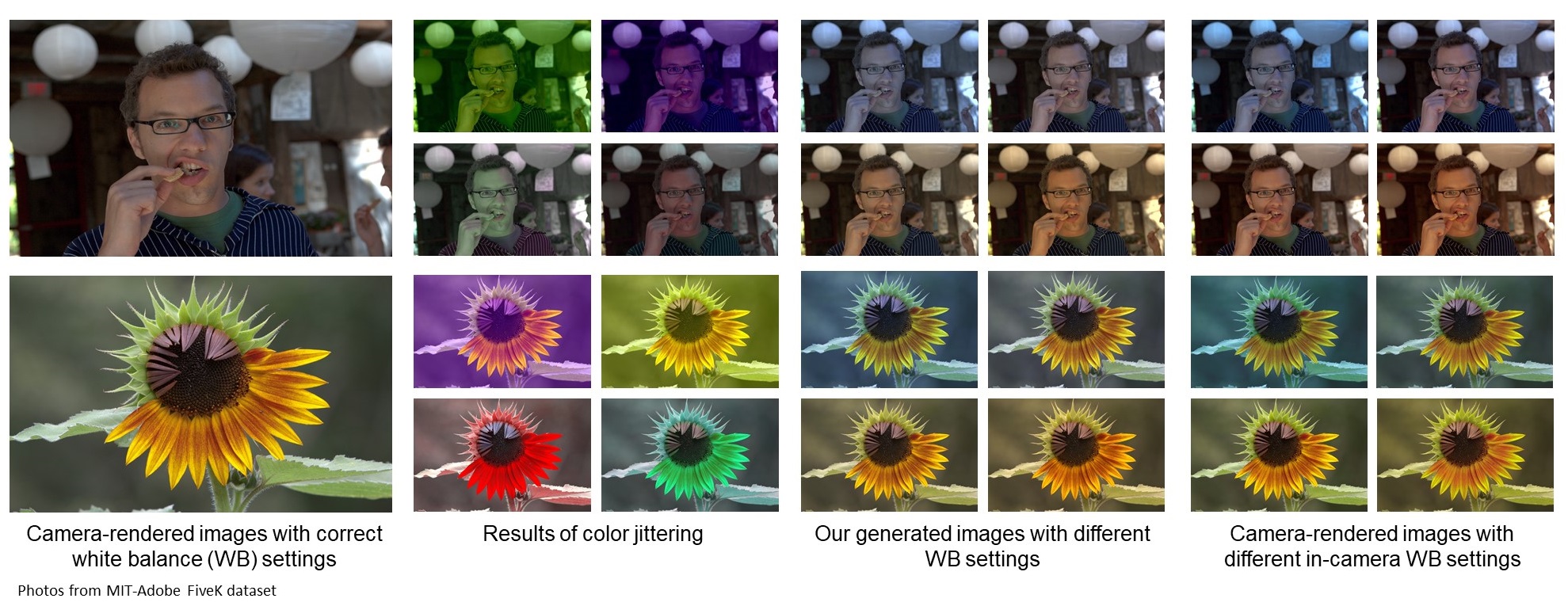

Our augmentation method accurately emulates realistic color constancy degradation. Existing color augmentation methods often generate unrealistic colors that rarely occur in practice (e.g., green skin or purple grass). More importantly, the images they produce do not represent well the color casts that result from incorrect white balance (WB) applied onboard cameras, as shown below.

- Requirements: numpy & Pillow

pip install numpy

pip install Pillow

- Run wbAug.py; examples:

- Process a single image (generate ten new images and a copy of the given image):

python wbAug.py --input_image_filename ../images/image1.jpg

- Process all images in a directory (for each image, generate ten images and copies of original images):

python wbAug.py --input_image_dir ../images

- Process all images in a directory (for each image, generate five images without original images):

python wbAug.py --input_image_dir ../images --out_dir ../results --out_number 5 --write_original 0

- Augment all training images and generate corresponding ground truth files (generate three images and copies of original images):

python wbAug.py --input_image_dir ../example/training_set --ground_truth_dir ../example/ground_truth --ground_truth_ext .png --out_dir ../new_training_set --out_ground_truth ../new_ground_truth --out_number 3 --write_original 1
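When the augmenter is run from a larger preprocessing script, it can help to assemble the command programmatically. The following is a minimal sketch that only uses the flags shown in the examples above; the helper name `build_wbaug_command` and its defaults are hypothetical and not part of this repo.

```python
import sys


def build_wbaug_command(input_image_dir, out_dir, out_number=10,
                        write_original=True, ground_truth_dir=None,
                        ground_truth_ext=None, out_ground_truth=None,
                        script="wbAug.py"):
    """Assemble the argument list for one wbAug.py run (hypothetical helper)."""
    if out_number < 1:
        raise ValueError("out_number must be at least 1")
    cmd = [
        sys.executable, script,
        "--input_image_dir", str(input_image_dir),
        "--out_dir", str(out_dir),
        "--out_number", str(out_number),
        "--write_original", "1" if write_original else "0",
    ]
    # The ground-truth flags are only added when a ground-truth directory is given.
    if ground_truth_dir is not None:
        cmd += ["--ground_truth_dir", str(ground_truth_dir)]
        if ground_truth_ext is not None:
            cmd += ["--ground_truth_ext", ground_truth_ext]
        if out_ground_truth is not None:
            cmd += ["--out_ground_truth", str(out_ground_truth)]
    return cmd


if __name__ == "__main__":
    # Mirrors the last command-line example above; print instead of running it.
    print(" ".join(build_wbaug_command(
        "../example/training_set", "../new_training_set", out_number=3,
        ground_truth_dir="../example/ground_truth", ground_truth_ext=".png",
        out_ground_truth="../new_ground_truth")))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains wbAug.py.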

demo.py shows an example of how to use the WBEmulator module.

torch_demo.py shows an example of how to use the WBEmulator module to augment images on the fly. This example can be easily adapted for TensorFlow as well. The code applies the WB augmentation to images as they are loaded by the data loader. See the implementation of BasicDataset in torch_demo.py for an example of a PyTorch dataset that uses our WB augmenter. The parameter aug_prob controls the probability of applying the WB augmenter to a loaded image.
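The role of aug_prob can be illustrated without PyTorch. The sketch below is not the repo's BasicDataset: the class `RandomlyAugmentedDataset` and the placeholder `toy_color_cast` are hypothetical, and the placeholder is a crude channel scaling, not the WB emulator. It only shows the pattern of applying an augmenter with a given probability each time an item is fetched.

```python
import random


class RandomlyAugmentedDataset:
    """Hypothetical dataset wrapper; NOT the BasicDataset from torch_demo.py."""

    def __init__(self, images, augment_fn, aug_prob=0.5, seed=None):
        if not 0.0 <= aug_prob <= 1.0:
            raise ValueError("aug_prob must be in [0, 1]")
        self.images = images
        self.augment_fn = augment_fn
        self.aug_prob = aug_prob
        self._rng = random.Random(seed)

    def __len__(self):
        return len(self.images)

    def __getitem__(self, index):
        image = self.images[index]
        # Draw once per fetch: augment with probability aug_prob.
        if self._rng.random() < self.aug_prob:
            image = self.augment_fn(image, self._rng)
        return image


def toy_color_cast(image, rng):
    """Placeholder augmenter (assumption): scales the R and B channels.

    `image` is a list of (r, g, b) tuples. A real pipeline would call the
    WB emulator here instead.
    """
    r_gain, b_gain = rng.choice([(1.2, 0.8), (0.8, 1.2)])
    return [(min(255, round(r * r_gain)), g, min(255, round(b * b_gain)))
            for r, g, b in image]


if __name__ == "__main__":
    pixels = [(100, 100, 100), (200, 50, 25)]
    dataset = RandomlyAugmentedDataset([pixels], toy_color_cast, aug_prob=0.5, seed=0)
    for _ in range(4):
        print(dataset[0])
```

Setting aug_prob to 0 returns every image unchanged, and setting it to 1 augments every fetch, which makes the parameter easy to check in isolation.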

The demo in torch_demo.py uses a VGG19 model pre-trained on ImageNet. The demo takes a single image of a hamster (located in \images and shown below) and randomly applies one of our WB/camera profiles to the image before classifying it.

As shown below, the network's prediction changes from run to run because of the WB effect. The same BasicDataset class in torch_demo.py can be adapted for use during training.

Output:

toy poodle 0.15292471647262573

hen 0.5484228134155273

toy poodle 0.15292471647262573

hen 0.5260483026504517

hen 0.5484228134155273

hen 0.5260483026504517

hamster 0.2579324543476105

hen 0.5260483026504517

hamster 0.2579324543476105

toy poodle 0.15292471647262573
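To make the effect concrete, the ten predictions listed above can be tallied. This small sketch copies the labels and confidences from that output and counts how often each label appears; it does not run the network.

```python
from collections import Counter

# (label, confidence) pairs copied from the output above, in order.
runs = [
    ("toy poodle", 0.15292471647262573),
    ("hen", 0.5484228134155273),
    ("toy poodle", 0.15292471647262573),
    ("hen", 0.5260483026504517),
    ("hen", 0.5484228134155273),
    ("hen", 0.5260483026504517),
    ("hamster", 0.2579324543476105),
    ("hen", 0.5260483026504517),
    ("hamster", 0.2579324543476105),
    ("toy poodle", 0.15292471647262573),
]

label_counts = Counter(label for label, _ in runs)
correct_fraction = label_counts["hamster"] / len(runs)

for label, count in label_counts.most_common():
    print(f"{label}: {count}/{len(runs)}")
print(f"correct (hamster): {correct_fraction:.0%}")
# Prints:
# hen: 5/10
# toy poodle: 3/10
# hamster: 2/10
# correct (hamster): 20%
```

In this particular run, the correct class is predicted for only 2 of the 10 WB-augmented versions of the same image.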

In this example, we showed how to use the WB augmenter in a custom dataset class. To see how to use the WB augmenter with PyTorch built-in datasets, please see this Colab example. The Colab example uses this repo, which provides a combined version of the WB augmenter Python implementation to facilitate cloning to Colab. We used CIFAR-10 to train a simple network with and without the WB augmenter. As shown in the Colab example, training with the WB augmenter yields a +3% improvement over training on the original data.

- Run install_.m

- Try our demos:

- demo_single_image to process a single image

- demo_batch to process an image directory

- demo_WB_color_augmentation to process an image directory and replicate the corresponding ground truth files for our generated images

- demo_GUI (located in the GUI directory) for a GUI interface

- To use the WB augmenter inside your code, follow these steps:

- Either run install_() or addpath to code/model directories:

addpath('src'); addpath('models'); %or use install_()

- Load our model:

load('synthWBmodel.mat'); %load WB_emulator CPU model -- use load('synthWBmodel_GPU.mat'); to load WB_emulator GPU model

- Run the WB emulator:

out = WB_emulator.generate_wb_srgb(I, NumOfImgs); %I: input image tensor & NumOfImgs (optional): number of images to generate [<=10]

- Use the generated images:

new_img = out(:,:,:,i); %access the ith generated image

We used images from Set1 of the Rendered WB dataset to build our method.

In our paper, we introduced a new testing set that contains CIFAR-10 classes to evaluate trained models with different settings. This testing set contains 15,098 rendered images that reflect real in-camera WB settings. The testing set is divided into ten directories, each containing the testing images for one of the CIFAR-10 classes. You can download our testing set from the following links: 32x32 pixels | 224x224 pixels | 227x227 pixels
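Because the testing set is organized as one directory per class, it can be indexed with a few lines of standard-library Python. The sketch below makes several assumptions that should be checked against the downloaded files: that each subdirectory name can serve as the class label, that labels are assigned in sorted order of directory name, and that images use one of the listed extensions. The function name `index_class_directories` is hypothetical.

```python
from pathlib import Path

# Assumption: the image files use one of these extensions.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def index_class_directories(root):
    """Return (class_names, samples) for a one-directory-per-class layout.

    samples is a list of (image_path, label_index) pairs, where label_index
    is the position of the directory name in the sorted class_names list.
    """
    root = Path(root)
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    label_of = {name: i for i, name in enumerate(class_names)}
    samples = []
    for name in class_names:
        for path in sorted((root / name).iterdir()):
            if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
                samples.append((path, label_of[name]))
    return class_names, samples


if __name__ == "__main__":
    import sys
    if len(sys.argv) > 1:
        names, items = index_class_directories(sys.argv[1])
        print(f"{len(names)} classes, {len(items)} images")
```

If the label order used by a trained model differs from the sorted directory order, the mapping in `label_of` would need to be replaced with the model's own class order.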

If you use this code or our dataset, please cite our paper:

Mahmoud Afifi and Michael S. Brown. What Else Can Fool Deep Learning? Addressing Color Constancy Errors on Deep Neural Network Performance. International Conference on Computer Vision (ICCV), 2019.

@InProceedings{Afifi_2019_ICCV,

author = {Afifi, Mahmoud and Brown, Michael S.},

title = {What Else Can Fool Deep Learning? Addressing Color Constancy Errors on Deep Neural Network Performance},

booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

month = {October},

year = {2019}

}

- When Color Constancy Goes Wrong: The first work to directly address the problem of incorrectly white-balanced images; requires a small memory overhead and it is fast (CVPR 2019).

- Deep White-Balance Editing: A multi-task deep learning model for post-capture white-balance correction and editing (CVPR 2020).

- Interactive White Balancing: A simple method to link the nonlinear white-balance correction to the user's selected colors to allow interactive white-balance manipulation (CIC 2020).