Quick Start | Documentation | LangChain and LlamaIndex Support | Discord

www.getzep.com

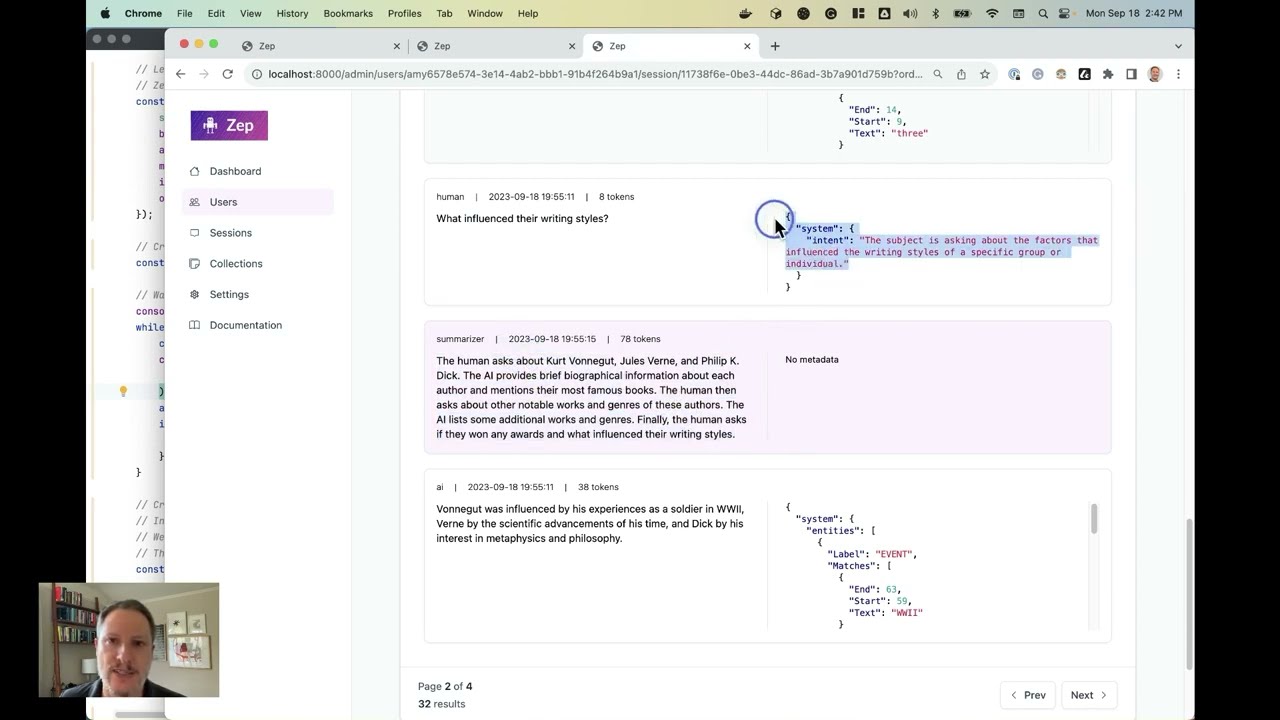

Zep is an open source platform for productionizing LLM apps. Zep summarizes, embeds, and enriches chat histories and documents asynchronously, so these operations don't slow down your users' chat experience. Data is persisted to a database, allowing you to scale out as usage grows. Because Zep provides drop-in replacements for popular LangChain components, you can get to production in minutes without rewriting code.
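Asynchronous enrichment follows a familiar pattern: the write path stores a message and returns immediately, while a background worker does the slower work afterwards. The sketch below is a generic asyncio illustration of that pattern, not Zep's implementation. `AsyncEnrichingStore` and its whitespace token count are hypothetical stand-ins for Zep's real summarization, entity extraction, and embedding.

```python
import asyncio
import contextlib
from dataclasses import dataclass, field


@dataclass
class StoredMessage:
    role: str
    content: str
    # Filled in later by the background worker, never on the write path.
    enrichment: dict = field(default_factory=dict)


class AsyncEnrichingStore:
    """Hypothetical toy store: writes return at once, enrichment runs later."""

    def __init__(self) -> None:
        self.messages: list = []
        self._queue: asyncio.Queue = asyncio.Queue()

    async def add(self, role: str, content: str) -> StoredMessage:
        # Persist the message and enqueue it; the caller does not wait
        # for any enrichment work to finish.
        msg = StoredMessage(role, content)
        self.messages.append(msg)
        self._queue.put_nowait(msg)
        return msg

    async def worker(self) -> None:
        # Background task that enriches queued messages one at a time.
        while True:
            msg = await self._queue.get()
            # Hypothetical stand-in for real enrichment (summaries,
            # named entities, embeddings): a whitespace token count.
            msg.enrichment["token_count"] = len(msg.content.split())
            self._queue.task_done()

    async def drain(self) -> None:
        # Wait until every queued message has been enriched.
        await self._queue.join()


async def main():
    store = AsyncEnrichingStore()
    worker_task = asyncio.create_task(store.worker())

    msg = await store.add("human", "Who was Octavia Butler?")
    # The write has returned but enrichment has not run yet, so the chat
    # turn could be answered without waiting for it.
    before = dict(msg.enrichment)

    await store.drain()  # only so we can inspect the enriched result
    worker_task.cancel()
    with contextlib.suppress(asyncio.CancelledError):
        await worker_task
    return before, store.messages


before, messages = asyncio.run(main())
print(before, messages[0].enrichment)
```

The write returns with an empty `enrichment` dict, and the token count appears only after the worker has processed the queue.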

- Manage users, sessions, chat messages, chat roles, and more, not just texts and embeddings.

- Build autopilots, agents, Q&A over docs apps, chatbots, and more.

- Zep’s local embedding models and async enrichment ensure a snappy user experience.

- Storing documents and history in Zep rather than in your app's memory enables stateless deployment.

- Zep Memory and VectorStore implementations are shipped with LangChain, LangChain.js, and LlamaIndex.

- Python & TypeScript/JS SDKs for easy integration with your LLM app.

- TypeScript/JS SDK supports edge deployment.

- Populate your prompts with relevant documents and chat history.

- Rich metadata and JSONPath query filters enable hybrid search that combines text similarity with metadata filtering.

- Automatically embed texts and messages using state-of-the-art open source models, OpenAI, or bring your own vectors.

- Chat histories are enriched with summaries, named entities, and token counts, which you can use as search filters.

- Associate your own metadata with sessions, documents & chat histories.

- 🏎️ Quick Start Guide: Docker or cloud deployment, and coding, in < 5 minutes.

- 📚 Zep By Example: Learn how to use Zep by example.

- 🦙 Building Apps with LlamaIndex

- 🦜⛓️ Building Apps with LangChain

- 🛠️ Getting Started with TypeScript/JS or Python
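Hybrid search, as described in the feature list above, combines vector similarity with a metadata filter. The following is a minimal pure-Python sketch of that idea under simplifying assumptions: documents carry precomputed embeddings, and a plain Python predicate stands in for a JSONPath filter such as `$[*] ? (@.genre == "scifi")`. `hybrid_search`, `cosine`, and the toy data are hypothetical and do not reflect how Zep executes queries internally.

```python
import math
from typing import Callable


def cosine(a: list, b: list) -> float:
    # Cosine similarity between two equal-length vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(query_vec, docs, where: Callable[[dict], bool], limit=3):
    # 1. Metadata filter: keep only documents whose metadata matches.
    candidates = [d for d in docs if where(d["metadata"])]
    # 2. Rank the survivors by similarity to the query embedding.
    scored = [(cosine(query_vec, d["embedding"]), d) for d in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]


# Toy 2-d "embeddings"; real ones come from an embedding model.
docs = [
    {"content": "Kindred", "embedding": [0.9, 0.1], "metadata": {"genre": "scifi"}},
    {"content": "Parable of the Sower", "embedding": [0.8, 0.3], "metadata": {"genre": "scifi"}},
    {"content": "A cookbook", "embedding": [0.95, 0.05], "metadata": {"genre": "food"}},
]

# Rough Python stand-in for the filter $[*] ? (@.genre == "scifi")
results = hybrid_search([1.0, 0.0], docs, where=lambda m: m.get("genre") == "scifi")
for score, doc in results:
    print(f"{score:.3f}  {doc['content']}")
```

The cookbook entry has the highest raw similarity to the query but is excluded by the metadata filter, which is the point of combining the two.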

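Create a user and a chat session, add messages, and list the user's sessions (Python)

```python
import uuid

# Import paths as in zep-python 1.x
from zep_python import ZepClient
from zep_python.memory import Memory, Message, Session
from zep_python.user import CreateUserRequest

# Placeholders: point these at your Zep server.
zep_api_url = "http://localhost:8000"
zep_api_key = None  # only needed if your server requires authentication

client = ZepClient(base_url=zep_api_url, api_key=zep_api_key)

# create a user
user_id = uuid.uuid4().hex  # A new user identifier
user_request = CreateUserRequest(
    user_id=user_id,
    email="user@example.com",  # placeholder address
    first_name="Jane",
    last_name="Smith",
    metadata={"foo": "bar"},
)
new_user = client.user.add(user_request)

# create a chat session
session_id = uuid.uuid4().hex  # A new session identifier
session = Session(
    session_id=session_id,
    user_id=user_id,
    metadata={"foo": "bar"},
)
client.memory.add_session(session)

# Add a chat message to the session
history = [
    {"role": "human", "content": "Who was Octavia Butler?"},
]
messages = [Message(role=m["role"], content=m["content"]) for m in history]
memory = Memory(messages=messages)
client.memory.add_memory(session_id, memory)

# Get all sessions for user_id
sessions = client.user.get_sessions(user_id)
```

Use Zep as conversation memory for a LangChain.js chain (TypeScript)

```typescript
// Import paths for LangChain.js 0.0.x; newer releases export ZepMemory
// from "@langchain/community/memory/zep".
import { ChatOpenAI } from "langchain/chat_models/openai";
import { ConversationChain } from "langchain/chains";
import { ZepMemory } from "langchain/memory/zep";

// Placeholders: your Zep server URL and key, and a session identifier.
const zepApiURL = "http://localhost:8000";
const zepApiKey = "<your Zep API key>";
const sessionId = "<session id>";

const model = new ChatOpenAI({ temperature: 0 });

// Zep stores and retrieves this session's chat history for the chain.
const memory = new ZepMemory({
  sessionId,
  baseURL: zepApiURL,
  apiKey: zepApiKey,
});

const chain = new ConversationChain({ llm: model, memory });

// run() takes the input string directly for single-input chains.
const response = await chain.run(
  "What is the book's relevance to the challenges facing contemporary society?"
);
```

Hybrid similarity search over a document collection with text input and JSONPath filters (TypeScript)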

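```typescript
// Assumes `collection` is a Zep document collection already retrieved
// with the Zep JS client.
const query = "Who was Octavia Butler?";

// Text-only similarity search, returning the top 3 results
const searchResults = await collection.search({ text: query }, 3);

// Search for documents using both text and metadata
const metadataQuery = {
  where: { jsonpath: '$[*] ? (@.genre == "scifi")' },
};

const newSearchResults = await collection.search(
  {
    text: query,
    metadata: metadataQuery,
  },
  3
);
```

Index local documents into a Zep collection with LlamaIndex (Python)

```python
from llama_index import VectorStoreIndex, SimpleDirectoryReader
from llama_index.vector_stores import ZepVectorStore
from llama_index.storage.storage_context import StorageContext

# Placeholders: your Zep server URL and key, and a collection name.
zep_api_url = "http://localhost:8000"
zep_api_key = None
collection_name = "mydocs"

vector_store = ZepVectorStore(
    api_url=zep_api_url,
    api_key=zep_api_key,
    collection_name=collection_name,
)

# Load every file in ./documents and index it into the Zep collection.
documents = SimpleDirectoryReader("documents/").load_data()
storage_context = StorageContext.from_defaults(vector_store=vector_store)
index = VectorStoreIndex.from_documents(
    documents,
    storage_context=storage_context,
)
```

Search a document collection by embedding vector (Python)

```python
# Search by embedding vector, rather than text query
# `collection` is a Zep document collection; `embedding` is a list of floats
results = collection.search(
    embedding=embedding, limit=5
)
```

Please see the Zep Quick Start Guide for important configuration information.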

```bash
docker compose up
```

Looking for other deployment options?

Please see the Zep Development Guide for important beta information and usage instructions.

```bash
pip install zep-python
```

or

```bash
npm i @getzep/zep-js
```