The following is a template for deploying a Rust AWS Lambda function. All deployment is managed by the AWS CDK tool.

If you are interested in a more minimal version of this, check out patterns-serverless-rust-minimal.

✨ Features ✨

- 🦀 Ready-to-use serverless setup using Rust and AWS CDK.

- 🎟 GraphQL boilerplate taken care of.

- 🧘‍♀️ AWS DynamoDB boilerplate taken care of.

- 🚗 CI using GitHub Actions testing the deployment using LocalStack.

- 👩‍💻 Local development using LocalStack.

- 🚀 Deployments via GitHub releases.

Remaining:

- Rework GraphQL schema a bit

- Finish DynamoDB setup

- Add tests using local DynamoDB

- Plug in dataloader

⚡️ Quick start ⚡️

Assuming you have set up npm and cargo/rustup, the following will get you going:

- npm ci: install all our deployment dependencies.

- npm run build: build the Rust executable and package it as an asset for CDK.

- npm run cdk:deploy: deploy the packaged asset.

The stack name is controlled by the name field in package.json. Other than that, just use your regular Rust development setup.
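For orientation, the invoke examples in this README send {"firstName": "world"} to the function and get back {"message":"Hello, world!"}. Below is a minimal std-only Rust sketch of that request-to-response mapping. `greeting_response` and `json_escape` are hypothetical names; the actual handler runs on the netlify fork of aws-lambda-rust-runtime (listed under libraries) and presumably deserializes the event with a library rather than formatting JSON by hand.

```rust
/// Escapes a string so it can be embedded inside a JSON string literal.
/// Hypothetical helper for this sketch; a real handler would use a JSON library.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters must be written as \u00XX in JSON.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Builds the JSON reply for a given first name, mirroring the observed
/// {"firstName": "world"} -> {"message":"Hello, world!"} behaviour.
fn greeting_response(first_name: &str) -> String {
    format!(r#"{{"message":"Hello, {}!"}}"#, json_escape(first_name))
}
```

Escaping the name keeps the output valid JSON even if the caller sends quotes or control characters in firstName.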

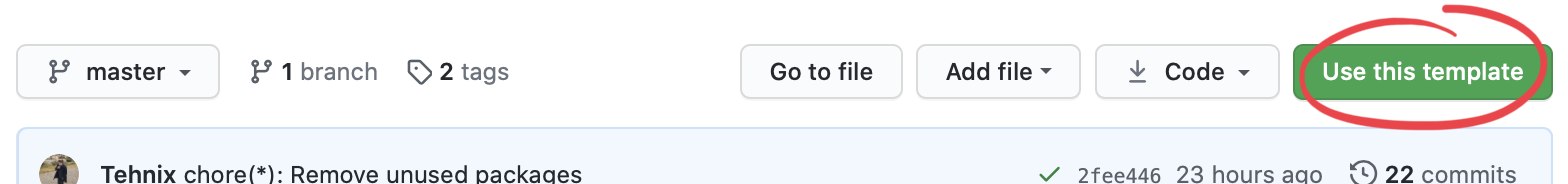

Use this repo as a template to get started quickly! If you are interested in a more fully-featured version of this, check out 🚧 patterns-serverless-rust 🚧 for how to expose a GraphQL endpoint and use DynamoDB.

- Building

- Deployment using CDK

- Development using LocalStack

- GitHub Actions (CI/CD)

- Benchmarks using AWS XRay

- Libraries

- Contributing

An overview of commands (all prefixed with npm run):

| Command | Description | Purpose |
|---|---|---|
| build | Build the Rust executable for release | 📦 |
| build:debug | Build the Rust executable for debug | 📦 |
| build:archive | Create a ./lambda.zip for deployment using the AWS CLI | 📦 |
| build:clean | Clean build artifacts from target/cdk | 📦 |
| deploy | Clean and build a new executable, and deploy it via CDK | 📦 + 🚢 |
| cdk:bootstrap | Bootstrap necessary resources on first usage of CDK in a region | 🚢 |
| cdk:deploy | Deploy this stack to your default AWS account/region | 🚢 |
| cdklocal:start | Start the LocalStack Docker image | 👩‍💻 |
| cdklocal:bootstrap | Bootstrap necessary resources for CDK against LocalStack | 👩‍💻 |
| cdklocal:deploy | Deploy this stack to LocalStack | 👩‍💻 |

We build our executable by running npm run build.

Behind the scenes, the build NPM script does the following:

- Adds the x86_64-unknown-linux-musl toolchain

- Runs cargo build --release --target x86_64-unknown-linux-musl

In other words, we cross-compile a static binary for x86_64-unknown-linux-musl, put the executable in target/cdk/release under the name bootstrap, and CDK uses that folder as its asset. With custom runtimes, AWS Lambda looks for an executable called bootstrap, which is why we need the renaming step.
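The renaming step can be sketched as a small std-only Rust function. This is an illustration under assumptions: `stage_bootstrap` is a hypothetical name, the file name of the compiled binary under target/x86_64-unknown-linux-musl/release depends on your Cargo.toml, and the actual npm script may simply use a copy command.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Copies a compiled executable into `out_dir` under the name `bootstrap`,
/// the file name a Lambda custom runtime (provided.al2) executes.
fn stage_bootstrap(built_binary: &Path, out_dir: &Path) -> io::Result<PathBuf> {
    // e.g. target/cdk/release; created if it does not exist yet.
    fs::create_dir_all(out_dir)?;
    let target = out_dir.join("bootstrap");
    fs::copy(built_binary, &target)?;
    // Lambda must be able to execute the file, so set rwxr-xr-x explicitly on Unix.
    #[cfg(unix)]
    {
        use std::os::unix::fs::PermissionsExt;
        fs::set_permissions(&target, fs::Permissions::from_mode(0o755))?;
    }
    Ok(target)
}
```

For example, stage_bootstrap(Path::new("target/x86_64-unknown-linux-musl/release/my-binary"), Path::new("target/cdk/release")), where my-binary is a placeholder for your crate's binary name.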

We build and deploy by running npm run deploy, or just npm run cdk:deploy if you have already run npm run build.

A couple of notes:

- If this is the first CDK deployment ever on your AWS account/region, run npm run cdk:bootstrap first. This creates the necessary CDK stack resources on the cloud.

- The CDK deployment bundles the target/cdk/release folder as its assets. This is where the bootstrap file needs to be located (handled by npm run build).
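Why bootstrap matters: with the provided.al2 runtime, Lambda starts the bootstrap executable, which then pulls events from the Lambda Runtime API over HTTP, using the host given in the AWS_LAMBDA_RUNTIME_API environment variable. The runtime library handles this for us; the sketch below only builds the documented endpoint URLs to show the shape of that loop (GET the next event, then POST a response or an error keyed by the request ID). The function names are hypothetical.

```rust
/// Version prefix of the Lambda Runtime API used by custom runtimes.
const API_VERSION: &str = "2018-06-01";

/// Reads the Runtime API host (e.g. "127.0.0.1:9001"), which Lambda sets for bootstrap.
fn runtime_api_host() -> Option<String> {
    std::env::var("AWS_LAMBDA_RUNTIME_API").ok()
}

/// URL the bootstrap loop polls with HTTP GET to receive the next event.
fn next_invocation_url(api_host: &str) -> String {
    format!("http://{}/{}/runtime/invocation/next", api_host, API_VERSION)
}

/// URL the handler's result is POSTed to; `request_id` comes from the
/// Lambda-Runtime-Aws-Request-Id header of the "next" response.
fn invocation_response_url(api_host: &str, request_id: &str) -> String {
    format!("http://{}/{}/runtime/invocation/{}/response", api_host, API_VERSION, request_id)
}

/// URL used to report that the handler failed for a given request.
fn invocation_error_url(api_host: &str, request_id: &str) -> String {
    format!("http://{}/{}/runtime/invocation/{}/error", api_host, API_VERSION, request_id)
}
```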

Generate our build assets

$ npm run build

Deploy the Rust asset

To deploy your function, call npm run cdk:deploy,

$ npm run cdk:deploy

...

sls-rust: deploying...

[0%] start: Publishing bdbf8354358bc096823baac946ba64130b6397ff8e7eda2f18d782810e158c39:current

[100%] success: Published bdbf8354358bc096823baac946ba64130b6397ff8e7eda2f18d782810e158c39:current

sls-rust: creating CloudFormation changeset...

[██████████████████████████████████████████████████████████] (5/5)

✅ sls-rust

Outputs:

sls-rust.entryArn = arn:aws:lambda:eu-west-1:xxxxxxxxxxxxxx:function:sls-rust-main

Stack ARN:

arn:aws:cloudformation:eu-west-1:xxxxxxxxxxxxxx:stack/sls-rust/xxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxx

💡 The security prompt is automatically disabled on CIs that set CI=true. You can remove this check by setting --require-approval never in the cdk:deploy npm command.
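The CI check described in this note can be sketched as follows: add --require-approval never only when CI is set to true. This is a hypothetical illustration; `cdk_deploy_args` is not part of the repository, and the exact condition used by the cdk:deploy npm script is an assumption.

```rust
/// Hypothetical helper: builds the `cdk deploy` arguments, skipping CDK's
/// interactive approval prompt only when the CI variable equals "true".
fn cdk_deploy_args(ci_env: Option<&str>) -> Vec<&'static str> {
    let mut args = vec!["deploy"];
    if ci_env == Some("true") {
        // `--require-approval never` stops CDK from asking for confirmation
        // before deploying security-sensitive changes such as IAM roles.
        args.extend(["--require-approval", "never"]);
    }
    args
}
```

In a real program the input would come from cdk_deploy_args(std::env::var("CI").ok().as_deref()).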

Validate your CDK CloudFormation

If you want to check whether your CDK-generated CloudFormation is valid, you can do that via,

$ npm run cdk:synth

Compare local against deployed

And finally, if you want to see a diff between your deployed stack and your local stack,

$ npm run cdk:diff

Deployment using AWS CLI

For real usage we deploy using AWS CDK, but you can dip your toes in by deploying the Rust function via the AWS CLI.

We'll do a couple of additional steps for the first-time setup. Only step 5 is necessary after having done this once:

1. Set up a role to use with our Lambda function.

2. Attach policies to that role to be able to actually do something.

3. Deploy the Lambda function using the lambda.zip we've built.

4. Invoke the function with a test payload.

5. (Optional) Update the Lambda function with a new lambda.zip.

Generate our build assets

$ npm run build && npm run build:archive

Set up the IAM Role

$ aws iam create-role \

--role-name sls-rust-test-execution \

--assume-role-policy-document \

'{"Version": "2012-10-17","Statement": [{ "Effect": "Allow", "Principal": {"Service": "lambda.amazonaws.com"}, "Action": "sts:AssumeRole"}]}'

We also need to attach some basic policies to the IAM Role, so that the function can write its logs to CloudWatch (AWSLambdaBasicExecutionRole) and so that XRay traces work (AWSXRayDaemonWriteAccess),

$ aws iam attach-role-policy \

--role-name sls-rust-test-execution \

--policy-arn arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole

$ aws iam attach-role-policy \

--role-name sls-rust-test-execution \

--policy-arn arn:aws:iam::aws:policy/AWSXRayDaemonWriteAccess

Deploy our function

$ aws lambda create-function \

--function-name sls-rust-test \

--handler doesnt.matter \

--cli-binary-format raw-in-base64-out \

--zip-file fileb://./lambda.zip \

--runtime provided.al2 \

--role arn:aws:iam::$(aws sts get-caller-identity | jq -r .Account):role/sls-rust-test-execution \

--environment Variables={RUST_BACKTRACE=1} \

--tracing-config Mode=Active

💡 If you do not have jq installed, you can replace the $(aws sts get-caller-identity | jq -r .Account) call with your AWS account ID.
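The --role argument is an IAM role ARN assembled from your account ID and the role name. As a small worked example of that string, here is a Rust sketch; `role_arn` is a hypothetical helper, and the validation reflects the fact that AWS account IDs are 12 digits.

```rust
/// Builds the IAM role ARN passed to `--role`. IAM ARNs leave the region
/// field empty, hence the double colon after "iam".
fn role_arn(account_id: &str, role_name: &str) -> Result<String, String> {
    // The account ID is what `aws sts get-caller-identity | jq -r .Account` prints.
    if account_id.len() != 12 || !account_id.chars().all(|c| c.is_ascii_digit()) {
        return Err(format!("expected a 12-digit AWS account ID, got {:?}", account_id));
    }
    Ok(format!("arn:aws:iam::{}:role/{}", account_id, role_name))
}
```

For example, role_arn("123456789012", "sls-rust-test-execution") returns Ok("arn:aws:iam::123456789012:role/sls-rust-test-execution").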

Invoke our function

$ aws lambda invoke \

--function-name sls-rust-test \

--cli-binary-format raw-in-base64-out \

--payload '{"firstName": "world"}' \

tmp-output.json > /dev/null && cat tmp-output.json && rm tmp-output.json

{"message":"Hello, world!"}

(Optional) Update the function

We can also update the function code again, after creating a new asset lambda.zip,

$ aws lambda update-function-code \

--cli-binary-format raw-in-base64-out \

--function-name sls-rust-test \

--zip-file fileb://lambda.zip

Clean up the function

$ aws lambda delete-function --function-name sls-rust-test

$ aws iam detach-role-policy --role-name sls-rust-test-execution --policy-arn arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole

$ aws iam detach-role-policy --role-name sls-rust-test-execution --policy-arn arn:aws:iam::aws:policy/AWSXRayDaemonWriteAccess

$ aws iam delete-role --role-name sls-rust-test-execution

LocalStack allows us to deploy our CDK services directly to our local environment:

- npm run cdklocal:start to start the LocalStack services.

- npm run cdklocal:bootstrap to create the necessary CDK stack resources in LocalStack.

- npm run cdklocal:deploy to deploy our stack.

- Target the local services from our application with cdklocal, or by setting the endpoint option on the AWS CLI, e.g. aws --endpoint-url=http://localhost:4566.
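Invoking on AWS and on LocalStack differs only by the --endpoint-url option. A hypothetical Rust sketch that builds the aws lambda invoke arguments for either target follows; `invoke_args` is not part of the repository, the tmp-output.json file name mirrors the CLI examples in this README, and LocalStack is assumed to listen on http://localhost:4566 as above.

```rust
/// LocalStack's edge endpoint, as used in the CLI examples.
const LOCALSTACK_ENDPOINT: &str = "http://localhost:4566";

/// Hypothetical helper: builds `aws lambda invoke` arguments, optionally
/// pointed at LocalStack instead of the real AWS endpoint.
fn invoke_args(function_name: &str, payload: &str, local: bool) -> Vec<String> {
    let mut args = Vec::new();
    if local {
        // Placed before the service name, as in the LocalStack example.
        args.push(format!("--endpoint-url={}", LOCALSTACK_ENDPOINT));
    }
    for a in [
        "lambda", "invoke",
        "--function-name", function_name,
        "--cli-binary-format", "raw-in-base64-out",
        "--payload", payload,
        "tmp-output.json", // the CLI writes the function's response here
    ] {
        args.push(a.to_string());
    }
    args
}
```

These arguments can be passed to std::process::Command::new("aws").args(...), e.g. with the function name sls-rust-main and the payload {"firstName": "world"}.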

We can use cargo watch (via cargo install cargo-watch) to continuously build a debug build of our application,

$ cargo watch -s 'npm run build:debug'

If you want to test the application through the AWS CLI, the following should do the trick,

$ aws --endpoint-url=http://localhost:4566 lambda invoke \

--function-name sls-rust-main \

--cli-binary-format raw-in-base64-out \

--payload '{"firstName": "world"}' \

tmp-output.json > /dev/null && cat tmp-output.json && rm tmp-output.json

{"message":"Hello, world!"}

Using GitHub Actions allows us to have an efficient CI/CD setup with minimal work.

| Workflow | Trigger | Purpose | Environment Variables |
|---|---|---|---|
| ci | push | Continuously test the build along with linting, formatting, best practices (clippy), and validate deployment against LocalStack | |
| pre-release | Pre-release using GitHub Releases | Run benchmark suite | BENCHMARK_AWS_ACCESS_KEY_ID, BENCHMARK_AWS_SECRET_ACCESS_KEY |
| pre-release | Pre-release using GitHub Releases | Deploy to a QA or staging environment | PRE_RELEASE_AWS_ACCESS_KEY_ID, PRE_RELEASE_AWS_SECRET_ACCESS_KEY |
| release | Release using GitHub Releases | Deploy to production environment | RELEASE_AWS_ACCESS_KEY_ID, RELEASE_AWS_SECRET_ACCESS_KEY |

The CI will work seamlessly without any manual steps, but for deployments via GitHub Releases to work, you will need to add the variables in the table above as GitHub secrets in your repository.

These are used in the .github/workflows/release.yml and .github/workflows/pre-release.yml workflows for deploying the CDK stack whenever a GitHub pre-release/release is made.
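Before cutting a release, it can help to check that the expected credentials are present. Here is a hypothetical pre-flight check in Rust, assuming the variable names follow the PREFIX_AWS_ACCESS_KEY_ID / PREFIX_AWS_SECRET_ACCESS_KEY pattern from the table (prefixes BENCHMARK, PRE_RELEASE and RELEASE); `missing_credentials` is not part of the repository's workflows.

```rust
/// Hypothetical check: returns the credential variables for `prefix` that are
/// missing or empty according to `lookup` (e.g. a wrapper around std::env::var).
fn missing_credentials<F>(prefix: &str, lookup: F) -> Vec<String>
where
    F: Fn(&str) -> Option<String>,
{
    ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"]
        .iter()
        .map(|suffix| format!("{}_{}", prefix, suffix))
        // Treat an empty value the same as an unset one.
        .filter(|name| lookup(name.as_str()).map_or(true, |v| v.is_empty()))
        .collect()
}
```

Against the process environment this would be called as missing_credentials("RELEASE", |k| std::env::var(k).ok()).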

Since we have enabled tracing: lambda.Tracing.ACTIVE in CDK and tracing-config Mode=Active in the CLI, we will get XRay traces for our AWS Lambda invocations.

You can check out each trace in the AWS Console inside the XRay service, which is extremely valuable for figuring out timings between services, slow AWS SDK calls, annotating cost centers in your code, and much more.

We can benchmark our performance using npm run benchmark, which will deploy the AWS Lambda to your AWS account, invoke it a bunch of times and trigger cold starts, along with gathering up all the AWS XRay traces into a neat table.

Below are two charts generated by the benchmark; you can see the raw data in the response-times table.

- 🔵: Average cold startup times

- 🔴: Average warm startup times

- 🔵: Fastest warm response time

- 🔴: Slowest warm response time

- 🟡: Fastest cold response time

- 🟠: Slowest cold response time
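The charts report averages and extremes for cold and warm invocations. As a hedged illustration of how such figures can be derived from raw response times, here is a std-only Rust sketch. `Summary`, `summarize` and `summarize_by_start` are hypothetical names, the input format (milliseconds plus a cold-start flag) is an assumption, and the actual benchmark script may compute these values differently from the XRay traces.

```rust
/// Average, fastest and slowest response time (milliseconds) for one group.
#[derive(Debug, PartialEq)]
struct Summary {
    average: f64,
    fastest: f64,
    slowest: f64,
}

/// Summarizes a set of response times; returns None when there are no samples.
fn summarize(times_ms: &[f64]) -> Option<Summary> {
    if times_ms.is_empty() {
        return None;
    }
    let sum: f64 = times_ms.iter().sum();
    let fastest = times_ms.iter().cloned().fold(f64::INFINITY, f64::min);
    let slowest = times_ms.iter().cloned().fold(f64::NEG_INFINITY, f64::max);
    Some(Summary { average: sum / times_ms.len() as f64, fastest, slowest })
}

/// Splits (duration_ms, was_cold_start) samples and returns (cold, warm) summaries.
fn summarize_by_start(samples: &[(f64, bool)]) -> (Option<Summary>, Option<Summary>) {
    let cold: Vec<f64> = samples.iter().filter(|s| s.1).map(|s| s.0).collect();
    let warm: Vec<f64> = samples.iter().filter(|s| !s.1).map(|s| s.0).collect();
    (summarize(&cold), summarize(&warm))
}
```

Keeping cold and warm samples separate matters because a single slow cold start would otherwise dominate the warm averages.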

Benchmarks can be triggered in the CI by setting up its environment variables and creating a pre-release via GitHub Releases.

We are using a couple of libraries, in various states of maturity/release:

- The netlify fork of aws-lambda-rust-runtime pending on #274.

- We will need the musl tools, which we use instead of glibc, via apt-get install musl-tools for Ubuntu or brew tap SergioBenitez/osxct && brew install FiloSottile/musl-cross/musl-cross for macOS.

- aws-cdk for deploying to AWS, using CloudFormation under the hood. We'll use their support for Custom Runtimes.

- The aws-cdk fork of localstack for a local development setup.

- cargo watch so we can develop using cargo watch, installable via cargo install cargo-watch.

Have any improvements or ideas? Don't be afraid to create an issue to discuss what's on your mind!