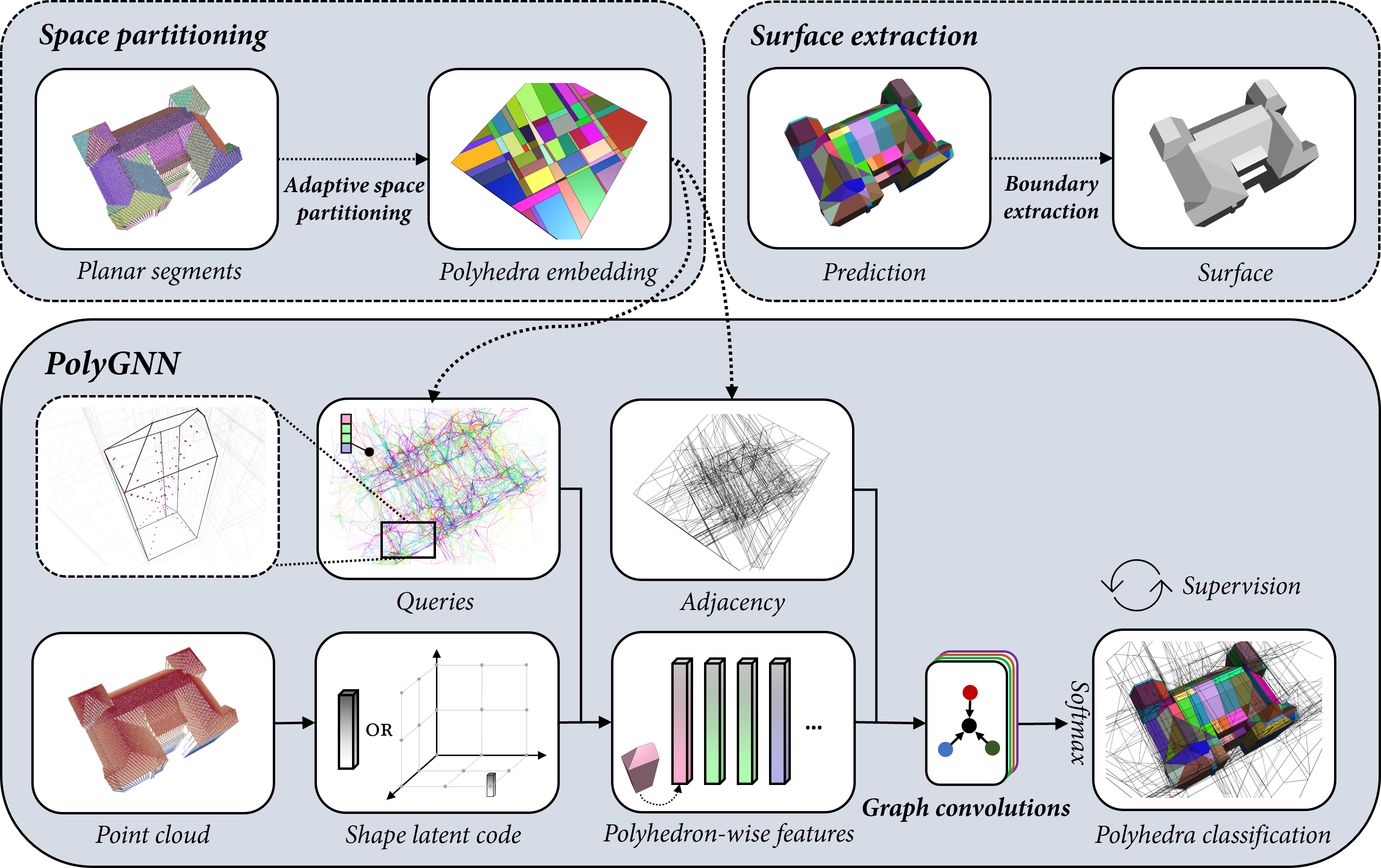

PolyGNN is an implementation of the paper PolyGNN: Polyhedron-based Graph Neural Network for 3D Building Reconstruction from Point Clouds. PolyGNN learns a piecewise planar occupancy function, supported by polyhedral decomposition, for efficient and scalable 3D building reconstruction.
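In occupancy-based polyhedral reconstruction of this kind, each convex cell of the decomposition is labeled inside or outside the building. The output surface then consists of the planar faces shared by an inside cell and an outside cell. The sketch below illustrates only that final extraction step on a toy cell-adjacency graph. The dictionary/list representation, the exterior id -1, and the 0.5 threshold are assumptions for illustration, not PolyGNN's actual data structures.

```python
from typing import Dict, List, Set, Tuple

# A face is identified by the pair of cells it separates (illustrative representation).
Face = Tuple[int, int]

# Hypothetical id for the unbounded space outside the decomposition; it never
# appears in `occupancy`, so it is always treated as outside.
EXTERIOR = -1


def boundary_faces(occupancy: Dict[int, float],
                   adjacency: List[Face],
                   threshold: float = 0.5) -> List[Face]:
    """Return the faces that separate an inside cell from an outside cell."""
    inside: Set[int] = {cell for cell, p in occupancy.items() if p >= threshold}
    return [(a, b) for a, b in adjacency if (a in inside) != (b in inside)]


if __name__ == "__main__":
    # Four cells in a row; cells 0 and 1 are predicted occupied.
    occupancy = {0: 0.9, 1: 0.8, 2: 0.1, 3: 0.2}
    adjacency = [(0, 1), (1, 2), (2, 3), (0, EXTERIOR), (3, EXTERIOR)]
    print(boundary_faces(occupancy, adjacency))  # [(1, 2), (0, -1)]
```

Faces between two cells with the same label are dropped. Every selected face therefore lies on the interface between the inside and outside cell sets, which is what makes the result piecewise planar.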

Clone the repository:

git clone https://github.com/chenzhaiyu/polygnn && cd polygnn

Create a conda environment with all dependencies:

conda env create -f environment.yml && conda activate polygnn

Alternatively, set up the environment manually. Create a conda environment and install mamba for faster dependency solving:

conda create --name polygnn python=3.10 && conda activate polygnn

conda install mamba -c conda-forge

Install the required dependencies:

mamba install pytorch torchvision sage=10.0 pytorch-cuda=11.7 pyg=2.3 pytorch-scatter pytorch-sparse pytorch-cluster torchmetrics rtree -c pyg -c pytorch -c nvidia -c conda-forge

pip install abspy hydra-core hydra-colorlog omegaconf trimesh tqdm wandb plyfile
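After either installation route, a quick way to confirm that the environment is complete is to look up each dependency's import name without actually importing it. The mapping from package to module name below (for example pyg to torch_geometric and hydra-core to hydra) is an assumption. hydra-colorlog is omitted because it installs as a Hydra plugin rather than as a top-level module.

```python
import importlib.util
from typing import Iterable, List

# Assumed import names for the packages installed above (adjust if yours differ).
REQUIRED_MODULES = [
    "torch", "torchvision", "torch_geometric", "torch_scatter", "torch_sparse",
    "torch_cluster", "torchmetrics", "rtree", "sage", "abspy", "hydra",
    "omegaconf", "trimesh", "tqdm", "wandb", "plyfile",
]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names that cannot be found in the current environment."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # find_spec raises for dotted names whose parent package is absent
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("All dependencies found." if not missing else "Missing: " + ", ".join(missing))
```

Run it inside the activated polygnn environment, for example as a hypothetical check_env.py script.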

Download the mini dataset and pretrained weights:

python download.py dataset=mini

In case you encounter issues (e.g., Google Drive limits), manually download the data and weights here, then extract the weights into ./checkpoints/mini and the data into ./data/mini.

The mini dataset contains 200 random instances (~0.07% of the full dataset).

Train PolyGNN on the mini dataset:

python train.py dataset=mini

The data will be preprocessed automatically the first time you start training.

Evaluate PolyGNN; evaluate.save=true additionally saves the predictions:

python test.py dataset=mini evaluate.save=true

Generate meshes from the predictions:

python reconstruct.py dataset=mini reconstruct.type=mesh

Remap the meshes to their original coordinate reference system (CRS):

python remap.py dataset=mini

Generate reconstruction statistics:

python stats.py dataset=mini

To check the available configurations for training:

python train.py --cfg job

To check the available configurations for evaluation:

python test.py --cfg job

Alternatively, review the configuration file conf/config.yaml.
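The evaluation steps on the mini dataset are meant to run in order: test.py saves predictions, reconstruct.py turns them into meshes, and remap.py moves those meshes back to their original CRS. A small driver such as the following chains them and stops at the first failing step. It is a hypothetical helper, not part of PolyGNN (e.g. saved as run_mini.py in the repository root), and it only reuses the command-line overrides shown in this README.

```python
import subprocess
import sys
from typing import List


def pipeline_commands(dataset: str = "mini") -> List[List[str]]:
    """Evaluation-to-statistics steps from this README, as argv lists."""
    override = f"dataset={dataset}"
    return [
        [sys.executable, "test.py", override, "evaluate.save=true"],
        [sys.executable, "reconstruct.py", override, "reconstruct.type=mesh"],
        [sys.executable, "remap.py", override],
        [sys.executable, "stats.py", override],
    ]


def run_pipeline(dataset: str = "mini", dry_run: bool = False) -> None:
    """Print each step and, unless dry_run is set, execute it."""
    for cmd in pipeline_commands(dataset):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError, halting the chain on failure
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_pipeline(sys.argv[1] if len(sys.argv) > 1 else "mini")
```

Calling run_pipeline("mini", dry_run=True) only prints the commands, which is handy for checking the overrides before a long run.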

PolyGNN requires polyhedron-based graphs as input. To prepare them from your own point clouds:

- Extract planar primitives using tools such as Easy3D or GoCoPP, preferably in VertexGroup format.

- Build a CellComplex from the primitives using abspy. Example code:

from abspy import VertexGroup, CellComplex
vertex_group = VertexGroup(vertex_group_path, quiet=True)
cell_complex = CellComplex(vertex_group.planes, vertex_group.aabbs, vertex_group.points_grouped, build_graph=True, quiet=True)
cell_complex.prioritise_planes(prioritise_verticals=True)
cell_complex.construct()
cell_complex.save(complex_path)

Here vertex_group_path is the path to the extracted primitives and complex_path is the output path for the cell complex. Alternatively, you can modify CityDataset or TestOnlyDataset to accept inputs directly from a VertexGroup or a reference mesh.

- Structure your dataset similarly to the provided mini dataset:

YOUR_DATASET_NAME
└── raw
    ├── 03_meshes
    │   ├── DEBY_LOD2_104572462.obj
    │   ├── DEBY_LOD2_104575306.obj
    │   └── DEBY_LOD2_104575493.obj
    ├── 04_pts
    │   ├── DEBY_LOD2_104572462.npy
    │   ├── DEBY_LOD2_104575306.npy
    │   └── DEBY_LOD2_104575493.npy
    ├── 05_complexes
    │   ├── DEBY_LOD2_104572462.cc
    │   ├── DEBY_LOD2_104575306.cc
    │   └── DEBY_LOD2_104575493.cc
    ├── testset.txt
    └── trainset.txt

- To train or evaluate PolyGNN using your dataset, run the following commands.

Start training:

python train.py dataset=YOUR_DATASET_NAME

Start evaluation:

python test.py dataset=YOUR_DATASET_NAME

For evaluation only, you can instantiate your dataset as a TestOnlyDataset, as in this line.
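Before training on your own data, it can help to check that every instance has all of its files. The sketch below assumes, as in the mini dataset, that an instance's mesh, point cloud, and cell complex share the same base name. It also assumes the primitives are stored as one <name>.vg file per instance in a separate folder, which is a hypothetical convention. build_complexes repeats the abspy calls from the CellComplex example for a whole folder and writes the results into raw/05_complexes. For evaluation-only data without reference meshes, missing .obj files may be expected.

```python
from pathlib import Path
from typing import Dict, List

# Sub-folders and file extensions copied from the mini-dataset layout.
LAYOUT = {"03_meshes": ".obj", "04_pts": ".npy", "05_complexes": ".cc"}
SPLITS = ("trainset.txt", "testset.txt")


def expected_paths(raw: Path, stem: str) -> List[Path]:
    """Files that one instance (e.g. DEBY_LOD2_104572462) should have under raw/."""
    return [raw / folder / (stem + ext) for folder, ext in LAYOUT.items()]


def missing_files(dataset_root: str) -> Dict[str, List[str]]:
    """Map each instance found in raw/04_pts (plus the key 'splits') to its absent files."""
    raw = Path(dataset_root) / "raw"
    report: Dict[str, List[str]] = {}
    absent_splits = [str(raw / s) for s in SPLITS if not (raw / s).exists()]
    if absent_splits:
        report["splits"] = absent_splits
    pts_dir = raw / "04_pts"
    if pts_dir.is_dir():
        for pts in sorted(pts_dir.glob("*.npy")):
            absent = [str(p) for p in expected_paths(raw, pts.stem) if not p.exists()]
            if absent:
                report[pts.stem] = absent
    return report


def build_complexes(vg_dir: str, dataset_root: str) -> None:
    """Write raw/05_complexes/<name>.cc for every <name>.vg in vg_dir (hypothetical input folder)."""
    # Imported here so the layout check above runs even without abspy installed.
    from abspy import VertexGroup, CellComplex

    out_dir = Path(dataset_root) / "raw" / "05_complexes"
    out_dir.mkdir(parents=True, exist_ok=True)
    for vg_path in sorted(Path(vg_dir).glob("*.vg")):
        # Same abspy calls as the CellComplex example.
        vertex_group = VertexGroup(str(vg_path), quiet=True)
        cell_complex = CellComplex(vertex_group.planes, vertex_group.aabbs,
                                   vertex_group.points_grouped, build_graph=True, quiet=True)
        cell_complex.prioritise_planes(prioritise_verticals=True)
        cell_complex.construct()
        cell_complex.save(str(out_dir / (vg_path.stem + ".cc")))


if __name__ == "__main__":
    import sys
    for name, files in missing_files(sys.argv[1]).items():
        print(name, "->", ", ".join(files))
```

Running the script with your dataset folder as its argument prints nothing when the layout is complete.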

TODOs:

- Demo with mini data and pretrained weights

- Short tutorial for getting started

- Host the entire dataset (>200GB)

If you use PolyGNN in a scientific work, please consider citing the paper:

@article{chen2024polygnn,
  title = {PolyGNN: Polyhedron-based graph neural network for 3D building reconstruction from point clouds},
  journal = {ISPRS Journal of Photogrammetry and Remote Sensing},
  volume = {218},
  pages = {693-706},
  year = {2024},
  issn = {0924-2716},
  doi = {https://doi.org/10.1016/j.isprsjprs.2024.09.031},
  url = {https://www.sciencedirect.com/science/article/pii/S0924271624003691},
  author = {Zhaiyu Chen and Yilei Shi and Liangliang Nan and Zhitong Xiong and Xiao Xiang Zhu},
}