(collaborators not at this hackathon: Jennifer Stiso, Dale Zhou, Jordan Dworkin, Danielle Bassett)

Registered Brainhack Global 2020 Event: Montreal, Canada

Project Description:

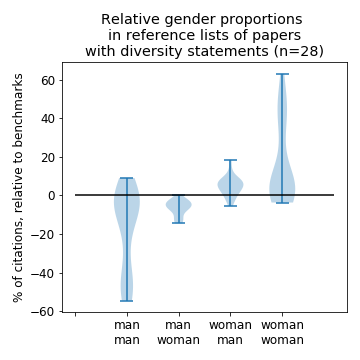

Gender imbalance is a big problem in academic research, and it is even reflected in our citation behavior. Our goal is to assess the 'impact' of the cleanBib tool, which was created to make researchers more aware of this problem. This tool can be used to assess the gender balance of authors in a reference list. Specifically, we want to find papers that cite the cleanBib code/paper/preprint, and see whether those citing papers have better gender balance in their reference lists than papers that do not.

Here's a preliminary figure we made by manually searching for citing papers, up to October 2020. Our Goal 0 (see below) is to update this manual figure up to January 2021. Our Goals 1-5 are meant to automate this process.

0. Manual inspection of citing papers' diversity statement. As a first step, we're doing some manual data collection and visualization. We manually searched for papers that cite the code, paper, and/or preprint associated with cleanBib, and then compared their citations' gender diversity to the benchmarks reported in the paper. You can see our results in this notebook as of October 2020. We're hoping to update these figures with citing papers up to January 2021.

Here we'd benefit from skills in Python, working with APIs, and causality (we'll do these steps in order):

1. List citing papers. Automatically list papers that cite the code, paper, and/or preprint associated with cleanBib. We're currently at a stage where we can do this and we can get the gender guesses for the citing papers' first and last authors, but not for the citing papers' references. All this is currently done in this script.

2. List citing papers' citations.

3. Guess genders of the first and last authors of the citing papers' citations using the Gender API

4. Group citations into one of the following classes, using the gender guesses from the previous step:

Man & Man (i.e., man first author & man last author)

Woman & Man

Man & Woman

Woman & Woman

5. Compare class distributions. Compare the numbers of papers in each class for a) papers that cite the cleanBib code/paper/preprint, versus b) papers that do not. For the papers that do not, we could either use the numbers reported in the paper, or gather similar papers that do not cite the cleanBib code/paper/preprint and run a causal analysis.
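
Steps 4 and 5 could be sketched roughly as follows. This is only an illustration, not the project's actual script: it assumes the gender guesses from step 3 are already available as the strings "man" or "woman" (anything else is treated as unknown and skipped). The function names and the toy data are hypothetical.

```python
from collections import Counter

# The four classes from step 4: first author & last author.
CLASSES = ("Man & Man", "Woman & Man", "Man & Woman", "Woman & Woman")


def classify_citation(first_author_gender, last_author_gender):
    """Return the class label for one citation, or None if a guess is missing."""
    names = {"man": "Man", "woman": "Woman"}
    first = names.get(first_author_gender)
    last = names.get(last_author_gender)
    if first is None or last is None:
        return None  # unknown gender guess: leave this citation out
    return f"{first} & {last}"


def class_proportions(gender_pairs):
    """Return the share of citations in each class, ignoring unclassified ones."""
    labels = [classify_citation(first, last) for first, last in gender_pairs]
    counts = Counter(label for label in labels if label is not None)
    total = sum(counts.values())
    if total == 0:
        return {c: 0.0 for c in CLASSES}
    return {c: counts[c] / total for c in CLASSES}


def proportion_differences(group_a, group_b):
    """Return per-class differences in proportions (group_a minus group_b)."""
    return {c: group_a[c] - group_b[c] for c in CLASSES}


# Toy data only: (first author guess, last author guess) for each citation.
citing_group = [("man", "man"), ("woman", "man"), ("man", "woman"), ("woman", "woman")]
comparison_group = [("man", "man"), ("man", "man"), ("woman", "man"), ("unknown", "man")]

differences = proportion_differences(
    class_proportions(citing_group), class_proportions(comparison_group)
)
for label in CLASSES:
    print(f"{label}: {differences[label]:+.2f}")
```

A raw difference in proportions like this is only descriptive. The causal analysis mentioned in step 5 would also need a carefully chosen comparison set of similar papers.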

Good first issues:

Update our manually collected info on the citation diversity statements of papers citing the cleanBib code/paper/preprint. This would involve searching for citing papers on, e.g., Google Scholar, opening the papers, and copying and pasting information into a spreadsheet (DOI, authors, citation diversity statement, etc.).

Review our gender-related language in our documentation / presentations to ensure we're discussing these issues in an appropriate way.

Skills:

Techy skills that would be helpful (but not necessary)

I added all of the labels I want to associate to my project

Project Submission

Submission checklist

Once the issue is submitted, please check items in this list as you add under ‘Additional project info’

Link to your project: could be a code repository, a shared document, etc.

Goals for Brainhack Global 2020: describe what you want to achieve during this brainhack.

Flesh out at least 2 “good first issues”: those are tasks that do not require any prior knowledge about your project, could be defined as issues in a GitHub repository, or in a shared document.

Skills: list skills that would be particularly suitable for your project. We ask you to include at least one non-coding skill. Use the issue labels for this purpose.

Chat channel: A link to a chat channel that will be used during the Brainhack Global 2020 event. This can be an existing channel or a new one. We recommend using the Brainhack space on Mattermost.

Optionally, you can also include information about:

Number of participants required.

Twitter-sized summary of your project pitch.

Provide an image of your project for the Brainhack Global 2020 website.

We would like to think about how you will credit and onboard new members to your project. If you’d like to share your thoughts with future project participants, you can include information about:

Specify how you will acknowledge contributions (e.g. listing members on a contributing page).

Provide links to onboarding documents if you have some:

Dear @koudyk, it is amazing to have this project as part of Brainhack Montreal 🎉 Your project seems ready; it is only missing an image, which we need to create its card like the others here.

If you can drop the image anywhere in the issue then I will publish the project and create its card for you.

Again welcome to Brainhack Montreal and hope you enjoy your project and participation 🤗

Thank you @koudyk, that should do it; it already tells the whole story beautifully ✨ 💯 Let me publish now. In the meantime, if you would like to make any changes or additions to the issue, feel free. We will just approve the PR and it will be updated on our webpage.

I wish you all the best with your participation 🤗

Project info

Title: CleanBibImpact: Do papers with a Citation Diversity Statement have more diverse citations?

Project lead: Kendra Oudyk (Twitter: @koudyk, Mattermost: @kendra.oudyk)

Project collaborators: You? :)

Data to use:

Link to project repository/sources:

https://github.com/koudyk/cleanBibImpact

Goals for Brainhack Global 2020:

Few skills needed:

Non-techy skills

Tools/Software/Methods to Use:

Communication channels:

Mattermost channel: cleanBibImpact

Project labels
