Yiming Li, Zixun Wang, Ziyan An, Yiqi Zhong, Siheng Chen, Chen Feng

[NOTICE] This repository provides a PyTorch benchmark implementation of our ongoing work, V2X-Sim: A Virtual Collaborative Perception Dataset and Benchmark for Autonomous Driving. We currently release V2X-Sim 1.0 with LiDAR-based V2V data, which was published as part of DiscoNet. V2X-Sim 2.0, with multi-modal multi-agent V2X data, will be released soon.

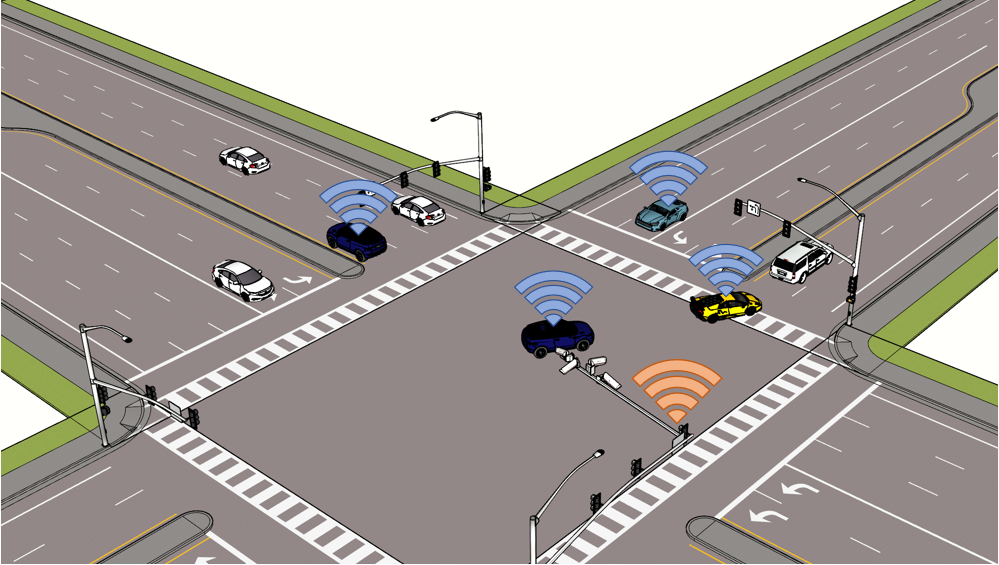

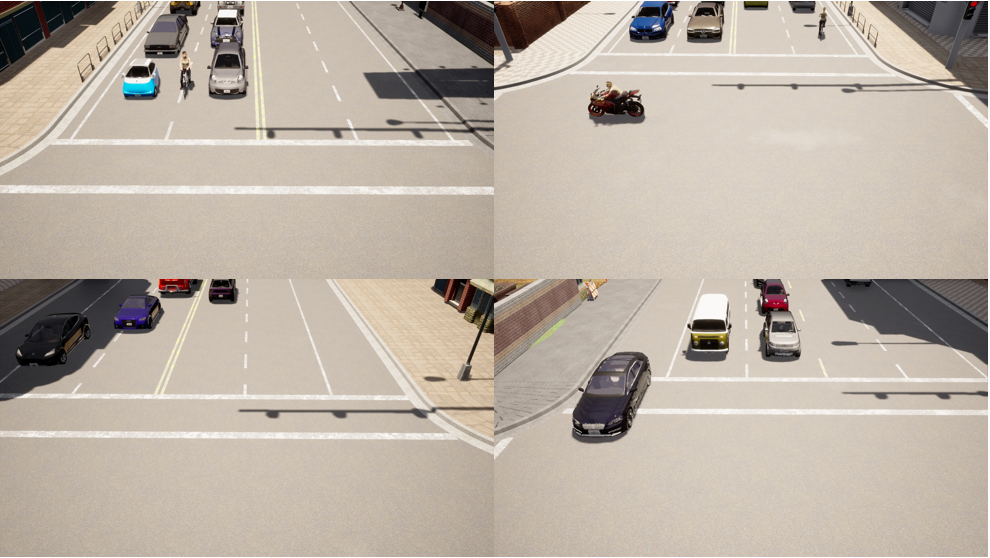

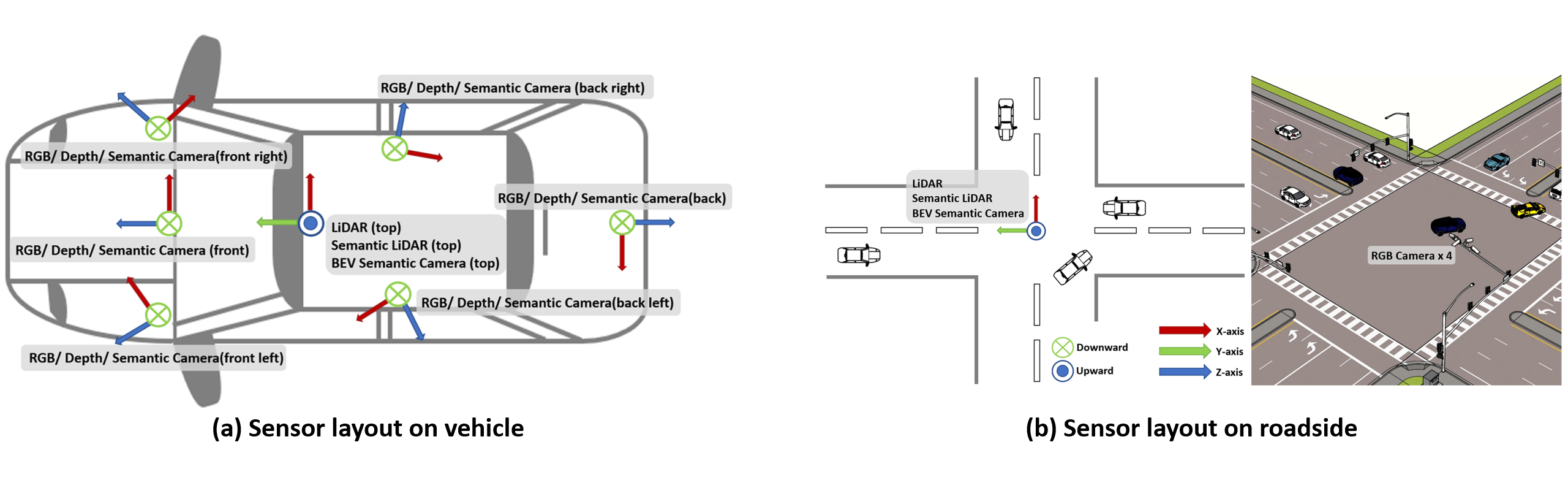

Vehicle-to-everything (V2X), which denotes collaboration via communication between a vehicle and any entity in its surroundings, can fundamentally improve perception in self-driving systems. While single-agent perception is advancing rapidly, collaborative perception has made little progress due to the shortage of public V2X datasets. In this work, we present V2X-Sim, the first public large-scale collaborative perception dataset in autonomous driving. V2X-Sim provides: 1) well-synchronized recordings from roadside infrastructure and multiple vehicles at the intersection to enable collaborative perception, 2) multi-modality sensor streams to facilitate multi-modality perception, and 3) diverse, well-annotated ground truth to support various downstream tasks including detection, tracking, and segmentation. We seek to inspire research on multi-agent, multi-modality, multi-task perception, and we expect our virtual dataset to help promote the development of collaborative perception before realistic datasets become widely available.

You can find more detailed documentation and the download link on our website.

Tested with:

- Python 3.7

- PyTorch 1.8.0

- Torchvision 0.9.0

- CUDA 11.2
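To compare a local environment against the versions listed above, a small check along the following lines may help. This is a minimal sketch, not part of the repository: the helper names `matches`, `installed_versions`, and `report` are hypothetical, and the comparison only looks at the leading version components. Note that `torch.version.cuda` reports the CUDA version PyTorch was built against, which can differ from the system CUDA toolkit, so a mismatch there is not necessarily a problem.

```python
import importlib
import sys

# Versions copied from the "Tested with" list above.
TESTED = {"python": "3.7", "torch": "1.8.0", "torchvision": "0.9.0", "cuda": "11.2"}


def matches(installed, tested):
    """Return True if `installed` has the same leading dotted components as `tested`."""
    if installed is None:
        return False
    # Drop local build suffixes such as "+cu111" before comparing.
    parts = installed.split("+")[0].split(".")
    want = tested.split(".")
    return parts[: len(want)] == want


def installed_versions():
    """Collect installed versions; anything missing is reported as None."""
    found = {
        "python": "{}.{}".format(*sys.version_info[:2]),
        "torch": None,
        "torchvision": None,
        "cuda": None,
    }
    for name in ("torch", "torchvision"):
        try:
            module = importlib.import_module(name)
        except Exception:  # package missing or failing to import
            continue
        found[name] = getattr(module, "__version__", None)
        if name == "torch":
            # CUDA version PyTorch was built with; None on CPU-only builds.
            found["cuda"] = module.version.cuda
    return found


def report(found=None):
    """Map each tested component to True (matches) or False (differs or missing)."""
    found = installed_versions() if found is None else found
    return {name: matches(found.get(name), tested) for name, tested in TESTED.items()}


if __name__ == "__main__":
    for name, ok in report().items():
        status = "match" if ok else "differs or missing"
        print(f"{name:12s} tested={TESTED[name]:6s} {status}")
```

A reported difference does not mean the code will fail; it only flags where the local setup departs from the tested configuration.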

We implement when2com, who2com, V2VNet, lowerbound, and upperbound benchmark experiments on our datasets. See detection, segmentation, and tracking for more details.

We are very grateful to several excellent open-source codebases, without which this project would not have been possible.

If you find V2X-Sim 1.0 useful in your research, please cite our papers.

@InProceedings{Li_2021_NeurIPS,
  title = {Learning Distilled Collaboration Graph for Multi-Agent Perception},
  author = {Li, Yiming and Ren, Shunli and Wu, Pengxiang and Chen, Siheng and Feng, Chen and Zhang, Wenjun},
  booktitle = {Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021)},
  year = {2021}
}

@InProceedings{Li_2021_ICCVW,
  title = {V2X-Sim: A Virtual Collaborative Perception Dataset for Autonomous Driving},
  author = {Li, Yiming and An, Ziyan and Wang, Zixun and Zhong, Yiqi and Chen, Siheng and Feng, Chen},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW)},
  year = {2021}
}