The purpose of naming and style convention allows you and others to identify the type and purpose of database objects. Our goal is to create legible, concise, and consistent names for our database objects.

Never use a descriptive prefix such as tbl_. This 'reverse-Hungarian' notation has never been a standard for SQL and clashes with SQL Server's naming conventions. Some system procedures and functions were given prefixes sp_, fn_, xp_ or dt_ to signify that they were "special" and should be searched for in the master database first.

The use of the tbl_prefix for a table, often called "tibbling", came from databases imported from Access when SQL Server was first introduced. Unfortunately, this was an access convention inherited from Visual Basic, a loosely typed language.

SQL Server is a strongly typed language. There is never a doubt what type of object something is in SQL Server if you know its name, schema, and database, because its type is there in sys.objects: Also, it is obvious from the usage. Columns can be easily identified as such and character columns would have to be checked for length in the Object Browser anyway or Intellisense tool-tip hover in SQL Server Management Studio.

Do not prefix your columns with fld_, col_, f_, u_ as it should be obvious in SQL statements which items are columns (before or after the FROM clause). Do not use a data type prefix for the column either, for example, "ntCustomerId for a numeric type or VcName for a varchar() type.

Table and view names should be singular, for example, Customer instead of Customers. This rule is applicable because tables are patterns for storing an entity as a record – they are analogous to Classes serving up class instances. And if for no other reason than readability, you avoid errors due to the pluralization of English nouns in the process of database development. For instance, activity becomes activities, ox becomes oxen, person becomes people or persons, alumnus becomes alumni, while data remains data.

If writing code for a data integration and the source is plural keep the staging/integration tables the same as the source so there is no confusion.

For all parts of the table name, including prefixes, use Pascal Case. PascalCase also reduces the need for underscores to visually separate words in names.

Using reserved or future reserved words makes code more difficult to read, can cause problems to code formatters, and can cause errors when writing code.

Sometimes SSMS will color code a word making you think it is a reserved keyword. It might just be a "special" word that is used in a database backup function somewhere. Check the Reserved Keyword link below. If the highlighted word in SSMS is not on the current or future reserved list, you are safe to use it as an object name.

Special characters should not be used in names. Using PascalCase for your table name allows for the upper-case letter to denote the first letter of a new word or name. Thus, there is no need to do so with an underscore character. Do not use numbers in your table names either. This usually points to a poorly designed data model or irregularly partitioned tables. Do not use spaces in your table names either. While most database systems can handle names that include spaces, systems such as SQL Server require you to add brackets around the name when referencing it (like [table name] for example) which goes against the rule of keeping things as short and simple as possible.

- TODO: See Use PascalCase

Avoid using abbreviations if possible. Use Account instead of Acct and Hour instead of Hr. Not everyone will always agree with you on what your abbreviations stand for - and - this makes it simple to read and understand for both developers and non-developers.

Acct, AP, AR, Hr, Rpt, Assoc, Desc

Beware of numbers in any object names, especially table names. It normally flags up clumsy denormalization where data is embedded in the name, as in Year2017, Year2018 etc. Usually, the significance of the numbers is obvious to the perpetrator, but not to the maintainers of the system.

It is far better to use partitions than to create dated tables such as Invoice2018, Invoice2019, etc. If old data is no longer used, archive the data, store only aggregations, or both.

Database schemas act as a container for SQL Server objects like tables, views, stored procedures, and functions. A schema logically groups objects that are related, keeping them separate from other database objects.

Schemas allow for simplified security management, where you can assign user permissions for a group of objects. By using schemas to group database objects, you will no longer need to maintain permissions on each individual object like tables. Schemas allow you to merge multiple databases that might have been previously separated into one using security to segment the data and objects from each other.

Schemas allow for duplicate object names like tables and views in different schemas should the need arise. You will get nice groupings in client data tools like SSMS (SQL Server Management Studio) object explorer.

- Application or App - can be used to store objects and data that are used in the webapp

- Person - This would allow you to control who has access to Personally Identifiable Information (PII) data

- Purchasing - Purchase department related data

- Sales - Sales related data

- HumanResources or HR - Locked down to only HR staff

- Report - Curated views that are consumed either by users or a reporting system

- Dim or Dimension- Dimensions for a dimensional data warehouse

- Fact - Facts for a dimensional data warehouse

- Staging - Tables used to ingest data source data, generally temporary data, end users would not have access to these tables

- Integration - Tables used to ingest data from other systems, end users would not have access to these tables

- DBA - Stored procedures and table data used by the database administrator

- Et Cetera

A lookup, reference, list, enumeration, code, or domain table in a database is for storing commonly used characteristics or property values that are referenced by other tables. Lookup tables contain unique values like types, categories, codes, tags and/or descriptions and are designed to eliminate data redundancy and improve data integrity in a database. Other metadata values like SortOrderNumber and IsDefault can be included in the lookup table if the project warrants the functionality.

One True Lookup Table (OTLT) or Massively Unified Code-Key (MUCK) table is not a best practice, breaks normal form, and breaks enforcing data integrity where you put all the lookup values into only one large table rather than a table for each. 'Junk Dimensions' in a dimensional warehouse are a best practice.

Lookup tables should follow the EntityAttribute (AccountStatus, AccountType, ProductCategory) naming convention. Entities in this case can represent a real-world object or concept, such as a customer, an order, a product, or a process.

- EntityStatus - Reflects the state of being for an entity like an account

- Grouping and Organizing Data - In general, categories are the broadest grouping of data, while classes and types are increasingly specific subsets of that data. Classes and types are often used to add more detail and specificity to categories, making it easier to organize and manage large amounts of data.

- EntityCategory - A category is a broad grouping of items or entities that share similar characteristics or properties. Categories are often used to organize data into high-level groups or classes. For example, in an e-commerce database, products might be grouped into categories such as "electronics", "clothing", or "home goods".

- EntityClass - A class is a more specific grouping of items or entities that share a particular set of characteristics or properties. Classes are often used to define subsets of categories or to further classify data based on more specific criteria. For example, within the "electronics" category, products might be further classified into classes such as "computers", "televisions", or "smartphones".

- EntityType - A type is a particular variation or instance of a class or category, often defined by a specific set of properties or attributes. Types are used to describe specific objects or entities within a class or category. For example, within the "computers" class, types might include "desktops", "laptops", or "tablets".

- EntityCode - A code is generally a standard maintained by a trusted outside source. Zip and Postal codes are maintained by the United States Postal Services.

- EntityNumber - This is a string of numbers or alphanumerics that provide uniqueness. SQL Server also provides sequence number functionality if no gaps are required.

- EntityDate - Dates are generally not referenced to lookup tables in transactional databases but analytical data warehouses use them as dimensions lookups.

- EntityGender - Person genders would fall into this category. If you need to allow gender lookups for a person and an animal, you might need to have a superclass of 'Biological Entity' with subclasses.

- EntityMeasure - Unit of measure sometimes role-plays as an entity and attribute based on its use.

- EntityMethod - Shipping method sometimes role-plays as an entity and attribute based on its use.

- Entity[...] - Other businesses might have domain specific attributes.

CREATE TABLE Application.AddressType (

AddressTypeId int NOT NULL IDENTITY(1, 1)

,AddressTypeCode nvarchar(10) NOT NULL

,AddressTypeName nvarchar(50) NOT NULL

,AddressTypeDescription nvarchar(300) NULL

,SortOrderNumber int NULL

,IsDefault bit NOT NULL CONSTRAINT Application_AddressType_IsDefault_Default DEFAULT (0)

,IsLocked bit NOT NULL CONSTRAINT Application_AddressType_IsLocked_Default DEFAULT (0)

,IsActive bit NOT NULL CONSTRAINT Application_AddressType_IsActive_Default DEFAULT (1)

,RowModifyPersonId int NOT NULL CONSTRAINT Application_AddressType_Application_RowModifyPerson FOREIGN KEY REFERENCES Application.Person (PersonId)

,RowCreatePersonId int NOT NULL CONSTRAINT Application_AddressType_Application_RowCreatePerson FOREIGN KEY REFERENCES Application.Person (PersonId)

,RowModifyTime datetimeoffset(7) NOT NULL CONSTRAINT Application_AddressType_RowModifyTime_Default DEFAULT (SYSDATETIMEOFFSET())

,RowCreateTime datetimeoffset(7) NOT NULL CONSTRAINT Application_AddressType_RowCreateTime_Default DEFAULT (SYSDATETIMEOFFSET())

,RowVersionStamp rowversion NOT NULL

,CONSTRAINT Application_AddressType_AddressTypeId PRIMARY KEY CLUSTERED (AddressTypeId ASC)

,INDEX Application_AddressType_AddressTypeName_AddressTypeShortName UNIQUE NONCLUSTERED (AddressTypeCode ASC, AddressTypeName ASC)

,INDEX Application_AddressType_RowModifyPersonId NONCLUSTERED (RowModifyPersonId ASC)

,INDEX Application_AddressType_RowCreatePersonId NONCLUSTERED (RowCreatePersonId ASC)

);Avoid, where possible, concatenating two table names together to create the name of a relationship (junction, cross-reference, intersection, many-to-many) table when there is already a word to describe the relationship. e.g. use Subscription instead of NewspaperReader.

If you have a table named Product and a lookup table named ProductCategory and you need to create a many-to-many table, Catalog would be a good name.

When a word does not exist to describe the relationship use Table1Table2 with no underscores. If you are at a loss for a relationship name and Table1Table2 is one of the table names Table1Table2Relationship will work.

Choose a column name to reflect precisely what is contained in the attribute of the entity

- Avoid repeating the table name for column names except for:

- Table Primary Key: TODO: See Primary Key Column Naming

- Common or Natural Words or Terms: When you come across common or natural names like

PatientNumber,PurchaseOrderNumberorDriversLicenseNumber,GLAccount,ARAccount,Line1,Line2,FirstName,LastName,Title,Suffixyou will want to use them as they commonly are used. - Generic or Class Words: When using generic names like

Name,Description,Number,Code,Type,Status,Amount,Date,Quantity,Rate,Key,Value,Deleted,Active,Permission,Primary,Locked,Default… you should prefix the class word with a modifier like the table name if appropriate.- Instead use

AccountNumber,AddressTypeName,ProductDescription&StateCode - SELECT queries will need aliases when two tables use generic columns like

Name

- Instead use

- Use abbreviations rarely in attribute names. If your organization has a TPS "thing" that is commonly used and referred to in general conversation as a TPS, you might use this abbreviation

- Pronounced abbreviations: It is better to use a natural abbreviation like id instead of identifier

- Columns without common, or natural words, or terms, end the column name with a suffix (class word) that denotes general usage. These suffixes are not data types that are used in Hungarian notations. There can be names where a suffix would not apply.

- InvoiceId is the identity of the invoice record

- InvoiceNumber is an alternate key

- StartDate is the date something started

- LineAmount is a currency amount not dependent on the data type like

decimal(19, 4) - GroupName is the text string not dependent on the data type like

varchar()ornvarchar() - StateCode indicates the short form of something

- Booleans - TODO: See Affirmative Boolean Naming

- UnitPrice is the price of a product unit

- WebsiteURL is the internet address - TODO: See URL or URI Naming

- ModifyPersonId is the person who last updated a record

- CreatePersonId is the person who created a record

- ModifyTime is the date and time something was modified

- CreateTime is the date and time something was created

- VersionStamp is the

rowversion/timestamp(unique binary numbers) to increment for each insert or update - ValidFromTime is the period start for a system-versioned temporal tables

- ValidToTime is the period end for a system-versioned temporal tables

For columns that are the primary key for a table and uniquely identify each record in the table, the name should be [TableName] + Id (e.g. On the Make table, the primary key column would be MakeId).

Though MakeId conveys no more information about the field than Make.Id and is a far wordier implementation, it is still preferable to Id.

Naming a primary key column Id is also "bad" when you query from several tables you will need to rename the Id columns so you can distinguish them in result set.

When you have the same column names in joins it will mask errors that would otherwise be more obvious.

/* This has an error that is not obvious at first sight */

SELECT

C.Name

,M.Id AS MakeId

FROM

Car AS C

INNER JOIN Make AS MK ON C.Id = MK.Id

INNER JOIN Model AS MD ON MD.Id = C.Id

INNER JOIN Color AS CL ON MK.Id = C.Id;

/* Now you can see MK.MakeId does not equal C.ColorId in the last table join */

SELECT

C.Name

,M.MakeId

FROM

Car AS C

INNER JOIN Make AS MK ON C.MakeId = MK.MakeId

INNER JOIN Model AS MD ON MD.ModelId = C.ModelId

INNER JOIN Color AS CL ON MK.MakeId = C.ColorId;Foreign key columns should have the exact same name as they do in the parent table where the column is the primary. For example, in the Customer table the primary key column might be CustomerId. In an Order table where the customer id is kept, it would also be CustomerId.

There is one exception to this rule, which is when you have more than one foreign key column per table referencing the same primary key column in another table. In this situation, it is helpful to add a descriptor before the column name. An example of this is if you had an Address table. You might have a Person table with foreign key columns like HomeAddressId, WorkAddressId, MailingAddressId, or ShippingAddressId.

This naming combined with TODO: Primary Key Column Naming naming makes for much more readable SQL:

SELECT

F.FileName

FROM

dbo.File AS F

INNER JOIN dbo.Directory AS D ON F.FileId = D.FileId;whereas this has a lot of repeating and confusing information:

SELECT

F.FileName

FROM

dbo.File AS F

INNER JOIN dbo.Directory AS D ON F.Id = D.FileId;Bit columns should be given affirmative boolean names like IsActive, IsDeleted, HasPermission, or IsValid so that the meaning of the data in the column is not ambiguous; negative boolean names are harder to read when checking values in T-SQL because of double-negatives (e.g. IsNotDeleted).

- IsActive indicates a status

- IsDeleted indicates a soft delete status

- IsLocked indicates if a record is immutable

- IsDefault indicates if a record is defaulted

- IsPrimary indicates first in an order

- IsValid indicated validity

- HasPermission indicated permissions

- CanExport indicates permission to export

In 99% of everyday cases, you should use URL instead of URI because both are technically true, but URL is more specific. The difference between a URI and a URL is that a URI can be just a name by itself (emergentsoftware.net), or a name with a protocol (https://emergentsoftware.net, ftp://emergentsoftware.net, mailto://[email protected], file://emergentsoftware/~user/file.csv) that tells you how to reach it—which is a URL.

Use the format of [FOREIGN-KEY-TABLE]_[PRIMARY-KEY-TABLE] in most cases. This gives you a quick view of the tables that are involved in the relationship. The first table named depends on the second table named.

Example: Invoice_Product

In cases where there are multiple foreign key relationships to one primary key table like Address, Date, Time, ... your foreign key relationship name should include the context of the relationship.

Example: Invoice_ShippingAddress or Invoice_BillingAddress

In a more rare case when not referencing the primary key of the primary key table you should use the format of [FOREIGN-KEY-TABLE]_[CHILD-COLUMN]_[PRIMARY-KEY-TABLE]_[PARENT-COLUMN].

Example: Invoice_ProductCode_Product_ProductCode

If utilizing schemas other than dbo, prefix the schema name before the [TABLE-NAME].

Example: Purchasing_PurchaseOrderLine_Application_Product

In addition to the general naming standards regarding no special characters, no spaces, and limited use of abbreviations and acronyms, common sense should prevail in naming variables and parameters; variable and parameter names should be meaningful and natural.

All variables and parameters must begin with the @ symbol. Do not use @@ to prefix a variable as this signifies a SQL Server system global variable and will affect performance.

All variables and parameters should be written in PascalCase, e.g., @FirstName or @City or @SiteId.

Variable and parameter names should contain only letters and numbers. No special characters or spaces should be used.

Parameter and variable and names should be named identically as the column names for the data they represent other than the @ symbol.

-

Index Names should be

[SchemaName_]TableName_Column1_Column2_Column3 -

Index Names should indicate if there are included columns with

[SchemaName_]TableName_Column1_Column2_Column3_Includes -

When using

uniqueidentifier/guidcolumns for clustered index you can use[SchemaName_]TableName_ColumnName_INDEX_REBUILD_ONLYto signify special index maintenance handling. -

TODO: See uniqueidentifier in a Clustered Index

Use the format of [TABLE-NAME]_[COLUMN-NAME]

Basic Example: Invoice_InvoiceId

If utilizing schemas other than dbo, prefix the schema name before the [TABLE-NAME].

Example: Purchasing_PurchaseOrder_PurchaseOrderId

- TODO: See Unique Indexes

If utilizing schemas other than dbo, prefix the schema name before the [TABLE-NAME] below.

Use the format [TABLE-NAME]_[COLUMN-NAME]_Default

CONSTRAINT Person_RowUpdateTime_Default DEFAULT (SYSDATETIMEOFFSET())

Use the format [TABLE-NAME]_[COLUMN-NAME]_[DESCRIPTION]

Examples

CONSTRAINT ProductItem_RegularPrice_Minimum CHECK (RegularPrice > 0)

CONSTRAINT ProductItem_SalePrice_Less_To_RegularPrice CHECK (SalePrice < RegularPrice)

CONSTRAINT Feedback_Stars_Range CHECK (Stars BETWEEN 0 AND 5)

TODO: See: Unique Indexes

Create explicit object names and do not let SQL Server name objects.

If you do not specify an object name, SQL Server will create one for you. This causes issues when comparing different environments that would have differently generated names.

CREATE TABLE dbo.TableName (

TableNameId int NOT NULL PRIMARY KEY /* This creates a primary key with a name like "PK__TableNam__38F491856B661278" */

,ParentTableNameId int NOT NULL FOREIGN KEY REFERENCES dbo.TableName (TableNameId)/* This creates a ParentTableNameId foreign key with a name like "FK__TableName__Paren__4A0EDAD1" */

,SomeCheck char(1) NOT NULL CHECK (SomeCheck IN ('A', 'B', 'C')) /* This creates a SomeCheck constraint with a name like "CK__TableName__Speci__49C3F6B7" */

,SomeDefault varchar(50) NOT NULL DEFAULT ('') /* This creates a SomeDefault default constraint with a name like "DF__TableName__SomeN__4AB81AF0" */

,SomeUniqueIndex nvarchar(100) NOT NULL UNIQUE NONCLUSTERED /* This creates a SomeUniqueIndex nonclustered index with a name like "UQ__TableNam__2A2E95CE05DDEAFE" */

,SomeUniqueConstraint nvarchar(100) NOT NULL UNIQUE /* This creates a SomeUniqueConstraint constraint with a name like "UQ__TableNam__4F58406D42E8D6BD" */

);

/* Drop the bad version of the table */

DROP TABLE dbo.TableName;

/* Create a better version of the table with actual constraint names */

CREATE TABLE dbo.TableName (

TableNameId int NOT NULL CONSTRAINT TableName_TableNameId PRIMARY KEY

,ParentTableNameId int NOT NULL CONSTRAINT TableName_TableName FOREIGN KEY REFERENCES dbo.TableName (TableNameId)

,SomeCheck char(1) NOT NULL CONSTRAINT TableName_SomeCheck_InList CHECK (SomeCheck IN ('A', 'B', 'C'))

,SomeDefault varchar(50) NOT NULL CONSTRAINT TableName_SomeDefault_Default DEFAULT ('')

,SomeUniqueIndex nvarchar(100) NOT NULL CONSTRAINT TableName_SomeUniqueIndex_Unique UNIQUE NONCLUSTERED

,SomeUniqueConstraint nvarchar(100) NOT NULL CONSTRAINT TableName_SomeUniqueConstraint_Unique UNIQUE

);- TODO: See Naming Constraint Usage

- TODO: See Naming Primary Keys

Stored procedures and functions should be named so they can be ordered by the table/business entity (ObjectAction) they perform a database operation on, and adding the database activity "Get, Update, Insert, Upsert, Delete, Merge" as a suffix, e.g., (ProductGet or OrderUpdate).

Table design matters because it is essential for building software applications that are scalable and capable of performing during high workload.

TODO: WIP: Write up about using inline indexes on the table creation statement that have been around since SQL Server 2014. Prior to SQL Server 2014, the CREATE/ALTER TABLE only accepted CONSTRAINT. Write about indexes with includes not being supported yet.

Database Normalizing tables are regarded as a best practice methodology for relational databases design. Relational database tables should be normalized to at least the Boyce–Codd normal form (BCNF or 3.5NF).

These normalizing principles are used to reduce data duplication, avoid data anomalies, ensure table relationship integrity, and make data management simplified.

A normalized table design like addresses and phone numbers in separate tables allows for extensibility when the requirements today might only call for a single phone number or address. You can utilize a UNIQUE constraint/index to ensure only a single row can exist but allows for flexibility in the future. You will already have the Phone table so you will not have to create a new table and migrate the data and drop the UNIQUE constraint/index.

You should always normalize the database schema unless you have a really good reason not to. Denormalizing has been performed in the past with the saying "Normalize until it hurts, denormalize until it works". Denormalization almost always leads to eventual issues. Denormalization complicates updates, deletes & inserts making the database difficult to modify. We have tools like indexes or materialized views and covering indexes to remediate performance issues. There are query rewriting techniques that can make queries more performant.

A legally binding entity like a contract that will be created as an immutable snapshot can contain redundant data. This helps with possible future reprinting of documents. Consider using an auditable append-only Ledger table.

If your tables have many columns like 20+ or many nullable columns, you have what is called a wide or "God Object" and you might need to assess your modeling. Each table should be an entity and columns be an attribute of the entity.

If data is denormalized there are methods of keeping denormalized or duplicated data values correct. The data should be strongly consistent instead of eventually consistent.

Strongly consistent is with the use of transactions that will not allow the data to be out-of-sync like in a stored procedure, triggers are the next best option, but triggers have performance issues.

Eventually consistent methods are something like jobs that run at a predetermined or event-based time to synchronize the denormalized (duplicate) data. Eventually consistent leaves a gap of time, even if for milliseconds, which could cause data consistency issues.

- See Keeping Denormalized Values Correct article by Kenneth Downs

Use the Table Per Type (TPT) table design pattern.

The Table Per Concrete (TPC) design is not good as it would have redundant data and no relationship between the sub tables. The redundant data would just be in multiple tables vs squished into one table with Table Per Hierarchy (TPH). TPC would help with the extra nullable columns compared to TPH.

The Table Per Type (TPT) table design pattern is performant with proper indexes well into the 100s of millions of rows and beyond. With proper indexes a query can join multiple tables and still execute in less than a second even for costly queries that scan indexes like exporting of data. Seeking on indexes for row-level queries like finding a specific CustomerId, should only take on order of 10s of milliseconds. Until multiple of billions of rows are a consideration there is no performance reason not to follow database norm form best practices. If you have slow joined table queries, you're probably not using your database correctly.

TPC & TPH do not follow normal form.

- TODO: See Not Normalizing Tables.

An entity is a table and is a single set of attributes. There is a need sometimes for entity/table inheritance when you might have a parent entity/table named Person, you would need another inherited entity/table for Employee. The Employee entity/table will store attributes (Salary, Job Title, ...) that are not attributes in the Person entity/table.

A use case exception for using proper table entities is for security purposes. You might encounter a requirement for security that utilizing a linking table makes it impossible to have a discriminator like PhoneTypeId to prevent read or modifications to a row based on how the software was/is written.

This exception use can lead to table schema development issues for cases when you have a multiple entity/tables like dbo.BuyerPhone, dbo.SellerPhone, dbo.ServicerPhone, dbo.VendorPhone. With the address entity not being materialized in one dbo.Phone entity/table, the table schemas need to be kept in sync which might not occur. Ask me how I know. ;-(

Use the proper weak or strong table type based on the entity.

A weak table is one that can only exist when owned by another table. For example: a 'Room' can only exist in a 'Building'.

An 'Address' is a strong table because it exists with or without a person or organization.

With an 'Address' table you would have a linking, or many-to-many table.

| ResidenceId | AddressTypeId | PersonId | AddressId |

|---|---|---|---|

| 1 | 1 | 456 | 233 |

| 2 | 1 | 234 | 167 |

| 3 | 2 | 622 | 893 |

A 'Phone Number' is a weak table because it generally does not exist without a person or organization.

With a 'Phone Number' table you would not use a linking table. The 'Phone Number' table would reference back to the person table.

| PersonId | FistName | LastName |

|---|---|---|

| 1 | Kevin | Martin |

| 2 | Han | Solo |

| 3 | Mace | Windu |

| PersonPhoneId | PhoneTypeId | PersonId | PhoneNumber |

|---|---|---|---|

| 1 | 1 | 1 | 555-899-5543 |

| 2 | 1 | 2 | (612) 233-2255 |

| 3 | 2 | 3 | 1+ (453) 556-9902 |

By default, the history table is PAGE compressed for System Versioned Temporal Tables. There are cases where the compression is removed or not deployed.

The SQL script below will check for the issue and provide an ALTER TSQL statement to apply page compression. Do not forget to change ONLINE to ON OR OFF.

SELECT

SchemaName = S.name

,TableName = T.name

,DataCompression = P.data_compression_desc

,[ALTER TSQL] = N'ALTER INDEX ' + CAST(QUOTENAME(I.name) AS nvarchar(128)) + N' ON ' + CAST(QUOTENAME(S.name) AS nvarchar(128)) + N'.' + CAST(QUOTENAME(T.name) AS nvarchar(128)) + N' REBUILD PARTITION = ALL WITH (STATISTICS_NORECOMPUTE = OFF, ONLINE = ?, DATA_COMPRESSION = PAGE);'

FROM

sys.partitions AS P

INNER JOIN sys.indexes AS I

ON I.object_id = P.object_id

AND I.index_id = P.index_id

INNER JOIN sys.objects AS O

ON I.object_id = O.object_id

INNER JOIN sys.tables AS T

ON O.object_id = T.object_id

INNER JOIN sys.schemas AS S

ON T.schema_id = S.schema_id

WHERE

T.temporal_type = 1 /* HISTORY_TABLE */

AND I.type <> 5 /* CLUSTERED */

AND P.data_compression <> 2 /* Not Page compressed, which is the default for system-versioned temporal tables */

ORDER BY

S.name ASC

,T.name ASC;Database table partitions should not be used to "speed up" query performance. Use an index instead.

Partitioning can make your workload run slower if the partitions are not set up correctly and your queries cannot remain within a single partition. Instead of thinking about a partition, think about an index.

Partitioning can be used as a maintenance tool for either distributing data between cold or hot storage.

Partition switching/swapping/truncating is another use case for managing large amounts of table data like in a data warehouse.

The Entity–Attribute–Value (EAV) model falls victim to the Inner-platform effect.

The inner-platform effect is the tendency of software architects to create a system so customizable as to become a replica, and often a poor replica, of the software development platform they are using. This is generally inefficient and such systems are often considered to be examples of an anti-pattern.

In the database world, developers are sometimes tempted to bypass the SQL Server relations database, for example by storing everything in one big table with three columns labelled EntityId, Key, and Value. While this entity-attribute-value model allows the developer to break out from the structure imposed by a SQL Server database, it loses out on all the benefits, since all of the work that could be done efficiently by the SQL Server relational database is forced onto the application instead. Queries become much more convoluted, the indexes and query optimizer can no longer work effectively, and data validity constraints are not enforced. Performance and maintainability can be extremely poor.

Data that meets the following criteria might be okay to use the EAV pattern at the discretion of the software or database architect.

- The definition of the data is highly dynamic and is likely to change numerous times of the course of the development and/or life of the application.

- The data has no intrinsic value outside the context of the application itself.

- The data will not be materialized in models but simply passed through the application layers as key value pairs.

Examples of valid use cases that meet this criteria are:

- Application user preferences

- User interface styling & custom layouts

- Saved user interface state

- Front-end application configuration

Another use case exception for utilizing an EAV model in a database is where the attributes are user defined and a developer will not have control over them.

Another use case exception for utilizing an EAV model is for something like a multiple product (entity) type shopping cart. Where each specific product (entity) type has its own list of attributes. If the product (entity) catalog only sells a manageable number of product different types the attributes, the attributes should be materialized as physical columns in the table schema. This type of EAV model will have diminishing returns for performance with more data housed in the database. Caching this type of EAV model will allow for a larger data set as to lessen the chance of performance issues. Ensure this is communicated to the stakeholders.

Basic table model for a product catalog that supports multiple product types

CREATE TABLE dbo.Attribute (

AttributeId int IDENTITY(1, 1) NOT NULL

,AttributeName nvarchar(100) NOT NULL

,CONSTRAINT Attribute_AttributeId PRIMARY KEY CLUSTERED (AttributeId ASC)

);

/* Insert values like "Size", "Color" */

CREATE TABLE dbo.AttributeTerm (

AttributeTermId int IDENTITY(1, 1) NOT NULL

,AttributeId int NOT NULL CONSTRAINT AttributeTerm_Attribute FOREIGN KEY REFERENCES dbo.Attribute (AttributeId)

,TermName nvarchar(100) NOT NULL

,CONSTRAINT AttributeTerm_AttributeTermId PRIMARY KEY CLUSTERED (AttributeTermId ASC)

);

/* Insert values like "Small", "Large", "Red", "Green" */

CREATE TABLE dbo.Product (

ProductId int IDENTITY(1, 1) NOT NULL

,ProductName nvarchar(200) NOT NULL

,ProductSlug nvarchar(400) NOT NULL

,ProductDescription nvarchar(MAX) NULL

,CONSTRAINT Product_ProductId PRIMARY KEY CLUSTERED (ProductId ASC)

,CONSTRAINT Product_ProductName UNIQUE NONCLUSTERED (ProductName ASC)

,CONSTRAINT Product_ProductSlug UNIQUE NONCLUSTERED (ProductSlug ASC)

);

/* Insert values like "Shirt", "Pants" */

CREATE TABLE dbo.ProductItem (

ProductItemId int IDENTITY(1, 1) NOT NULL

,ProductId int NOT NULL CONSTRAINT ProductItem_Product FOREIGN KEY REFERENCES dbo.Product (ProductId)

,UPC varchar(12) NULL

,RegularPrice decimal(18, 2) NOT NULL

,SalePrice decimal(18, 2) NULL

,CONSTRAINT ProductItem_ProductItemId PRIMARY KEY CLUSTERED (ProductItemId ASC)

);

/* Insert values product UPC codes and prices */

CREATE TABLE dbo.ProductVariant (

ProductVariantId int IDENTITY(1, 1) NOT NULL

,ProductItemId int NOT NULL CONSTRAINT ProductVariant_ProductItem FOREIGN KEY REFERENCES dbo.ProductItem (ProductItemId)

,AttributeTermId int NOT NULL CONSTRAINT ProductVariant_AttributeTerm FOREIGN KEY REFERENCES dbo.AttributeTerm (AttributeTermId)

,CONSTRAINT ProductVariant_ProductVariantId PRIMARY KEY CLUSTERED (ProductVariantId ASC)

);

/* Insert values that link Shirt or Pants product items to the attributes available for sale */Every table should have a column that uniquely identifies one and only one row. It makes it much easier to maintain the data. The best practice is for each row to have a surrogate key. It is best practice to use int or bigint.

- TODO: See Primary Key Column Naming

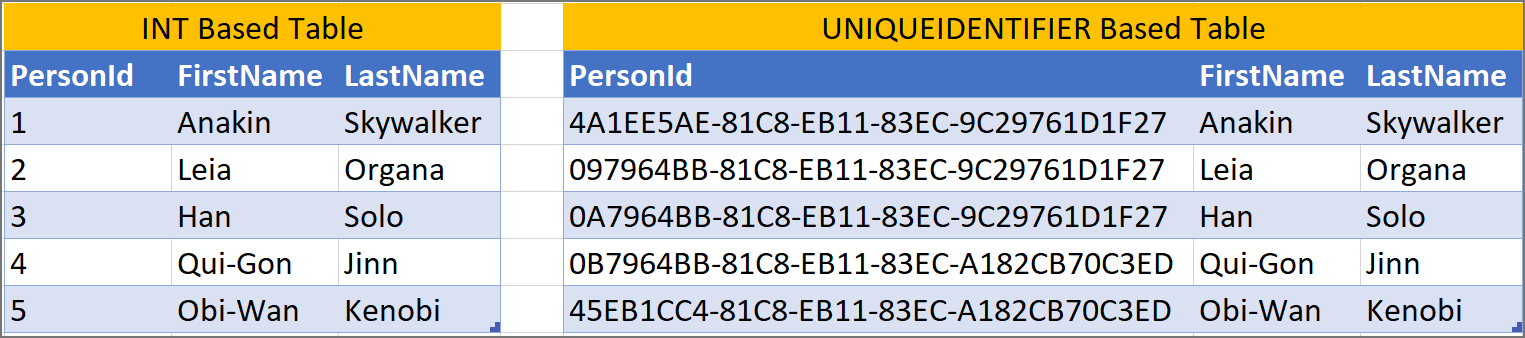

Using uniqueidentifier/guid as primary keys causes issues with SQL Server databases. uniqueidentifier/guid are unnecessarily wide (4x wider than an int).

uniqueidentifiers/guids are not user friendly when working with data. PersonId = 2684 is more user friendly than PersonId = 0B7964BB-81C8-EB11-83EC-A182CB70C3ED.

A use case for when you can use uniqueidentifier/guid as primary keys, is when there are separate systems and merging rows would be difficult. The uniqueness of uniqueidentifier/guid simplifies the data movements.

If the row data in the table does not contain any NULL values you should assess setting the column to not 'Allow Nulls'.

Null-ness does more than constraining the data, it factors in on performance optimizer decisions.

For data warehouses do not allow NULL values for dimensional tables. You can create a -1 identifier with the value of [UNKNOWN]. This helps business analysts who might not understand the difference between INNER and OUTER JOINs exclude data in their TSQL queries.

If a column has a foreign key and nullable, it will not be trusted and JOIN Elimination will not occur, forcing your query to perform more query operations than needed.

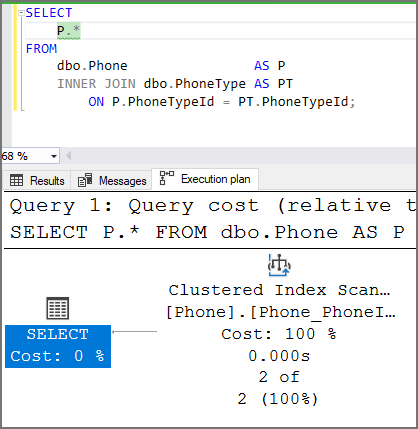

This execution plan shows how JOIN elimination works. There is a foreign key on the column and the column does not allow null. There is only one index operation in the execution plan. SQL Server is able to eliminate JOINs because it "trusts" the data relationship.

- TODO: See Untrusted Foreign Key

This execution plan shows JOIN elimination has not occurred. While there is a foreign key on the column, the column allows null. There are two index operations and a merge operation causing SQL Server to perform more work.

You should always explicitly define NULL or NOT NULL for columns when creating or declaring a table. The default of allowing NULLs can be changed with the database setting ANSI_NULL_DFLT_ON.

If the row data in the table does not contain any NULL values, you should assess setting the column to not 'Allow Nulls'.

Null-ness does more than constraining the data, it factors in on performance optimizer decisions.

For data warehouses do not allow NULL values for dimensional tables. You can create a -1 identifier with the value of [UNKNOWN]. This helps business analysts who might not understand the difference between INNER and OUTER JOINs exclude data in their TSQL queries.

If a column has a foreign key and nullable, it will not be trusted and JOIN Elimination will not occur, forcing your query to perform more query operations than needed.

This execution plan shows how JOIN elimination works. There is a foreign key on the column and the column does not allow null. There is only one index operation in the execution plan. SQL Server is able to eliminate JOINs because it "trusts" the data relationship.

TODO: - See Untrusted Foreign Key

This execution plan shows JOIN elimination has not occurred. While there is a foreign key on the column, the column allows null. There are two index operations and a merge operation causing SQL Server to perform more work.

TODO: This could cause issues if the code is not aware of different collations and does include features to work with them correctly.

Each foreign key in your table should be included in an index. Start off with an index on just the foreign key column if you have no workload to tune a multi-column index. There is a really good chance the indexes will be used when queries join on the parent table.

You will not get JOIN Eliminations without a foreign key and a column that allows NULLs.

Use a stored procedure or SQL command in your software code to delete data in multiple related tables instead of ON DELETE CASCADE on the foreign key.

Foreign keys with cascading actions like ON DELETE CASCADE or ON UPDATE ? perform slowly due to SQL Server taking out serializable locks (RANGEX-X) to guarantee referential integrity so that it can trust the foreign keys.

You may also receive the error message below when you utilize ON DELETE CASCADE.

Msg 1785, Level 16, State 0, Line 190

Introducing FOREIGN KEY constraint [ForeignKeyName] on table [TableName] may cause cycles or multiple cascade paths.

Specify ON DELETE NO ACTION or ON UPDATE NO ACTION, or modify other FOREIGN KEY constraints.

Msg 1750, Level 16, State 1, Line 190

Could not create constraint or index. See previous errors.

A general rule is to aim for 5 indexes per table, with around 5 columns or less on each index. This is not a set-in-stone rule, but if you go beyond the general rule you should ensure you are only creating indexes that are required for the SQL Server query workload. If you have a read-only database, there will be no inserts, updates, or deletes to maintain on all those indexes. The more you have the less performant insert, update & deletes are.

You or your DBA should implement an index tuning strategy like below.

- Dedupe - reduce overlapping indexes

- Eliminate - unused indexes

- Add - badly needed missing indexes

- Tune - indexes for specific queries

- Heap - usually need clustered indexes

The Index Tuning Wizard and Database Tuning Advisor are not known for generating the best indexes and you should not blindly implement the recommendations without understanding the overall consequences. Feel free to reach out to your local DBA for assistance.

The SQL Server Missing Indexes recommendations feature has limitations and even recommends you create indexes that already exist. It is not meant for you fine tune and only provides sometimes adequate recommendations.

You should assess the missing index recommendation but create a fine-tuned custom index that includes all that is excluded items.

See the Books Online: Limitations of the Missing Indexes Feature

The missing index feature has the following limitations:

- It is not intended to fine tune an indexing configuration.

- It cannot gather statistics for more than 500 missing index groups.

- It does not specify an order for columns to be used in an index.

- For queries involving only inequality predicates, it returns less accurate cost information.

- It reports only include columns for some queries, so index key columns must be manually selected.

- It returns only raw information about columns on which indexes might be missing.

- It does not suggest filtered indexes.

- It can return different costs for the same missing index group that appears multiple times in XML Showplans.

- It does not consider trivial query plans.

You should always explicitly define ascending (ASC) or descending (DESC) for indexes. This allows others who review or edit the code to not know that ASC is the default sort order.

CREATE NONCLUSTERED INDEX Application_Person_LastName ON Application.Person

(

LastName ASC

/* ↑ Look Here */

);An index rebuild or reorganization will enabled disabled indexes. It is now best practices to delete instead of disable if not needed.

Add the filtered columns in the INCLUDE() on your index so your queries do not need to perform a key lookup. By including the filtered columns, SQL Server generates statistics on the columns.

Instead Of:

CREATE NONCLUSTERED INDEX Account_Greater_Than_Half_Million

ON dbo.Account (AccountName, AccountId)

WHERE Balance > 500000;Do This:

CREATE NONCLUSTERED INDEX Account_Greater_Than_Half_Million

ON dbo.Account (AccountName, AccountId)

INCLUDE (Balance)

WHERE Balance > 500000;SQL Server storage is built around the clustered index as a fundamental part of the data storage and retrieval engine. The data itself is stored with the clustered key. All this makes having an appropriate clustered index a vital part of database design. The places where a table without a clustered index is preferable are rare, which is why a missing clustered index is a common code smell in database design.

A table without a clustered index is a heap, which is a particularly bad idea when its data is usually returned in an aggregated form, or in a sorted order. Paradoxically, though, it can be rather good for implementing a log or a ‘staging’ table used for bulk inserts, since it is read very infrequently, and there is less overhead in writing to it.

A table with a non-clustered index, but without a clustered index can sometimes perform well even though the index must reference individual rows via a Row Identifier rather than a more meaningful clustered index. The arrangement can be effective for a table that isn’t often updated if the table is always accessed by a non-clustered index and there is no good candidate for a clustered index.

Heaps have performance issues like table scans, forward fetches.

The default fill factor is (100 or 0) for SQL Server. Best practice is to ONLY use a low fill factor on indexes where you know you need it. Setting a low fill factor on too many indexes will hurt your performance:

- Wasted space in storage

- Wasted space in memory (and therefore greater memory churn)

- More IO, and with it, higher CPU usage

Add the filtered columns in the INCLUDE() on your index so your queries do not need to perform a key lookup. By including the filtered columns, SQL Server generates statistics on the columns. This is also known as a covering index.

Instead Of:

CREATE NONCLUSTERED INDEX Account_Greater_Than_Half_Million

ON dbo.Account (AccountName, AccountId)

WHERE Balance > 500000;Do This:

CREATE NONCLUSTERED INDEX Account_Greater_Than_Half_Million

ON dbo.Account (AccountName, AccountId)

INCLUDE (Balance)

WHERE Balance > 500000;uniqueidentifier/guid columns should not be in a clustered index. Even NEWSEQUENTIALID() should not be used in a clustered index. The sequential uniqueidentifier/guid is based on the SQL Server's MAC address. When an Availability Group fails over the next uniqueidentifier/guid will not be sequential anymore.

SQL Server will bad page split and fragment an index when a new record is inserted instead of being inserted on the last page using standard best practices for index maintenance. The clustered index will become fragmented because of randomness of uniqueidentifier/guid. Index maintenance set to the default fill factor of 0 (packed 100%) will force bad page splits.

A use case for when you can use uniqueidentifier/guid as a primary key & clustered index, is when there are separate systems and merging rows would be difficult. The uniqueness of uniqueidentifier/guid simplifies the data movements.

DBAs have historically not implemented uniqueidentifier/guid as primary keys and/or clustered indexes. The traditional index maintenance jobs would keep the uniqueidentifier/guid indexes in a perpetual state of fragmentation causing bad page splits, which is an expensive process.

A new index maintenance strategy is to name these uniqueidentifier/guid indexes with the ending "*_INDEX_REBUILD_ONLY" and create a customized index maintenance plan. One job step will perform the standard index maintenance ignoring the uniqueidentifier/guid indexes and another job step will only perform an index rebuild, skipping index reorganizations. Ola Hallengren maintenance scripts is recommended.

These uniqueidentifier/guid indexes should be created and rebuilt with a custom fill factor to account for at least a weeks' worth of data. This is not a "set it, and forget it" maintenance plan and will need some looking after.

These are a 400 level tasks and please feel free to reach out to a DBA for assistance.

Create unique indexes instead of unique constraints (unique key). Doing so removes a dependency of a unique key to the unique index that is created automatically and tightly coupled.

Instead of: CONSTRAINT AddressType_AddressTypeName_Unique UNIQUE (AddressTypeName ASC)

Use: INDEX AddressType_AddressTypeName UNIQUE NONCLUSTERED (AddressTypeName ASC)

In some table design cases you might need to create uniqueness for one or more columns. This could be for a natural [composite] key or to ensure a person can only have one phone number and phone type combination.

There is no functional or performance difference between a unique constraint (unique key) and a unique index. With both you get a unique index, but with a unique constraint (unique key) the 'Ignore Duplicate Keys' and 'Re-compute statistics' index creation options are not available.

The only possible benefit of a unique constraint (unique key) has over a unique index is to emphasize the purpose of the index and is displayed in the SSMS (SQL Server Management Studio) table 'Keys' folder in the 'Object Explorer' side pane.

SQL Server is not going to consider using untrusted constraints to compile a better execution plan. This can have a huge performance impact on your database queries.

You might have disabled a constraint instead of dropping and recreating it for bulk loading data. This is fine, as long as your remember to enable it correctly.

ALTER TABLE dbo.TableName WITH CHECK CHECK CONSTRAINT ConstraintName;

GOThe CHECK CHECK syntax is correct. The 1st CHECK is the end of WITH CHECK statement. The 2nd CHECK is the start of the CHECK CONSTRAINT clause to enable the constraint

To find untrusted foreign keys and check constraints in your database run the script below to identify and create an ALTER statement to correct the issue. In Redgate SQL Prompt the snippet code is fk. If you receive an error with the generated ALTER statement it means past constraint violations have been being suppressed by WITH NOCHECK. You will have to figure out how to fix the rows that do not comply with the constraint.

SELECT

SchemaName = S.name

,TableName = T.name

,ObjectName = FK.name

,ObjectType = 'FOREIGN KEY'

,FixSQL = 'ALTER TABLE ' + QUOTENAME(S.name) + '.' + QUOTENAME(T.name) + ' WITH CHECK CHECK CONSTRAINT ' + FK.name + ';'

FROM

sys.foreign_keys AS FK

INNER JOIN sys.tables AS T

ON FK.parent_object_id = T.object_id

INNER JOIN sys.schemas AS S

ON T.schema_id = S.schema_id

WHERE

FK.is_not_trusted = 1

AND FK.is_not_for_replication = 0

UNION ALL

SELECT

SchemaName = S.name

,TableName = T.name

,ObjectName = CC.name

,ObjectType = 'CHECK CONSTRAINT'

,FixSQL = 'ALTER TABLE ' + QUOTENAME(S.name) + '.' + QUOTENAME(T.name) + ' WITH CHECK CHECK CONSTRAINT ' + CC.name + ';'

FROM

sys.check_constraints AS CC

INNER JOIN sys.tables AS T

ON CC.parent_object_id = T.object_id

INNER JOIN sys.schemas AS S

ON T.schema_id = S.schema_id

WHERE

CC.is_not_trusted = 1

AND CC.is_not_for_replication = 0

AND CC.is_disabled = 0

ORDER BY

SchemaName ASC

,TableName ASC

,ObjectName ASC;Generally, there should not be a reason check constraint should be disabled. Check constraints can be disabled before bulk loading but should be enabled afterwards.

Poor data type choices can have significant impact on a database design and performance. A best practice is to right size the data type by understanding the data.

- A

varchar, ornvarcharthat is declared without an explicit length will use a default length. It is safer to be explicit. decimal,numeric. If no precision and scale are provided, SQL Server will use (18, 0), and that might not be what you want.- Explicitly define data type default lengths instead of excluding them.

[n][var]char(1)or[n][var]char(30)- The default length is 1 when n isn't specified in a data definition or variable declaration statement. When n isn't specified with the CAST function, the default length is 30.

datetime2(7)datetimeoffset(7)float(53)[var]binary(1)or[var]binary(30)- The default length is 1 when n isn't specified in a data definition or variable declaration statement. When n isn't specified with the CAST function, the default length is 30.

If the length of the type will be very small (size 1 or 2) and consistent, declare them as a type of fixed length, such as char, nchar, and binary.

When you use data types of variable length such as varchar, nvarchar, and varbinary, you incur an additional storage cost to track the length of the value stored in the data type. In addition, columns of variable length are stored after all columns of fixed length, which can have performance implications.

Columns, parameters, and variables used in a JOIN and WHERE clauses should have the same data type.

There are two situations where not doing this is going to hurt performance. When you have a mismatch of data types in a JOIN and a WHERE clause. SQL Server will need to convert one of them to match the other data type. This is called an implicit conversion.

What will it hurt

- Indexes will not be used correctly

- Missing index requests will not be logged in the Dynamic Management Views (DMVs)

- Extra CPU cycles are going to be required for the conversion

- Do not use the deprecated data types below.

textntextimagetimestamp

There is no good reason to use text or ntext. They were a flawed attempt at BLOB storage and are there only for backward compatibility. Likewise, the WRITETEXT, UPDATETEXT and READTEXT statements are also deprecated. All this complexity has been replaced by the varchar(MAX) and nvarchar(MAX) data types, which work with all of SQL Server’s string functions.

Use nvarchar(128) when storing database object names. Database object names include table names, column names, and view names.

sysname is a special data type used for database objects like database names, table names, column names, et cetera. When you need to store database, table or column names in a table use nvarchar(128).

- See Microsoft docs

Minimize the use of (n)varchar(MAX) on your tables.

(n)varchar(MAX) columns can be included in an index but not as a key. Queries will not be able to perform an index seek on this column.

(n)varchar(MAX) should only every be used if the size of the field is known to be over 8K for varchar and 4K for nvarchar.

Since SQL Server 2016 if the size of the cell is < 8K characters for varchar(MAX) it will be treated as Row data. If > 8K it will be treated as a Large Object (LOB) for storage purposes.

Use the bit data type for boolean columns, parameters, and variables. These columns will have names like IsSpecialSale.

Only use the float and real data types for scientific use cases.

The float (8 byte) and real (4 byte) data types are suitable only for specialist scientific use since they are approximate types with an enormous range (-1.79E+308 to -2.23E-308, 0 and 2.23E-308 to 1.79E+308, in the case of float). Any other use needs to be regarded as suspect, and a float or real used as a key or found in an index needs to be investigated. The decimal type is an exact data type and has an impressive range from -10^38+1 through 10^38-1. Although it requires more storage than the float or real types, it is generally a better choice.

Do not use the sql_variant data type.

The sql_variant type is not your typical data type. It stores values from a number of different data types and is used internally by SQL Server. It is hard to imagine a valid use in a relational database. It cannot be returned to an application via ODBC except as binary data, and it isn’t supported in Microsoft Azure SQL Database.

User-defined data types should be avoided whenever possible. They are an added processing overhead whose functionality could typically be accomplished more efficiently with simple data type variables, table variables, temporary tables, or JSON.

Use the datetimeoffset data type when time zone awareness is needed.

datetimeoffset defines a date that is combined with a time of a day that has time zone awareness and is based on a 24-hour clock. This allows you to use datetimeoffset AT TIME ZONE [timezonename] to convert the datetime to a local time zone.

Use this query to see all the timezone names:

SELECT * FROM sys.time_zone_info;Use the date data type when time values are not required or the smalldatetime data type when precision of minute is acceptable.

Even with data storage being so cheap, a saving in a data type adds up and makes comparison and calculation easier. When appropriate, use the date or smalldatetime type. Narrow tables perform better and use fewer resources.

Use the time data type when date values are not required.

Being frugal with memory is important for large tables, not only to save space but also to reduce I/O activity during access. When appropriate, use the time or smalldatetime type. Queries too are generally simpler on the appropriate data type.

Use the decimal data type instead of the money data type.

The money data type confuses the storage of data values with their display, though it clearly suggests, by its name, the sort of data held. Use decimal(19, 4) instead. It is proprietary to SQL Server.

money has limited precision (the underlying type is a bigint or in the case of smallmoney an int) so you can unintentionally get a loss of precision due to roundoff errors. While simple addition or subtraction is fine, more complicated calculations that can be done for financial reports can show errors.

Although the money data type generally takes less storage and takes less bandwidth when sent over networks, it is generally far better to use a data type such as the decimal(19, 4) type that is less likely to suffer from rounding errors or scale overflow.

Use the nvarchar data type instead of the varchar data type for Unicode National Language Character Set values. Kevin in Chinese is 凯文. You cannot store 凯文 in a varchar column, it will be stored as ??. You must use a nvarchar column to be safely stored and queried.

You can't require everyone to stop using national characters or accents any more. Names are likely to have accents in them if spelled properly, and international addresses and language strings will almost certainly have accents and national characters that can’t be represented by 8-bit ASCII!

Column names to check:

- FirstName

- MiddleName

- LastName

- FullName

- Suffix

- Title

- ContactName

- CompanyName

- OrganizationName

- BusinessName

- Line1

- Line2

- CityName

- TownName

- StateName

- ProvinceName

An email address column, parameter, or variable should be set to nvarchar(254) to leave 2 characters for <> if needed.

This was accepted by the IETF following submitted erratum. The original version of RFC 3696 described 320 as the maximum length, but John Klensin subsequently accepted an incorrect value, since a Path is defined as Path = "<" [ A-d-l ":" ] Mailbox ">"

A URL column, parameter, or variable should be set to nvarchar(2083).

RFC 2616, "Hypertext Transfer Protocol -- HTTP/1.1," does not specify any requirement for URL length. A web server will should return RFC 7231, section 6.5.12: 414 URI Too Long

The Internet Explorer browser has the shortest allowed URL max length in the address bar at 2083 characters.

TODO: - See URL or URI Naming

Use Case Exception

If your application requires more than 2083 characters, ensure the users are not utilizing IE and increase the nvarchar length.

Your database objects (tables, views, stored procedures, functions, triggers, users, roles, schemas, static data, ...) should be in a version control system.

Source control lets you see who made what changes, when, and why. Automate SQL changes during deployment. Rollback any changes you don't want. Source control helps when you develop branch features.

Most importantly, you work from a single source of truth, greatly reducing the risk of downtime at deployment.

Your choices are Redgate SQL Source Control or a SSDT (SQL Server Data Tools) database project backed by DevOps or GitHub depending on the client requirements.

It is recommended that the database project source control be kept separate from the application code. Database and reporting team members might/should not need access to the app source code. The data and business intelligence development team might have their own changes (performance tuning, data warehouse, reporting) in a "dev" branch, but their version is not ready for production yet.

Reasons to use a monorepo for multiple projects

- All the projects have the same permissions and access needs

- All the projects are fully aligned in terms of versioning

- All the projects must always be deployed together by the CI/CD pipeline(s)

- All the projects cannot be built separately unless they are in the same solution

If you choose to use a monorepo, please ensure you have accounted for the issues that can occur if not all the bullet points are fully true.

- See Should the Database and Application projects be in the same Repository? article by Eitan Blumin.

You should have a Development, Testing & Production environment.

SQL Server development is a continuous process to avoid the issues caused by development and reduce the risks of blocking business.

Accidents happen! Imagine you accidentally made an update to thousands of records that you cannot undo without hours of work. Imagine that you do not have access to original data before you changed it. Feeling scared yet? This is where a development and test environment save effort.

A development environment allows developers to program and perform test ensuring their code is correct before pushing to a centralized testing environment for UAT (User Acceptance Testing) or staging for production.

When a feature is marked deprecated, it means:

- The feature is in maintenance mode only. No new changes will be made, including those related to addressing inter-operability with new features.

- Microsoft strives not to remove a deprecated feature from future releases to make upgrades easier. However, under rare situations, they may choose to permanently discontinue (remove) the feature from SQL Server if it limits future innovations.

- For new development work, do not use deprecated features. For existing aplications, plan to modify applications that currently use these features as soon as possible.

A discontinued feature means it is no longer available. If a discontinued feature is being used, when we upgrade to a new SQL Server version, the code will break.

See:

- Deprecated database engine features in SQL Server 2022

- Deprecated database engine features in SQL Server 2019

- Deprecated Database Engine Features in SQL Server 2017

- No Discontinued features in SQL Server 2017

- Deprecated Database Engine Features in SQL Server 2016

- Discontinued Database Engine Functionality in SQL Server 2014

- Discontinued Features in SQL Server 2012

Put data access code (T-SQL) in the database and not in the application/ORM layer.

Utilizing an ORM or application generated database commands has downsides. ORM or app generated SQL code that sticks with basic CRUD (Create, Read, Update, Delete) are not too bad but they do not generate reasonable queries for reporting or beyond the basic CRUD commands.

The benefit of using stored procedures is with query performance tuning. Eventually the queries will outgrow the ORMs ability to generate optimal queries and execution plans. You end up attempting to rewrite software code to force the ORM to produce more performant SQL code.

With stored procedures there are a lot more options to tune a query. You can split up complex SQL code so the SQL Server engine can generate better execution plans, convert to dynamic SQL, use different hints, trace flags, and isolation levels, etc.

The free opensource sp_CRUDGen can be utilized to create eleven different stored procedures from basic your Create, Read, Update, Delete, Upsert stored procedures to extremely advanced safe dynamic Search stored procedures otherwise known as optional parameters, kitchen sink, Swiss army knife, catch-all queries. The generated stored procedure code utilizes the SQL Server community best practices.

- See Database-First: Stored Procedure in Entity Framework

- See Simple C# Data Access with Dapper and SQL - Minimal API Project Part 1

- See Simple C# Data Access with Dapper and SQL - Minimal API Project Part 2

The BEGIN...END control-of-flow statement block is optional for stored procedures and IF statements but is required for multi-line user-defined functions. It is best to avoid confusion and be consistent and specific.

Always included BEGIN...END blocks for IF statements with a semicolon to terminate the statement. Using BEGIN...END in an IF statement and semicolon is critical in some cases.

Use this:

IF @@TRANCOUNT > 0

BEGIN

ROLLBACK TRANSACTION;

END;Instead of this:

IF @@TRANCOUNT > 0

ROLLBACK TRANSACTION;Instead of this:

IF @@TRANCOUNT > 0 ROLLBACK TRANSACTION;Use this:

CREATE OR ALTER PROCEDURE dbo.BusinessEntityAction

AS

BEGIN

SET NOCOUNT ON;

/* [T-SQL GOES HERE] */

END;

GOInstead of this:

CREATE OR ALTER PROCEDURE dbo.BusinessEntityAction

AS

SET NOCOUNT ON;

/* [T-SQL GOES HERE] */

GOUse SET NOCOUNT ON; at the beginning of your SQL batches, stored procedures for report output and triggers in production environments, as this suppresses messages like '(10000 row(s) affected)' after executing INSERT, UPDATE, DELETE and SELECT statements. This improves the performance of stored procedures by reducing network traffic.

SET NOCOUNT ON; is a procedural level instructions and as such there is no need to include a corresponding SET NOCOUNT OFF; command as the last statement in the batch.

SET NOCOUNT OFF; can be helpful when debugging your queries in displaying the number of rows impacted when performing INSERTs, UPDATEs and DELETEs.

CREATE OR ALTER PROCEDURE dbo.PersonInsert

@PersonId int

,@JobTitle nvarchar(100)

,@HiredOn date

,@Gender char(1)

AS

BEGIN

SET NOCOUNT ON;

INSERT INTO

dbo.Person (PersonId, JobTitle, HiredOn, Gender)

SELECT

PersonId = @PersonId,

JobTitle = 'CEO',

HiredOn = '5/2/1971',

Gender = 'M';

END;Using WITH (NOLOCK), WITH (READUNCOMMITTED) and TRANSACTION ISOLATION LEVEL READ UNCOMMITTED does not mean your SELECT query does not take out a lock, it does not obey locks.

Can NOLOCK be used when the data is not changing? Nope. It has the same problems.

Problems

- You can see rows twice

- You can skip rows altogether

- You can see records that were never committed

- Your query can fail with an error "could not continue scan with

NOLOCKdue to data movement"

These problems will cause non-reproducible errors. You might end up blaming the issue on user error which will not be accurate.

Only use NOLOCK when the application stakeholders understand the problems and approve of them occurring. Get their approval in writing to CYA.

Alternatives

- Index Tuning

- Use

READ COMMITTED SNAPSHOT ISOLATION (RCSI)- On by default in Azure SQL Server databases, local SQL Servers should be checked for TempDB latency before enabling

So, while DISTINCT and GROUP BY are identical in a lot of scenarios, there is one case where the GROUP BY approach leads to better performance (at the cost of less clear declarative intent in the query itself).

You also might be using SELECT DISTINCT to mask a JOIN problem. It is much better to determine why rows are being duplicated and fix the problem.

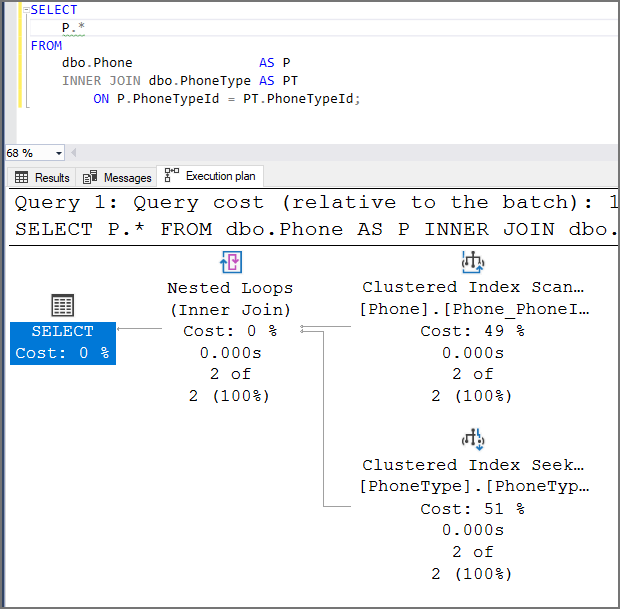

Use aliases for your table names in most multi-table T-SQL statements; a useful convention is to make the alias out of the first or first two letters of each capitalized table name, e.g., `Phone" becomes "P" and "PhoneType" becomes "PT".

If you have duplicate two-character aliases, choose a third letter from each. "PhoneType" becomes "PHT" and "PlaceType" becomes "PLT".

Ensure you use AS between the table name and the alias like [TABLE-Name] AS [ALIAS]. AS should be used to make it clear that something is being given a new name and easier to spot. This rule also applies for column aliases if the column aliases is not listed first.

Do not use table aliases like S1, S2, S3. Make your aliases meaningful.

SELECT

P.PhoneId

,P.PhoneNumber

,PT.PhoneTypeName

FROM

dbo.Phone AS P

INNER JOIN dbo.PhoneType AS PT

ON P.PhoneTypeId = PT.PhoneTypeId;Because the SQL Server Query Optimizer typically selects the best execution plan for a query, we recommend that table hints and query hints be used only as a last resort by experienced developers and database administrators.

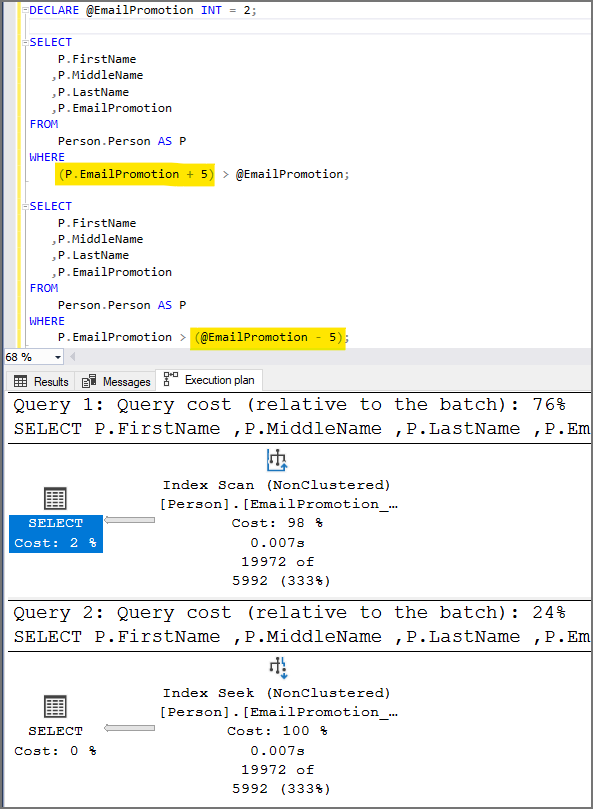

Search ARGument..able. Avoid having a column or variable used within an expression or used as a function parameter. You will get a table scan instead of an index seek which will hurt performance.

Another issue with non-sargable queries besides the forced table scan is SQL Server will not be able to provide a recommended index.

- TODO: See Using Missing Indexes Recommendations

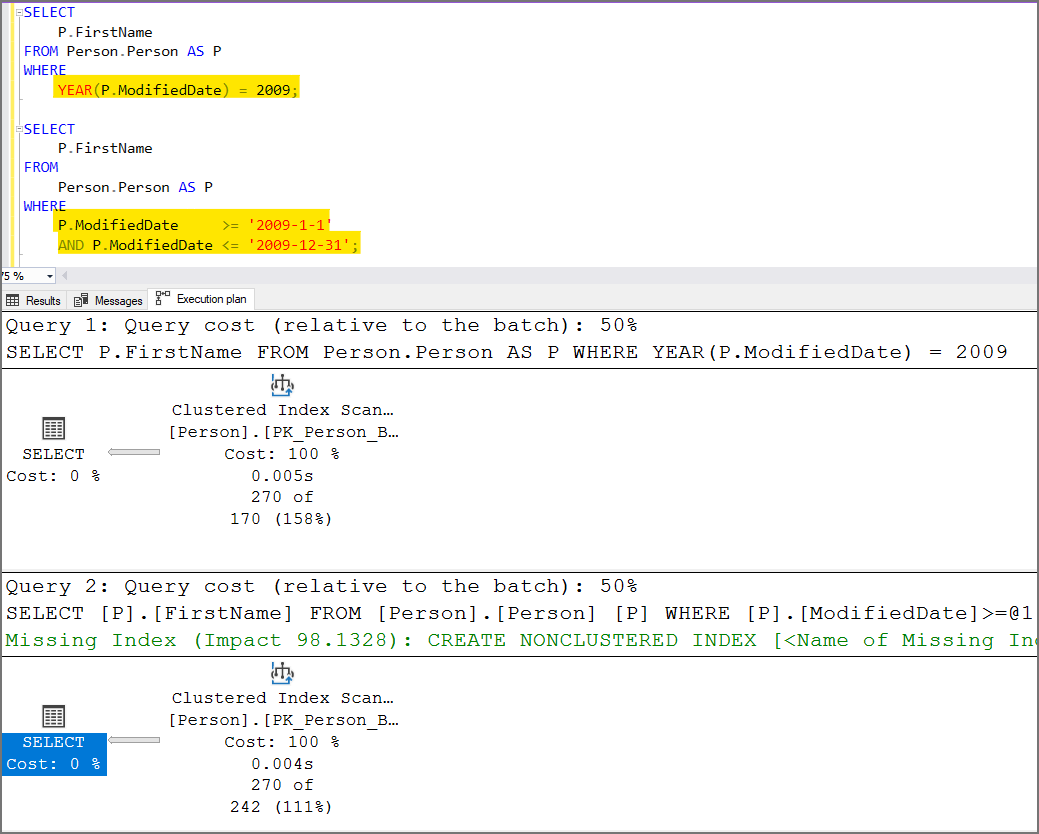

Changing the WHERE clause to not use the YEAR() function and doing a bit more typing allows SQL Server to understand what you want it to do.

SQL Server is the second most expensive sorting system. Make all attempts to sort the data results in the application layer.

2020 Pricing

- Oracle Enterprise Edition = $47,500 per core

- Microsoft SQL Server Enterprise Edition = $7,128 per core

- ⬇

- Microsoft Access $159.99

You should always explicitly define ascending (ASC) or descending (DESC) for ORDER BY expressions. This allows others who review or edit the code to not know that ASC is the default sort order.

SELECT

P.PersonId,

P.Title,

P.FirstName,

P.LastName,

P.NickName

FROM

Application.Person AS P

ORDER BY

P.LastName ASC;

/* ↑ Look Here */Use the column name in your ORDER BY expressions instead of the column number. The use of constants in the ORDER BY is deprecated for future removal. It makes it difficult to understand the code at a glance and leads to issue when alter the order of the columns in the SELECT.

Instead Of:

SELECT

P.FirstName

,P.LastName

FROM

dbo.Person AS P

ORDER BY

2 ASC;

/* ↑ Look Here */Do This:

SELECT

P.FirstName

,P.LastName

FROM

dbo.Person AS P

ORDER BY

P.LastName ASC;

/* ↑ Look Here */Queries should be parameterized so SQL Server can reuse the execution plan. Ensure your application code is not hardcoding the query values in the WHERE clause or using some sort of dynamic T-SQL and not doing it correctly.

A temporary fix is to force parameterization until you can refactor the code and include some @parameters.

To determine whether an expression is NULL, use IS NULL or IS NOT NULL instead of using comparison operators such as = or <>. Comparison operators return UNKNOWN when either or both arguments are NULL.

DECLARE @NULL AS varchar(10) = NULL;

DECLARE @NOTNULL AS varchar(10) = 'Some Value';

SELECT 'No Result' WHERE @NULL = NULL;

SELECT 'No Result' WHERE @NULL <> NULL;

SELECT 'Has Result' WHERE @NULL IS NULL;

SELECT 'Has Result' WHERE @NOTNULL IS NOT NULL;Use EXISTS or NOT EXISTS if referencing a subquery, and IN or NOT IN when using a list of literal values.

EXISTS used to be faster than IN when comparing data from a subquery. Using EXISTS would stop searching as soon as it found the first row. IN would collect all the results. SQL Server got smarter and treats EXISTS and IN the same way so performance is not an issue.

Option 1 (NOT IN)

The NOT IN operator causes issues when the subquery data contains NULL values. NOT EXIST or LEFT JOIN / IS NULL should be used below.

SELECT

P.FirstName

,P.LastName

FROM

dbo.Person AS P

WHERE

P.PersonId NOT IN (SELECT L.PersonId FROM dbo.List AS L);Option 2 (LEFT JOIN / IS NULL) Option 2 & 3 are semantically equivalent

SELECT

P.FirstName

,P.LastName

FROM

dbo.Person AS P

LEFT OUTER JOIN dbo.List AS L ON P.PersonId = L.PersonId

WHERE

L.PersonId IS NULL;Option 3 (NOT EXISTS) Option 2 & 3 are semantically equivalent

SELECT

P.FirstName

,P.LastName

FROM

dbo.Person AS P

WHERE

NOT EXISTS (SELECT * FROM dbo.List AS L WHERE P.PersonId = L.PersonId);Option 4 (IN)

Option 4 here uses IN with a list of literal values.

SELECT

EmailAddress

FROM

dbo.PersonEmail

WHERE

EmailTypeId IN (1, 2);You will not get an index seek using the percent wildcard (%) first in your search predicate. If you intend on performing this type of "Contains" or "Ends With" type of search, understand it will not be as performant.

SELECT

P.FirstName

,P.MiddleName

,P.LastName

FROM

dbo.Person AS P

WHERE

P.LastName LIKE '%son';Check WHERE clauses for columns that are not included in an index. Might want to exclude checking for tables with small (5k or less) number of records.

An example is if your SQL code uses the LastName column in the WHERE clause, you should ensure there is an index where the LastName column is the leading key on an index.

Check IN() predicates for columns that are not included in an index. Might want to exclude check for tables with small (5k or less) number of records.

When you utilize NOT IN in your WHERE clause the SQL Server optimizer will likely perform a table scan instead of an index seek. Index seeks are generally more performant than table scans.

Try rewriting the query to use LEFT OUTER JOIN and check for NULL on the right-handed side table.

Don't convert dates to strings to compare. Dates should be stored with the pattern YYYY-MM-DD. Not all are and string comparisons can provide the wrong results.

Transactions allow for database operations to be atomic. A group of related SQL commands that must all complete successfully or not at all and must be rolled back.

If you are performing a funds transfer and updating multiple bank account tables with debiting one and crediting the other, they must all complete successfully or there will be an imbalance.

- When

SET XACT_ABORT ON, if a T-SQL statement raises a run-time error, the entire transaction is terminated and rolled back. - When

SET XACT_ABORT OFF, in some cases only the T-SQL statement that raised the error is rolled back and the transaction continues processing. Depending upon the severity of the error, the entire transaction may be rolled back even when SET XACT_ABORT is OFF. OFF is the default setting in a T-SQL statement, while ON is the default setting in a trigger.

A use case for SET XACT_ABORT OFF is when debugging to trap an error.

SET NOCOUNT, XACT_ABORT ON;

/* Exclude SQL code that does not need to be inclued in the transaction. Keep transactions shrt. */

BEGIN TRY

BEGIN TRANSACTION;

UPDATE dbo.Account SET Balance = -100.00 WHERE AccountId = 1;

UPDATE dbo.Account SET Balance = 'not a decimal' WHERE AccountId = 2;

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

IF @@TRANCOUNT > 0

BEGIN

ROLLBACK TRANSACTION;

END;

/* Handle the error here, cleanup, et cetera.

In most cases it is best to bubble up (THROW) the error to the application/client to be displayed to the user and logged.

*/

THROW;

END CATCH;SET NOCOUNT, XACT_ABORT ON;

BEGIN TRY

DECLARE @ErrorMessageText nvarchar(2048);

BEGIN TRANSACTION;

/* Debit the first account */

UPDATE dbo.Account SET Balance = -100.00 WHERE AccountId = 1;

/* Check the first account was debited */

IF @@ROWCOUNT <> 1

BEGIN

SET @ErrorMessageText = N'The debited account failed to update.';

END;

/* Credit the second account */

UPDATE dbo.Account SET Balance = 100.00 WHERE AccountId = 2;

/* Check the second account was credited */

IF @@ROWCOUNT <> 1

BEGIN

SET @ErrorMessageText = N'The credited account failed to update.';

END;

/* Check if we have any errors to throw */

IF @ErrorMessageText <> ''

BEGIN

; THROW 50001, @ErrorMessageText, 1;

END;

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

IF @@TRANCOUNT > 0

BEGIN

ROLLBACK TRANSACTION;

END;

/* Handle the error here, cleanup, et cetera.

In most cases it is best to bubble up (THROW) the error to the application/client to be displayed to the user and logged.

*/

THROW;

END CATCH;- TODO: See Not Using SET XACT_ABORT ON

- TODO: See Not Using BEGIN END

- TODO: See Not Using Semicolon THROW

- TODO: See Using RAISERROR Instead of THROW

Do not use SET IMPLICIT_TRANSACTIONS ON.

The default behavior of SQL Servers is IMPLICIT_TRANSACTIONS OFF that does not keep TSQL commands open waiting for a ROLLBACK TRANSACTION or COMMIT TRANSACTION command. When OFF, we say the transaction mode is autocommit.

When IMPLICIT_TRANSACTIONS ON is used, it could appear that the command finished instantly, but there will be an exclusive lock on the row(s) until either a roll back or commit command is issued. This makes IMPLICIT_TRANSACTIONS ON not popular as they can cause considerable blocking and locking.

When a connection is operating in implicit transaction mode IMPLICIT_TRANSACTIONS ON, the instance of the SQL Server Database Engine automatically starts a new transaction after the current transaction is committed or rolled back. You do nothing to delineate the start of a transaction; you only commit or roll back each transaction. Implicit transaction mode generates a continuous chain of transactions.

- See Transaction locking and row versioning guide > Implicit Transactions

- See SET IMPLICIT_TRANSACTIONS ON Is One Hell of a Bad Idea

Locating the row to confirm it exists is doing the work twice.

IF EXISTS (SELECT * FROM dbo.Person WHERE PersonId = @PersonId)

BEGIN

UPDATE dbo.Person SET FirstName = @FirstName WHERE PersonId = @PersonId;

END;

ELSE

BEGIN

INSERT dbo.Person (PersonId, FirstName) VALUES (@PersonId, @FirstName);