
VTS Model Settings

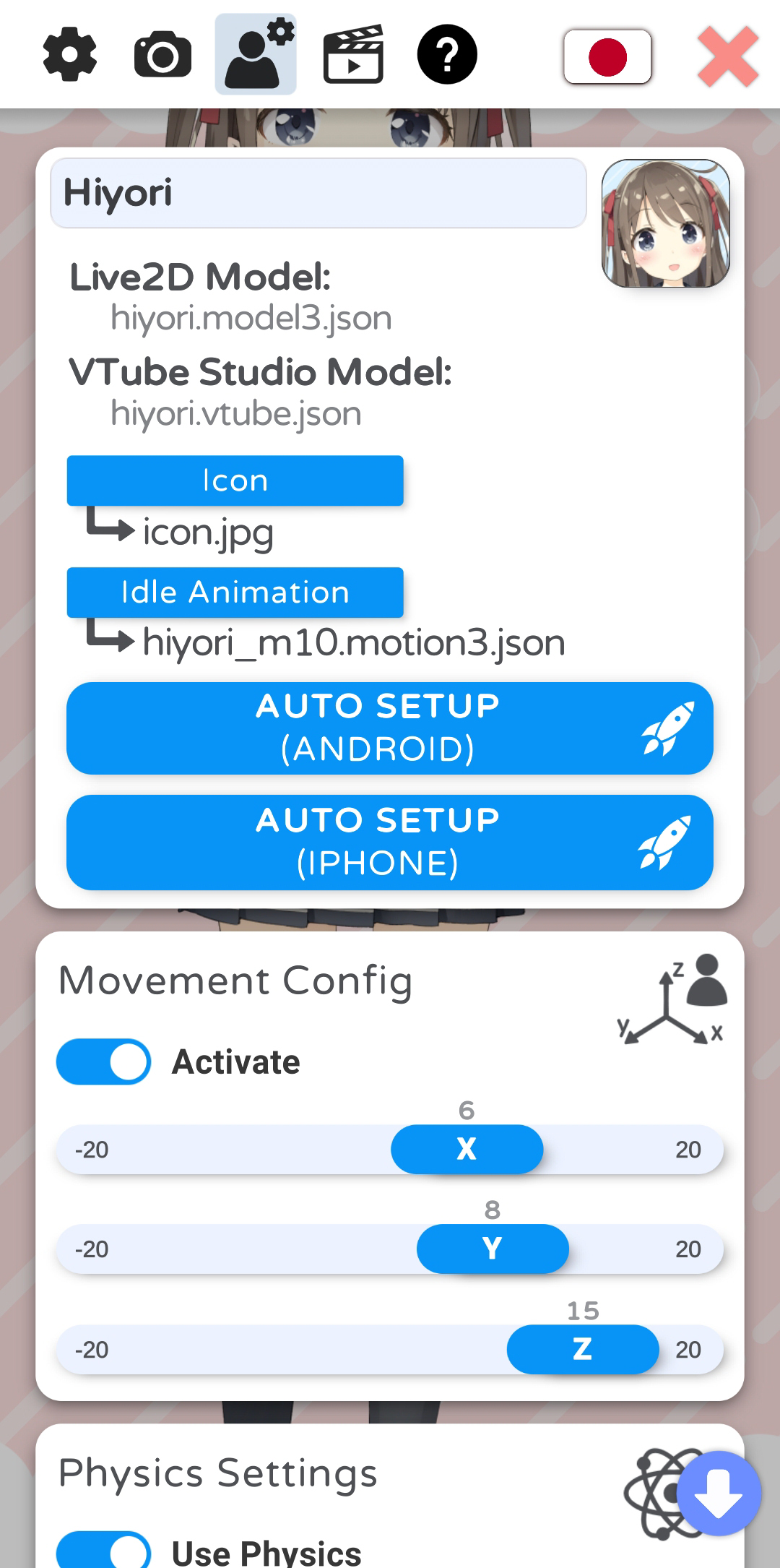

Once you have loaded a model, you will be able to access the model settings in the VTube Studio model config tab.

At the top, you can enter a name for your model. This name will be shown in the model selection bar. Below that, you can see the name of the Live2D model file and the automatically created VTube Studio model file (VTS model).

You can choose an icon (.jpg or .png; click the icon at the top right to open the file selection) and a default idle animation (.motion3.json) for your model. You can choose any file inside your model folder.

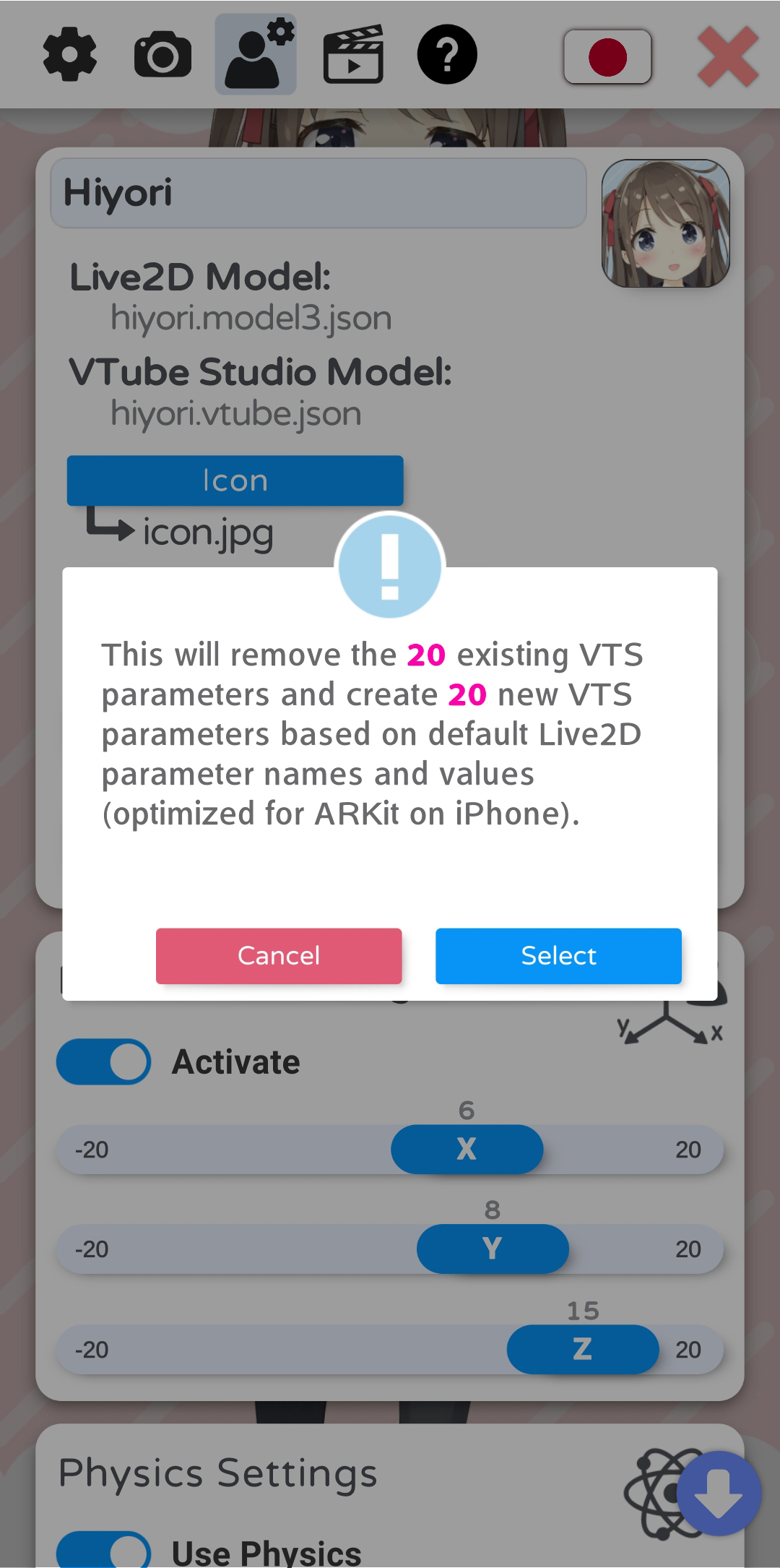

You can also run the auto-setup for your VTube Studio model. This will set up your model based on standard Live2D parameter names and values. For more information about these parameters, please see: https://docs.live2d.com/cubism-editor-manual/standard-parametor-list/#

If you have changed the standard parameter IDs or ranges, auto-setup will not be able to set up your model, and you'll have to do it manually. Even if you use auto-setup, you may have to fine-tune your VTS model to fit your Live2D model.
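As a rough illustration of why renamed parameter IDs break auto-setup, the Python sketch below pairs a few VTS input parameters with IDs from the Live2D standard parameter list and reports which standard IDs a model is missing. The specific pairs in `STANDARD_MAPPINGS` and the function `auto_setup` are hypothetical and only assume how such a setup might work; the sketch checks IDs only and does not check parameter ranges.

```python
# Hypothetical pairs of VTS input parameters and Live2D standard parameter IDs.
# The IDs are from the Live2D standard parameter list; which pairs VTube Studio's
# auto-setup really creates is an assumption made for illustration.
STANDARD_MAPPINGS = {
    "FaceAngleX": "ParamAngleX",
    "FaceAngleY": "ParamAngleY",
    "FaceAngleZ": "ParamAngleZ",
    "EyeOpenLeft": "ParamEyeLOpen",
    "EyeOpenRight": "ParamEyeROpen",
    "MouthOpen": "ParamMouthOpenY",
    "MouthSmile": "ParamMouthForm",
}


def auto_setup(model_param_ids):
    """Split the standard mappings into ones that can be created automatically
    and standard IDs the model lacks (those must be set up manually)."""
    available = set(model_param_ids)
    created = {}
    missing = []
    for vts_input, live2d_id in STANDARD_MAPPINGS.items():
        if live2d_id in available:
            created[vts_input] = live2d_id
        else:
            missing.append(live2d_id)
    return created, missing


# A model whose mouth-open parameter was renamed away from the standard ID.
created, missing = auto_setup([
    "ParamAngleX", "ParamAngleY", "ParamAngleZ",
    "ParamEyeLOpen", "ParamEyeROpen",
    "MyMouthOpen", "ParamMouthForm",
])
print(missing)  # ['ParamMouthOpenY']
```

Here the renamed parameter `MyMouthOpen` cannot be matched, so its mapping would have to be created by hand.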

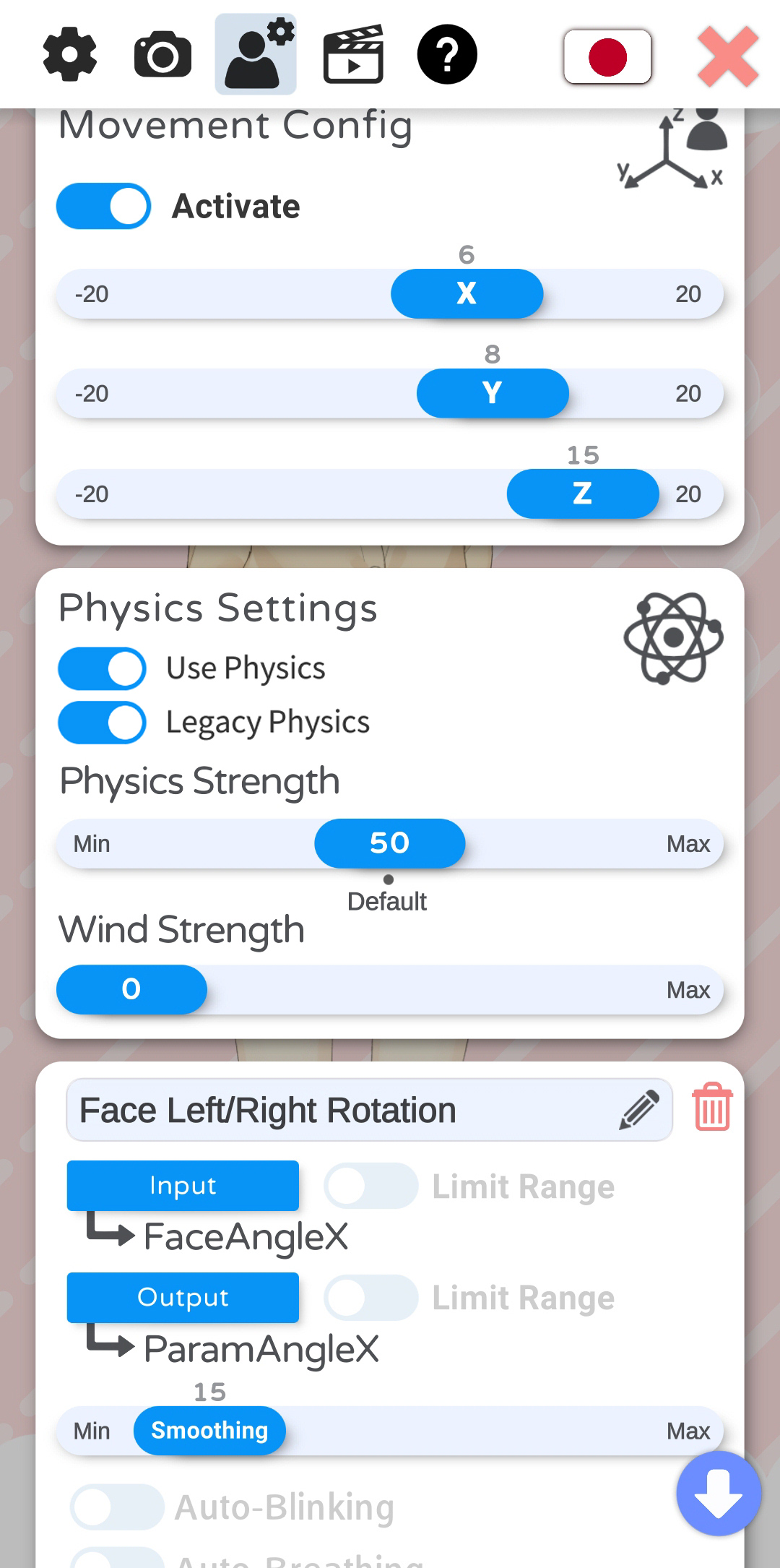

With the "Model Movement" settings, you can move the model left/right and closer to/further away from the screen based on your head position. With the sliders, you can configure how much the model is moved.

In the physics settings, you can boost or reduce the effect of your Live2D physics setup. You can also activate "wind", which randomly applies a wind-like force to the physics system. This is experimental and may not look good depending on how your model is set up.

VTube Studio used to ignore the Live2D physics setting "Effectiveness". This has been fixed in a recent update. To use the "Effectiveness" setting, make sure the "Legacy Physics" toggle is off.

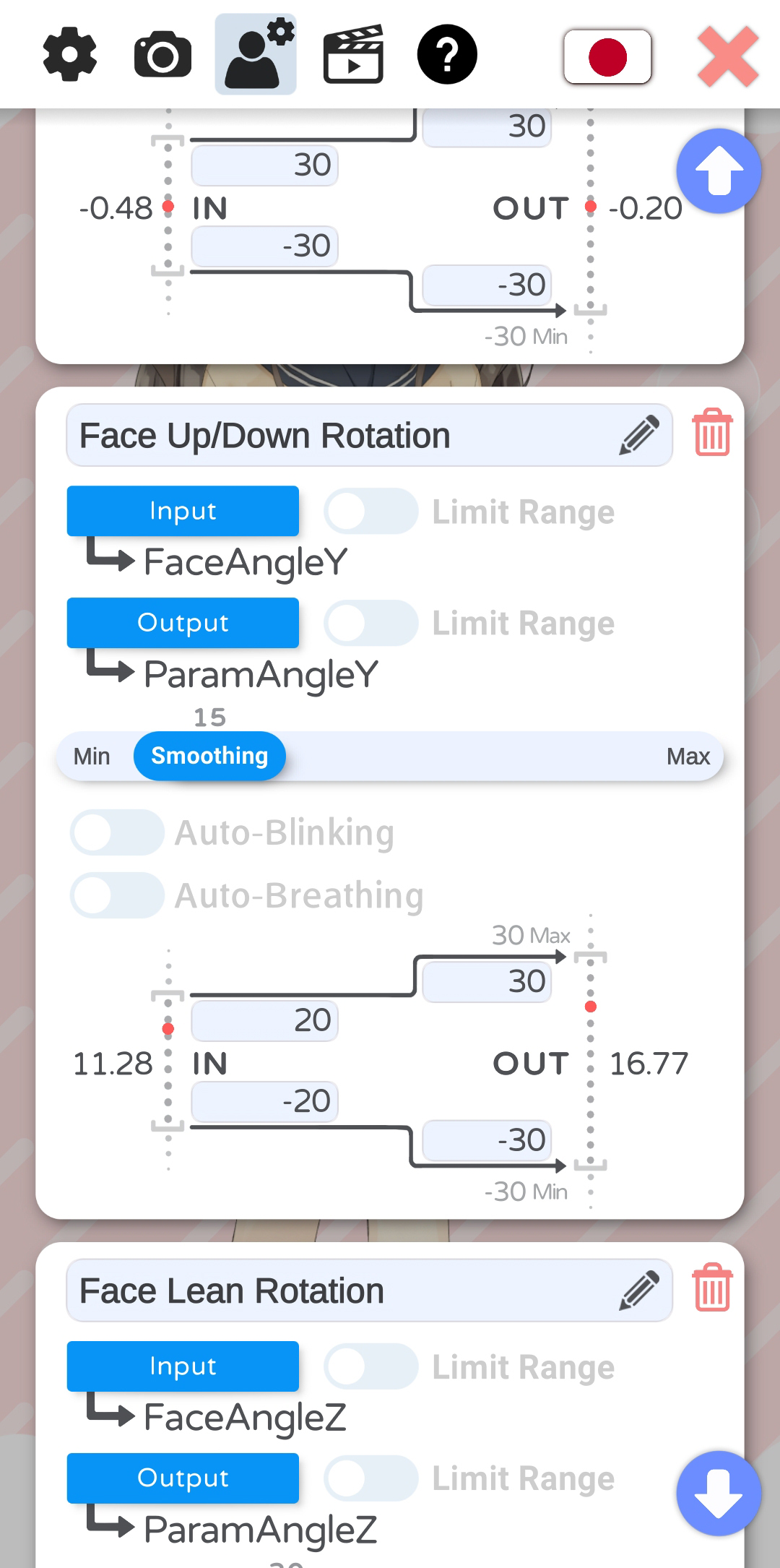

This is the most important part of your model settings. Here, you set up which face tracking parameters control which Live2D parameters.

The general idea is:

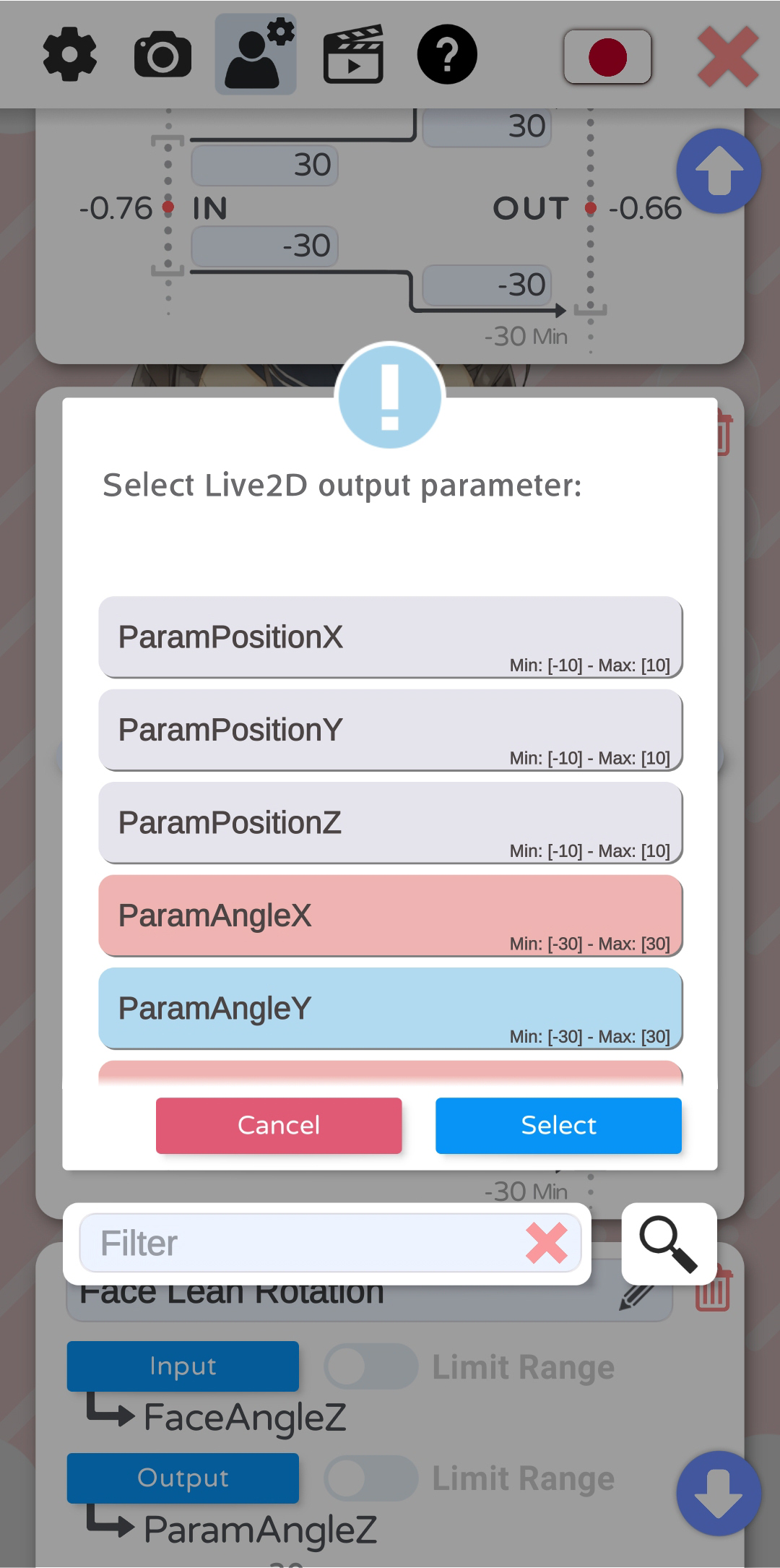

- You can freely map any INPUT parameter (face-tracking, mouse, etc.) to any OUTPUT parameter (Live2D parameter).

- How the values are mapped can be freely configured. For example, the input parameter MouthOpen is within the range [0, 1], with 0 being closed and 1 being all the way open. If your Live2D parameter (for example, one named ParamMouthOpen) has the range [-10, 10], you could map the ranges so that (IN, 0) becomes (OUT, -10) and (IN, 1) becomes (OUT, 10). Or you can map it any other way; there are no restrictions.

- The red dot will show where the current value is placed within the input and output range.

- By activating "Limit Range", you can make sure that the input or output values never exceed the range you set for the input or output. This will also cause the value to stop smoothly when approaching the limits.

- Generally, it is recommended to only change the output range and leave the input range as is.

- More smoothing will make the movements less shaky but will introduce lag. Experiment with the smoothing values until you find something that works for each parameter. On iOS, you will most likely need very little smoothing.

- Auto-breath will fade the output parameter up and down in a breath-like motion. No input parameter is required when using this option. Any input will be ignored.

- Auto-blink will randomly reduce the parameter to zero. This can be combined with an input parameter, but none is necessary.
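The mapping, range limiting, smoothing and auto-breath behavior described above can be sketched in Python. This is a minimal sketch under stated assumptions, not VTube Studio's actual implementation: `map_range` performs the linear mapping from the MouthOpen example, with "Limit Range" modelled as a hard clamp (the smooth slow-down near the limits is not reproduced); `Smoother` assumes an exponential moving average; and `auto_breath` assumes a cosine wave with a hypothetical 3-second period. All function and class names are hypothetical.

```python
import math


def map_range(value, in_min, in_max, out_min, out_max, limit=True):
    """Linearly map value from [in_min, in_max] to [out_min, out_max].
    With limit=True the position within the input range is clamped to [0, 1],
    so neither the input nor the output range is exceeded (hard clamp only)."""
    t = (value - in_min) / (in_max - in_min)
    if limit:
        t = max(0.0, min(1.0, t))
    return out_min + t * (out_max - out_min)


class Smoother:
    """Exponential moving average (assumed filter, not VTS's documented one).
    smoothing in [0, 1): 0 = no smoothing, values near 1 = heavy smoothing and more lag."""

    def __init__(self, smoothing):
        self.smoothing = smoothing
        self.value = None

    def update(self, target):
        if self.value is None:
            self.value = target  # first frame: start at the target value
        else:
            self.value = self.smoothing * self.value + (1 - self.smoothing) * target
        return self.value


def auto_breath(time_s, out_min, out_max, period_s=3.0):
    """Fade the output up and down in a breath-like wave; no input is used."""
    phase = (1 - math.cos(2 * math.pi * time_s / period_s)) / 2  # goes 0 -> 1 -> 0
    return out_min + phase * (out_max - out_min)


# MouthOpen [0, 1] mapped to a Live2D parameter with the range [-10, 10]
print(map_range(0.0, 0, 1, -10, 10))  # -10.0
print(map_range(1.0, 0, 1, -10, 10))  # 10.0
print(map_range(1.3, 0, 1, -10, 10))  # 10.0 (clamped by "Limit Range")
```

Raising `smoothing` toward 1 makes each new tracking frame count less, which reduces jitter at the cost of lag, the same trade-off described for the smoothing slider.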

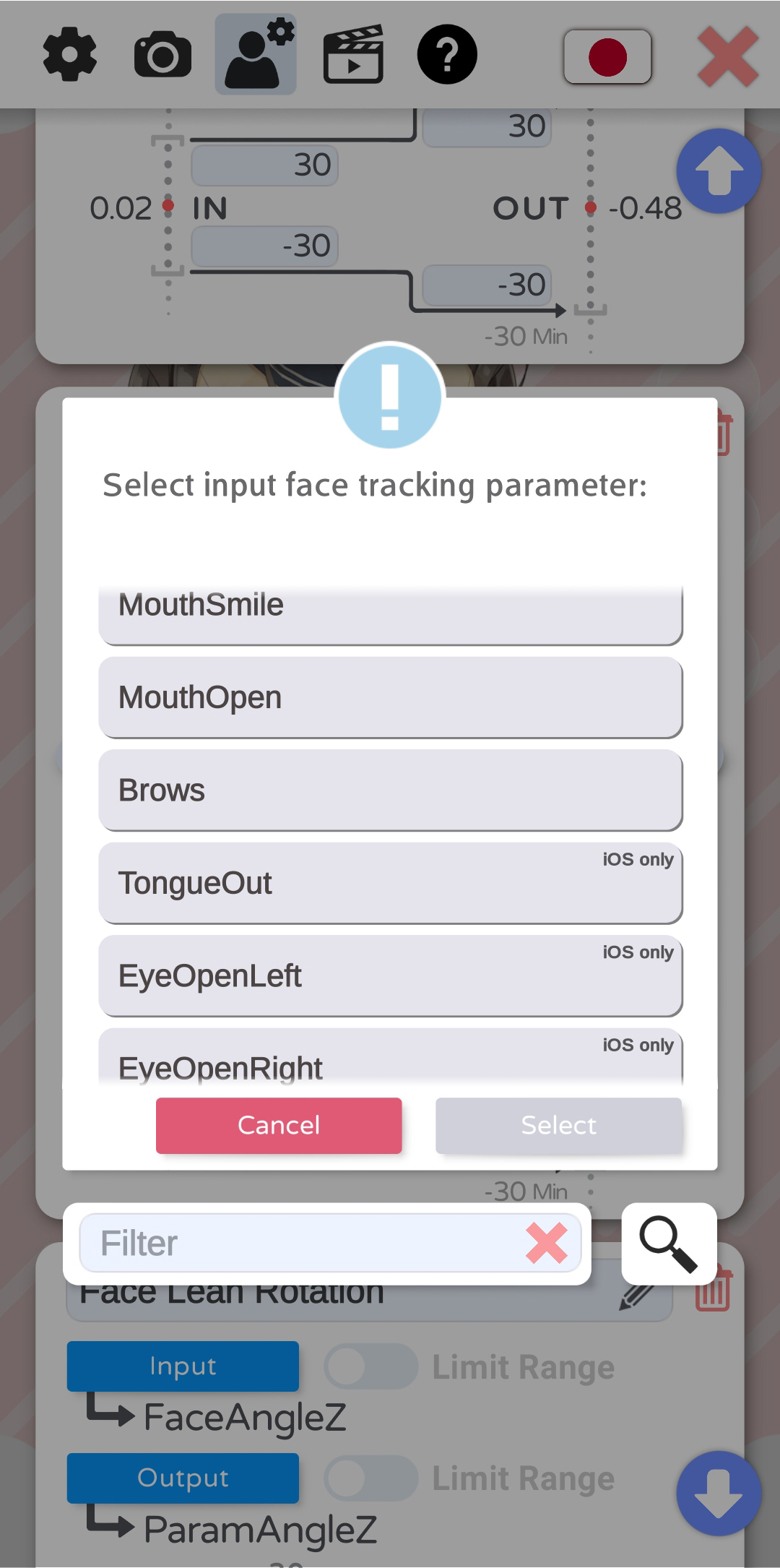

- As the input parameter, you can choose from a list of available parameters. iOS supports more face-tracking parameters than Android (see below).

- As the output parameter, you can choose any Live2D parameter. Each output parameter can only be chosen once; otherwise, multiple input parameters would write to the same output parameter.
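The rule that each output parameter may be chosen only once can be expressed as a small validation check. The Python sketch below is hypothetical: it represents a model config as a list of (input, output) pairs, which is an assumption and not the VTS model file format, and it also assumes that one input may drive several outputs.

```python
def validate_mappings(mappings):
    """mappings: list of (input_param, output_param) pairs (hypothetical format).
    Returns every output parameter that is used more than once.
    Repeated inputs are allowed in this sketch; repeated outputs are not."""
    seen = set()
    duplicates = []
    for _input, output in mappings:
        if output in seen and output not in duplicates:
            duplicates.append(output)
        seen.add(output)
    return duplicates


config = [
    ("FaceAngleX", "ParamAngleX"),
    ("FaceAngleX", "ParamBodyAngleX"),  # same input, different output: fine
    ("MouthOpen", "ParamMouthOpenY"),
    ("VoiceVolume", "ParamMouthOpenY"),  # second writer to the same output
]
print(validate_mappings(config))  # ['ParamMouthOpenY']
```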

If your model doesn't move even though the Live2D parameter clearly moves in the VTS model config, the most likely cause is an expression, an animation or the physics system overwriting the value from face tracking. This is explained in detail in the chapter:

Interaction between Animations, Expressions, Face Tracking, Physics, etc.

VTube Studio currently supports the following input parameters that can be mapped to output Live2D parameters. The iOS-only parameters are also marked accordingly in the app.

| Parameter Name | Explanation | iOS | Android | Webcam |
|---|---|---|---|---|
| FacePositionX | horizontal position of face | ✔️ | ✔️ | ✔️ |
| FacePositionY | vertical position of face | ✔️ | ✔️ | ✔️ |
| FacePositionZ | distance from camera | ✔️ | ✔️ | ✔️ |
| FaceAngleX | face right/left rotation | ✔️ | ✔️ | ✔️ |
| FaceAngleY | face up/down rotation | ✔️ | ✔️ | ✔️ |
| FaceAngleZ | face lean rotation | ✔️ | ✔️ | ✔️ |
| MouthSmile | how much you're smiling | ✔️ | ✔️ | ✔️ |
| MouthOpen | how open your mouth is | ✔️ | ✔️ | ✔️ |
| Brows | up/down for both brows combined | ✔️ | ✔️ | ✔️ |
| MousePositionX | x-pos. of mouse or finger within set range | ✔️ | ✔️ | ✔️ |
| MousePositionY | y-pos. of mouse or finger within set range | ✔️ | ✔️ | ✔️ |
| TongueOut | stick out your tongue | ✔️ | ❌ | ❌ |
| EyeOpenLeft | how open your left eye is | ✔️ | ❌ | ✔️ |
| EyeOpenRight | how open your right eye is | ✔️ | ❌ | ✔️ |
| EyeLeftX | eye-tracking | ✔️ | ❌ | ✔️ |
| EyeLeftY | eye-tracking | ✔️ | ❌ | ✔️ |
| EyeRightX | eye-tracking | ✔️ | ❌ | ✔️ |
| EyeRightY | eye-tracking | ✔️ | ❌ | ✔️ |
| CheekPuff | detects when you puff out your cheeks | ✔️ | ❌ | ❌ |
| BrowLeftY | up/down for left brow | ✔️ | ❌ | ✔️ |
| BrowRightY | up/down for right brow | ✔️ | ❌ | ✔️ |
| VoiceFrequency* | depends on detected phonemes | ❌ | ❌ | ✔️ |
| VoiceVolume* | how loud microphone volume is | ❌ | ❌ | ✔️ |
| VoiceVolumePlusMouthOpen* | MouthOpen + VoiceVolume | ❌ | ❌ | ✔️ |
| VoiceFrequencyPlusMouthSmile* | MouthSmile + VoiceFrequency | ❌ | ❌ | ✔️ |
| MouthX | Mouth X position (shift mouth left/right) | ✔️ | ❌ | ❌ |
| FaceAngry | detects angry face (EXPERIMENTAL, not recommended) | ✔️ | ❌ | ❌ |

\* These parameters cannot be used directly on iOS/Android, but they work on PC/Mac when a smartphone is used for tracking. Parameters like VoiceVolumePlusMouthOpen default to the value from MouthOpen when the model is loaded on the smartphone.
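The fallback described in the footnote can be sketched as follows. The table only states that VoiceVolumePlusMouthOpen combines MouthOpen and VoiceVolume; summing the two and clamping to [0, 1] is an assumption, and the function name is hypothetical. `voice_volume=None` stands for the case where no microphone value exists, such as a model loaded on the smartphone itself.

```python
def voice_volume_plus_mouth_open(mouth_open, voice_volume=None):
    """Hypothetical combination of MouthOpen and VoiceVolume, both in [0, 1].
    Without a microphone value (voice_volume is None) it falls back to MouthOpen,
    as the manual describes for models loaded on the smartphone.
    Sum-and-clamp is an assumed formula, not VTube Studio's documented one."""
    if voice_volume is None:
        return mouth_open
    return min(1.0, mouth_open + voice_volume)


print(voice_volume_plus_mouth_open(0.3))        # 0.3 (smartphone: MouthOpen only)
print(voice_volume_plus_mouth_open(0.25, 0.5))  # 0.75 (PC/Mac with microphone)
print(voice_volume_plus_mouth_open(0.8, 0.5))   # 1.0 (clamped)
```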

If you have any questions that this manual doesn't answer, please ask in the VTube Studio Discord!!

- Android vs. iPhone vs. Webcam

- Getting Started

- Introduction & Requirements

- Preparing your model for VTube Studio

- Where to get models?

- Restore old VTS Versions

- Controlling multiple models with one device

- Copy config between models

- Loading your own Backgrounds

- Recoloring Models and Items

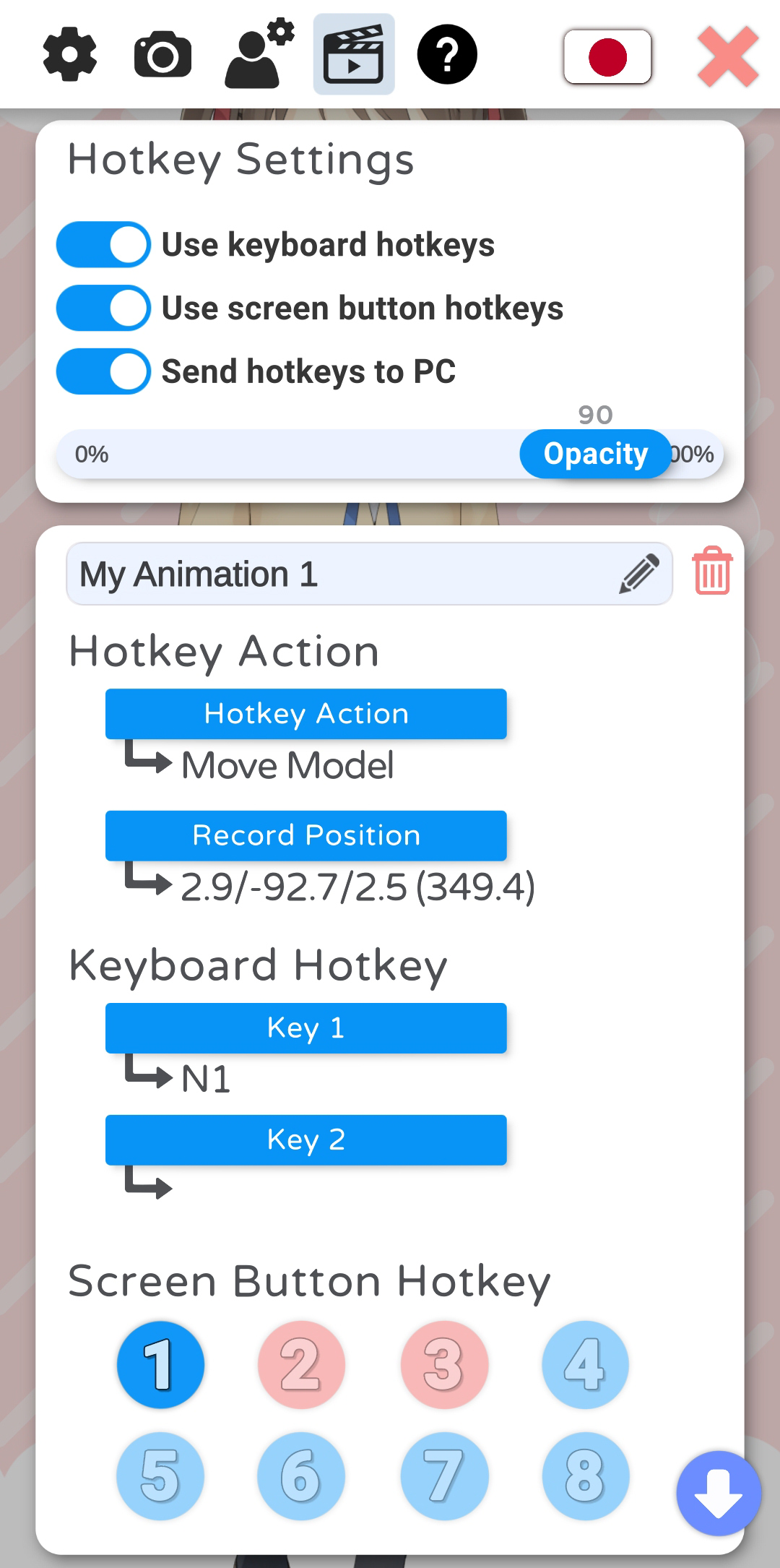

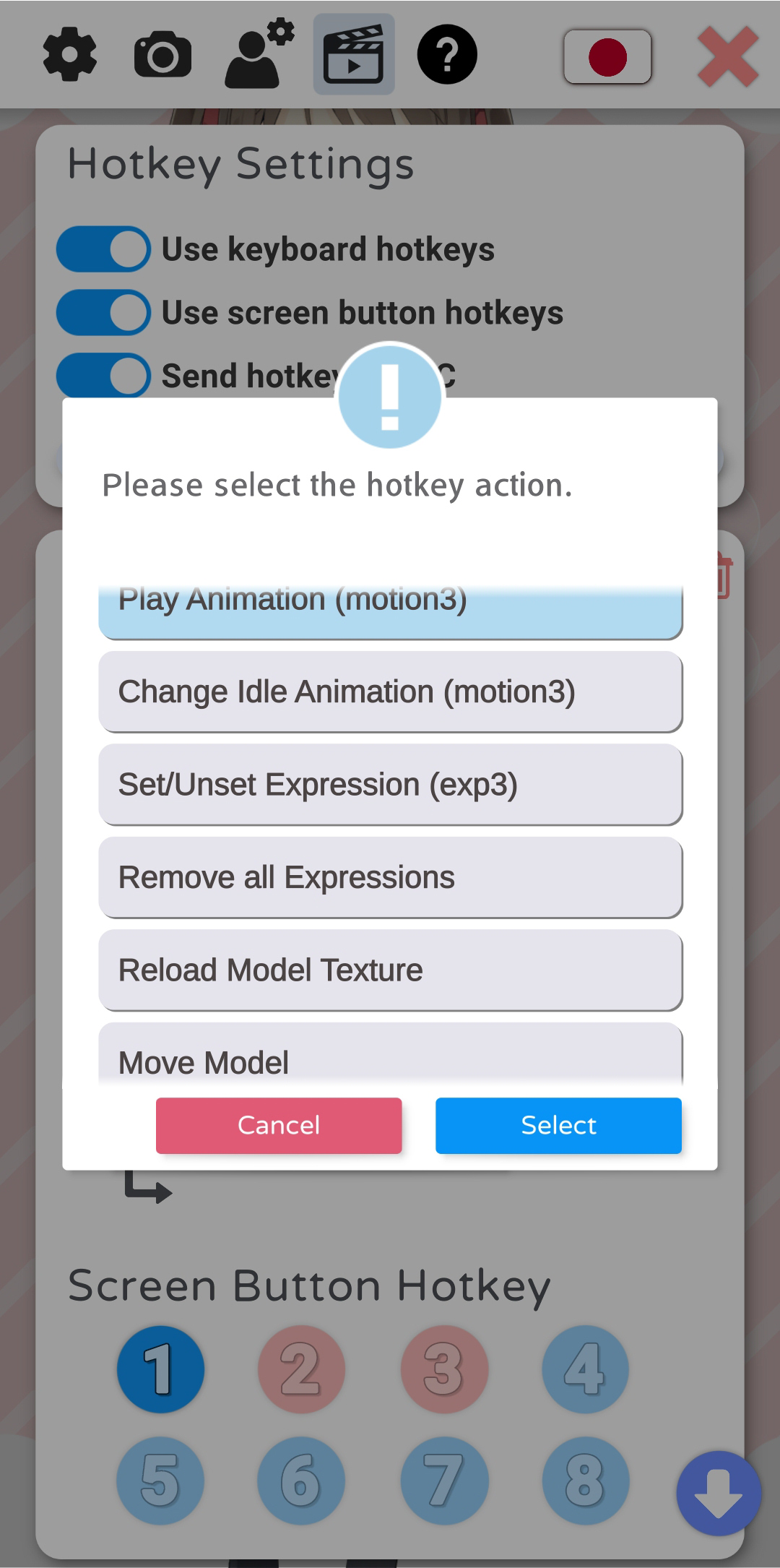

- Record Animations

- Recording/Streaming with OBS

- Sending data to VSeeFace

- Starting as Admin

- Starting without Steam

- Streaming to Mac/PC

- VNet Multiplayer Overview

- Steam Workshop

- Taking/Sharing Screenshots

- Live2D Cubism Editor Communication

- Lag Troubleshooting

- Connection Troubleshooting

- Webcam Troubleshooting

- Crash Troubleshooting

- Known Issues

- FAQ

- VTube Studio Settings

- VTS Model Settings

- VTube Studio Model File

- Visual Effects

- Twitch Interaction

- Twitch Hotkey Triggers

- Spout2 Background

- Expressions ("Stickers"/"Emotes")

- Animations

- Interaction between Animations, Tracking, Physics, etc.

- Google Mediapipe Face Tracker

- NVIDIA Broadcast Face Tracker

- Tobii Eye-Tracker

- Hand-Tracking

- Lipsync

- Item System

- Live2D-Items

- Item Scenes & Item Hotkeys

- Add Special ArtMesh Functionality

- Display Light Overlay

- VNet Security

- Plugins (YouTube, Twitch, etc.)

- Web-Items

- Web-Item Plugins