Mediapipe Webcam Tracker

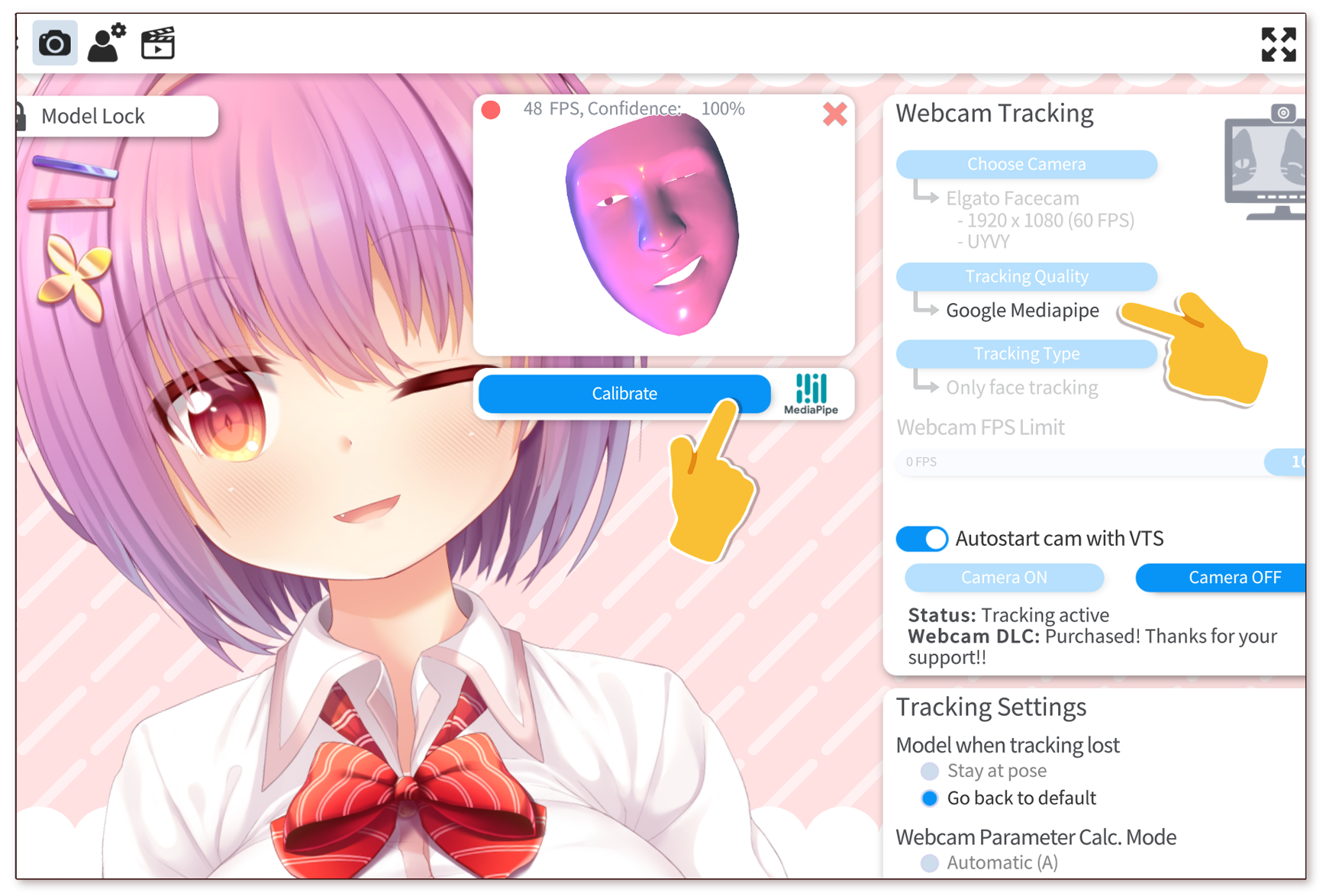

In addition to tracking using OpenSeeFace and the NVIDIA RTX webcam tracker, VTube Studio now supports high-quality webcam tracking using the new Google Mediapipe Webcam tracker. The quality is mostly comparable to the NVIDIA tracker but no special hardware is required.

The Google Mediapipe tracker is currently only available on Windows, but unlike the NVIDIA tracker, no special GPU is required. The tracker is included directly in VTube Studio, so no DLC or further download is needed.

The Google Mediapipe tracker can be started just like the regular OpenSeeFace webcam tracker: select "[Google] Mediapipe Tracker" after clicking the "Tracking Type" button (see 1).

1. Click this button to select the Google Mediapipe tracker.

2. The tracking type. Only "Face Tracking" will be available here if you use the NVIDIA Broadcast tracker. Hand tracking is currently not supported when using the NVIDIA Broadcast tracker.

3. If you want to limit the tracking FPS, use this slider. Since VTube Studio interpolates the tracking data to 60 FPS, running the tracking above 30 FPS does not add much in terms of model movement quality.

4. Click this once after the tracking has initialized. Click while looking at the camera to set a neutral face pose and neutral blendshapes.

5. Click this to turn the external tracking preview window with the creepy face on or off (see 6).

6. The external tracking preview window. Can be useful for checking if the blendshapes are properly calibrated. Rendering the 3D face takes some CPU/GPU resources, so you should keep it off when you don't need it. You can also close this window with the normal "X" button to turn it off.
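To illustrate why tracking above 30 FPS adds little once the data is interpolated to 60 FPS, here is a minimal sketch of the general idea. It is not VTube Studio's actual implementation, which this manual does not describe. It assumes plain linear interpolation between timestamped samples, and the `interpolate` function and the sample values are hypothetical.

```python
from bisect import bisect_right


def interpolate(samples, t):
    """Linearly interpolate a tracked value at time t (seconds).

    `samples` is a list of (timestamp, value) pairs sorted by timestamp.
    Times outside the sampled range are clamped to the first/last value.
    Assumption: linear interpolation; the real app may smooth differently.
    """
    times = [ts for ts, _ in samples]
    i = bisect_right(times, t)
    if i == 0:
        return samples[0][1]
    if i == len(samples):
        return samples[-1][1]
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    alpha = (t - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


# Hypothetical head-angle samples from a tracker running at 30 FPS.
tracked = [(k / 30, angle) for k, angle in enumerate([0.0, 3.0, 6.0, 3.0])]

# The model is rendered at 60 FPS, so every second frame falls between
# two tracker samples and gets an in-between value.
frames = [interpolate(tracked, k / 60) for k in range(7)]
print(frames)
```

With this kind of scheme, the in-between frames are already filled in smoothly, so doubling the tracker rate mostly replaces interpolated values with measured ones that are usually very close to them.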

Once the tracker has initialized and tracking has started, you can click the gray gear icon in the tracking preview screen (bottom right) to show the Windows webcam config window.

It is free (with the watermark) just like the regular webcam tracking. No additional purchase needed.

In terms of tracking quality, it's very similar. Mouth-tracking is very accurate and so is eye-tracking. Blink-tracking works well. Wink-tracking is fine too, but as always, depending on your eye shape/size it may work better or worse. You'll just have to try it out and see for yourself.

The face rotation range of the NVIDIA tracker is also very big so it's unlikely to lose tracking in most situations, even with fast face movement. I tried shaking my head really fast until I got a headache and never managed to make it lose tracking.

It supports the same parameters you have with iOS tracking, including "Mouth X" and individual brow tracking, but it DOES NOT currently support cheek puff and tongue-tracking. The tracker is in active development, so it is likely that those will be added eventually.

The performance impact depends on your CPU/GPU, but it should be fairly minimal, even at high framerates. On my RTX 3080, running the tracker at 60 FPS and at 1920x1080 webcam resolution, both CPU and GPU usage stay below 10%.

A lot of the tracking system (but not all of it) can be executed on the machine-learning-optimized tensor-cores of your NVIDIA RTX GPU. They aren't used by most video games, so they don't interfere with your gaming.

That isn't currently supported with this tracker but NVIDIA are experimenting with various tracking types, so I would not be surprised if it's added eventually. I don't have any info about it though.

I've tried it with glasses and the eye-tracking and wink-tracking seem to work fairly well. Of course, it depends on the webcam placement and lighting too.

Of course! It is available and will always stay available as the main webcam tracker. This is just another option you can try out.

Parameter ranges/setups will be as close to iOS tracking as I can get them, so all your existing models should work without any changes (except cheek-puff and tongue-tracking since they are not available with this tracker for now).

If you have any questions that this manual doesn't answer, please ask in the VTube Studio Discord.

