This repository is a Tensorflow implementation of the Semantic Image Inpainting with Deep Generative Models, CVPR2017.

- tensorflow 1.9.0

- python 3.5.3

- numpy 1.14.2

- pillow 5.0.0

- matplotlib 2.0.2

- scipy 0.19.0

- opencv 3.2.0

- pyamg 3.3.2

- celebA

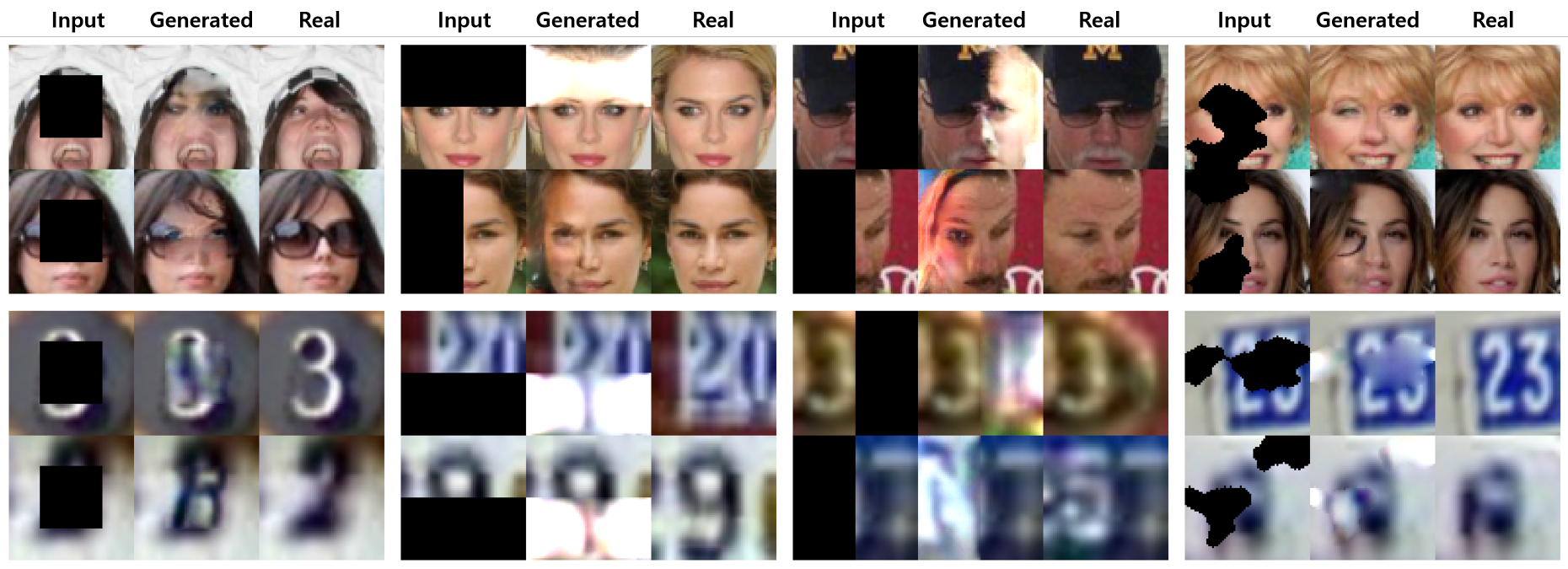

- Note: The following resutls are cherry-picked images

- SVHN

- Note: The following resutls are cherry-picked images

- Failure Examples

- celebA Dataset

Use the following command to download

CelebAdataset and copy theCelebAdataset on the corresponding file as introduced in Directory Hierarchy information. Manually remove approximately 2,000 images from the dataset for testing, put them on thevalfolder and others in the `train' folder.

python download.py celebA

- SVHN Dataset

Download SVHN data from The Street View House Numbers (SVHN) Dataset website. Two mat files you need to download aretrain_32x32.matandtest_32x32.matin Cropped Digits Format 2.

.

│ semantic_image_inpainting

│ ├── src

│ │ ├── dataset.py

│ │ ├── dcgan.py

│ │ ├── download.py

│ │ ├── inpaint_main.py

│ │ ├── inpaint_model.py

│ │ ├── inpaint_solver.py

│ │ ├── main.py

│ │ ├── solver.py

│ │ ├── mask_generator.py

│ │ ├── poissonblending.py

│ │ ├── tensorflow_utils.py

│ │ └── utils.py

│ Data

│ ├── celebA

│ │ ├── train

│ │ └── val

│ ├── svhn

│ │ ├── test_32x32.mat

│ │ └── train_32x32.mat

src: source codes of the Semantic-image-inpainting

We need two sperate stages to utilize semantic image inpainting model.

- First, independently train DCGAN on your dataset as the original DCGAN process.

- Second, use pretrained DCGAN and semantic-image-inpainting model to restore the corrupt images.

Same generator and discriminator networks of the DCGAN are used as described in Alec Radford's paper, except that batch normalization of training mode is used in training and test mode that we found to get more stalbe results. Semantic image inpainting model is implemented as moodoki's semantic_image_inpainting. Some bugs and different implementations of the original paper are fixed.

Use main.py to train a DCGAN network. Example usage:

python main.py --is_train=true

gpu_index: gpu index, default:0batch_size: batch size for one feed forward, default:256dataset: dataset name for choice [celebA|svhn], default:celebAis_train: training or inference mode, default:Falselearning_rate: initial learning rate, default:0.0002beta1: momentum term of Adam, default:0.5z_dim: dimension of z vector, default:100iters: number of interations, default:200000print_freq: print frequency for loss, default:100save_freq: save frequency for model, default:10000sample_freq: sample frequency for saving image, default:500sample_size: sample size for check generated image quality, default:64load_model: folder of save model that you wish to test, (e.g. 20180704-1736). default:None

Use main.py to evaluate a DCGAN network. Example usage:

python main.py --is_train=false --load_model=folder/you/wish/to/test/e.g./20180704-1746

Please refer to the above arguments.

Use inpaint_main.py to utilize semantic-image-inpainting model. Example usage:

python inpaint_main.py --dataset=celebA \

--load_model=DCGAN/model/you/want/to/use/e.g./20180704-1746 \

--mask_type=center

gpu_index': gpu index, default:0`dataset: dataset name for choice [celebA|svhn], default:celebAlearning_rate: learning rate to update latent vector z, default:0.01momentum: momentum term of the NAG optimizer for latent vector, default:0.9z_dim: dimension of z vector, default:100lamb: hyper-parameter for prior loss, default:3is_blend: blend predicted image to original image, default:truemask_type: mask type choice in [center|random|half|pattern], default:centerimg_size: image height or width, default:64iters: number of iterations to optimize latent vector, default:1500num_try: number of random samples, default:20print_freq: print frequency for loss, default:100sample_batch: number of sampling images, default:2load_model: saved DCGAN model that you with to test, (e.g. 20180705-1736), default:None

- Content Loss

- Prior Loss

- Total Loss

@misc{chengbinjin2018semantic-image-inpainting,

author = {Cheng-Bin Jin},

title = {semantic-image-inpainting},

year = {2018},

howpublished = {\url{https://github.com/ChengBinJin/semantic-image-inpainting}},

note = {commit xxxxxxx}

}

- This project borrowed some code from carpedm20 and moodoki.

- Some readme formatting was borrowed from Logan Engstrom

Copyright (c) 2018 Cheng-Bin Jin. Contact me for commercial use (or rather any use that is not academic research) (email: [email protected]). Free for research use, as long as proper attribution is given and this copyright notice is retained.