diff --git a/docs/DICTIONARY.md b/docs/DICTIONARY.md

new file mode 100644

index 00000000000000..8a2b67ba4d2800

--- /dev/null

+++ b/docs/DICTIONARY.md

@@ -0,0 +1,36 @@

+# Terminology Dictionary

+

+This guide standardizes terminology for Netdata's documentation and communications. When referring to Netdata mechanisms or concepts, use these terms and definitions to ensure clarity and consistency.

+

+When the context is clear, we can omit the "Netdata" prefix for brevity.

+

+## Core Components

+

+| Term | Definition |

+|------------------------|--------------------------------------------------------------------------|

+| **Agent** (**Agents**) | The core monitoring software that collects, processes and stores metrics |

+| **Cloud** | The centralized platform for managing and visualizing Netdata metrics |

+

+## Database

+

+| Term | Definition |

+|----------------------|----------------------------------------------------|

+| **Tier** (**Tiers**) | Database storage layers with different granularity |

+| **Mode(s)** | The operating modes of the Database, such as `dbengine` and `ram` |

+

+## Streaming

+

+| Term | Definition |

+|--------------------------|-------------------------------------------------------------|

+| **Parent** (**Parents**) | An Agent that receives metrics from other Agents (Children) |

+| **Child** (**Children**) | An Agent that streams metrics to another Agent (Parent) |

+

+## Machine Learning

+

+| Term | Abbreviation | Definition |

+|-------------------------|:------------:|-------------------------------------------------------------------------------------------------|

+| **Machine Learning** | ML | An umbrella term for Netdata's ML-powered features |

+| **Model(s)** | | Capitalized when referring to the ML Models Netdata uses |

+| **Anomaly Detection** | | The capability to identify unusual patterns in metrics |

+| **Metric Correlations** | | Filters the dashboard to show the metrics with the most significant changes in the selected time window |

+| **Anomaly Advisor** | | The interface and tooling for analyzing detected anomalies |

\ No newline at end of file

diff --git a/docs/dashboards-and-charts/visualization-date-and-time-controls.md b/docs/dashboards-and-charts/visualization-date-and-time-controls.md

index 3e2b6dbdca4d21..0515f11bae57ef 100644

--- a/docs/dashboards-and-charts/visualization-date-and-time-controls.md

+++ b/docs/dashboards-and-charts/visualization-date-and-time-controls.md

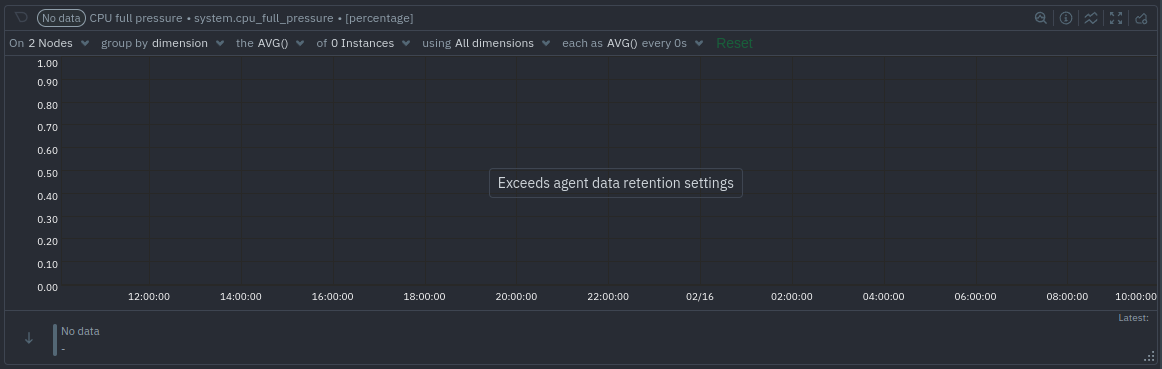

@@ -81,7 +81,7 @@ beyond stored historical metrics, you'll see this message:

-At any time, [configure the internal TSDB's storage capacity](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md) to expand your

+At any time, [configure the internal TSDB's storage capacity](/src/database/README.md) to expand your

depth of historical metrics.

### Timezone selector

diff --git a/docs/deployment-guides/deployment-strategies.md b/docs/deployment-guides/deployment-strategies.md

index ffb6357ac1a9f7..078cc015f41aa9 100644

--- a/docs/deployment-guides/deployment-strategies.md

+++ b/docs/deployment-guides/deployment-strategies.md

@@ -2,7 +2,7 @@

## Deployment Options Overview

-This section provides a quick overview for a few common deployment options for Netdata.

+This section provides a quick overview of a few common deployment options for Netdata.

You can read about [Standalone Deployment](/docs/deployment-guides/standalone-deployment.md) and [Deployment with Centralization Points](/docs/deployment-guides/deployment-with-centralization-points.md) in the documentation inside this section.

@@ -24,9 +24,9 @@ An API key is a key created with `uuidgen` and is used for authentication and/or

#### Child config

-As mentioned above, we do not recommend to connect the Child to Cloud directly during your setup.

+As mentioned above, we do not recommend connecting the Child to Cloud directly during your setup.

-This is done in order to reduce the footprint of the Netdata Agent on your production system, as some capabilities can be switched OFF for the Child and kept ON for the Parent.

+This is done to reduce the footprint of the Netdata Agent on your production system, as some capabilities can be switched OFF for the Child and kept ON for the Parent.

In this example, Machine Learning and Alerting are disabled for the Child, so that the Parent can take the load. We also use RAM instead of disk to store metrics with limited retention, covering temporary network issues.

@@ -34,14 +34,14 @@ In this example, Machine Learning and Alerting are disabled for the Child, so th

On the child node, edit `netdata.conf` by using the [edit-config](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) script and set the following parameters:

-```yaml

+```text

[db]

# https://github.com/netdata/netdata/blob/master/src/database/README.md

# none = no retention, ram = some retention in ram

mode = ram

# The retention in seconds.

- # This provides some tolerance to the time the child has to find a parent in

- # order to transfer the data. For IoT this can be lowered to 120.

+ # This provides some tolerance to the time the child has to find a parent

+ # to transfer the data. For IoT, this can be lowered to 120.

retention = 1200

# The granularity of metrics, in seconds.

# You may increase this to lower CPU resources.

@@ -56,8 +56,7 @@ On the child node, edit `netdata.conf` by using the [edit-config](/docs/netdata-

# Disable remote access to the local dashboard

bind to = lo

[plugins]

- # Uncomment the following line to disable all external plugins on extreme

- # IoT cases by default.

+ # Uncomment the following line to disable all external plugins by default in extreme IoT cases.

# enable running new plugins = no

```

@@ -65,7 +64,7 @@ On the child node, edit `netdata.conf` by using the [edit-config](/docs/netdata-

To edit `stream.conf`, use again the [edit-config](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) script and set the following parameters:

-```yaml

+```text

[stream]

# Stream metrics to another Netdata

enabled = yes

@@ -77,7 +76,7 @@ To edit `stream.conf`, use again the [edit-config](/docs/netdata-agent/configura

#### Parent config

-For the Parent, besides setting up streaming, this example also provides configuration for multiple [tiers of metrics storage](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md), for 10 Children, with about 2k metrics each. This allows for:

+For the Parent, besides setting up streaming, this example also provides configuration for multiple [tiers of metrics storage](/src/database/README.md#tiers), for 10 Children, with about 2k metrics each. This allows for:

- 1s granularity at tier 0 for 1 week

- 1m granularity at tier 1 for 1 month

@@ -92,7 +91,7 @@ Requiring:

On the Parent, edit `netdata.conf` by using the [edit-config](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) script and set the following parameters:

-```yaml

+```text

[db]

mode = dbengine

dbengine tier backfill = new

@@ -124,19 +123,19 @@ On the Parent node, edit `stream.conf` by using the [edit-config](/docs/netdata-

```yaml

[API_KEY]

- # Accept metrics streaming from other Agents with the specified API key

- enabled = yes

+ # Accept metrics streaming from other Agents with the specified API key

+ enabled = yes

```

### Active–Active Parents

-In order to setup active–active streaming between Parent 1 and Parent 2, Parent 1 needs to be instructed to stream data to Parent 2 and Parent 2 to stream data to Parent 1. The Child Agents need to be configured with the addresses of both Parent Agents. An Agent will only connect to one Parent at a time, falling back to the next upon failure. These examples use the same API key between Parent Agents and for connections for Child Agents.

+To set up active–active streaming between Parent 1 and Parent 2, Parent 1 needs to be instructed to stream data to Parent 2 and Parent 2 to stream data to Parent 1. The Child Agents need to be configured with the addresses of both Parent Agents. An Agent will only connect to one Parent at a time, falling back to the next upon failure. These examples use the same API key between Parent Agents and for connections for Child Agents.

On both Netdata Parent and all Child Agents, edit `stream.conf` by using the [edit-config](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) script:

#### stream.conf on Parent 1

-```yaml

+```text

[stream]

# Stream metrics to another Netdata

enabled = yes

@@ -147,11 +146,12 @@ On both Netdata Parent and all Child Agents, edit `stream.conf` by using the [ed

[API_KEY]

# Accept metrics streams from Parent 2 and Child Agents

enabled = yes

+

```

#### stream.conf on Parent 2

-```yaml

+```text

[stream]

# Stream metrics to another Netdata

enabled = yes

@@ -165,7 +165,7 @@ On both Netdata Parent and all Child Agents, edit `stream.conf` by using the [ed

#### stream.conf on Child Agents

-```yaml

+```text

[stream]

# Stream metrics to another Netdata

enabled = yes

@@ -193,7 +193,7 @@ We also suggest that you:

For increased security, user management and access to our latest features, tools and troubleshooting solutions.

-2. [Change how long Netdata stores metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md)

+2. [Change how long Netdata stores metrics](/src/database/README.md#modes)

To control Netdata's memory use, when you have a lot of ephemeral metrics.

diff --git a/docs/exporting-metrics/enable-an-exporting-connector.md b/docs/exporting-metrics/enable-an-exporting-connector.md

index 16fbe0b9bc4ac2..4dc57ba579eb82 100644

--- a/docs/exporting-metrics/enable-an-exporting-connector.md

+++ b/docs/exporting-metrics/enable-an-exporting-connector.md

@@ -9,7 +9,7 @@ the OpenTSDB and Graphite connectors.

>

> When you enable the exporting engine and a connector, the Netdata Agent exports metrics _beginning from the time you

> restart its process_, not the entire

-> [database of long-term metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md).

+> [database of long-term metrics](/src/database/README.md).

Once you understand how to enable a connector, you can apply that knowledge to any other connector.

diff --git a/docs/glossary.md b/docs/glossary.md

index cd253efef3cef4..ca8b64ca387c35 100644

--- a/docs/glossary.md

+++ b/docs/glossary.md

@@ -35,7 +35,7 @@ Use the alphabetized list below to find the answer to your single-term questions

- [**Cloud** or **Netdata Cloud**](/docs/netdata-cloud/README.md): Netdata Cloud is a web application that gives you real-time visibility for your entire infrastructure. With Netdata Cloud, you can view key metrics, insightful charts, and active alerts from all your nodes in a single web interface.

-- [**Collector**](/src/collectors/README.md#collector-architecture-and-terminology): A catch-all term for any Netdata process that gathers metrics from an endpoint.

+- [**Collector**](/src/collectors/README.md): A catch-all term for any Netdata process that gathers metrics from an endpoint.

- [**Community**](https://community.netdata.cloud/): As a company with a passion and genesis in open-source, we are not just very proud of our community, but we consider our users, fans, and chatters to be an imperative part of the Netdata experience and culture.

@@ -75,7 +75,7 @@ Use the alphabetized list below to find the answer to your single-term questions

## I

-- [**Internal plugins**](/src/collectors/README.md#collector-architecture-and-terminology): These gather metrics from `/proc`, `/sys`, and other Linux kernel sources. They are written in `C` and run as threads within the Netdata daemon.

+- [**Internal plugins**](/src/collectors/README.md): These gather metrics from `/proc`, `/sys`, and other Linux kernel sources. They are written in `C` and run as threads within the Netdata daemon.

## K

@@ -91,11 +91,10 @@ Use the alphabetized list below to find the answer to your single-term questions

- [**Metrics Exporting**](/docs/exporting-metrics/README.md): Netdata allows you to export metrics to external time-series databases with the exporting engine. This system uses a number of connectors to initiate connections to more than thirty supported databases, including InfluxDB, Prometheus, Graphite, ElasticSearch, and much more.

-- [**Metrics Storage**](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md): Upon collection the collected metrics need to be either forwarded, exported or just stored for further treatment. The Agent is capable to store metrics both short and long-term, with or without the usage of non-volatile storage.

+- [**Metrics Storage**](/src/database/README.md#modes): Once collected, metrics need to be forwarded, exported, or stored for further processing. The Agent can store metrics both short- and long-term, with or without non-volatile storage.

- [**Metrics Streaming Replication**](/docs/observability-centralization-points/README.md): Each node running Netdata can stream the metrics it collects, in real time, to another node. Metric streaming allows you to replicate metrics data across multiple nodes, or centralize all your metrics data into a single time-series database (TSDB).

-- [**Module**](/src/collectors/REFERENCE.md#enable-and-disable-a-specific-collection-module): A type of collector.

## N

@@ -112,7 +111,7 @@ metrics, troubleshoot complex performance problems, and make data interoperable

- [**Obsoletion**(of nodes)](/docs/dashboards-and-charts/nodes-tab.md): Removing nodes from a space.

-- [**Orchestrators**](/src/collectors/README.md#collector-architecture-and-terminology): External plugins that run and manage one or more modules. They run as independent processes.

+- [**Orchestrators**](/src/collectors/README.md): External plugins that run and manage one or more modules. They run as independent processes.

## P

diff --git a/docs/netdata-agent/configuration/common-configuration-changes.md b/docs/netdata-agent/configuration/common-configuration-changes.md

index 0eda7dd863b7e0..de5abffeba5753 100644

--- a/docs/netdata-agent/configuration/common-configuration-changes.md

+++ b/docs/netdata-agent/configuration/common-configuration-changes.md

@@ -19,36 +19,7 @@ changes reflected in those visualizations due to the way Netdata Cloud proxies m

### Increase the long-term metrics retention period

-Read our doc on [increasing long-term metrics storage](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md) for details.

-

-### Reduce the data collection frequency

-

-Change `update every` in

-the [`[global]` section](/src/daemon/config/README.md#global-section-options)

-of `netdata.conf` so

-that it is greater than `1`. An `update every` of `5` means the Netdata Agent enforces a _minimum_ collection frequency

-of 5 seconds.

-

-```text

-[global]

- update every = 5

-```

-

-Every collector and plugin has its own `update every` setting, which you can also change in the `go.d.conf`,

-`python.d.conf` or `charts.d.conf` files, or in individual collector configuration files. If the `update

-every` for an individual collector is less than the global, the Netdata Agent uses the global setting. See

-the [enable or configure a collector](/src/collectors/REFERENCE.md#enable-and-disable-a-specific-collection-module)

-doc for details.

-

-### Disable a collector or plugin

-

-Turn off entire plugins in

-the [`[plugins]` section](/src/daemon/config/README.md#plugins-section-options)

-of

-`netdata.conf`.

-

-To disable specific collectors, open `go.d.conf`, `python.d.conf` or `charts.d.conf` and find the line

-for that specific module. Uncomment the line and change its value to `no`.

+Read our doc on [increasing long-term metrics storage](/src/database/README.md#tiers) for details.

## Modify alerts and notifications

diff --git a/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md b/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md

index 26abcb38ee45a4..7b698b9768c660 100644

--- a/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md

+++ b/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md

@@ -17,16 +17,16 @@ Netdata for production use.

The following table summarizes the effect of each optimization on the CPU, RAM and Disk IO utilization in production.

-| Optimization | CPU | RAM | Disk IO |

-|-----------------------------------------------------------------------------------------------------------------------------------|--------------------|--------------------|--------------------|

-| [Use streaming and replication](#use-streaming-and-replication) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

-| [Disable unneeded plugins or collectors](#disable-unneeded-plugins-or-collectors) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

-| [Reduce data collection frequency](#reduce-collection-frequency) | :heavy_check_mark: | | :heavy_check_mark: |

-| [Change how long Netdata stores metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md) | | :heavy_check_mark: | :heavy_check_mark: |

-| [Use a different metric storage database](/src/database/README.md) | | :heavy_check_mark: | :heavy_check_mark: |

-| [Disable machine learning](#disable-machine-learning) | :heavy_check_mark: | | |

-| [Use a reverse proxy](#run-netdata-behind-a-proxy) | :heavy_check_mark: | | |

-| [Disable/lower gzip compression for the Agent dashboard](#disablelower-gzip-compression-for-the-dashboard) | :heavy_check_mark: | | |

+| Optimization | CPU | RAM | Disk IO |

+|------------------------------------------------------------------------------------------------------------|--------------------|--------------------|--------------------|

+| [Use streaming and replication](#use-streaming-and-replication) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

+| [Disable unneeded plugins or collectors](#disable-unneeded-plugins-or-collectors) | :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |

+| [Reduce data collection frequency](#reduce-collection-frequency) | :heavy_check_mark: | | :heavy_check_mark: |

+| [Change how long Netdata stores metrics](/src/database/README.md#tiers) | | :heavy_check_mark: | :heavy_check_mark: |

+| [Use a different metric storage database](/src/database/README.md) | | :heavy_check_mark: | :heavy_check_mark: |

+| [Disable machine learning](#disable-machine-learning) | :heavy_check_mark: | | |

+| [Use a reverse proxy](#run-netdata-behind-a-proxy) | :heavy_check_mark: | | |

+| [Disable/lower gzip compression for the Agent dashboard](#disablelower-gzip-compression-for-the-dashboard) | :heavy_check_mark: | | |

## Resources required by a default Netdata installation

@@ -72,7 +72,7 @@ The memory footprint of Netdata is mainly influenced by the number of metrics co

To estimate and control memory consumption, you can (either one or a combination of the following actions):

1. [Disable unneeded plugins or collectors](#disable-unneeded-plugins-or-collectors)

-2. [Change how long Netdata stores metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md)

+2. [Change how long Netdata stores metrics](/src/database/README.md#tiers)

3. [Use a different metric storage database](/src/database/README.md).

### Disk footprint and I/O

@@ -90,7 +90,7 @@ To optimize your disk footprint in any aspect described below, you can:

To configure retention, you can:

-1. [Change how long Netdata stores metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md).

+1. [Change how long Netdata stores metrics](/src/database/README.md#tiers).

To control disk I/O:

@@ -127,8 +127,7 @@ See [using a different metric storage database](/src/database/README.md).

## Disable unneeded plugins or collectors

-If you know that you don't need an [entire plugin or a specific

-collector](/src/collectors/README.md#collector-architecture-and-terminology),

+If you know that you don't need an [entire plugin or a specific collector](/src/collectors/README.md),

you can disable any of them. Keep in mind that if a plugin/collector has nothing to do, it simply shuts down and doesn’t consume system resources. You will only improve the Agent's performance by disabling plugins/collectors that are

actively collecting metrics.

@@ -191,8 +190,7 @@ every` for an individual collector is less than the global, the Netdata Agent us

the [collectors configuration reference](/src/collectors/REFERENCE.md) for

details.

-To reduce the frequency of

-an [internal_plugin/collector](/src/collectors/README.md#collector-architecture-and-terminology),

+To reduce the frequency of an [internal plugin/collector](/src/collectors/README.md),

open `netdata.conf` and find the appropriate section. For example, to reduce the frequency of the `apps` plugin, which

collects and visualizes metrics on application resource utilization:

@@ -201,7 +199,7 @@ collects and visualizes metrics on application resource utilization:

update every = 5

```

-To [configure an individual collector](/src/collectors/REFERENCE.md#configure-a-collector),

+To configure an individual collector,

open its specific configuration file with `edit-config` and look for the `update_every` setting. For example, to reduce

the frequency of the `nginx` collector, run `sudo ./edit-config go.d/nginx.conf`:

@@ -213,7 +211,7 @@ update_every: 10

## Lower memory usage for metrics retention

See how

-to [change how long Netdata stores metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md).

+to [change how long Netdata stores metrics](/src/database/README.md#tiers).

## Use a different metric storage database

diff --git a/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md b/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md

deleted file mode 100644

index 8c0c11bc1fac2a..00000000000000

--- a/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md

+++ /dev/null

@@ -1,138 +0,0 @@

-# Change how long Netdata stores metrics

-

-Netdata offers a granular approach to data retention, allowing you to manage storage based on both **time** and **disk

-space**. This provides greater control and helps you optimize storage usage for your specific needs.

-

-**Default Retention Limits**:

-

-| Tier | Resolution | Time Limit | Size Limit (min 256 MB) |

-|:----:|:-------------------:|:----------:|:-----------------------:|

-| 0 | high (per second) | 14d | 1 GiB |

-| 1 | middle (per minute) | 3mo | 1 GiB |

-| 2 | low (per hour) | 2y | 1 GiB |

-

-> **Note**: If a user sets a disk space size less than 256 MB for a tier, Netdata will automatically adjust it to 256 MB.

-

-With these defaults, Netdata requires approximately 4 GiB of storage space (including metadata).

-

-## Retention Settings

-

-> **In a parent-child setup**, these settings manage the shared storage space used by the Netdata parent Agent for storing metrics collected by both the parent and its child nodes.

-

-You can fine-tune retention for each tier by setting a time limit or size limit. Setting a limit to 0 disables it,

-allowing for no time-based deletion for that tier or using all available space, respectively. This enables various

-retention strategies as shown in the table below:

-

-| Setting | Retention Behavior |

-|--------------------------------|-------------------------------------------------------------------------------------------------------------------------------------------|

-| Size Limit = 0, Time Limit > 0 | **Time-based only:** data is stored for a specific duration regardless of disk usage. |

-| Time Limit = 0, Size Limit > 0 | **Space-based only:** data is stored until it reaches a certain amount of disk space, regardless of time. |

-| Time Limit > 0, Size Limit > 0 | **Combined time and space limits:** data is deleted once it reaches either the time limit or the disk space limit, whichever comes first. |

-

-You can change these limits in `netdata.conf`:

-

-```text

-[db]

- mode = dbengine

- storage tiers = 3

-

- # Tier 0, per second data. Set to 0 for no limit.

- dbengine tier 0 retention size = 1GiB

- dbengine tier 0 retention time = 14d

-

- # Tier 1, per minute data. Set to 0 for no limit.

- dbengine tier 1 retention size = 1GiB

- dbengine tier 1 retention time = 3mo

-

- # Tier 2, per hour data. Set to 0 for no limit.

- dbengine tier 2 retention size = 1GiB

- dbengine tier 2 retention time = 2y

-```

-

-## Monitoring Retention Utilization

-

-Netdata provides a visual representation of storage utilization for both time and space limits across all tiers within

-the 'dbengine retention' subsection of the 'Netdata Monitoring' section on the dashboard. This chart shows exactly how

-your storage space (disk space limits) and time (time limits) are used for metric retention.

-

-## Legacy configuration

-

-### v1.99.0 and prior

-

-Netdata prior to v2 supports the following configuration options in `netdata.conf`.

-They have the same defaults as the latest v2, but the unit of each value is given in the option name, not at the value.

-

-```text

-storage tiers = 3

-# Tier 0, per second data. Set to 0 for no limit.

-dbengine tier 0 disk space MB = 1024

-dbengine tier 0 retention days = 14

-# Tier 1, per minute data. Set to 0 for no limit.

-dbengine tier 1 disk space MB = 1024

-dbengine tier 1 retention days = 90

-# Tier 2, per hour data. Set to 0 for no limit.

-dbengine tier 2 disk space MB = 1024

-dbengine tier 2 retention days = 730

-```

-

-### v1.45.6 and prior

-

-Netdata versions prior to v1.46.0 relied on a disk space-based retention.

-

-**Default Retention Limits**:

-

-| Tier | Resolution | Size Limit |

-|:----:|:-------------------:|:----------:|

-| 0 | high (per second) | 256 MB |

-| 1 | middle (per minute) | 128 MB |

-| 2 | low (per hour) | 64 GiB |

-

-You can change these limits in `netdata.conf`:

-

-```text

-[db]

- mode = dbengine

- storage tiers = 3

- # Tier 0, per second data

- dbengine multihost disk space MB = 256

- # Tier 1, per minute data

- dbengine tier 1 multihost disk space MB = 1024

- # Tier 2, per hour data

- dbengine tier 2 multihost disk space MB = 1024

-```

-

-### v1.35.1 and prior

-

-These versions of the Agent do not support tiers. You could change the metric retention for the parent and

-all of its children only with the `dbengine multihost disk space MB` setting. This setting accounts the space allocation

-for the parent node and all of its children.

-

-To configure the database engine, look for the `page cache size MB` and `dbengine multihost disk space MB` settings in

-the `[db]` section of your `netdata.conf`.

-

-```text

-[db]

- dbengine page cache size MB = 32

- dbengine multihost disk space MB = 256

-```

-

-### v1.23.2 and prior

-

-_For Netdata Agents earlier than v1.23.2_, the Agent on the parent node uses one dbengine instance for itself, and

-another instance for every child node it receives metrics from. If you had four streaming nodes, you would have five

-instances in total (`1 parent + 4 child nodes = 5 instances`).

-

-The Agent allocates resources for each instance separately using the `dbengine disk space MB` (**deprecated**) setting.

-If `dbengine disk space MB`(**deprecated**) is set to the default `256`, each instance is given 256 MiB in disk space,

-which means the total disk space required to store all instances is,

-roughly, `256 MiB * 1 parent * 4 child nodes = 1280 MiB`.

-

-#### Backward compatibility

-

-All existing metrics belonging to child nodes are automatically converted to legacy dbengine instances and the localhost

-metrics are transferred to the multihost dbengine instance.

-

-All new child nodes are automatically transferred to the multihost dbengine instance and share its page cache and disk

-space. If you want to migrate a child node from its legacy dbengine instance to the multihost dbengine instance, you

-must delete the instance's directory, which is located in `/var/cache/netdata/MACHINE_GUID/dbengine`, after stopping the

-Agent.

diff --git a/docs/netdata-agent/sizing-netdata-agents/cpu-requirements.md b/docs/netdata-agent/sizing-netdata-agents/cpu-requirements.md

index 76580b1c38e50b..fe019924fd2c42 100644

--- a/docs/netdata-agent/sizing-netdata-agents/cpu-requirements.md

+++ b/docs/netdata-agent/sizing-netdata-agents/cpu-requirements.md

@@ -1,4 +1,4 @@

-# CPU

+# CPU Utilization

Netdata's CPU usage depends on the features you enable. For details, see [resource utilization](/docs/netdata-agent/sizing-netdata-agents/README.md).

@@ -6,15 +6,15 @@ Netdata's CPU usage depends on the features you enable. For details, see [resour

With default settings on Children, CPU utilization typically falls within the range of 1% to 5% of a single core. This includes the combined resource usage of:

-- Three database tiers for data storage.

-- Machine learning for anomaly detection.

-- Per-second data collection.

-- Alerts.

-- Streaming to a [Parent Agent](/docs/observability-centralization-points/metrics-centralization-points/README.md).

+- Three Database Tiers for storage

+- ML for Anomaly Detection

+- Per-second data collection

+- Alerts

+- Streaming to a [Parent Agent](/docs/observability-centralization-points/metrics-centralization-points/README.md)

## Parents

-For Netdata Parents (Metrics Centralization Points), we estimate the following CPU utilization:

+For Parents, we estimate the following CPU utilization:

| Feature | Depends On | Expected Utilization (CPU cores per million) | Key Reasons |

|:--------------------:|:---------------------------------------------------:|:--------------------------------------------:|:------------------------------------------------------------------------:|

@@ -26,18 +26,18 @@ To ensure optimal performance, keep total CPU utilization below 60% when the Par

## Increased CPU consumption on Parent startup

-When a Netdata Parent starts up, it undergoes a series of initialization tasks that can temporarily increase CPU, network, and disk I/O usage:

+When a Parent starts up, it undergoes a series of initialization tasks that can temporarily increase CPU, network, and disk I/O usage:

1. **Backfilling Higher Tiers**: The Parent calculates aggregated metrics for missing data points, ensuring consistency across different time resolutions.

2. **Metadata Synchronization**: The Parent and Children exchange metadata information about collected metrics.

3. **Data Replication**: Missing data is transferred from Children to the Parent.

4. **Normal Streaming**: Regular streaming of new metrics begins.

-5. **Machine Learning Initialization**: Machine learning models are loaded and prepared for anomaly detection.

+5. **Machine Learning Initialization**: ML Models are loaded and prepared for Anomaly Detection.

6. **Health Check Initialization**: The health engine starts monitoring metrics and triggering alerts.

Additional considerations:

- **Compression Optimization**: The compression algorithm learns data patterns to optimize compression ratios.

-- **Database Optimization**: The database engine adjusts page sizes for efficient disk I/O.

+- **Database Optimization**: The Database engine adjusts page sizes for efficient disk I/O.

These initial tasks can temporarily increase resource usage, but the impact typically diminishes as the Parent stabilizes and enters a steady-state operation.

diff --git a/docs/netdata-agent/sizing-netdata-agents/disk-requirements-and-retention.md b/docs/netdata-agent/sizing-netdata-agents/disk-requirements-and-retention.md

index 68da44000cc713..1703ee7dad302e 100644

--- a/docs/netdata-agent/sizing-netdata-agents/disk-requirements-and-retention.md

+++ b/docs/netdata-agent/sizing-netdata-agents/disk-requirements-and-retention.md

@@ -36,7 +36,7 @@ gantt

**Configuring dbengine mode and retention**:

- Enable dbengine mode: The dbengine mode is already the default, so no configuration change is necessary. For reference, the dbengine mode can be configured by setting `[db].mode` to `dbengine` in `netdata.conf`.

-- Adjust retention (optional): see [Change how long Netdata stores metrics](/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md).

+- Adjust retention (optional): see [Change how long Netdata stores metrics](/src/database/README.md#tiers).

## `ram`

diff --git a/docs/netdata-agent/sizing-netdata-agents/ram-requirements.md b/docs/netdata-agent/sizing-netdata-agents/ram-requirements.md

index f45e4516e57d0b..fe50dba2a32b38 100644

--- a/docs/netdata-agent/sizing-netdata-agents/ram-requirements.md

+++ b/docs/netdata-agent/sizing-netdata-agents/ram-requirements.md

@@ -1,54 +1,51 @@

+# RAM Utilization

-# RAM Requirements

+Using the default [Database Tier configuration](/src/database/README.md#tiers), Netdata needs about 16KiB per unique metric collected, independently of the data collection frequency.

-With default configuration about database tiers, Netdata should need about 16KiB per unique metric collected, independently of the data collection frequency.

+## Children

-Netdata supports memory ballooning and automatically sizes and limits the memory used, based on the metrics concurrently being collected.

+By default, Netdata needs 100 MB to 200 MB of RAM, depending on the number of metrics being collected.

-## On Production Systems, Netdata Children

+This number can be lowered by limiting the number of Database Tiers or switching Database modes. For more information, check [the Database section of our documentation](/src/database/README.md).

-With default settings, Netdata should run with 100MB to 200MB of RAM, depending on the number of metrics being collected.

+## Parents

-This number can be lowered by limiting the number of database tier or switching database modes. For more information, check [Disk Requirements and Retention](/docs/netdata-agent/sizing-netdata-agents/disk-requirements-and-retention.md).

+| Description | Scope | RAM Required | Notes |

+|:-------------------------------------|:---------------------:|:------------:|:------------------------------------------------:|

+| metrics with retention | time-series in the db | 1 KiB | Metadata and indexes |

+| metrics currently collected | time-series collected | 20 KiB | 16 KiB for db + 4 KiB for collection structures |

+| metrics with Machine Learning Models | time-series collected | 5 KiB | The trained models per dimension |

+| nodes with retention | nodes in the db | 10 KiB | Metadata and indexes |

+| nodes currently received | nodes collected | 512 KiB | Structures and reception buffers |

+| nodes currently sent | nodes collected | 512 KiB | Structures and dispatch buffers |

-## On Metrics Centralization Points, Netdata Parents

+These numbers vary depending on name length, the number of dimensions per instance and per context, the number and length of the labels added, the number of Machine Learning models maintained and similar parameters. For most use cases, they represent the worst-case scenario, so Netdata may actually need less than that.

-|Description|Scope|RAM Required|Notes|

-|:---|:---:|:---:|:---|

-|metrics with retention|time-series in the db|1 KiB|Metadata and indexes.

-|metrics currently collected|time-series collected|20 KiB|16 KiB for db + 4 KiB for collection structures.

-|metrics with machine learning models|time-series collected|5 KiB|The trained models per dimension.

-|nodes with retention|nodes in the db|10 KiB|Metadata and indexes.

-|nodes currently received|nodes collected|512 KiB|Structures and reception buffers.

-|nodes currently sent|nodes collected|512 KiB|Structures and dispatch buffers.

+Each metric currently being collected needs (1 index + 20 collection + 5 ml) = 26 KiB. When it stops being collected, it needs 1 KiB (index).

-These numbers vary depending on name length, the number of dimensions per instance and per context, the number and length of the labels added, the number of machine learning models maintained and similar parameters. For most use cases, they represent the worst case scenario, so you may find out Netdata actually needs less than that.

-

-Each metric currently being collected needs (1 index + 20 collection + 5 ml) = 26 KiB. When it stops being collected it needs 1 KiB (index).

-

-Each node currently being collected needs (10 index + 512 reception + 512 dispatch) = 1034 KiB. When it stops being collected it needs 10 KiB (index).

+Each node currently being collected needs (10 index + 512 reception + 512 dispatch) = 1034 KiB. When it stops being collected, it needs 10 KiB (index).

### Example

-A Netdata Parents cluster (2 nodes) has 1 million currently collected metrics from 500 nodes, and 10 million archived metrics from 5000 nodes:

+A Netdata cluster (two Parents) has one million currently collected metrics from 500 nodes, and 10 million archived metrics from 5000 nodes:

-|Description|Entries|RAM per Entry|Total RAM|

-|:---|:---:|:---:|---:|

-|metrics with retention|11 million|1 KiB|10742 MiB|

-|metrics currently collected|1 million|20 KiB|19531 MiB|

-|metrics with machine learning models|1 million|5 KiB|4883 MiB|

-|nodes with retention|5500|10 KiB|52 MiB|

-|nodes currently received|500|512 KiB|256 MiB|

-|nodes currently sent|500|512 KiB|256 MiB|

-|**Memory required per node**|||**35.7 GiB**|

+| Description | Entries | RAM per Entry | Total RAM |

+|:-------------------------------------|:----------:|:-------------:|-------------:|

+| metrics with retention | 11 million | 1 KiB | 10742 MiB |

+| metrics currently collected | 1 million | 20 KiB | 19531 MiB |

+| metrics with Machine Learning Models | 1 million | 5 KiB | 4883 MiB |

+| nodes with retention | 5500 | 10 KiB | 54 MiB |

+| nodes currently received | 500 | 512 KiB | 250 MiB |

+| nodes currently sent | 500 | 512 KiB | 250 MiB |

+| **Memory required per node** | | | **34.9 GiB** |
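
The example above can be reproduced by multiplying out the per-entry figures. This is a minimal sketch: the helper and dictionary names are illustrative and not part of Netdata, the per-entry costs are copied from the Parents table, and exact arithmetic can differ slightly from rounded table figures.

```python
# Per-entry RAM cost in KiB, copied from the Parents table.
KIB_PER_ENTRY = {
    "metrics with retention": 1,
    "metrics currently collected": 20,
    "metrics with ML models": 5,
    "nodes with retention": 10,
    "nodes currently received": 512,
    "nodes currently sent": 512,
}


def estimate_parent_ram_mib(collected_metrics, archived_metrics,
                            collected_nodes, archived_nodes):
    """Hypothetical helper: estimated MiB per table row for one Parent."""
    entries = {
        # Retention (index) cost applies to collected and archived entries.
        "metrics with retention": collected_metrics + archived_metrics,
        "metrics currently collected": collected_metrics,
        "metrics with ML models": collected_metrics,
        "nodes with retention": collected_nodes + archived_nodes,
        "nodes currently received": collected_nodes,
        "nodes currently sent": collected_nodes,
    }
    return {name: count * KIB_PER_ENTRY[name] / 1024
            for name, count in entries.items()}


rows = estimate_parent_ram_mib(1_000_000, 10_000_000, 500, 5_000)
for name, mib in rows.items():
    print(f"{name:30s} {mib:10.0f} MiB")

total_mib = sum(rows.values())
print(f"{'total per Parent':30s} {total_mib / 1024:10.1f} GiB")
```

Changing the four arguments gives a quick first estimate for other cluster sizes, under the same worst-case assumptions as the table.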

-On highly volatile environments (like Kubernetes clusters), the database retention can significantly affect memory usage. Usually reducing retention on higher database tiers helps reducing memory usage.

+In highly volatile environments (like Kubernetes clusters), Database retention can significantly affect memory usage. Usually, reducing retention on higher Database Tiers helps to reduce memory usage.

## Database Size

-Netdata supports memory ballooning to automatically adjust its database memory size based on the number of time-series concurrently being collected.

+Netdata supports memory ballooning to automatically adjust its Database memory size based on the number of time-series concurrently being collected.

-The general formula, with the default configuration of database tiers, is:

+The general formula, with the default configuration of Database Tiers, is:

```text

memory = UNIQUE_METRICS x 16KiB + CONFIGURED_CACHES

@@ -56,7 +53,7 @@ memory = UNIQUE_METRICS x 16KiB + CONFIGURED_CACHES

The default `CONFIGURED_CACHES` is 32MiB.

-For one million concurrently collected time-series (independently of their data collection frequency), the memory required is:

+For **one million concurrently collected time-series** (independently of their data collection frequency), **the required memory is about 16 GiB**. In detail:

```text

UNIQUE_METRICS = 1000000

@@ -68,19 +65,11 @@ CONFIGURED_CACHES = 32MiB

about 16 GiB

```
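
The same formula can be evaluated for other metric counts. The sketch below is illustrative only: the function name is hypothetical, and the 16 KiB per metric and 32 MiB cache default are the values quoted in this section.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def dbengine_memory_bytes(unique_metrics, configured_caches=32 * MIB):
    """memory = UNIQUE_METRICS x 16KiB + CONFIGURED_CACHES (hypothetical helper)."""
    return unique_metrics * 16 * KIB + configured_caches


memory = dbengine_memory_bytes(1_000_000)
print(f"{memory / GIB:.2f} GiB")  # prints "15.29 GiB"
```

In strict binary units the result is a little over 15 GiB, which the text rounds to "about 16 GiB".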

-There are two cache sizes that can be configured in `netdata.conf`:

-

-1. `[db].dbengine page cache size`: this is the main cache that keeps metrics data into memory. When data is not found in it, the extent cache is consulted, and if not found in that too, they are loaded from the disk.

-2. `[db].dbengine extent cache size`: this is the compressed extent cache. It keeps in memory compressed data blocks, as they appear on disk, to avoid reading them again. Data found in the extent cache but not in the main cache have to be uncompressed to be queried.

-

-Both of them are dynamically adjusted to use some of the total memory computed above. The configuration in `netdata.conf` allows providing additional memory to them, increasing their caching efficiency.

-

-

-## I have a Netdata Parent that is also a systemd-journal logs centralization point, what should I know?

+## Parents that also act as `systemd-journal` Logs centralization points

Logs usually require significantly more disk space and I/O bandwidth than metrics. For optimal performance, we recommend to store metrics and logs on separate, independent disks.

-Netdata uses direct-I/O for its database, so that it does not pollute the system caches with its own data. We want Netdata to be a nice citizen when it runs side-by-side with production applications, so this was required to guarantee that Netdata does not affect the operation of databases or other sensitive applications running on the same servers.

+Netdata uses direct I/O for its Database so that it does not pollute the system caches with its own data.

To optimize disk I/O, Netdata maintains its own private caches. The default settings of these caches are automatically adjusted to the minimum required size for acceptable metrics query performance.

diff --git a/src/collectors/COLLECTORS.md b/src/collectors/COLLECTORS.md

index 0a9ad30893a7cf..fc16c87976bddf 100644

--- a/src/collectors/COLLECTORS.md

+++ b/src/collectors/COLLECTORS.md

@@ -3,7 +3,7 @@

Netdata uses collectors to help you gather metrics from your favorite applications and services and view them in

real-time, interactive charts. The following list includes all the integrations where Netdata can gather metrics from.

-Learn more about [how collectors work](/src/collectors/README.md), and then learn how to [enable or configure](/src/collectors/REFERENCE.md#enable-and-disable-a-specific-collection-module) a specific collector.

+Learn more about [how collectors work](/src/collectors/README.md), and then learn how to [enable or configure](/src/collectors/REFERENCE.md#enable-or-disable-collectors-and-plugins) a specific collector.

> **Note**

>

diff --git a/src/collectors/README.md b/src/collectors/README.md

index e7b9c155273ac7..35424eff7cf2a9 100644

--- a/src/collectors/README.md

+++ b/src/collectors/README.md

@@ -11,12 +11,11 @@ If you don't see charts for your application, check our collectors' [configurati

Netdata's collectors are specialized data collection plugins that gather metrics from various sources. They are divided into two main categories:

-| Type | Description | Key Features |

-|----------|-----------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| Internal | Native collectors that gather system-level metrics | • Written in `C` for optimal performance

• Run as threads within Netdata daemon

• Zero external dependencies

• Minimal system overhead |

+| Type | Description | Key Features |

+|----------|-----------------------------------------------------------------------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| Internal | Native collectors that gather system-level metrics | • Written in `C` for optimal performance

• Run as threads within Netdata daemon

• Zero external dependencies

• Minimal system overhead |

| External | Modular collectors that gather metrics from applications and services | • Support multiple programming languages

• Run as independent processes

• Communicate via pipes with Netdata

• Managed by [plugins.d](/src/plugins.d/README.md)

• Examples: MySQL, Nginx, Redis collectors |

-

## Collector Privileges

Netdata uses various plugins and helper binaries that require elevated privileges to collect system metrics.

diff --git a/src/collectors/REFERENCE.md b/src/collectors/REFERENCE.md

index af745013c5b574..89f1b9d8f2c7ba 100644

--- a/src/collectors/REFERENCE.md

+++ b/src/collectors/REFERENCE.md

@@ -1,138 +1,103 @@

-# Collectors configuration reference

-

-The list of supported collectors can be found in [the documentation](/src/collectors/COLLECTORS.md),

-and on [our website](https://www.netdata.cloud/integrations). The documentation of each collector provides all the

-necessary configuration options and prerequisites for that collector. In most cases, either the charts are automatically generated

-without any configuration, or you just fulfil those prerequisites and [configure the collector](#configure-a-collector).

-

-If the application you are interested in monitoring is not listed in our integrations, the collectors list includes

-the available options to

-[add your application to Netdata](https://github.com/netdata/netdata/edit/master/src/collectors/COLLECTORS.md#add-your-application-to-netdata).

+# Collector configuration

-If we do support your collector but the charts described in the documentation don't appear on your dashboard, the reason will

-be one of the following:

+Find available collectors in the [Collecting Metrics](/src/collectors/README.md) guide and on our [Integrations page](https://www.netdata.cloud/integrations).

-- The entire data collection plugin is disabled by default. Read how to [enable and disable plugins](#enable-and-disable-plugins)

+Each collector's documentation includes detailed setup instructions and configuration options. Most collectors either work automatically without configuration or require minimal setup to begin collecting data.

-- The data collection plugin is enabled, but a specific data collection module is disabled. Read how to

- [enable and disable a specific collection module](#enable-and-disable-a-specific-collection-module).

+> **Info**

+>

+> Enable and configure Go collectors directly through the UI using the [Dynamic Configuration Manager](/docs/netdata-agent/configuration/dynamic-configuration.md).

-- Autodetection failed. Read how to [configure](#configure-a-collector) and [troubleshoot](#troubleshoot-a-collector) a collector.

+## Enable or disable Collectors and Plugins

-## Enable and disable plugins

+Most collectors and plugins are enabled by default. You can selectively disable them to optimize performance.

-You can enable or disable individual plugins by opening `netdata.conf` and scrolling down to the `[plugins]` section.

-This section features a list of Netdata's plugins, with a boolean setting to enable or disable them. The exception is

-`statsd.plugin`, which has its own `[statsd]` section. Your `[plugins]` section should look similar to this:

+**To disable plugins**:

-```text

-[plugins]

- # timex = yes

- # idlejitter = yes

- # netdata monitoring = yes

- # tc = yes

- # diskspace = yes

- # proc = yes

- # cgroups = yes

- # enable running new plugins = yes

- # check for new plugins every = 60

- # slabinfo = no

- # python.d = yes

- # perf = yes

- # ioping = yes

- # fping = yes

- # nfacct = yes

- # go.d = yes

- # apps = yes

- # ebpf = yes

- # charts.d = yes

- # statsd = yes

-```

+1. Open `netdata.conf` using [`edit-config`](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config).

+2. Navigate to the `[plugins]` section.

+3. Uncomment the relevant line and set it to `no`:

-By default, most plugins are enabled, so you don't need to enable them explicitly to use their collectors. To enable or

-disable any specific plugin, remove the comment (`#`) and change the boolean setting to `yes` or `no`.

+ ```text

+ [plugins]

+ proc = yes

+ python.d = no

+ ```

-## Enable and disable a specific collection module

+**To disable specific collectors**:

-You can enable/disable of the collection modules supported by `go.d`, `python.d` or `charts.d` individually, using the

-configuration file of that orchestrator. For example, you can change the behavior of the Go orchestrator, or any of its

-collectors, by editing `go.d.conf`.

+1. Open the corresponding plugin configuration file:

+ ```bash

+ sudo ./edit-config go.d.conf

+ ```

+2. Uncomment the collector's line and set it to `no`:

+ ```yaml

+ modules:

+ xyz_collector: no

+ ```

+3. [Restart](/docs/netdata-agent/start-stop-restart.md) the Agent after making changes.

-Use `edit-config` from your [Netdata config directory](/docs/netdata-agent/configuration/README.md#the-netdata-config-directory)

-to open the orchestrator primary configuration file:

+## Adjust data collection frequency

-```bash

-cd /etc/netdata

-sudo ./edit-config go.d.conf

-```

+You can modify how often collectors gather metrics to optimize CPU usage. This can be done globally or for specific collectors.

-Within this file, you can either disable the orchestrator entirely (`enabled: yes`), or find a specific collector and

-enable/disable it with `yes` and `no` settings. Uncomment any line you change to ensure the Netdata daemon reads it on

-start.

+### Global

-After you make your changes, restart the Agent with the [appropriate method](/docs/netdata-agent/start-stop-restart.md) for your system.

+1. Open `netdata.conf` using [`edit-config`](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config).

+2. Set the `update every` value (default is `1`, meaning one-second intervals):

+ ```text

+ [global]

+ update every = 2

+ ```

-## Configure a collector

+3. [Restart](/docs/netdata-agent/start-stop-restart.md) the Agent after making changes.

-Most collector modules come with **auto-detection**, configured to work out-of-the-box on popular operating systems with

-the default settings.

+### Specific Plugin or Collector

-However, there are cases that auto-detection fails. Usually, the reason is that the applications to be monitored do not

-allow Netdata to connect. In most of the cases, allowing the user `netdata` from `localhost` to connect and collect

-metrics, will automatically enable data collection for the application in question (it will require a Netdata restart).

+**For Plugins**:

-When Netdata starts up, each collector searches for exposed metrics on the default endpoint established by that service

-or application's standard installation procedure. For example,

-the [Nginx collector](/src/go/plugin/go.d/modules/nginx/README.md) searches at

-`http://127.0.0.1/stub_status` for exposed metrics in the correct format. If an Nginx web server is running and exposes

-metrics on that endpoint, the collector begins gathering them.

+1. Open `netdata.conf` using [`edit-config`](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config).

+2. Locate the plugin's section and set its frequency:

-However, not every node or infrastructure uses standard ports, paths, files, or naming conventions. You may need to

-enable or configure a collector to gather all available metrics from your systems, containers, or applications.

+ ```text

+ [plugin:apps]

+ update every = 5

+ ```

+3. [Restart](/docs/netdata-agent/start-stop-restart.md) the Agent after making changes.

-First, [find the collector](/src/collectors/COLLECTORS.md) you want to edit

-and open its documentation. Some software has collectors written in multiple languages. In these cases, you should always

-pick the collector written in Go.

+**For Collectors**:

-Use `edit-config` from your

-[Netdata config directory](/docs/netdata-agent/configuration/README.md#the-netdata-config-directory)

-to open a collector's configuration file. For example, edit the Nginx collector with the following:

-

-```bash

-./edit-config go.d/nginx.conf

-```

-

-Each configuration file describes every available option and offers examples to help you tweak Netdata's settings

-according to your needs. In addition, every collector's documentation shows the exact command you need to run to

-configure that collector. Uncomment any line you change to ensure the collector's orchestrator or the Netdata daemon

-read it on start.

-

-After you make your changes, restart the Agent with the [appropriate method](/docs/netdata-agent/start-stop-restart.md) for your system.

+Each collector has its own configuration format and options. Refer to the collector's documentation for specific instructions on adjusting its data collection frequency.

## Troubleshoot a collector

-First, navigate to your plugins directory, which is usually at `/usr/libexec/netdata/plugins.d/`. If that's not the case

-on your system, open `netdata.conf` and look for the setting `plugins directory`. Once you're in the plugins directory,

-switch to the `netdata` user.

-

-```bash

-cd /usr/libexec/netdata/plugins.d/

-sudo su -s /bin/bash netdata

-```

-

-The next step is based on the collector's orchestrator.

-

-```bash

-# Go orchestrator (go.d.plugin)

-./go.d.plugin -d -m

-

-# Python orchestrator (python.d.plugin)

-./python.d.plugin debug trace

-

-# Bash orchestrator (bash.d.plugin)

-./charts.d.plugin debug 1

-```

-

-The output from the relevant command will provide valuable troubleshooting information. If you can't figure out how to

-enable the collector using the details from this output, feel free to [join our Discord server](https://discord.com/invite/2mEmfW735j),

-to get help from our experts.

+1. Navigate to the plugins directory, usually `/usr/libexec/netdata/plugins.d/`. If it is not there, check the `plugins directory` setting in `netdata.conf`.

+ ```bash

+ cd /usr/libexec/netdata/plugins.d/

+ ```

+2. Switch to the `netdata` user.

+ ```bash

+ sudo su -s /bin/bash netdata

+ ```

+3. Run the plugin in debug mode, using the command that matches the collector's type:

+

+ ```bash

+ # Go collectors

+ ./go.d.plugin -d -m

+

+ # Python collectors

+ ./python.d.plugin debug trace

+

+ # Bash collectors

+ ./charts.d.plugin debug 1

+ ```

+4. Analyze the output.

+

+The debug output can reveal:

+

+- Configuration issues

+- Connection problems

+- Permission errors

+- Other potential failures

+

+Need help interpreting the results? Join our [Discord community](https://discord.com/invite/2mEmfW735j) for expert assistance.

diff --git a/src/collectors/statsd.plugin/README.md b/src/collectors/statsd.plugin/README.md

index b93d6c7987a9aa..7af7c14d18e887 100644

--- a/src/collectors/statsd.plugin/README.md

+++ b/src/collectors/statsd.plugin/README.md

@@ -337,7 +337,7 @@ Using the above configuration `myapp` should get its own section on the dashboar

- `gaps when not collected = yes|no`, enables or disables gaps on the charts of the application in case that no metrics are collected.

- `memory mode` sets the memory mode for all charts of the application. The default is the global default for Netdata (not the global default for StatsD private charts). We suggest not to use this (we have commented it out in the example) and let your app use the global default for Netdata, which is our dbengine.

-- `history` sets the size of the round-robin database for this application. The default is the global default for Netdata (not the global default for StatsD private charts). This is only relevant if you use `memory mode = save`. Read more on our [metrics storage(]/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md) doc.

+- `history` sets the size of the round-robin database for this application. The default is the global default for Netdata (not the global default for StatsD private charts). This is only relevant if you use `memory mode = save`. Read more in our documentation for the Agent's [Database](/src/database/README.md).

`[dictionary]` defines name-value associations. These are used to renaming metrics, when added to synthetic charts. Metric names are also defined at each `dimension` line. However, using the dictionary dimension names can be declared globally, for each app and is the only way to rename dimensions when using patterns. Of course the dictionary can be empty or missing.

diff --git a/src/database/README.md b/src/database/README.md

index 771a2bbf02631e..803ff5a9150e7c 100644

--- a/src/database/README.md

+++ b/src/database/README.md

@@ -1,153 +1,141 @@

# Database

-Netdata is fully capable of long-term metrics storage, at per-second granularity, via its default database engine

-(`dbengine`). But to remain as flexible as possible, Netdata supports several storage options:

+Netdata stores detailed metrics at one-second granularity using its Database engine.

-1. `dbengine`, (the default) data are in database files. The [Database Engine](/src/database/engine/README.md) works like a

- traditional database. There is some amount of RAM dedicated to data caching and indexing and the rest of the data

- reside compressed on disk. The number of history entries is not fixed in this case, but depends on the configured

- disk space and the effective compression ratio of the data stored. This is the **only mode** that supports changing

- the data collection update frequency (`update every`) **without losing** the previously stored metrics. For more

- details see [here](/src/database/engine/README.md).

+## Modes

-2. `ram`, data are purely in memory. Data are never saved on disk. This mode uses `mmap()` and supports [KSM](#ksm).

+| Mode | Description |

+|------------|-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| `dbengine` | Stores data in a database with RAM for caching and indexing, while keeping compressed data on disk. Storage capacity depends on available disk space and data compression ratio. For details, see [Database Engine](/src/database/engine/README.md). |

+| `ram` | Stores data entirely in memory without disk persistence. |

+| `none` | Operates without storage (metrics can only be streamed to another Agent). |

-3. `alloc`, like `ram` but it uses `calloc()` and does not support [KSM](#ksm). This mode is the fallback for all others

- except `none`.

+The default `dbengine` mode is optimized for:

-4. `none`, without a database (collected metrics can only be streamed to another Netdata).

+- Long-term data retention

+- Parent nodes in [Centralization](/docs/observability-centralization-points/README.md) setups

-## Which database mode to use

+For resource-constrained environments, particularly Child nodes in Centralization setups, consider using `ram`.

-The default mode `[db].mode = dbengine` has been designed to scale for longer retentions and is the only mode suitable

-for parent Agents in the _Parent - Child_ setups

-

-The other available database modes are designed to minimize resource utilization and should only be considered on

-[Parent - Child](/docs/observability-centralization-points/README.md) setups at the children side and only when the

-resource constraints are very strict.

-

-So,

-

-- On a single node setup, use `[db].mode = dbengine`.

-- On a [Parent - Child](/docs/observability-centralization-points/README.md) setup, use `[db].mode = dbengine` on the

- parent to increase retention, and a more resource-efficient mode like, `dbengine` with light retention settings, `ram`, or `none` for the children to minimize resource utilization.

-

-## Choose your database mode

-

-You can select the database mode by editing `netdata.conf` and setting:

+Use [`edit-config`](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) to open `netdata.conf` and set your preferred mode:

```text

[db]

- # dbengine (default), ram (the default if dbengine not available), alloc, none

+ # dbengine, ram, none

mode = dbengine

```

-## Netdata Longer Metrics Retention

+## Tiers

-Metrics retention is controlled only by the disk space allocated to storing metrics. But it also affects the memory and

-CPU required by the Agent to query longer timeframes.

+Netdata offers a granular approach to data retention, allowing you to manage storage based on both **time** and **disk space**. This provides greater control and helps you optimize storage usage for your specific needs.

-Since Netdata Agents usually run on the edge, on production systems, Netdata Agent **parents** should be considered.

-When having a [**parent - child**](/docs/observability-centralization-points/README.md) setup, the child (the

-Netdata Agent running on a production system) delegates all of its functions, including longer metrics retention and

-querying, to the parent node that can dedicate more resources to this task. A single Netdata Agent parent can centralize

-multiple children Netdata Agents (dozens, hundreds, or even thousands depending on its available resources).

+**Default Retention Limits**:

-## Running Netdata on embedded devices

+| Tier | Resolution | Time Limit | Size Limit (min 256 MB) |

+|:----:|:-------------------:|:----------:|:-----------------------:|

+| 0 | high (per second) | 14d | 1 GiB |

+| 1 | middle (per minute) | 3mo | 1 GiB |

+| 2 | low (per hour) | 2y | 1 GiB |

-Embedded devices typically have very limited RAM resources available.

+> **Note**

+>

+> If you set a Tier's size limit below 256 MB, Netdata automatically raises it to 256 MB.

-There are two settings for you to configure:

+With these defaults, Netdata requires approximately 4 GiB of storage space (including metadata).

-1. `[db].update every`, which controls the data collection frequency

-2. `[db].retention`, which controls the size of the database in memory (except for `[db].mode = dbengine`)

+### Retention Settings

-By default `[db].update every = 1` and `[db].retention = 3600`. This gives you an hour of data with per second updates.

+> **Important**

+>

+> In a Parent-Child setup, these settings manage the entire storage space used by the Parent for storing metrics collected both by itself and its Children.

-If you set `[db].update every = 2` and `[db].retention = 1800`, you will still have an hour of data, but collected once

-every 2 seconds. This will **cut in half** both CPU and RAM resources consumed by Netdata. Of course experiment a bit to find the right setting.

-On very weak devices you might have to use `[db].update every = 5` and `[db].retention = 720` (still 1 hour of data, but

-1/5 of the CPU and RAM resources).

+You can fine-tune retention for each tier by setting a time limit or size limit. Setting a limit to 0 disables it. This enables the following retention strategies:

-You can also disable [data collection plugins](/src/collectors/README.md) that you don't need. Disabling such plugins will also

-free both CPU and RAM resources.

+| Setting | Retention Behavior |

+|--------------------------------|------------------------------------------------------------------------------------------------------------------------------------------|

+| Size Limit = 0, Time Limit > 0 | **Time based:** data is stored for a specific duration regardless of disk usage |

+| Time Limit = 0, Size Limit > 0 | **Space based:** data is stored with a disk space limit, regardless of time |

+| Time Limit > 0, Size Limit > 0 | **Combined time and space limits:** data is deleted once it reaches either the time limit or the disk space limit, whichever comes first |
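
The three strategies in the table can be expressed as a small decision function. This is a sketch of the described behavior, not Netdata's implementation: both function names are hypothetical, and treating "both limits set to 0" as unlimited retention is an assumption based on the rule that a limit of 0 is disabled.

```python
def retention_strategy(time_limit, size_limit):
    """Name the strategy that a (time limit, size limit) pair selects."""
    if time_limit > 0 and size_limit > 0:
        return "combined"
    if time_limit > 0:
        return "time based"
    if size_limit > 0:
        return "space based"
    return "no limit"  # assumption: both limits disabled


def should_delete(age_days, used_gib, time_limit_days, size_limit_gib):
    """Data is removed once any enabled limit is exceeded."""
    over_time = time_limit_days > 0 and age_days > time_limit_days
    over_size = size_limit_gib > 0 and used_gib > size_limit_gib
    return over_time or over_size


# Tier 0 defaults from the table above: 14 days and 1 GiB.
print(retention_strategy(14, 1))
# 10-day-old data in a tier that already uses 1.2 GiB exceeds the size limit.
print(should_delete(age_days=10, used_gib=1.2,
                    time_limit_days=14, size_limit_gib=1))
```

With both limits enabled, whichever threshold is crossed first triggers deletion, which matches the "whichever comes first" wording in the table.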

-## Memory optimizations

+You can change these limits using [`edit-config`](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) to open `netdata.conf`:

-### KSM

-

-KSM performs memory deduplication by scanning through main memory for physical pages that have identical content, and

-identifies the virtual pages that are mapped to those physical pages. It leaves one page unchanged, and re-maps each

-duplicate page to point to the same physical page. Netdata offers all of its in-memory database to kernel for

-deduplication.

-

-In the past, KSM has been criticized for consuming a lot of CPU resources. This is true when KSM is used for

-deduplicating certain applications, but it is not true for Netdata. Agent's memory is written very infrequently

-(if you have 24 hours of metrics in Netdata, each byte at the in-memory database will be updated just once per day). KSM

-is a solution that will provide 60+% memory savings to Netdata.

+```text

+[db]

+ mode = dbengine

+ storage tiers = 3

-### Enable KSM in kernel

+ # Tier 0, per second data. Set to 0 for no limit.

+ dbengine tier 0 retention size = 1GiB

+ dbengine tier 0 retention time = 14d

-To enable KSM in kernel, you need to run a kernel compiled with the following:

+ # Tier 1, per minute data. Set to 0 for no limit.

+ dbengine tier 1 retention size = 1GiB

+ dbengine tier 1 retention time = 3mo

-```sh

-CONFIG_KSM=y

+ # Tier 2, per hour data. Set to 0 for no limit.

+ dbengine tier 2 retention size = 1GiB

+ dbengine tier 2 retention time = 2y

```
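
Because these settings cover everything a Parent stores, it can help to add up the per-Tier size limits before deploying a configuration. The sketch below is an illustration under stated assumptions: `total_size_limit` is a hypothetical helper, it only reads the `retention size` options shown above, and the set of accepted unit suffixes is assumed (only `GiB` appears in the example). It does not account for metadata.

```python
import re
from typing import Optional

# Binary unit suffixes; only "GiB" appears in the example above, the rest are assumed.
UNIT_BYTES = {"MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}
SIZE_OPTION = re.compile(r"^\s*dbengine tier \d+ retention size\s*=\s*(\S+)\s*$")
SIZE_VALUE = re.compile(r"^(\d+)(MiB|GiB|TiB)$")


def total_size_limit(conf_text: str) -> Optional[int]:
    """Sum the per-tier size limits in bytes, or None if any tier is unlimited."""
    total = 0
    for line in conf_text.splitlines():
        option = SIZE_OPTION.match(line)
        if option is None:
            continue  # comments, section headers, and other options
        value = option.group(1)
        if value == "0":
            return None  # 0 disables the size limit for that tier
        parsed = SIZE_VALUE.match(value)
        if parsed is None:
            raise ValueError(f"unrecognized size value: {value!r}")
        total += int(parsed.group(1)) * UNIT_BYTES[parsed.group(2)]
    return total


example = """
[db]
    mode = dbengine
    storage tiers = 3
    dbengine tier 0 retention size = 1GiB
    dbengine tier 1 retention size = 1GiB
    dbengine tier 2 retention size = 1GiB
"""
print(total_size_limit(example) / 1024**3)  # 3.0
```

Returning `None` for an unlimited Tier keeps "no upper bound" distinct from a computed total of zero.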

-When KSM is enabled at the kernel, it is just available for the user to enable it.

-

-If you build a kernel with `CONFIG_KSM=y`, you will just get a few files in `/sys/kernel/mm/ksm`. Nothing else

-happens. There is no performance penalty (apart from the memory this code occupies into the kernel).

+### Monitoring Retention Utilization

-The files that `CONFIG_KSM=y` offers include:

+Netdata charts storage utilization against both the time and space limits of every Tier under "Netdata" -> "dbengine retention" on the dashboard. The chart shows how much of each configured disk space limit and time limit is currently used for metric retention.

-- `/sys/kernel/mm/ksm/run` by default `0`. You have to set this to `1` for the kernel to spawn `ksmd`.

-- `/sys/kernel/mm/ksm/sleep_millisecs`, by default `20`. The frequency ksmd should evaluate memory for deduplication.

-- `/sys/kernel/mm/ksm/pages_to_scan`, by default `100`. The amount of pages ksmd will evaluate on each run.

+### Legacy Configuration

-So, by default `ksmd` is just disabled. It will not harm performance and the user/admin can control the CPU resources

-they are willing to have used by `ksmd`.

+v1.99.0 and prior

-### Run `ksmd` kernel daemon

+Netdata versions prior to v2 support the following configuration options in `netdata.conf`.

+They have the same defaults as v2, but the unit of each value is part of the option name rather than the value itself.

-To activate / run `ksmd,` you need to run the following:

-

-```sh

-echo 1 >/sys/kernel/mm/ksm/run

-echo 1000 >/sys/kernel/mm/ksm/sleep_millisecs

+```text

+storage tiers = 3

+# Tier 0, per second data. Set to 0 for no limit.

+dbengine tier 0 disk space MB = 1024

+dbengine tier 0 retention days = 14

+# Tier 1, per minute data. Set to 0 for no limit.

+dbengine tier 1 disk space MB = 1024

+dbengine tier 1 retention days = 90

+# Tier 2, per hour data. Set to 0 for no limit.

+dbengine tier 2 disk space MB = 1024

+dbengine tier 2 retention days = 730

```
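
Since the legacy options differ from the v2 ones only in where the unit lives, the mapping can be sketched mechanically. This is a hedged illustration, not a Netdata tool: `to_v2_line` is a hypothetical helper, it assumes the legacy "MB" means MiB (the examples pair `1024` MB with `1GiB`), and it assumes v2 accepts a `MiB` suffix.

```python
import re

LEGACY_OPTION = re.compile(
    r"^(\s*dbengine tier \d+) (disk space MB|retention days)\s*=\s*(\d+)\s*$"
)


def to_v2_line(line: str) -> str:
    """Rewrite one legacy option line in the v2 style; other lines pass through."""
    match = LEGACY_OPTION.match(line)
    if match is None:
        return line
    prefix, kind, value = match.groups()
    if kind == "disk space MB":
        name, unit = "retention size", "MiB"  # assumes legacy "MB" means MiB
    else:
        name, unit = "retention time", "d"
    # 0 means "no limit" and carries no unit.
    suffix = "" if int(value) == 0 else unit
    return f"{prefix} {name} = {value}{suffix}"


legacy = [
    "dbengine tier 0 disk space MB = 1024",
    "dbengine tier 0 retention days = 14",
]
for line in legacy:
    print(to_v2_line(line))
```

Lines that are not per-Tier retention options, such as `storage tiers = 3` or comments, are returned unchanged.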

-With these settings, ksmd does not even appear in the running process list (it will run once per second and evaluate 100

-pages for de-duplication).

-

-Put the above lines in your boot sequence (`/etc/rc.local` or equivalent) to have `ksmd` run at boot.

-

-### Monitoring Kernel Memory de-duplication performance

-

-Netdata will create charts for kernel memory de-duplication performance, the **deduper (ksm)** charts can be seen under the **Memory** section in the Netdata UI.

+v1.45.6 and prior

-The summary gives you a quick idea of how much savings (in terms of bytes and in terms of percentage) KSM is able to achieve.

+Netdata versions prior to v1.46.0 relied on disk space-based retention.

-

+**Default Retention Limits**:

-#### KSM pages merge performance

+| Tier | Resolution | Size Limit |

+|:----:|:-------------------:|:----------:|

+| 0 | high (per second) | 256 MB |

+| 1 | middle (per minute) | 128 MB |

+| 2 | low (per hour) | 64 MB |

-This chart indicates the performance of page merging. **Shared** indicates used shared pages, **Unshared** indicates memory no longer shared (pages are unique but repeatedly checked for merging), **Sharing** indicates memory currently shared(how many more sites are sharing the pages, i.e. how much saved) and **Volatile** indicates volatile pages (changing too fast to be placed in a tree).

+You can change these limits in `netdata.conf`:

-A high ratio of Sharing to Shared indicates good sharing, but a high ratio of Unshared to Sharing indicates wasted effort.

-

-

-

-#### KSM savings

+```text

+[db]

+ mode = dbengine

+ storage tiers = 3

+ # Tier 0, per second data

+ dbengine multihost disk space MB = 256

+ # Tier 1, per minute data

+ dbengine tier 1 multihost disk space MB = 1024

+ # Tier 2, per hour data

+ dbengine tier 2 multihost disk space MB = 1024

+```

-This chart shows the amount of memory saved by KSM. **Savings** indicates saved memory. **Offered** indicates memory marked as mergeable.

+