diff --git a/README.md b/README.md

index 9fe09572492b9e..d4e8208e653586 100644

--- a/README.md

+++ b/README.md

@@ -233,7 +233,7 @@ By default, Netdata will send e-mail notifications if there is a configured MTA

### 4. **Configure Netdata Parents** :family:

-Optionally, configure one or more Netdata Parents. A Netdata Parent is a Netdata Agent that has been configured to accept [streaming connections](https://learn.netdata.cloud/docs/streaming/streaming-configuration-reference) from other Netdata agents.

+Optionally, configure one or more Netdata Parents. A Netdata Parent is a Netdata Agent that has been configured to accept [streaming connections](https://learn.netdata.cloud/docs/streaming/streaming-configuration-reference) from other Netdata Agents.

Netdata Parents provide:

@@ -264,8 +264,8 @@ If you connect your Netdata Parents, there is no need to connect your Netdata Ag

When your Netdata nodes are connected to Netdata Cloud, you can (on top of the above):

-- Access your Netdata agents from anywhere

-- Access sensitive Netdata agent features (like "Netdata Functions": processes, systemd-journal)

+- Access your Netdata Agents from anywhere

+- Access sensitive Netdata Agent features (like "Netdata Functions": processes, systemd-journal)

- Organize your infra in spaces and Rooms

- Create, manage, and share **custom dashboards**

- Invite your team and assign roles to them (Role-Based Access Control)

@@ -573,7 +573,7 @@ Here are some suggestions on how to manage and navigate this wealth of informati

If you're looking for specific information, you can use the search feature to find the relevant metrics or charts. This can help you avoid scrolling through all the data.

3. **Customize your Dashboards**

- Netdata allows you to create custom dashboards, which can help you focus on the metrics that are most important to you. Sign-in to Netdata and there you can have your custom dashboards. (coming soon to the agent dashboard too)

+ Netdata allows you to create custom dashboards, which can help you focus on the metrics that are most important to you. Sign in to Netdata Cloud to use your custom dashboards (coming soon to the Agent dashboard too).

4. **Leverage Netdata's Anomaly Detection**

Netdata uses machine learning to detect anomalies in your metrics. This can help you identify potential issues before they become major problems. We have added an `AR` button above the dashboard table of contents to reveal the anomaly rate per section so that you can spot what could need your attention.

@@ -633,7 +633,7 @@ We are aware that for privacy or regulatory reasons, not all environments can al

These steps will disable the anonymous telemetry for your Netdata installation.

-Please note, even with telemetry disabled, Netdata still requires a [Netdata Registry](https://learn.netdata.cloud/docs/configuring/securing-netdata-agents/registry) for alert notifications' Call To Action (CTA) functionality. When you click an alert notification, it redirects you to the Netdata Registry, which then directs your web browser to the specific Netdata Agent that issued the alert for further troubleshooting. The Netdata Registry learns the URLs of your agents when you visit their dashboards.

+Please note, even with telemetry disabled, Netdata still requires a [Netdata Registry](https://learn.netdata.cloud/docs/configuring/securing-netdata-agents/registry) for alert notifications' Call To Action (CTA) functionality. When you click an alert notification, it redirects you to the Netdata Registry, which then directs your web browser to the specific Netdata Agent that issued the alert for further troubleshooting. The Netdata Registry learns the URLs of your Agents when you visit their dashboards.

Any Netdata Agent can act as a Netdata Registry. Designate one Netdata Agent as your registry, and our global Netdata Registry will no longer be in use. For further information on this, please refer to [this guide](https://learn.netdata.cloud/docs/configuring/securing-netdata-agents/registry).

diff --git a/docs/alerts-and-notifications/notifications/README.md b/docs/alerts-and-notifications/notifications/README.md

index 870076b974e584..2efcdbe48b041c 100644

--- a/docs/alerts-and-notifications/notifications/README.md

+++ b/docs/alerts-and-notifications/notifications/README.md

@@ -6,4 +6,4 @@ This section includes the documentation of the integrations for both of Netdata'

- Netdata Cloud provides centralized alert notifications, utilizing the health status data already sent to Netdata Cloud from connected nodes to send alerts to configured integrations. [Supported integrations](/docs/alerts-&-notifications/notifications/centralized-cloud-notifications) include Amazon SNS, Discord, Slack, Splunk, and others.

-- The Netdata Agent offers a [wider range of notification options](/docs/alerts-&-notifications/notifications/agent-dispatched-notifications) directly from the agent itself. You can choose from over a dozen services, including email, Slack, PagerDuty, Twilio, and others, for more granular control over notifications on each node.

+- The Netdata Agent offers a [wider range of notification options](/docs/alerts-&-notifications/notifications/agent-dispatched-notifications) directly from the Agent itself. You can choose from over a dozen services, including email, Slack, PagerDuty, Twilio, and others, for more granular control over notifications on each node.

diff --git a/docs/dashboards-and-charts/README.md b/docs/dashboards-and-charts/README.md

index f94d776a3a1230..3008cfccb37085 100644

--- a/docs/dashboards-and-charts/README.md

+++ b/docs/dashboards-and-charts/README.md

@@ -25,7 +25,7 @@ The Netdata dashboard consists of the following main sections:

> **Note**

>

-> Some sections of the dashboard, when accessed through the agent, may require the user to be signed in to Netdata Cloud or have the Agent claimed to Netdata Cloud for their full functionality. Examples include saving visualization settings on charts or custom dashboards, claiming the node to Netdata Cloud, or executing functions on an Agent.

+> Some sections of the dashboard, when accessed through the Agent, may require the user to be signed in to Netdata Cloud or have the Agent claimed to Netdata Cloud for their full functionality. Examples include saving visualization settings on charts or custom dashboards, claiming the node to Netdata Cloud, or executing functions on an Agent.

## How to access the dashboards?

diff --git a/docs/dashboards-and-charts/events-feed.md b/docs/dashboards-and-charts/events-feed.md

index 34d6ee0e652542..8e31ebb5f453f0 100644

--- a/docs/dashboards-and-charts/events-feed.md

+++ b/docs/dashboards-and-charts/events-feed.md

@@ -49,8 +49,8 @@ At a high-level view, these are the domains from which the Events feed will prov

| Node Removed | The node was removed from the Space, for example by using the `Delete` action on the node. This is a soft delete in that the node gets marked as deleted, but retains the association with this space. If it becomes live again, it will be restored (see `Node Restored` below) and reappear in this space as before. | Node `ip-xyz.ec2.internal` was **deleted (soft)** |

| Node Restored | The node was restored. See `Node Removed` above. | Node `ip-xyz.ec2.internal` was **restored** |

| Node Deleted | The node was deleted from the Space. This is a hard delete and no information on the node is retained. | Node `ip-xyz.ec2.internal` was **deleted (hard)** |

-| Agent Connected | The agent connected to the Cloud MQTT server (Agent-Cloud Link established).<br/>These events can only be seen on _All nodes_ Room. | Agent with claim ID `7d87bqs9-cv42-4823-8sd4-3614548850c7` has connected to Cloud. |

-| Agent Disconnected | The agent disconnected from the Cloud MQTT server (Agent-Cloud Link severed).<br/>These events can only be seen on _All nodes_ Room. | Agent with claim ID `7d87bqs9-cv42-4823-8sd4-3614548850c7` has disconnected from Cloud: **Connection Timeout**. |

+| Agent Connected | The Agent connected to the Cloud MQTT server (Agent-Cloud Link established).<br/>These events can only be seen on _All nodes_ Room. | Agent with claim ID `7d87bqs9-cv42-4823-8sd4-3614548850c7` has connected to Cloud. |

+| Agent Disconnected | The Agent disconnected from the Cloud MQTT server (Agent-Cloud Link severed).<br/>These events can only be seen on _All nodes_ Room. | Agent with claim ID `7d87bqs9-cv42-4823-8sd4-3614548850c7` has disconnected from Cloud: **Connection Timeout**. |

| Space Statistics | Daily snapshot of space node statistics.<br/>These events can only be seen on _All nodes_ Room. | Space statistics. Nodes: **22 live**, **21 stale**, **18 removed**, **61 total**. |

### Alert events

diff --git a/docs/dashboards-and-charts/netdata-charts.md b/docs/dashboards-and-charts/netdata-charts.md

index c7563aa2901a4c..50b7c15a2b5bfb 100644

--- a/docs/dashboards-and-charts/netdata-charts.md

+++ b/docs/dashboards-and-charts/netdata-charts.md

@@ -274,7 +274,7 @@ Finally, you can reset everything to its defaults by clicking the green "Reset"

## Anomaly Rate ribbon

-Netdata's unsupervised machine learning algorithm creates a unique model for each metric collected by your agents, using exclusively the metric's past data.

+Netdata's unsupervised machine learning algorithm creates a unique model for each metric collected by your Agents, using exclusively the metric's past data.

It then uses these unique models during data collection to predict the value that should be collected and check if the collected value is within the range of acceptable values based on past patterns and behavior.

If the value collected is an outlier, it is marked as anomalous.

diff --git a/docs/developer-and-contributor-corner/style-guide.md b/docs/developer-and-contributor-corner/style-guide.md

index b64a9df0bffff3..16e07f54d06fb2 100644

--- a/docs/developer-and-contributor-corner/style-guide.md

+++ b/docs/developer-and-contributor-corner/style-guide.md

@@ -160,8 +160,7 @@ capitalization. In summary:

Docker, Apache, NGINX)

- Avoid camel case (NetData) or all caps (NETDATA).

-Whenever you refer to the company Netdata, Inc., or the open-source monitoring agent the company develops, capitalize

-**Netdata**.

+Whenever you refer to the company Netdata, Inc., or the open-source monitoring Agent the company develops, capitalize **Netdata** (and **Agent**, as in **Netdata Agent**).

However, if you are referring to a process, user, or group on a Linux system, use lowercase and fence the word in an

inline code block: `` `netdata` ``.

diff --git a/docs/glossary.md b/docs/glossary.md

index 78ba180728494b..873f5e27585bfe 100644

--- a/docs/glossary.md

+++ b/docs/glossary.md

@@ -128,7 +128,7 @@ metrics, troubleshoot complex performance problems, and make data interoperable

## S

-- [**Single Node Dashboard**](/docs/dashboards-and-charts/metrics-tab-and-single-node-tabs.md): A dashboard pre-configured with every installation of the Netdata agent, with thousand of metrics and hundreds of interactive charts that requires no set up.

+- [**Single Node Dashboard**](/docs/dashboards-and-charts/metrics-tab-and-single-node-tabs.md): A dashboard pre-configured with every installation of the Netdata Agent, with thousands of metrics and hundreds of interactive charts that require no setup.

- [**Space**](/docs/netdata-cloud/organize-your-infrastructure-invite-your-team.md#netdata-cloud-spaces): A high-level container and virtual collaboration area where you can organize team members, access levels,and the nodes you want to monitor.

diff --git a/docs/netdata-agent/README.md b/docs/netdata-agent/README.md

index 8096e911a0e27a..ef538f2426b60f 100644

--- a/docs/netdata-agent/README.md

+++ b/docs/netdata-agent/README.md

@@ -59,7 +59,7 @@ stateDiagram-v2

6. **Check**: a health engine, triggering alerts and sending notifications. Netdata comes with hundreds of alert configurations that are automatically attached to metrics when they get collected, detecting errors, common configuration errors and performance issues.

7. **Query**: a query engine for querying time-series data.

8. **Score**: a scoring engine for comparing and correlating metrics.

-9. **Stream**: a mechanism to connect Netdata agents and build Metrics Centralization Points (Netdata Parents).

+9. **Stream**: a mechanism to connect Netdata Agents and build Metrics Centralization Points (Netdata Parents).

10. **Visualize**: Netdata's fully automated dashboards for all metrics.

11. **Export**: export metric samples to 3rd party time-series databases, enabling the use of 3rd party tools for visualization, like Grafana.

@@ -77,8 +77,8 @@ stateDiagram-v2

## Dashboard Versions

-The Netdata agents (Standalone, Children and Parents) **share the dashboard** of Netdata Cloud. However, when the user is logged in and the Netdata agent is connected to Netdata Cloud, the following are enabled (which are otherwise disabled):

+The Netdata Agents (Standalone, Children and Parents) **share the dashboard** of Netdata Cloud. However, when the user is logged in and the Agent is connected to the Cloud, the following are enabled (which are otherwise disabled):

-1. **Access to Sensitive Data**: Some data, like systemd-journal logs and several [Top Monitoring](/docs/top-monitoring-netdata-functions.md) features expose sensitive data, like IPs, ports, process command lines and more. To access all these when the dashboard is served directly from a Netdata agent, Netdata Cloud is required to verify that the user accessing the dashboard has the required permissions.

+1. **Access to Sensitive Data**: Some data, like systemd-journal logs and several [Top Monitoring](/docs/top-monitoring-netdata-functions.md) features expose sensitive data, like IPs, ports, process command lines and more. To access all these when the dashboard is served directly from an Agent, Netdata Cloud is required to verify that the user accessing the dashboard has the required permissions.

-2. **Dynamic Configuration**: Netdata agents are configured via configuration files, manually or through some provisioning system. The latest Netdata includes a feature to allow users to change some configurations (collectors, alerts) via the dashboard. This feature is only available to users of paid Netdata Cloud plan.

+2. **Dynamic Configuration**: Netdata Agents are configured via configuration files, manually or through some provisioning system. The latest Netdata includes a feature to allow users to change some configurations (collectors, alerts) via the dashboard. This feature is only available to users of a paid Netdata Cloud plan.

diff --git a/docs/netdata-agent/backup-and-restore-an-agent.md b/docs/netdata-agent/backup-and-restore-an-agent.md

index db9398b2782297..e0b8869ed29cec 100644

--- a/docs/netdata-agent/backup-and-restore-an-agent.md

+++ b/docs/netdata-agent/backup-and-restore-an-agent.md

@@ -34,18 +34,18 @@ In this standard scenario, you’re backing up your Netdata Agent in case of a n

sudo tar -cvpzf netdata_backup.tar.gz /etc/netdata/ /var/cache/netdata /var/lib/netdata

```

- Stopping the Netdata agent is typically necessary to back up the database files of the Netdata Agent.

+ Stopping the Netdata Agent is typically necessary to back up its database files.

If you want to minimize the gap in metrics caused by stopping the Netdata Agent, consider implementing a backup job or script that follows this sequence:

- Backup the Agent configuration Identity directories

- Stop the Netdata service

- Backup up the database files

-- Restart the netdata agent.

+- Restart the Netdata Agent.

### Restoring Netdata

-1. Ensure that the Netdata agent is installed and is [stopped](/docs/netdata-agent/start-stop-restart.md)

+1. Ensure that the Netdata Agent is installed and is [stopped](/docs/netdata-agent/start-stop-restart.md)

If you plan to deploy the Agent and restore a backup on top of it, then you might find it helpful to use the [`--dont-start-it`](/packaging/installer/methods/kickstart.md#other-options) option upon installation.

@@ -66,4 +66,4 @@ If you want to minimize the gap in metrics caused by stopping the Netdata Agent,

sudo tar -xvpzf /path/to/netdata_backup.tar.gz -C /

```

-3. [Start the Netdata agent](/docs/netdata-agent/start-stop-restart.md)

+3. [Start the Netdata Agent](/docs/netdata-agent/start-stop-restart.md)

diff --git a/docs/netdata-agent/configuration/anonymous-telemetry-events.md b/docs/netdata-agent/configuration/anonymous-telemetry-events.md

index 4d48de4a219899..a5b4880c92141b 100644

--- a/docs/netdata-agent/configuration/anonymous-telemetry-events.md

+++ b/docs/netdata-agent/configuration/anonymous-telemetry-events.md

@@ -1,7 +1,6 @@

# Anonymous telemetry events

-By default, Netdata collects anonymous usage information from the open-source monitoring agent. For agent events like start, stop, crash, etc. we use our own cloud function in GCP. For frontend telemetry (page views etc.) on the agent dashboard itself, we use the open-source

-product analytics platform [PostHog](https://github.com/PostHog/posthog).

+By default, Netdata collects anonymous usage information from the open-source monitoring Agent. For events like start, stop, and crash, we use our own cloud function in GCP. For frontend telemetry (page views, etc.) on the dashboard itself, we use the open-source product analytics platform [PostHog](https://github.com/PostHog/posthog).

We are strongly committed to your [data privacy](https://netdata.cloud/privacy/).

@@ -10,7 +9,7 @@ We use the statistics gathered from this information for two purposes:

1. **Quality assurance**, to help us understand if Netdata behaves as expected, and to help us classify repeated

issues with certain distributions or environments.

-2. **Usage statistics**, to help us interpret how people use the Netdata agent in real-world environments, and to help

+2. **Usage statistics**, to help us interpret how people use the Netdata Agent in real-world environments, and to help

us identify how our development/design decisions influence the community.

Netdata collects usage information via two different channels:

@@ -59,7 +58,7 @@ filename and source code line number of the fatal error.

Starting with v1.21, we additionally collect information about:

- Failures to build the dependencies required to use Cloud features.

-- Unavailability of Cloud features in an agent.

+- Unavailability of Cloud features in an Agent.

- Failures to connect to the Cloud in case the [connection process](/src/claim/README.md) has been completed. This includes error codes

to inform the Netdata team about the reason why the connection failed.

diff --git a/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md b/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md

index ff51fbf78e44a4..26abcb38ee45a4 100644

--- a/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md

+++ b/docs/netdata-agent/configuration/optimize-the-netdata-agents-performance.md

@@ -26,7 +26,7 @@ The following table summarizes the effect of each optimization on the CPU, RAM a

| [Use a different metric storage database](/src/database/README.md) | | :heavy_check_mark: | :heavy_check_mark: |

| [Disable machine learning](#disable-machine-learning) | :heavy_check_mark: | | |

| [Use a reverse proxy](#run-netdata-behind-a-proxy) | :heavy_check_mark: | | |

-| [Disable/lower gzip compression for the agent dashboard](#disablelower-gzip-compression-for-the-dashboard) | :heavy_check_mark: | | |

+| [Disable/lower gzip compression for the Agent dashboard](#disablelower-gzip-compression-for-the-dashboard) | :heavy_check_mark: | | |

## Resources required by a default Netdata installation

@@ -62,7 +62,7 @@ To reduce CPU usage, you can (either one or a combination of the following actio

3. [Reduce the data collection frequency](#reduce-collection-frequency)

4. [Disable unneeded plugins or collectors](#disable-unneeded-plugins-or-collectors)

5. [Use a reverse proxy](#run-netdata-behind-a-proxy),

-6. [Disable/lower gzip compression for the agent dashboard](#disablelower-gzip-compression-for-the-dashboard).

+6. [Disable/lower gzip compression for the Agent dashboard](#disablelower-gzip-compression-for-the-dashboard).

### Memory consumption

@@ -111,7 +111,7 @@ using [streaming and replication](/docs/observability-centralization-points/READ

### Disable health checks on the child nodes

When you set up streaming, we recommend you run your health checks on the parent. This saves resources on the children

-and makes it easier to configure or disable alerts and agent notifications.

+and makes it easier to configure or disable alerts and Agent notifications.

The parents by default run health checks for each child, as long as the child is connected (the details are

in `stream.conf`). On the child nodes you should add to `netdata.conf` the following:

diff --git a/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md b/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md

index 2282cbc44e96eb..8c0c11bc1fac2a 100644

--- a/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md

+++ b/docs/netdata-agent/configuration/optimizing-metrics-database/change-metrics-storage.md

@@ -17,8 +17,7 @@ With these defaults, Netdata requires approximately 4 GiB of storage space (incl

## Retention Settings

-> **In a parent-child setup**, these settings manage the shared storage space used by the Netdata parent agent for

-> storing metrics collected by both the parent and its child nodes.

+> **In a parent-child setup**, these settings manage the shared storage space used by the Netdata parent Agent for storing metrics collected by both the parent and its child nodes.

You can fine-tune retention for each tier by setting a time limit or size limit. Setting a limit to 0 disables it,

allowing for no time-based deletion for that tier or using all available space, respectively. This enables various

diff --git a/docs/netdata-agent/configuration/organize-systems-metrics-and-alerts.md b/docs/netdata-agent/configuration/organize-systems-metrics-and-alerts.md

index f7f56279b7df7c..efc38c00f5c1d7 100644

--- a/docs/netdata-agent/configuration/organize-systems-metrics-and-alerts.md

+++ b/docs/netdata-agent/configuration/organize-systems-metrics-and-alerts.md

@@ -104,8 +104,7 @@ can reload labels using the helpful `netdatacli` tool:

netdatacli reload-labels

```

-Your host labels will now be enabled. You can double-check these by using `curl http://HOST-IP:19999/api/v1/info` to

-read the status of your agent. For example, from a VPS system running Debian 10:

+Your host labels will now be enabled. You can double-check these by using `curl http://HOST-IP:19999/api/v1/info` to read the status of your Agent. For example, from a VPS system running Debian 10:

```json

{

@@ -232,7 +231,7 @@ All go.d plugin collectors support the specification of labels at the "collectio

labels (e.g. generic Prometheus collector, Kubernetes, Docker and more). But you can also add your own custom labels by configuring

the data collection jobs.

-For example, suppose we have a single Netdata agent, collecting data from two remote Apache web servers, located in different data centers.

+For example, suppose we have a single Netdata Agent collecting data from two remote Apache web servers located in different data centers.

The web servers are load balanced and provide access to the service "Payments".

You can define the following in `go.d.conf`, to be able to group the web requests by service or location:

diff --git a/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/README.md b/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/README.md

index a0810bb5103924..af35c3c662b387 100644

--- a/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/README.md

+++ b/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/README.md

@@ -1,6 +1,6 @@

# Running the Netdata Agent behind a reverse proxy

-If you need to access a Netdata agent's user interface or API in a production environment we recommend you put Netdata behind

+If you need to access a Netdata Agent's user interface or API in a production environment, we recommend you put Netdata behind

another web server and secure access to the dashboard via SSL, user authentication and firewall rules.

A dedicated web server also provides more robustness and capabilities than the Agent's [internal web server](/src/web/README.md).

diff --git a/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/Running-behind-nginx.md b/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/Running-behind-nginx.md

index c0364633a5a950..d38fbe8272e786 100644

--- a/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/Running-behind-nginx.md

+++ b/docs/netdata-agent/configuration/running-the-netdata-agent-behind-a-reverse-proxy/Running-behind-nginx.md

@@ -12,7 +12,7 @@ The software is known for its low impact on memory resources, high scalability,

- Nginx is used and useful in cases when you want to access different instances of Netdata from a single server.

-- Password-protect access to Netdata, until distributed authentication is implemented via the Netdata cloud Sign In mechanism.

+- Password-protect access to Netdata, until distributed authentication is implemented via the Netdata Cloud Sign In mechanism.

- A proxy was necessary to encrypt the communication to Netdata, until v1.16.0, which provided TLS (HTTPS) support.

diff --git a/docs/netdata-agent/sizing-netdata-agents/bandwidth-requirements.md b/docs/netdata-agent/sizing-netdata-agents/bandwidth-requirements.md

index fbbc279d559c74..954860b923dd62 100644

--- a/docs/netdata-agent/sizing-netdata-agents/bandwidth-requirements.md

+++ b/docs/netdata-agent/sizing-netdata-agents/bandwidth-requirements.md

@@ -44,4 +44,4 @@ The information transferred to Netdata Cloud is:

This is not a constant stream of information. Netdata Agents update Netdata Cloud only about status changes on all the above (e.g., an alert being triggered, or a metric stopped being collected). So, there is an initial handshake and exchange of information when Netdata starts, and then there only updates when required.

-Of course, when you view Netdata Cloud dashboards that need to query the database a Netdata agent maintains, this query is forwarded to an agent that can satisfy it. This means that Netdata Cloud receives metric samples only when a user is accessing a dashboard and the samples transferred are usually aggregations to allow rendering the dashboards.

+Of course, when you view Netdata Cloud dashboards that need to query the database a Netdata Agent maintains, this query is forwarded to an Agent that can satisfy it. This means that Netdata Cloud receives metric samples only when a user is accessing a dashboard and the samples transferred are usually aggregations to allow rendering the dashboards.

diff --git a/docs/netdata-cloud/README.md b/docs/netdata-cloud/README.md

index 6a2406aebf7100..73a0bcc658ebb8 100644

--- a/docs/netdata-cloud/README.md

+++ b/docs/netdata-cloud/README.md

@@ -37,7 +37,7 @@ flowchart TB

NC <-->|secure connection| Agents

```

-Netdata Cloud provides the following features, on top of what the Netdata agents already provide:

+Netdata Cloud provides the following features, on top of what the Netdata Agents already provide:

1. **Horizontal scalability**: Netdata Cloud allows scaling the observability infrastructure horizontally, by adding more independent Netdata Parents and Children. It can aggregate such, otherwise independent, observability islands into one uniform and integrated infrastructure.

@@ -45,11 +45,11 @@ Netdata Cloud provides the following features, on top of what the Netdata agents

2. **Role Based Access Control (RBAC)**: Netdata Cloud has all the mechanisms for user-management and access control. It allows assigning all users a role, segmenting the infrastructure into rooms, and associating Rooms with roles and users.

-3. **Access from anywhere**: Netdata agents are installed on-prem and this is where all your data are always stored. Netdata Cloud allows querying all the Netdata agents (Standalone, Children and Parents) in real-time when dashboards are accessed via Netdata Cloud.

+3. **Access from anywhere**: Netdata Agents are installed on-prem and this is where all your data are always stored. Netdata Cloud allows querying all the Netdata Agents (Standalone, Children and Parents) in real-time when dashboards are accessed via Netdata Cloud.

This enables a much simpler access control, eliminating the complexities of setting up VPNs to access observability, and the bandwidth costs for centralizing all metrics to one place.

-4. **Central dispatch of alert notifications**: Netdata Cloud allows controlling the dispatch of alert notifications centrally. By default, all Netdata agents (Standalone, Children and Parents) send their own notifications. This becomes increasingly complex as the infrastructure grows. So, Netdata Cloud steps in to simplify this process and provide central control of all notifications.

+4. **Central dispatch of alert notifications**: Netdata Cloud allows controlling the dispatch of alert notifications centrally. By default, all Netdata Agents (Standalone, Children and Parents) send their own notifications. This becomes increasingly complex as the infrastructure grows. So, Netdata Cloud steps in to simplify this process and provide central control of all notifications.

Netdata Cloud also enables the use of the **Netdata Mobile App** offering mobile push notifications for all users in commercial plans.

@@ -61,18 +61,18 @@ Netdata Cloud provides the following features, on top of what the Netdata agents

## Data Exposed to Netdata Cloud

-Netdata is thin layer of top of Netdata agents. It does not receive the samples collected, or the logs Netdata agents maintain.

+Netdata Cloud is a thin layer on top of Netdata Agents. It does not receive the collected samples or the logs that Netdata Agents maintain.

This is a key design decision for Netdata. If we were centralizing metric samples and logs, Netdata would have the same constrains and cost structure other observability solutions have, and we would be forced to lower metrics resolution, filter out metrics and eventually increase significantly the cost of observability.

Instead, Netdata Cloud receives and stores only metadata related to the metrics collected, such as the nodes collecting metrics and their labels, the metric names, their labels and their retention, the data collection plugins and modules running, the configured alerts and their transitions.

-This information is a small fraction of the total information maintained by Netdata agents, allowing Netdata Cloud to remain high-resolution, high-fidelity and real-time, while being able to:

+This information is a small fraction of the total information maintained by Netdata Agents, allowing Netdata Cloud to remain high-resolution, high-fidelity and real-time, while being able to:

- dispatch alerts centrally for all alert transitions.

-- know which Netdata agents to query when users view the dashboards.

+- know which Netdata Agents to query when users view the dashboards.

-Metric samples and logs are transferred via Netdata Cloud to your Web Browser, only when you view them via Netdata Cloud. And even then, Netdata Cloud does not store this information. It only aggregates the responses of multiple Netdata agents to a single response for your web browser to visualize.

+Metric samples and logs are transferred via Netdata Cloud to your web browser only when you view them via Netdata Cloud. Even then, Netdata Cloud does not store this information; it only aggregates the responses of multiple Netdata Agents into a single response for your web browser to visualize.

## High-Availability

@@ -80,38 +80,38 @@ You can subscribe to Netdata Cloud updates at the [Netdata Cloud Status](https:/

Netdata Cloud is a highly available, auto-scalable solution. However, as a monitoring solution, it must ensure that dashboards remain accessible during a crisis.

-Netdata agents provide the same dashboard Netdata Cloud provides, with the following limitations:

+Netdata Agents provide the same dashboard Netdata Cloud provides, with the following limitations:

-1. Netdata agents (Children and Parents) dashboards are limited to their databases, while on Netdata Cloud the dashboard presents the entire infrastructure, from all Netdata agents connected to it.

+1. Netdata Agent dashboards (Children and Parents) are limited to their own databases, while the Netdata Cloud dashboard presents the entire infrastructure, from all Netdata Agents connected to it.

-2. When you are not logged-in or the agent is not connected to Netdata Cloud, certain features of the Netdata agent dashboard will not be available.

+2. When you are not logged in or the Agent is not connected to Netdata Cloud, certain features of the Netdata Agent dashboard will not be available.

- When you are logged-in and the agent is connected to Netdata Cloud, the agent dashboard has the same functionality as Netdata Cloud.

+ When you are logged in and the Agent is connected to Netdata Cloud, the dashboard has the same functionality as Netdata Cloud.

-To ensure dashboard high availability, Netdata agent dashboards are available by directly accessing them, even when the connectivity between Children and Parents or Netdata Cloud faces issues. This allows the use of the individual Netdata agents' dashboards during crisis, at different levels of aggregation.

+To ensure high availability, Netdata Agent dashboards remain directly accessible, even when connectivity between Children and Parents, or to Netdata Cloud, faces issues. This allows you to use individual Netdata Agents' dashboards during a crisis, at different levels of aggregation.

## Fidelity and Insights

-Netdata Cloud queries Netdata agents, so it provides exactly the same fidelity and insights Netdata agents provide. Dashboards have the same resolution, the same number of metrics, exactly the same data.

+Netdata Cloud queries Netdata Agents, so it provides exactly the same fidelity and insights that Netdata Agents provide. Dashboards have the same resolution, the same number of metrics, and exactly the same data.

## Performance

-The Netdata agent and Netdata Cloud have similar query performance, but there are additional network latencies involved when the dashboards are viewed via Netdata Cloud.

+The Netdata Agent and Netdata Cloud have similar query performance, but viewing dashboards via Netdata Cloud involves additional network latency.

-Accessing Netdata agents on the same LAN has marginal network latency and their response time is only affected by the queries. However, accessing the same Netdata agents via Netdata Cloud has a bigger network round-trip time, that looks like this:

+Accessing Netdata Agents on the same LAN involves marginal network latency, so their response time is affected only by the queries. However, accessing the same Netdata Agents via Netdata Cloud involves a longer network round trip, which looks like this:

1. Your web browser makes a request to Netdata Cloud.

-2. Netdata Cloud sends the request to your Netdata agents. If multiple Netdata agents are involved, they are queried in parallel.

+2. Netdata Cloud sends the request to your Netdata Agents. If multiple Netdata Agents are involved, they are queried in parallel.

3. Netdata Cloud receives their responses and aggregates them into a single response.

4. Netdata Cloud replies to your web browser.

-If you are sitting on the same LAN as the Netdata agents, the latency will be 2 times the round-trip network latency between this LAN and Netdata Cloud.

+If you are on the same LAN as the Netdata Agents, the network latency will be twice the round-trip latency between this LAN and Netdata Cloud.

-However, when there are multiple Netdata agents involved, the queries will be faster compared to a monitoring solution that has one centralization point. Netdata Cloud splits each query into multiple parts and each of the Netdata agents involved will only perform a small part of the original query. So, when querying a large infrastructure, you enjoy the performance of the combined power of all your Netdata agents, which is usually quite higher than any single-centralization-point monitoring solution.

+However, when multiple Netdata Agents are involved, queries will be faster than with a monitoring solution that has a single centralization point. Netdata Cloud splits each query into multiple parts, and each Netdata Agent involved performs only a small part of the original query. So, when querying a large infrastructure, you enjoy the combined power of all your Netdata Agents, which is usually much higher than that of any single-centralization-point monitoring solution.
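As a rough illustration of the fan-out and aggregation described in this section, here is a minimal Python sketch. It is not Netdata Cloud's implementation: `fan_out_query` and `query_agent` are hypothetical names, `query_agent` stands in for the real Cloud-to-Agent request, each Agent is assumed to return `{timestamp: value}` for its share of the metrics, and summing per timestamp is just one example aggregation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Hypothetical shape of a partial answer from one Agent: {timestamp: value}.
Partial = Dict[int, float]


def fan_out_query(agents: List[str], query_agent: Callable[[str], Partial]) -> Partial:
    """Send the same query to every Agent in parallel and merge the answers."""
    # Each Agent computes only its own slice of the query, concurrently.
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        partials = list(pool.map(query_agent, agents))

    # Aggregate the partial answers into a single response (sum per timestamp).
    merged: Partial = {}
    for partial in partials:
        for ts, value in partial.items():
            merged[ts] = merged.get(ts, 0.0) + value
    return merged


if __name__ == "__main__":
    # Fake Agents standing in for real network calls.
    fake_answers = {
        "child-1": {1: 1.0, 2: 2.0},
        "child-2": {1: 3.0, 2: 4.0},
    }
    print(fan_out_query(list(fake_answers), fake_answers.__getitem__))  # prints {1: 4.0, 2: 6.0}
```

Because the Agents in this sketch work concurrently, the wall-clock time is bounded roughly by the slowest Agent plus the aggregation step, rather than by the sum of all Agents' query times.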

## Does Netdata Cloud require Observability Centralization Points?

-No. Any or all Netdata agents can be connected to Netdata Cloud.

+No. Any or all Netdata Agents can be connected to Netdata Cloud.

We recommend creating [observability centralization points](/docs/observability-centralization-points/README.md) as required for operational efficiency (ephemeral nodes, teams or services isolation, central control of alerts, production systems performance), security policies (internet isolation), or cost optimization (use existing capacities before allocating new ones).

diff --git a/docs/netdata-cloud/authentication-and-authorization/api-tokens.md b/docs/netdata-cloud/authentication-and-authorization/api-tokens.md

index a8f304ffba9aac..d5d88779c67714 100644

--- a/docs/netdata-cloud/authentication-and-authorization/api-tokens.md

+++ b/docs/netdata-cloud/authentication-and-authorization/api-tokens.md

@@ -2,9 +2,7 @@

## Overview

-Every single user can get access to the Netdata resource programmatically. It is done through the API Token which

-can be also called as Bearer Token. This token is used for authentication and authorization, it can be issued

-in the Netdata UI under the user Settings:

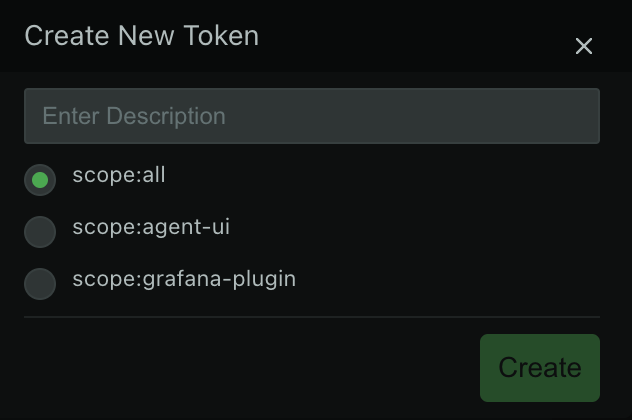

+Every user can access Netdata resources programmatically through an API Token, also called a Bearer Token. This token is used for authentication and authorization, and it can be issued in the Netdata UI under the user Settings:

@@ -16,18 +14,18 @@ The API Tokens are not going to expire and can be limited to a few scopes:

* `scope:agent-ui`

- this token is mainly used by the local Netdata agent accessing the Cloud UI

+ this token is mainly used by the local Netdata Agent accessing the Cloud UI

* `scope:grafana-plugin`

this token is used for the [Netdata Grafana plugin](https://github.com/netdata/netdata-grafana-datasource-plugin/blob/master/README.md)

to access Netdata charts

-Currently, the Netdata Cloud is not exposing stable API.

+Currently, Netdata Cloud does not expose a stable API.

## Example usage

-* get the cloud space list

+* get the Netdata Cloud space list

```console

curl -H 'Accept: application/json' -H "Authorization: Bearer " https://app.netdata.cloud/api/v2/spaces

diff --git a/docs/netdata-cloud/netdata-cloud-on-prem/README.md b/docs/netdata-cloud/netdata-cloud-on-prem/README.md

index 49373c454cfaa9..df53e06982a87e 100644

--- a/docs/netdata-cloud/netdata-cloud-on-prem/README.md

+++ b/docs/netdata-cloud/netdata-cloud-on-prem/README.md

@@ -6,7 +6,7 @@ The overall architecture looks like this:

```mermaid

flowchart TD

- agents("🌍 Netdata Agents

Users' infrastructure

Netdata Children & Parents")

+ agents("🌍 Netdata Agents

Users' infrastructure

Netdata Children & Parents")

users[["🔥 Unified Dashboards

Integrated Infrastructure

Dashboards"]]

ingress("🛡️ Ingress Gateway

TLS termination")

traefik((("🔒 Traefik

Authentication &

Authorization")))

@@ -15,7 +15,7 @@ flowchart TD

frontend("🌐 Front-End

Static Web Files")

auth("👨‍💼 Users & Agents

Authorization

Microservices")

spaceroom("🏡 Spaces, Rooms,

Nodes, Settings

Microservices for

managing Spaces,

Rooms, Nodes and

related settings")

- charts("📈 Metrics & Queries

Microservices for

dispatching queries

to Netdata agents")

+ charts("📈 Metrics & Queries

Microservices for

dispatching queries

to Netdata Agents")

alerts("🔔 Alerts & Notifications

Microservices for

tracking alert

transitions and

deduplicating alerts")

sql[("✨ PostgreSQL

Users, Spaces, Rooms,

Agents, Nodes, Metric

Names, Metrics Retention,

Custom Dashboards,

Settings")]

redis[("🗒️ Redis

Caches needed

by Microservices")]

diff --git a/docs/netdata-cloud/netdata-cloud-on-prem/installation.md b/docs/netdata-cloud/netdata-cloud-on-prem/installation.md

index a23baa99caa8b2..7082e96cd73ec7 100644

--- a/docs/netdata-cloud/netdata-cloud-on-prem/installation.md

+++ b/docs/netdata-cloud/netdata-cloud-on-prem/installation.md

@@ -123,59 +123,59 @@ Responsible for user registration & authentication. Manages user account informa

### cloud-agent-data-ctrl-service

-Forwards request from the cloud to the relevant agents.

+Forwards requests from the Cloud to the relevant Agents.

The requests include:

-- Fetching chart metadata from the agent

-- Fetching chart data from the agent

-- Fetching function data from the agent

+- Fetching chart metadata from the Agent

+- Fetching chart data from the Agent

+- Fetching function data from the Agent

### cloud-agent-mqtt-input-service

-Forwards MQTT messages emitted by the agent related to the agent entities to the internal Pulsar broker. These include agent connection state updates.

+Forwards MQTT messages emitted by the Agent related to the Agent entities to the internal Pulsar broker. These include Agent connection state updates.

### cloud-agent-mqtt-output-service

-Forwards Pulsar messages emitted in the cloud related to the agent entities to the MQTT broker. From there, the messages reach the relevant agent.

+Forwards Pulsar messages emitted in the Cloud related to the Agent entities to the MQTT broker. From there, the messages reach the relevant Agent.

### cloud-alarm-config-mqtt-input-service

-Forwards MQTT messages emitted by the agent related to the alarm-config entities to the internal Pulsar broker. These include the data for the alarm configuration as seen by the agent.

+Forwards MQTT messages emitted by the Agent related to the alarm-config entities to the internal Pulsar broker. These include the data for the alarm configuration as seen by the Agent.

### cloud-alarm-log-mqtt-input-service

-Forwards MQTT messages emitted by the agent related to the alarm-log entities to the internal Pulsar broker. These contain data about the alarm transitions that occurred in an agent.

+Forwards MQTT messages emitted by the Agent related to the alarm-log entities to the internal Pulsar broker. These contain data about the alarm transitions that occurred in an Agent.

### cloud-alarm-mqtt-output-service

-Forwards Pulsar messages emitted in the cloud related to the alarm entities to the MQTT broker. From there, the messages reach the relevant agent.

+Forwards Pulsar messages emitted in the Cloud related to the alarm entities to the MQTT broker. From there, the messages reach the relevant Agent.

### cloud-alarm-processor-service

-Persists latest alert statuses received from the agent in the cloud.

+Persists the latest alert statuses received from the Agent in the Cloud.

Aggregates alert statuses from relevant node instances.

-Exposes API endpoints to fetch alert data for visualization on the cloud.

+Exposes API endpoints to fetch alert data for visualization on the Cloud.

Determines if notifications need to be sent when alert statuses change and emits relevant messages to Pulsar.

Exposes API endpoints to store and return notification-silencing data.

### cloud-alarm-streaming-service

-Responsible for starting the alert stream between the agent and the cloud.

-Ensures that messages are processed in the correct order, and starts a reconciliation process between the cloud and the agent if out-of-order processing occurs.

+Responsible for starting the alert stream between the Agent and the Cloud.

+Ensures that messages are processed in the correct order, and starts a reconciliation process between the Cloud and the Agent if out-of-order processing occurs.
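How out-of-order processing is detected is not specified here. One common approach, shown purely as a hypothetical Python sketch, is to tag each message with a per-Agent sequence number and fall back to a full resync on any gap. `AlertStream`, `receive`, and `reconcile` are illustrative names and are not part of `cloud-alarm-streaming-service`.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AlertStream:
    """Hypothetical per-Agent tracker for an ordered alert stream."""

    expected_seq: int = 1
    applied: List[int] = field(default_factory=list)
    reconciliations: int = 0

    def receive(self, seq: int) -> None:
        if seq == self.expected_seq:
            # In order: apply the incremental update and advance.
            self.applied.append(seq)
            self.expected_seq += 1
        else:
            # Gap or reordering detected: stop applying increments and resync.
            self.reconcile(latest_seq=seq)

    def reconcile(self, latest_seq: int) -> None:
        # Assumed behaviour: fetch a full snapshot of the Agent's alert state
        # covering everything up to `latest_seq`, then resume after it.
        self.reconciliations += 1
        self.expected_seq = max(self.expected_seq, latest_seq + 1)


if __name__ == "__main__":
    stream = AlertStream()
    for seq in (1, 2, 4, 5):  # message 3 never arrives
        stream.receive(seq)
    print(stream.applied, stream.reconciliations)  # prints [1, 2, 5] 1
```

Resyncing from a snapshot, instead of trying to replay the missing message, keeps the sketch simple: after a gap, the stream trusts the Agent's current state and continues from there.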

### cloud-charts-mqtt-input-service

-Forwards MQTT messages emitted by the agent related to the chart entities to the internal Pulsar broker. These include the chart metadata that is used to display relevant charts on the cloud.

+Forwards MQTT messages emitted by the Agent related to the chart entities to the internal Pulsar broker. These include the chart metadata that is used to display relevant charts on the Cloud.

### cloud-charts-mqtt-output-service

-Forwards Pulsar messages emitted in the cloud related to the charts entities to the MQTT broker. From there, the messages reach the relevant agent.

+Forwards Pulsar messages emitted in the Cloud related to the chart entities to the MQTT broker. From there, the messages reach the relevant Agent.

### cloud-charts-service

Exposes API endpoints to fetch the chart metadata.

-Forwards data requests via the `cloud-agent-data-ctrl-service` to the relevant agents to fetch chart data points.

-Exposes API endpoints to call various other endpoints on the agent, for instance, functions.

+Forwards data requests via the `cloud-agent-data-ctrl-service` to the relevant Agents to fetch chart data points.

+Exposes API endpoints to call various other endpoints on the Agent, for instance, functions.

### cloud-custom-dashboard-service

@@ -183,8 +183,8 @@ Exposes API endpoints to fetch and store custom dashboard data.

### cloud-environment-service

-Serves as the first contact point between the agent and the cloud.

-Returns authentication and MQTT endpoints to connecting agents.

+Serves as the first contact point between the Agent and the Cloud.

+Returns authentication and MQTT endpoints to connecting Agents.

### cloud-feed-service

@@ -193,7 +193,7 @@ Exposes API endpoints to fetch feed events from Elasticsearch.

### cloud-frontend

-Contains the on-prem cloud website. Serves static content.

+Contains the on-prem Cloud website. Serves static content.

### cloud-iam-user-service

@@ -209,11 +209,11 @@ Exposes API endpoints to fetch a human-friendly explanation of various netdata c

### cloud-node-mqtt-input-service

-Forwards MQTT messages emitted by the agent related to the node entities to the internal Pulsar broker. These include the node metadata as well as their connectivity state, either direct or via parents.

+Forwards MQTT messages emitted by the Agent related to the node entities to the internal Pulsar broker. These include the node metadata as well as their connectivity state, either direct or via parents.

### cloud-node-mqtt-output-service

-Forwards Pulsar messages emitted in the cloud related to the charts entities to the MQTT broker. From there, the messages reach the relevant agent.

+Forwards Pulsar messages emitted in the Cloud related to the node entities to the MQTT broker. From there, the messages reach the relevant Agent.

### cloud-notifications-dispatcher-service

@@ -222,6 +222,6 @@ Handles incoming notification messages and uses the relevant channels(email, sla

### cloud-spaceroom-service

-Exposes API endpoints to fetch and store relations between agents, nodes, spaces, users, and rooms.

-Acts as a provider of authorization for other cloud endpoints.

-Exposes API endpoints to authenticate agents connecting to the cloud.

+Exposes API endpoints to fetch and store relations between Agents, nodes, spaces, users, and rooms.

+Acts as a provider of authorization for other Cloud endpoints.

+Exposes API endpoints to authenticate Agents connecting to the Cloud.

diff --git a/docs/netdata-cloud/netdata-cloud-on-prem/troubleshooting.md b/docs/netdata-cloud/netdata-cloud-on-prem/troubleshooting.md

index ac8bdf6f871d34..39f60b10c7eed8 100644

--- a/docs/netdata-cloud/netdata-cloud-on-prem/troubleshooting.md

+++ b/docs/netdata-cloud/netdata-cloud-on-prem/troubleshooting.md

@@ -8,19 +8,19 @@ The following are questions that are usually asked by Netdata Cloud On-Prem oper

## Loading charts takes a long time or ends with an error

-The charts service is trying to collect data from the agents involved in the query. In most of the cases, this microservice queries many agents (depending on the Room), and all of them have to reply for the query to be satisfied.

+The charts service tries to collect data from the Agents involved in the query. In most cases, this microservice queries many Agents (depending on the Room), and all of them have to reply for the query to be satisfied.

One or more of the following may be the cause:

1. **Slow Netdata Agent or Netdata Agents with unreliable connections**

- If any of the Netdata agents queried is slow or has an unreliable network connection, the query will stall and Netdata Cloud will have timeout before responding.

+ If any of the Netdata Agents queried is slow or has an unreliable network connection, the query will stall and Netdata Cloud will time out before responding.

- When agents are overloaded or have unreliable connections, we suggest to install more Netdata Parents for providing reliable backends to Netdata Cloud. They will automatically be preferred for all queries, when available.

+ When Agents are overloaded or have unreliable connections, we suggest installing more Netdata Parents to provide reliable backends to Netdata Cloud. They will automatically be preferred for all queries, when available.

2. **Poor Kubernetes cluster management**

- Another common issue is poor management of the Kubernetes cluster. When a node of a Kubernetes cluster is saturated, or the limits set to its containers are small, Netdata Cloud microservices get throttled by Kubernetes and does not get the resources required to process the responses of Netdata agents and aggregate the results for the dashboard.

+ Another common issue is poor management of the Kubernetes cluster. When a node of a Kubernetes cluster is saturated, or the limits set to its containers are small, Netdata Cloud microservices get throttled by Kubernetes and do not get the resources required to process the responses of Netdata Agents and aggregate the results for the dashboard.

We recommend reviewing the throttling of the containers and increasing the limits if required.

diff --git a/docs/netdata-cloud/versions.md b/docs/netdata-cloud/versions.md

index 1bfd363d601421..37a59d3e2aadee 100644

--- a/docs/netdata-cloud/versions.md

+++ b/docs/netdata-cloud/versions.md

@@ -12,7 +12,7 @@ For more information check our [Pricing](https://www.netdata.cloud/pricing/) pag

## SaaS Version

-[Sign-up to Netdata Cloud](https://app.netdata.cloud) and start connecting your Netdata agents. The commands provided once you have signed up, include all the information to install and automatically connect (claim) Netdata agents to your Netdata Cloud space.

+[Sign up to Netdata Cloud](https://app.netdata.cloud) and start connecting your Netdata Agents. The commands provided once you have signed up include all the information needed to install Netdata Agents and automatically connect (claim) them to your Netdata Cloud space.

## On-Prem Version

diff --git a/docs/observability-centralization-points/best-practices.md b/docs/observability-centralization-points/best-practices.md

index 49bd3d6c3b8f81..74a84da1251db3 100644

--- a/docs/observability-centralization-points/best-practices.md

+++ b/docs/observability-centralization-points/best-practices.md

@@ -32,8 +32,8 @@ Compared to other observability solutions, the design of Netdata offers:

- **Optimized Cost and Performance**: By distributing the load across multiple centralization points, Netdata can optimize both performance and cost. This distribution allows for the efficient use of resources and helps mitigate the bottlenecks associated with a single centralization point.

-- **Simplicity**: Netdata agents (Children and Parents) require minimal configuration and maintenance, usually less than the configuration and maintenance required for the agents and exporters of other monitoring solutions. This provides an observability pipeline that has less moving parts and is easier to manage and maintain.

+- **Simplicity**: Netdata Agents (Children and Parents) require minimal configuration and maintenance, usually less than the configuration and maintenance required for the Agents and exporters of other monitoring solutions. This provides an observability pipeline that has fewer moving parts and is easier to manage and maintain.

-- **Always On-Prem**: Netdata centralization points are always on-prem. Even when Netdata Cloud is used, Netdata agents and parents are queried to provide the data required for the dashboards.

+- **Always On-Prem**: Netdata centralization points are always on-prem. Even when Netdata Cloud is used, Netdata Agents and Parents are queried to provide the data required for the dashboards.

- **Bottom-Up Observability**: Netdata is designed to monitor systems, containers and applications bottom-up, aiming to provide the maximum resolution, visibility, depth and insights possible. Its ability to segment the infrastructure into multiple independent observability centralization points with customized retention, machine learning and alerts on each of them, while providing unified infrastructure level dashboards at Netdata Cloud, provides a flexible environment that can be tailored per service or team, while still being one unified infrastructure.

diff --git a/docs/observability-centralization-points/metrics-centralization-points/configuration.md b/docs/observability-centralization-points/metrics-centralization-points/configuration.md

index d1f13f0501082f..2ba5d9b070a714 100644

--- a/docs/observability-centralization-points/metrics-centralization-points/configuration.md

+++ b/docs/observability-centralization-points/metrics-centralization-points/configuration.md

@@ -2,7 +2,7 @@

Metrics streaming configuration for both Netdata Children and Parents is done via `stream.conf`.

-`netdata.conf` and `stream.conf` have the same `ini` format, but `netdata.conf` is considered a non-sensitive file, while `stream.conf` contains API keys, IPs and other sensitive information that enable communication between Netdata agents.

+`netdata.conf` and `stream.conf` have the same `ini` format, but `netdata.conf` is considered a non-sensitive file, while `stream.conf` contains API keys, IPs and other sensitive information that enable communication between Netdata Agents.

`stream.conf` has 2 main sections:

diff --git a/docs/observability-centralization-points/metrics-centralization-points/faq.md b/docs/observability-centralization-points/metrics-centralization-points/faq.md

index 1ce0d8534b26c2..917b8088a4c9d1 100644

--- a/docs/observability-centralization-points/metrics-centralization-points/faq.md

+++ b/docs/observability-centralization-points/metrics-centralization-points/faq.md

@@ -49,9 +49,9 @@ Check [Restoring a Netdata Parent after maintenance](/docs/observability-central

When there are multiple data sources for the same node, Netdata Cloud follows this strategy:

-1. Netdata Cloud prefers Netdata agents having `live` data.

-2. For time-series queries, when multiple Netdata agents have the retention required to answer the query, Netdata Cloud prefers the one that is further away from production systems.

-3. For Functions, Netdata Cloud prefers Netdata agents that are closer to the production systems.

+1. Netdata Cloud prefers Netdata Agents having `live` data.

+2. For time-series queries, when multiple Netdata Agents have the retention required to answer the query, Netdata Cloud prefers the one that is further away from production systems.

+3. For Functions, Netdata Cloud prefers Netdata Agents that are closer to the production systems.
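The three rules above can be read as a small selection function. The Python sketch below is one interpretation, not Netdata Cloud's code: the `Source` fields, the hypothetical `hops_from_production` distance (0 for the node itself, 1 for its Parent, and so on), and the choice to apply the `live` preference before the other two rules are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Source:
    """A hypothetical Netdata Agent holding data for the same node."""

    name: str
    live: bool                 # currently receiving data for the node
    has_retention: bool        # retention covers the queried time range
    hops_from_production: int  # 0 = the node itself, 1 = its Parent, ...


def pick_source(sources: List[Source], query_kind: str) -> Optional[Source]:
    # Rule 1: prefer Agents with live data, if there are any.
    candidates = [s for s in sources if s.live] or sources

    if query_kind == "timeseries":
        # Rule 2: among Agents with enough retention, prefer the one
        # furthest away from the production systems.
        eligible = [s for s in candidates if s.has_retention] or candidates
        return max(eligible, key=lambda s: s.hops_from_production, default=None)

    if query_kind == "function":
        # Rule 3: prefer the Agent closest to the production systems.
        return min(candidates, key=lambda s: s.hops_from_production, default=None)

    raise ValueError(f"unknown query kind: {query_kind!r}")


if __name__ == "__main__":
    child = Source("child", live=True, has_retention=True, hops_from_production=0)
    parent = Source("parent", live=True, has_retention=True, hops_from_production=1)
    grandparent = Source("grandparent", live=False, has_retention=True, hops_from_production=2)
    nodes = [child, parent, grandparent]
    print(pick_source(nodes, "timeseries").name)  # prints parent
    print(pick_source(nodes, "function").name)    # prints child
```

In this reading, a stale Grandparent loses to a live Parent for time-series queries, even though it is further from production, because the `live` preference is applied first.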

## Is there a way to balance child nodes to the parent nodes of a cluster?

@@ -69,7 +69,7 @@ To set the ephemeral flag on a node, edit its netdata.conf and in the `[global]`

A parent node tracks connections and disconnections. When a node is marked as ephemeral and stops connecting for more than 24 hours, the parent will delete it from its memory and local administration, and tell Cloud that it is neither live nor stale. Data for the node can no longer be accessed, but if the node connects again later, the node will be "revived", and previous data becomes available again.

-A node can be forced into this "forgotten" state with the Netdata CLI tool on the parent the node is connected to (if still connected) or one of the parent agents it was previously connected to. The state will be propagated _upwards_ and _sideways_ in case of an HA setup.

+A node can be forced into this "forgotten" state with the Netdata CLI tool on the parent the node is connected to (if still connected) or one of the parent Agents it was previously connected to. The state will be propagated _upwards_ and _sideways_ in case of an HA setup.

```

netdatacli remove-stale-node

diff --git a/docs/observability-centralization-points/metrics-centralization-points/sizing-netdata-parents.md b/docs/observability-centralization-points/metrics-centralization-points/sizing-netdata-parents.md

index edfbabe934b3d0..677d244a7a6811 100644

--- a/docs/observability-centralization-points/metrics-centralization-points/sizing-netdata-parents.md

+++ b/docs/observability-centralization-points/metrics-centralization-points/sizing-netdata-parents.md

@@ -1,3 +1,3 @@

# Sizing Netdata Parents

-To estimate CPU, RAM, and disk requirements for your Netdata Parents, check [sizing Netdata agents](/docs/netdata-agent/sizing-netdata-agents/README.md).

+To estimate CPU, RAM, and disk requirements for your Netdata Parents, check [sizing Netdata Agents](/docs/netdata-agent/sizing-netdata-agents/README.md).

diff --git a/docs/security-and-privacy-design/README.md b/docs/security-and-privacy-design/README.md

index da484bc0e19c12..5333087a9fcd3f 100644

--- a/docs/security-and-privacy-design/README.md

+++ b/docs/security-and-privacy-design/README.md

@@ -28,7 +28,7 @@ Netdata is committed to adhering to the best practices laid out by the Open Sour

Currently, the Netdata Agent follows the OSSF best practices at the passing level. Feel free to audit our approach to

the [OSSF guidelines](https://bestpractices.coreinfrastructure.org/en/projects/2231).

-Netdata Cloud boasts of comprehensive end-to-end automated testing, encompassing the UI, back-end, and agents, where

+Netdata Cloud boasts comprehensive end-to-end automated testing, encompassing the UI, back-end, and Agents, where

involved. In addition, the Netdata Agent uses an array of third-party services for static code analysis,

security analysis, and CI/CD integrations to ensure code quality on a per pull request basis. Tools like GitHub's

CodeQL, GitHub's Dependabot, our own unit tests, various types of linters,

@@ -100,7 +100,7 @@ laws, including GDPR and CCPA.

Netdata ensures user privacy rights as mandated by the GDPR and CCPA. This includes the right to access, correct, and

delete personal data. These functions are all available online via the Netdata Cloud User Interface (UI). In case a user

-wants to remove all personal information (email and activities), they can delete their cloud account by logging

+wants to remove all personal information (email and activities), they can delete their Netdata Cloud account by logging

into and accessing their profile, at the bottom left of the screen.

### Regular Review and Updates

@@ -124,10 +124,10 @@ Netdata also collects anonymous telemetry events, which provide information on t

and performance metrics. This data is used to understand how the software is being used and to identify areas for

improvement.

-The purpose of collecting these statistics and telemetry data is to guide the development of the open-source agent,

+The purpose of collecting these statistics and telemetry data is to guide the development of the open-source Agent,

focusing on areas that are most beneficial to users.

-Users have the option to opt out of this data collection during the installation of the agent, or at any time by

+Users have the option to opt out of this data collection during the installation of the Agent, or at any time by

removing a specific file from their system.

Netdata retains this data indefinitely in order to track changes and trends within the community over time.

diff --git a/docs/security-and-privacy-design/netdata-agent-security.md b/docs/security-and-privacy-design/netdata-agent-security.md

index d2e2e1429cbec2..6d3acf76c2ab68 100644

--- a/docs/security-and-privacy-design/netdata-agent-security.md

+++ b/docs/security-and-privacy-design/netdata-agent-security.md

@@ -27,25 +27,25 @@ neither do most of the data collecting plugins.

Data collection plugins communicate with the main Netdata process via ephemeral, in-memory pipes that are inaccessible

to any other process.

-Streaming of metrics between Netdata agents requires an API key and can also be encrypted with TLS if the user

+Streaming of metrics between Netdata Agents requires an API key and can also be encrypted with TLS if the user

configures it.

-The Netdata agent's web API can also use TLS if configured.

+The Netdata Agent's web API can also use TLS if configured.

-When Netdata agents are claimed to Netdata Cloud, the communication happens via MQTT over Web Sockets over TLS, and

+When Netdata Agents are claimed to Netdata Cloud, the communication happens via MQTT over WebSockets over TLS, and

public/private keys are used for authorizing access. These keys are exchanged during the claiming process (usually

-during the provisioning of each agent).

+during the provisioning of each Agent).

## Authentication

-Direct user access to the agent is not authenticated, considering that users should either use Netdata Cloud, or they

-are already on the same LAN, or they have configured proper firewall policies. However, Netdata agents can be hidden

+Direct user access to the Agent is not authenticated, considering that users should either use Netdata Cloud, or they

+are already on the same LAN, or they have configured proper firewall policies. However, Netdata Agents can be hidden

behind an authenticating web proxy if required.

-For other Netdata agents streaming metrics to an agent, authentication via API keys is required and TLS can be used if

+For other Netdata Agents streaming metrics to an Agent, authentication via API keys is required and TLS can be used if

configured.

-For Netdata Cloud accessing Netdata agents, public/private key cryptography is used and TLS is mandatory.

+For Netdata Cloud accessing Netdata Agents, public/private key cryptography is used and TLS is mandatory.

## Security Vulnerability Response

@@ -57,12 +57,11 @@ information can be found [here](https://github.com/netdata/netdata/security/poli

## Protection Against Common Security Threats

-The Netdata agent is resilient against common security threats such as DDoS attacks and SQL injections. For DDoS,

-Netdata agent uses a fixed number of threads for processing requests, providing a cap on the resources that can be

+The Netdata Agent is resilient against common security threats such as DDoS attacks and SQL injections. For DDoS, the Agent uses a fixed number of threads for processing requests, providing a cap on the resources that can be

consumed. It also automatically manages its memory to prevent over-utilization. SQL injections are prevented as nothing

from the UI is passed back to the data collection plugins accessing databases.

-Additionally, the Netdata agent is running as a normal, unprivileged, operating system user (a few data collections

+Additionally, the Agent runs as a normal, unprivileged operating system user (a few data collectors

require escalated privileges, but these privileges are isolated to just them), every netdata process runs by default

with a nice priority to protect production applications in case the system is starving for CPU resources, and Netdata

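-agents are configured by default to be the first processes to be killed by the operating system in case the operating

+Agents are configured by default to be the first processes to be killed by the operating system in case the operating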

@@ -70,6 +69,4 @@ system starves for memory resources (OS-OOM - Operating System Out Of Memory eve

## User Customizable Security Settings

-Netdata provides users with the flexibility to customize agent security settings. Users can configure TLS across the

-system, and the agent provides extensive access control lists on all its interfaces to limit access to its endpoints

-based on IP. Additionally, users can configure the CPU and Memory priority of Netdata agents.

+Netdata provides users with the flexibility to customize the Agent's security settings. Users can configure TLS across the system, and the Agent provides extensive access control lists on all its interfaces to limit access to its endpoints by IP address. Additionally, users can configure the CPU and memory priority of Netdata Agents.

diff --git a/docs/security-and-privacy-design/netdata-cloud-security.md b/docs/security-and-privacy-design/netdata-cloud-security.md

index 1df02286075c48..13270e7ec90fce 100644

--- a/docs/security-and-privacy-design/netdata-cloud-security.md

+++ b/docs/security-and-privacy-design/netdata-cloud-security.md

@@ -4,7 +4,7 @@ Netdata Cloud is designed with a security-first approach to ensure the highest l

using Netdata Cloud in environments that require compliance with standards like PCI DSS, SOC 2, or HIPAA, users can be

confident that all collected data is stored within their infrastructure. Data viewed on dashboards and alert

notifications travel over Netdata Cloud, but are not stored—instead, they're transformed in transit, aggregated from

-multiple agents and parents (centralization points), to appear as one data source in the user's browser.

+multiple Agents and Parents (centralization points), to appear as one data source in the user's browser.

## User Identification and Authorization

@@ -41,10 +41,7 @@ Netdata Cloud does not store user credentials.

## Security Features and Response

-Netdata Cloud offers a variety of security features, including infrastructure-level dashboards, centralized alerts

-notifications, auditing logs, and role-based access to different segments of the infrastructure. The cloud service

-employs several protection mechanisms against DDoS attacks, such as rate-limiting and automated blacklisting. It also

-uses static code analyzers to prevent other types of attacks.

+Netdata Cloud offers a variety of security features, including infrastructure-level dashboards, centralized alert notifications, auditing logs, and role-based access to different segments of the infrastructure. It employs several protection mechanisms against DDoS attacks, such as rate-limiting and automated blacklisting. It also uses static code analyzers to prevent other types of attacks.

In the event of potential security vulnerabilities or incidents, Netdata Cloud follows the same process as the Netdata

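-agent. Every report is acknowledged and analyzed by the Netdata team within three working days, and the team keeps the

+Agent. Every report is acknowledged and analyzed by the Netdata team within three working days, and the team keeps the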

@@ -59,8 +56,7 @@ security tools, etc.) on a per contract basis.

## Deleting Personal Data

-Users who wish to remove all personal data (including email and activities) can delete their cloud account by logging

-into Netdata Cloud and accessing their profile.

+Users who wish to remove all personal data (including email and activities) can delete their account by logging into Netdata Cloud and accessing their profile.

## User Privacy and Data Protection

diff --git a/docs/top-monitoring-netdata-functions.md b/docs/top-monitoring-netdata-functions.md

index a9caea781337e0..3d461f56eda42c 100644

--- a/docs/top-monitoring-netdata-functions.md

+++ b/docs/top-monitoring-netdata-functions.md

@@ -13,7 +13,7 @@ For more details please check out documentation on how we use our internal colle

The following is required to be able to run Functions from Netdata Cloud.

-- At least one of the nodes claimed to your Space should be on a Netdata agent version higher than `v1.37.1`

+- At least one of the nodes claimed to your Space should be on a Netdata Agent version higher than `v1.37.1`

- Ensure that the node has the collector that exposes the function you want enabled

## What functions are currently available?

diff --git a/integrations/README.md b/integrations/README.md

index 377c1a3061d8ac..3ab22ec4df1519 100644

--- a/integrations/README.md

+++ b/integrations/README.md

@@ -10,7 +10,7 @@ To generate a copy of `integrations.js` locally, you will need:

- A local checkout of https://github.com/netdata/netdata

- A local checkout of https://github.com/netdata/go.d.plugin. The script

expects this to be checked out in a directory called `go.d.plugin`

- in the root directory of the agent repo, though a symlink with that

+ in the root directory of the Agent repo, though a symlink with that

name pointing at the actual location of the repo will work as well.

The first two parts can be easily covered in a Linux environment, such

@@ -21,6 +21,6 @@ as a VM or Docker container:

- On Fedora or RHEL (EPEL is required on RHEL systems): `dnf install python3-jsonschema python3-referencing python3-jinja2 python3-ruamel-yaml`

Once the environment is set up, simply run

-`integrations/gen_integrations.py` from the agent repo. Note that the

+`integrations/gen_integrations.py` from the Agent repo. Note that the

-script must be run _from this specific location_, as it uses it’s own

+script must be run _from this specific location_, as it uses its own

path to figure out where all the files it needs are.

diff --git a/integrations/integrations.js b/integrations/integrations.js

index 95904775a1292d..46041aa145c186 100644

--- a/integrations/integrations.js

+++ b/integrations/integrations.js

@@ -3193,7 +3193,7 @@ export const integrations = [

"most_popular": true

},

"overview": "# Apache\n\nPlugin: go.d.plugin\nModule: apache\n\n## Overview\n\nThis collector monitors the activity and performance of Apache servers, and collects metrics such as the number of connections, workers, requests and more.\n\n\nIt sends HTTP requests to the Apache location [server-status](https://httpd.apache.org/docs/2.4/mod/mod_status.html), \nwhich is a built-in location that provides metrics about the Apache server.\n\n\nThis collector is supported on all platforms.\n\nThis collector supports collecting metrics from multiple instances of this integration, including remote instances.\n\n\n### Default Behavior\n\n#### Auto-Detection\n\nBy default, it detects Apache instances running on localhost that are listening on port 80.\nOn startup, it tries to collect metrics from:\n\n- http://localhost/server-status?auto\n- http://127.0.0.1/server-status?auto\n\n\n#### Limits\n\nThe default configuration for this integration does not impose any limits on data collection.\n\n#### Performance Impact\n\nThe default configuration for this integration is not expected to impose a significant performance impact on the system.\n",

- "setup": "## Setup\n\n### Prerequisites\n\n#### Enable Apache status support\n\n- Enable and configure [status_module](https://httpd.apache.org/docs/2.4/mod/mod_status.html).\n- Ensure that you have [ExtendedStatus](https://httpd.apache.org/docs/2.4/mod/mod_status.html#troubleshoot) set on (enabled by default since Apache v2.3.6).\n\n\n\n### Configuration\n\n#### File\n\nThe configuration file name for this integration is `go.d/apache.conf`.\n\n\nYou can edit the configuration file using the [`edit-config`](https://github.com/netdata/netdata/blob/master/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config) script from the\nNetdata [config directory](https://github.com/netdata/netdata/blob/master/docs/netdata-agent/configuration/README.md#the-netdata-config-directory).\n\n```bash\ncd /etc/netdata 2>/dev/null || cd /opt/netdata/etc/netdata\nsudo ./edit-config go.d/apache.conf\n```\n#### Options\n\nThe following options can be defined globally: update_every, autodetection_retry.\n\n\n{% details open=true summary=\"Config options\" %}\n| Name | Description | Default | Required |\n|:----|:-----------|:-------|:--------:|\n| update_every | Data collection frequency. | 1 | no |\n| autodetection_retry | Recheck interval in seconds. Zero means no recheck will be scheduled. | 0 | no |\n| url | Server URL. | http://127.0.0.1/server-status?auto | yes |\n| timeout | HTTP request timeout. | 1 | no |\n| username | Username for basic HTTP authentication. | | no |\n| password | Password for basic HTTP authentication. | | no |\n| proxy_url | Proxy URL. | | no |\n| proxy_username | Username for proxy basic HTTP authentication. | | no |\n| proxy_password | Password for proxy basic HTTP authentication. | | no |\n| method | HTTP request method. | GET | no |\n| body | HTTP request body. | | no |\n| headers | HTTP request headers. | | no |\n| not_follow_redirects | Redirect handling policy. Controls whether the client follows redirects. 
| no | no |\n| tls_skip_verify | Server certificate chain and hostname validation policy. Controls whether the client performs this check. | no | no |\n| tls_ca | Certification authority that the client uses when verifying the server's certificates. | | no |\n| tls_cert | Client TLS certificate. | | no |\n| tls_key | Client TLS key. | | no |\n\n{% /details %}\n#### Examples\n\n##### Basic\n\nA basic example configuration.\n\n```yaml\njobs:\n - name: local\n url: http://127.0.0.1/server-status?auto\n\n```\n##### HTTP authentication\n\nBasic HTTP authentication.\n\n{% details open=true summary=\"Config\" %}\n```yaml\njobs:\n - name: local\n url: http://127.0.0.1/server-status?auto\n username: username\n password: password\n\n```\n{% /details %}\n##### HTTPS with self-signed certificate\n\nApache with enabled HTTPS and self-signed certificate.\n\n{% details open=true summary=\"Config\" %}\n```yaml\njobs:\n - name: local\n url: https://127.0.0.1/server-status?auto\n tls_skip_verify: yes\n\n```\n{% /details %}\n##### Multi-instance\n\n> **Note**: When you define multiple jobs, their names must be unique.\n\nCollecting metrics from local and remote instances.\n\n\n{% details open=true summary=\"Config\" %}\n```yaml\njobs:\n - name: local\n url: http://127.0.0.1/server-status?auto\n\n - name: remote\n url: http://192.0.2.1/server-status?auto\n\n```\n{% /details %}\n",