Playing minesweeper with neural networks.

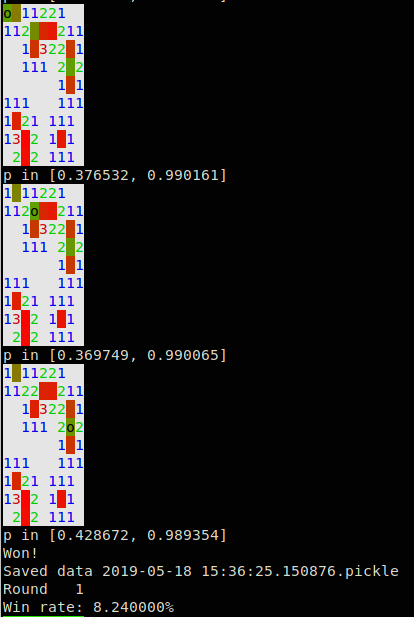

Given an input board the AI player predicts the probability of finding a mine at each open location. It selects the lowest probability location for its next guess. This process repeats until until the game is over.

The first move is always safe in minesweeper so the AI chooses at random to remove bias derived from player initialisation.

This is a suboptimal strategy since a higher risk guess may reveal more information, improving the chance of winning that game.

The optimal approach is to minimise the product of turn probabilities. For example, an algorithm that finishes the game in 10 moves, each with 1% failure, will win 0.99^10 = 90.4% of games while an algorithm that finishes in 100 moves with 0.5% failure rate will only win 0.995^100 = 60.6% of games.

In practice however the strategy used appears to work reasonably well.

A reinforcement learning approach could learn to make this tradeoff optimally. For example, weighting the backpropagation signal according by inverse game length.

Uses a neural net (NN) to predict where mines are going to be. The AI player chooses the lowest probability location for its next guess.

The NN consists of 4 fully connected layers whose sizes are dynamic depending on the game dimensions. A logistic loss function is used.

This variant of minesweeper can be any size. The first guess is guarenteed to be safe by moving a mine if the first guess is unlucky.

Plays trial games to generate training data.

Trains on all saved training data.

Wins >90% of games (<1 min of training).

Wins >80% of games (10 mins of training).

Wins 80% of games (after several days training on CPU).