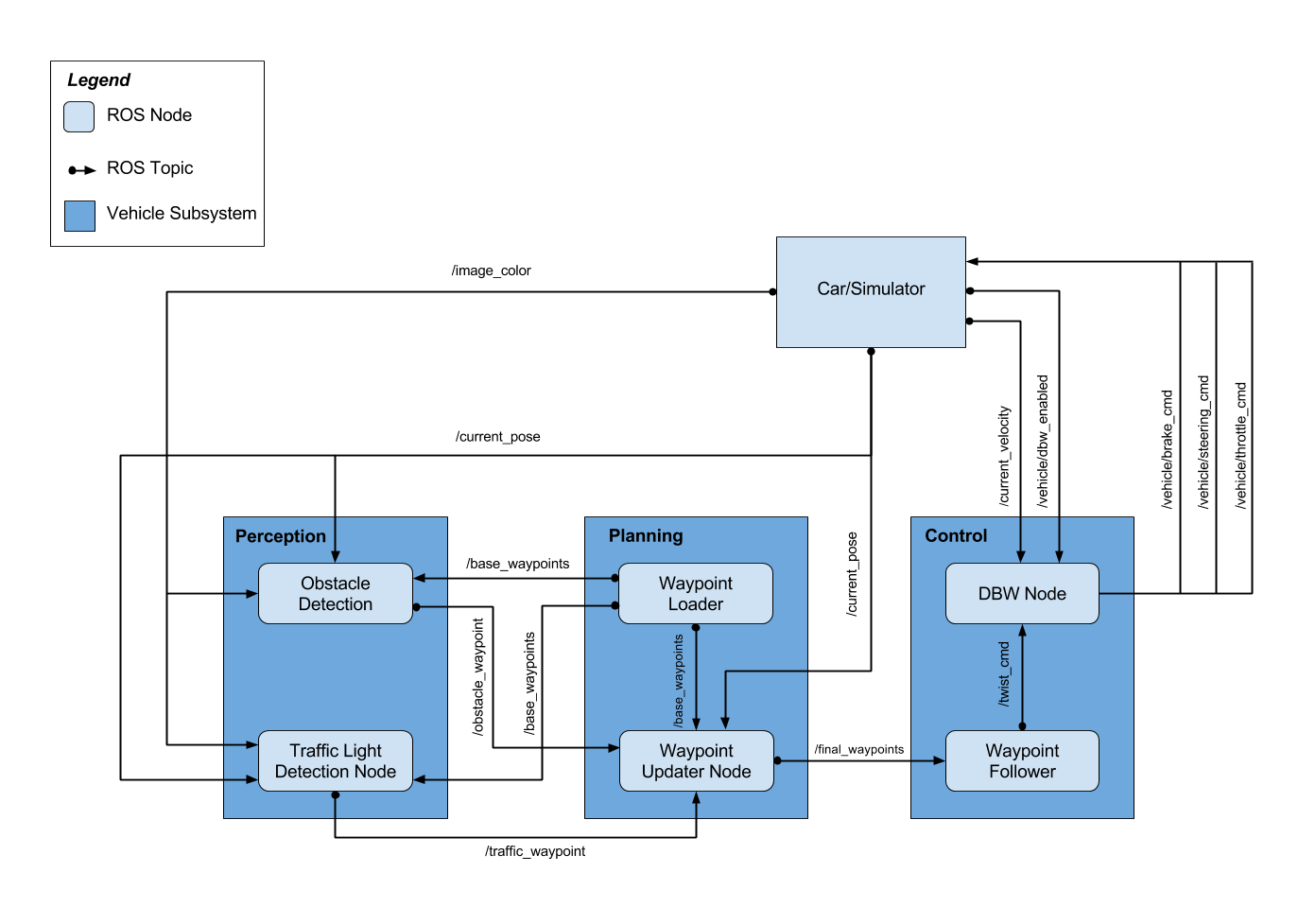

This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

| Name | Responsibility | |

|---|---|---|

| Asad Zia | [email protected] | Team Lead |

| Ferran Garriga | [email protected] | Drive By Wire (Python) |

| Leon Li | [email protected] | Drive By Wire (C++) |

| Mike Allen | [email protected] | Waypoint Updater, Traffic Light (ROS) |

| Shahzad Raza | [email protected] | Traffic Light Detection (TensorFlow) |

First step was to implement a basic waypoint updator.

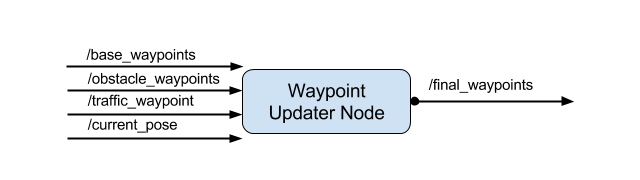

/ros/src/waypoint_detector This package contains the waypoint updater node: waypoint_updater.py. The purpose of this node is to update the target velocity property of each waypoint based on traffic light and obstacle detection data. This node will subscribe to the /base_waypoints, /current_pose, /obstacle_waypoint, and /traffic_waypoint topics, and publish a list of waypoints ahead of the car with target velocities to the /final_waypoints topic.

Steps

The steps that we have followed in order to implement this module are the following:

- Implement a loop in order to publish at 50hz our results

- Calculate the closest waypoint from the car’s current location among the full list of waypoints injected to the code by the subscription

- Calculate the next waypoint taking the direction’s car is facing into account from the closest waypoint and map’s coordinates

- We will return a sublist of injected waypoints starting from the next calculated waypoint

Implementation description

We have implemented the solution in the file waypoint_updater.py, the major blocks implemented are the following:

Main code loop

We have implemented a main loop that cycles at 50hz and continuously publishes the calculated waypoints while we have some data available.

def loop(self):

rate = rospy.Rate(0.5)

while not rospy.is_shutdown():

if self.current_pose is None or self.base_waypoints is None:

continue

self.publish()

rate.sleep()

Publish

From the udacity project planning project, we will get the next_waypoint that’s closets to the direction of the car while base_waypoints is feeded into the code through a subscription rospy.Subscriber('/base_waypoints', Lane, self.waypoints_cb)

The last step would be to publish the calculated waypoints to our publisher self.final_waypoints_pub = rospy.Publisher('final_waypoints', Lane, queue_size=1)

def publish(self):

"""publish Lane message to /final_waypoints topic"""

next_waypoint = self.next_waypoint()

waypoints = self.base_waypoints.waypoints

# shift waypoint indexes to start on next_waypoint so it's easy to grab LOOKAHEAD_WPS

waypoints = waypoints[next_waypoint:] + waypoints[:next_waypoint]

waypoints = waypoints[:LOOKAHEAD_WPS]

lane = Lane()

lane.waypoints = waypoints

self.final_waypoints_pub.publish(lane)

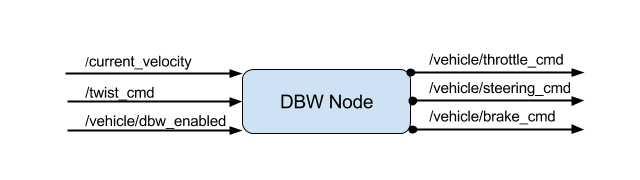

/ros/src/twist_controller Carla is equipped with a drive-by-wire (dbw) system, meaning the throttle, brake, and steering have electronic control. This package contains the files that are responsible for control of the vehicle: the node dbw_node.py and the file twist_controller.py, along with a pid and lowpass filter that you can use in your implementation. The dbw_node subscribes to the /current_velocity topic along with the /twist_cmd topic to receive target linear and angular velocities. Additionally, this node will subscribe to /vehicle/dbw_enabled, which indicates if the car is under dbw or driver control. This node will publish throttle, brake, and steering commands to the /vehicle/throttle_cmd, /vehicle/brake_cmd, and /vehicle/steering_cmd topics.

Implementation description We implemented PID control with dynamic_reconfigure plugin first.

For PID control, use velocity from /twist_cmd and /current_velocity as velocity CTE, use this value to handle speed_pid controller. we can use dynamic_reconfigure to adjust P,I,D’s value to get a good find tuned parameters.

usage:

$ rosrun rqt_reconfigure rqt_reconfigure

to dynamic config the parameters of P,I,D. Following is screen snapshot.

also, we used acceleration pid controller to control the throttle and brake.

Above is the PID testing screen snapshot, we need to test and tune a good PID value to make car run smoothly. Finally, we tuned the values as below:

steer_kp = 3.0;

------

speed_kp = 2.0;

speed_ki = 1.0;

speed_kd = 0;

------

accel_kp = 0.4;

accel_ki = 0.2;

accel_kd = 0.2;

We also use lowpassfilter to filter the fuel variable, we assume it is 50% full of gas in simulator environment, but in real environment, we get the value from /fuel_level_report topic.

The DBW node subscribe the /current_velocity topic and /twist_cmd topic, we use the velocity from these two topic to do CTE estimation.

We get velocity CTE from:

double vel_cte = twist_cmd_.twist.linear.x - cur_velocity_.twist.linear.x;

this drive our PID module to move. there are also some condition check for twist_cmd velocity message, if too small, we reset PID error, in order to avoid accumulated error.

Then we use PID module to drive the vehicle’s throttle, brake. we also downloaded the udacity’s bagfile to do testing. snapshot as below:

After implementation the PID code, we found that the udacity configuration was missing paramters and config neededed for MPC. so we still focus on PID tuning and implementation.

We found the materials about MKZ’s control theory, so we did the code as what it said. Following is the principle of control of MKZ:

The CTE come from speed command and speed measurement, according to related /twist_cmd and /current_velocity, we get cte from here, and then we use uses proportional control with gain Kp, this produced acceleration command, it is used to multiply m and r, to produce torque, which is T=a*``*m**``r, and combined losspass filter from speed measurement, we produced acceleration pid input, use PI controller to produce throttle pedal output.

During the whole process, we actually used all P,I,D method in it. The detailed info can be checked in code.

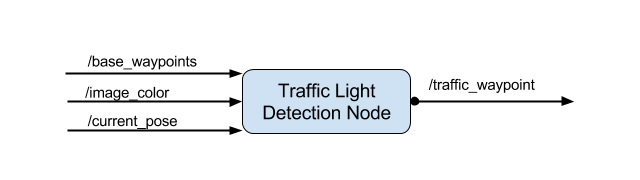

/ros/src/tl_detector This package contains the traffic light detection node: tl_detector.py. This node takes in data from the /image_color, /current_pose, and /base_waypoints topics and publishes the locations to stop for red traffic lights to the /traffic_waypoint topic.

The /current_pose topic provides the vehicle's current position, and /base_waypoints provides a complete list of waypoints the car will be following.

You will build both a traffic light detection node and a traffic light classification node. Traffic light detection should take place within tl_detector.py, whereas traffic light classification should take place within ../tl_detector/light_classification_model/tl_classfier.py.

Steps

-

Get closest light waypoint leveraging light data from the

/vehicle/traffic_lightsas well as the vehicles current location. For closest waypoint, we converted some waypoint_updater.py code into a service so we could leverage the existing closest waypoint code in this module as well.msg = NextWaypointRequest(pose=pose, waypoints=waypoints) response = self.next_waypoint_proxy(msg)

-

Before classifying the image for light state, first check to see if the image is visible using a simple pinhole camera model to project the light waypoint onto the camera image. Before we can do this however, we first need to convert the waypoint coordinates to coordinates with respect to the car or camera. Conveniently, ROS provides a transformation module to perform these transformations.

transformed = self.transform_point(pose.header, point)

Once the light waypoint coordinates are transformed, we can then project onto the camera image. If the projected point lies within bounding box of the image, then we say it is visible.

# create camera info

camera = PinholeCameraModel()

camera.fromCameraInfo(self.camera_info)

# project point onto image

u, v = camera.project3dToPixel((x, y, z))

# setup bounding box

cx = camera.cx()

cy = camera.cy()

width = self.camera_info.width

height = self.camera_info.height

top = cy + height / 2

bottom = cy - height / 2

right = cx + width / 2

left = cx - width / 2

# light is visible if projected within the bounding box of the 2d image

return left <= u and u <= right and bottom <= v and v <= top

- If light is visible we send the current camera image from

/image_colortopic, into the classifier by callingself.light_classifier.get_classification(cv_image), which leverages a tensorflow model for classification. - The Tensorflow classification happens within

tl_classifier.py. Upon initialization of the module, we create a Tensorflow session and populate the default graph with a pre-trained protobuf file that contains the network definition and weights. - When

get_classificationis called, we pass in the given image to our Tensorflow model and request classification scores, labels, and bounding boxes. - From there, we keep the process fairly simple. The classifier returns multiple bounding boxes, scores, and labels, but we just choose the label with the highest score that exceeds a threshold. If no score exceeds the threshold, we return

TrafficLight.UNKNOWN - The Tensorflow model uses slightly different class labels than those found in the Styx messages, so before returning to

tl_detectorwe first map the labels to the correct Styx counterpart.

classifierToStyxLabelMap = {

1: TrafficLight.GREEN,

2: TrafficLight.RED,

3: TrafficLight.YELLOW,

4: TrafficLight.UNKNOWN

}

classfierLabel = detection_classes[0][0]

return classifierToStyxLabelMap.get(classfierLabel)

- Once we have the light state given by the classifier, we can finally publish the result to the

/traffic_waypointtopic to allow thewaypoint_updaterto respond to traffic lights.

TL Classification Implementation description

Our implementation of the Traffic Light classification node uses TensorFlow’s Object Detection API. Since Carla’s environment uses Tensorflow v.1.3.0, we used a compatible version of the Object Detection API to evaluate various pre-trained models on the traffic light detection & classification task.

Our classification model is based on SSD Inception v2 trained on the MS COCO dataset available as part of the Tensorflow Model Zoo. This model was first fine-tuned on the Bosch small traffic light dataset. Two versions of the model were then created, one which was fine-tuned on simulator training images and the other on images from the Udacity test site.

The training images were collected from the simulator and Slack. The training data available on slack was already annotated, however, the simulator training data required annotation. Faster R-CNN pre-trained on COCO was used to annotate the simulator images and the labels of the detected traffic lights were replaced with the color of the traffic light prior to saving the training data. The available training images were heavily biased towards red traffic lights so random translations were applied to augment the green and yellow traffic light images to balance the classes.

The sections below provide details on how to use the Object Detection API to fine-tune a trained model on a custom dataset.

Environment Preparation for TF Object Detection API

- Get the Tensorflow Object Detection API compatible with TF v1.3.0 by cloning the repository here. Please note the specific commit version being used.

- Add the following directories to your PATH variable. Make sure this is done outside of any virtual environment so that the environment variables are inherited.

models/models/research/models/research/slim/** Linux users: Append set these variables in your .bashrc file so this doesn’t need to be done for every shell. Windows users: Add these directories to your system environment variables- Download the v3.4.0 of the Google protobuf binary for your OS from here.

- Extract the binary to

models/research/and execute: - Linux users:

protoc --python_out=. object_detection/protos/*.proto - Windows users:

protoc--``python_out=. object_detection/protos/*.proto - Verify that an equivalent python script has been created for each .proto file in

models/research/object_detection/protos/ - Setup the conda environment that contains versions of Tensorflow compatible with Carla by using the environment file here. The environment name is

capstone. Note: This environment uses Python 3.5.2 for development whereas the environment on Carla uses Python 2.7.12. - Activate the

capstoneenvironment and execute the following frommodels/research: python setup.py buildpython setup.py install- Create a

configs/folder inmodels/research/object_detection/to store custom model training configuration files. - Create a

custom_models/folder withtrained_modelsandfrozen_modelssubfolders to hold models trained/fine-tuned on custom dataset. - Download a pre-trained model from the Tensorflow Model Zoo compatible with your version of the Object Detection API. The COCO pre-trained models are a good starting point since they are already trained to detect a traffic light.

- Extract the model into

models/research/object_detection/. The folder will contain a pre-trained model checkpoint, a frozen inference graph and the model graph. - The downloaded model can be evaluated on test image out of the box by using the

object_detection_tutorialjupyter notebook included inmodels/research/object_detection/and the frozen inference graph included with the model. Additional test images can be put into theobject_detection/test_images/folder. Make sure to update the jupyter notebook code block that reads in these images.

Model Training

The TF Object Detection API uses training data in the TFRecord format to train models. In addition, a corresponding label map file needs to be provided that replicates the labels used to create the TFRecord file. Control of the training parameters for various types of models is done via a model configuration file. Samples of the configuration files for various models are included in object_detection/samples/configs/

- Create a TFRecord file for a dataset by using the conversion scripts here. Place this dataset in a new folder inside

object_detection/custom_models/trained_models/. This folder will contain the trained checkpoints for a model trained on the created TFRecord. - Update the configuration file for the model to specify the number of classes, image dimensions, training data path, label map file path, learning rate, steps (epochs), etc. Sample configuration files for the Udacity dataset can be found here. Put the configuration file in your

object_detection/configs/folder. - Create a label map file for the dataset and place it in

object_detection/data/. Label maps for the Udacity and Bosch datasets can be found here. - Navigate to

models/research/object_detectionand train the model by executing:

python train.py --logtostderr --train_dir=custom_models/trained_models/<model_folder>/ --pipeline_config_path=configs/<model_config_file>.config

- Checkpoints will be created every 10 minutes in the folder containing the training dataset which can later be exported for inference.

Exporting a model

Once a trained checkpoint is available, a frozen graph can be exported for inference using the tools provided as part of the Object Detection API. To export a model, execute the following from object_detection/:

python export_inference_graph.py --input_type image_tensor --pipeline_config_path configs/<model_config_file>.config --trained_checkpoint_prefix custom_models/trained_models/<model_folder>/model.ckpt-XXX --output_directory custom_models/frozen_models/<frozen_model_folder>

Where XXX needs to be replaced by the step number at which the checkpoint was saved.

Note: It is possible to export a frozen inference graph in a different version of TensorFlow than in which the model was originally trained. This can be used to export inference graphs from trained models that were added to model zoo in later releases of the API and used in older versions as long as there are no incompatibility issue.

Implementation description

The full waypoint updater starts with the partial waypoint updater, but now needs to be responsible for decelerating in response to red lights. This module does not need to calculate jerk minimized trajectories (JMT), because the drive by wire (dbw) module already smoothly accelerates between waypoints. For this reason, we simply need to give the car enough time to reach zero velocity through updating target velocities on the published /final_waypoints. Conversely, we do not need to give the car warning on green lights. We can simply send full speed base_waypoints.

Steps

- Receive red light information from the

/traffic_waypoint/topic.

rospy.Subscriber('/traffic_waypoint', Int32, self.traffic_cb)

- If red light, then send waypoints with velocities decelerated until reaching 0 at the given stopping waypoint. We use a simple math square root function (as seen on walkthrough) to reduce the cars velocity as it gets closer to the given stopping waypoint.

stop_wp_idx = self.traffic_waypoint.data

stop_idx = max(stop_wp_idx - closest_idx - 2, 0) # Two waypoints from line so front of car stops at line

dist = self.distance(waypoints, i, stop_idx)

vel = math.sqrt(2 * self.max_decel * dist)

if vel < 1.:

vel = 0.

p.twist.twist.linear.x = min(vel, wp.twist.twist.linear.x)

Please use one of the two installation options, either native or docker installation.

-

Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.

-

If using a Virtual Machine to install Ubuntu, use the following configuration as minimum:

- 2 CPU

- 2 GB system memory

- 25 GB of free hard drive space

The Udacity provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using this.

-

Follow these instructions to install ROS

- ROS Kinetic if you have Ubuntu 16.04.

- ROS Indigo if you have Ubuntu 14.04.

-

- Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)

-

Download the Udacity Simulator.

Build the docker container

docker build . -t capstoneRun the docker file

docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstoneTo set up port forwarding, please refer to the instructions from term 2

- Clone the project repository

git clone https://github.com/udacity/CarND-Capstone.git- Install python dependencies

cd CarND-Capstone

pip install -r requirements.txt- Make and run styx

cd ros

catkin_make

source devel/setup.sh

roslaunch launch/styx.launch- Run the simulator

- Download training bag that was recorded on the Udacity self-driving car.

- Unzip the file

unzip traffic_light_bag_file.zip- Play the bag file

rosbag play -l traffic_light_bag_file/traffic_light_training.bag- Launch your project in site mode

cd CarND-Capstone/ros

roslaunch launch/site.launch- Confirm that traffic light detection works on real life images

Logging and Debugging

- With styx running, open a new terminal tab

docker pswill give you list of running docker processes- run

docker exec -it <container id> bashto enter same docker process that has styx running. - run

source devel/setup.sh - For logging run

tail -f /root/.ros/log/latest/<log> - For info run

rostopic info /<topic>, etc

- After cold boot on

tensorflow-gpusetup, the first two runs are flaky, then it works ok. Logs indicate cuDNN failures. - The

tensorflow-gpusetup has limitation that TL process would crash if quality is set toFantasticor resolution is higher than800x600. - With CPU inference, the TL detection is slower, it take a while before car responds to TL transition. Resolution is not a problem, it works the same even on

2560x1920. However if quality is set ofFantastic, second TL detection fails.