diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 1/Assignment 1 Solution.docx b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 1/Assignment 1 Solution.docx

new file mode 100644

index 0000000..57d1dfe

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 1/Assignment 1 Solution.docx differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 1/~$signment 1.docx b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 1/~$signment 1.docx

new file mode 100644

index 0000000..888be2d

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 1/~$signment 1.docx differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2 Solution.docx b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2 Solution.docx

new file mode 100644

index 0000000..2ded750

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2 Solution.docx differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2 Solution.pdf b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2 Solution.pdf

new file mode 100644

index 0000000..b2d4f51

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2 Solution.pdf differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2.pdf b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2.pdf

new file mode 100644

index 0000000..7e58037

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 2/Assignment 2.pdf differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3 Solution.md b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3 Solution.md

new file mode 100644

index 0000000..d023aa6

--- /dev/null

+++ b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3 Solution.md

@@ -0,0 +1,1063 @@

+

+

Author: Madhurima Rawat

+

+

+

+

Assignment 3

+

+

+

+

Question 1: Compare and contrast Resilient Distributed Datasets (RDDs) and DataFrames in Apache Spark. Provide an example where DataFrames offer performance advantages over RDDs. Also, explain how Spark SQL integrates with DataFrames for querying large datasets.

+

+

+

+

Solution: Comparing Resilient Distributed Datasets (RDDs) and DataFrames in Apache Spark

+

+

+Apache Spark is a powerful distributed computing system widely used for big data processing. Two fundamental abstractions in Spark are **Resilient Distributed Datasets (RDDs)** and **DataFrames**. Understanding their differences, advantages, and integration with Spark SQL is crucial for optimizing Spark applications.

+

+---

+

+### **Table of Contents**

+

+1. [Introduction to RDDs and DataFrames](#introduction)

+2. [Comparison Table: RDDs vs. DataFrames](#comparison)

+3. [Performance Advantages of DataFrames Over RDDs](#performance)

+4. [Spark SQL Integration with DataFrames](#spark-sql)

+5. [Flow Diagrams and Charts](#diagrams)

+6. [Examples](#examples)

+7. [Conclusion](#conclusion)

+

+---

+

+

+

+### **1. Introduction to RDDs and DataFrames**

+

+#### **Resilient Distributed Datasets (RDDs)**

+

+- **Definition:** RDDs are the core abstraction in Spark, representing an immutable distributed collection of objects.

+- **Features:**

+ - **Immutability:** Once created, they cannot be altered.

+ - **Fault Tolerance:** Automatically recovers lost data using lineage information.

+ - **Lazy Evaluation:** Transformations are not executed until an action is called.

+ - **Type-Safe:** Compile-time type checking.

+- **Use Cases:** Low-level transformations, fine-grained control, functional programming paradigms.

+

+#### **DataFrames**

+

+- **Definition:** DataFrames are a higher-level abstraction built on top of RDDs, representing distributed collections of data organized into named columns, similar to tables in a relational database.

+- **Features:**

+ - **Schema:** Enforces a schema, providing structure to data.

+ - **Optimized Execution:** Utilizes Spark's Catalyst optimizer for query optimization.

+ - **Ease of Use:** Provides a higher-level API with support for SQL queries.

+ - **Integration:** Seamlessly integrates with various data sources (e.g., JSON, Parquet, JDBC).

+- **Use Cases:** Structured data processing, SQL-like operations, data analysis.

+

+---

+

+

+

+### **2. Comparison Table: RDDs vs. DataFrames**

+

+| **Aspect** | **RDDs** | **DataFrames** |

+| ------------------------ | -------------------------------------------------------------- | -------------------------------------------------------------- |

+| **Abstraction Level** | Low-level | High-level |

+| **Schema** | No inherent schema; data is unstructured | Enforced schema; data is structured into columns |

+| **API** | Functional transformations (map, filter, etc.) | Declarative API (select, filter, groupBy, etc.) |

+| **Performance** | Generally slower due to lack of optimization | Faster due to Catalyst optimizer and Tungsten execution engine |

+| **Ease of Use** | More complex; requires understanding of functional programming | Simpler; resembles SQL operations |

+| **Type Safety** | Yes (compile-time type checking) | Limited; relies on runtime checks |

+| **Optimization** | Manual optimizations needed | Automatic optimizations via Catalyst |

+| **Integration with SQL** | Limited; requires conversion to DataFrames | Native integration, allowing seamless SQL queries |

+| **Use Cases** | Complex transformations, custom processing | ETL operations, data analysis, reporting |

+

+---

+

+

+

+### **3. Performance Advantages of DataFrames Over RDDs**

+

+DataFrames offer significant performance benefits over RDDs primarily due to Spark's optimization features. Here's an example illustrating these advantages:

+

+#### **Example Scenario: Aggregating User Data**

+

+- **Task:** Calculate the average age of users from a large dataset.

+

+**Using RDDs:**

+

+```scala

+// Scala Example

+val userRDD = sparkContext.textFile("hdfs://path/to/users.csv")

+val userParsed = userRDD.map(line => {

+ val parts = line.split(",")

+ (parts(0), parts(1).toInt) // (UserID, Age)

+})

+val ageStats = userParsed.mapValues(age => (age, 1))

+ .reduceByKey { case ((ageSum, count1), (age, count2)) =>

+ (ageSum + age, count1 + count2)

+ }

+val avgAge = ageStats.mapValues { case (sum, count) => sum.toDouble / count }

+avgAge.collect()

+```

+

+**Using DataFrames:**

+

+```scala

+// Scala Example

+import spark.implicits._

+

+val userDF = spark.read

+ .option("header", "true")

+ .csv("hdfs://path/to/users.csv")

+ .select($"UserID", $"Age".cast("integer"))

+

+val avgAgeDF = userDF.groupBy("UserID")

+ .agg(avg("Age").alias("AverageAge"))

+

+avgAgeDF.show()

+```

+

+**Performance Analysis:**

+

+| **Metric** | **RDDs** | **DataFrames** |

+| ------------------------ | ---------------------------------- | ------------------------------- |

+| **Execution Time** | Higher due to lack of optimization | Lower due to Catalyst optimizer |

+| **Code Complexity** | More verbose and complex | More concise and readable |

+| **Resource Utilization** | Less efficient | More efficient |

+

+**Explanation:**

+

+- **Catalyst Optimizer:** DataFrames leverage Spark's Catalyst optimizer, which performs logical and physical optimizations, such as predicate pushdown and join optimizations.

+- **Tungsten Execution Engine:** Enhanced memory management and code generation improve execution speed.

+- **Serialization:** Optimized serialization formats reduce overhead.

+

+As a result, DataFrames can execute the same aggregation task faster and with less resource consumption compared to RDDs.

+

+---

+

+

+

+### **4. Spark SQL Integration with DataFrames**

+

+**Spark SQL** is a module in Spark for structured data processing. It seamlessly integrates with DataFrames, providing powerful querying capabilities using SQL syntax.

+

+#### **Key Integration Points:**

+

+1. **Creating DataFrames from SQL Queries:**

+

+ - We can write SQL queries directly on DataFrames registered as temporary views.

+

+ ```scala

+ // Register DataFrame as a temporary view

+ userDF.createOrReplaceTempView("users")

+

+ // Execute SQL query

+ val resultDF = spark.sql("SELECT UserID, AVG(Age) as AverageAge FROM users GROUP BY UserID")

+ resultDF.show()

+ ```

+

+2. **Interoperability:**

+ - DataFrames can be created from SQL queries, and SQL queries can be used to manipulate DataFrames.

+3. **Optimization:**

+ - Queries written in SQL are optimized by Catalyst, just like DataFrame operations.

+4. **Integration with BI Tools:**

+ - Spark SQL allows integration with business intelligence tools that support JDBC/ODBC connections.

+

+#### **Benefits:**

+

+- **Familiar Syntax:** Leverage SQL skills for data manipulation.

+- **Enhanced Performance:** Optimizations apply regardless of whether you use SQL or DataFrame APIs.

+- **Unified Data Processing:** Seamlessly switch between SQL and DataFrame operations within the same application.

+

+---

+

+

+

+### **5. Flow Diagrams and Charts**

+

+#### **Flow Diagram: RDDs vs. DataFrames Processing**

+

+1. **RDDs Processing Flow:**

+

+ - **Data Source → RDD Creation → Transformations (map, filter) → Actions (collect, reduce) → Result**

+

+2. **DataFrames Processing Flow:**

+ - **Data Source → DataFrame Creation → Logical Plan → Catalyst Optimizer → Physical Plan → Execution → Result**

+

+**Explanation:**

+

+- The DataFrame processing flow includes additional steps for optimization, leading to enhanced performance.

+

+#### **Chart: Performance Comparison**

+

+A bar chart comparing execution times for identical tasks performed using RDDs and DataFrames, showing DataFrames having shorter execution times.

+

+#### **Tabular Representation: Execution Steps**

+

+| **Step** | **RDDs** | **DataFrames** |

+| ------------------ | -------------------------------------------- | ----------------------------------------------- |

+| **Data Loading** | Text-based parsing | Optimized loading with schema inference |

+| **Transformation** | Manual transformations using functional APIs | Declarative transformations leveraging Catalyst |

+| **Optimization** | Limited; requires manual tuning | Automatic optimization via Catalyst |

+| **Execution** | Direct execution of transformation steps | Optimized execution plan generated by Catalyst |

+

+---

+

+

+

+### **6. Examples**

+

+#### **Example 1: Filtering and Counting**

+

+**Using RDDs:**

+

+```scala

+val logsRDD = sparkContext.textFile("hdfs://path/to/logs")

+val errorLogs = logsRDD.filter(line => line.contains("ERROR"))

+val errorCount = errorLogs.count()

+println(s"Number of ERROR logs: $errorCount")

+```

+

+**Using DataFrames:**

+

+```scala

+import spark.implicits._

+

+val logsDF = spark.read.text("hdfs://path/to/logs").toDF("line")

+val errorCount = logsDF.filter($"line".contains("ERROR")).count()

+println(s"Number of ERROR logs: $errorCount")

+```

+

+**Performance Advantage:**

+DataFrames execute the filtering and counting faster due to optimized query planning and execution.

+

+#### **Example 2: Joining Datasets**

+

+**Using RDDs:**

+

+```scala

+val usersRDD = sparkContext.textFile("hdfs://path/to/users.csv")

+ .map(line => {

+ val parts = line.split(",")

+ (parts(0), parts(1)) // (UserID, UserName)

+ })

+

+val ordersRDD = sparkContext.textFile("hdfs://path/to/orders.csv")

+ .map(line => {

+ val parts = line.split(",")

+ (parts(0), parts(2).toDouble) // (UserID, OrderAmount)

+ })

+

+val joinedRDD = usersRDD.join(ordersRDD)

+joinedRDD.collect().foreach(println)

+```

+

+**Using DataFrames:**

+

+```scala

+val usersDF = spark.read.option("header", "true").csv("hdfs://path/to/users.csv")

+val ordersDF = spark.read.option("header", "true").csv("hdfs://path/to/orders.csv")

+

+val joinedDF = usersDF.join(ordersDF, "UserID")

+joinedDF.show()

+```

+

+**Performance Advantage:**

+DataFrames handle joins more efficiently through optimized join strategies, reducing shuffle operations and execution time.

+

+---

+

+

+

+### **7. Conclusion**

+

+**Resilient Distributed Datasets (RDDs)** and **DataFrames** are both essential abstractions in Apache Spark, each with its own strengths:

+

+- **RDDs** provide low-level control and are suitable for complex transformations and functional programming paradigms.

+- **DataFrames** offer higher-level APIs, optimized performance through Spark's Catalyst optimizer, and seamless integration with Spark SQL, making them ideal for structured data processing and analytics.

+

+Choosing between RDDs and DataFrames depends on the specific use case, with DataFrames generally preferred for most data processing tasks due to their performance and ease of use.

+

+---

+

+### **Additional Resources**

+

+- [Apache Spark Official Documentation](https://spark.apache.org/docs/latest/)

+- [Spark SQL, DataFrames, and Datasets Guide](https://spark.apache.org/docs/latest/sql-programming-guide.html)

+- [Optimizing Spark Performance](https://spark.apache.org/docs/latest/tuning.html)

+

+

+

Question 2: Use Spark DataFrames to load a CSV file containing employee information (name, age, salary), and then perform the following operations: filter employees above 30 years of age, group by salary range, and compute the average salary for each group.

+

+

+

+

Solution: Spark DataFrames to Load the CSV file

+

+

+---

+

+## Table of Contents

+

+1. [Overview](#overview)

+2. [Prerequisites](#prerequisites)

+3. [Sample Data](#sample-data)

+4. [Step-by-Step Guide](#step-by-step-guide)

+ - [1. Setting Up Spark](#1-setting-up-spark)

+ - [2. Loading the CSV File](#2-loading-the-csv-file)

+ - [3. Filtering Employees Above 30 Years](#3-filtering-employees-above-30-years)

+ - [4. Defining Salary Ranges](#4-defining-salary-ranges)

+ - [5. Grouping by Salary Range](#5-grouping-by-salary-range)

+ - [6. Computing Average Salary for Each Group](#6-computing-average-salary-for-each-group)

+5. [Flowchart](#flowchart)

+6. [Final Output](#final-output)

+7. [Complete Code Example](#complete-code-example)

+

+---

+

+## Overview

+

+We will perform the following operations on a CSV file containing employee data:

+

+1. **Load** the CSV file into a Spark DataFrame.

+2. **Filter** employees who are older than 30 years.

+3. **Define** salary ranges (e.g., \$0-50k, \$50k-100k, etc.).

+4. **Group** employees based on these salary ranges.

+5. **Compute** the average salary for each salary group.

+

+---

+

+## Prerequisites

+

+- **Apache Spark** installed.

+- **PySpark** or **Scala** environment set up.

+- A **CSV file** containing employee data with the following columns:

+ - `name` (String)

+ - `age` (Integer)

+ - `salary` (Double)

+

+For this guide, we'll use **PySpark** for implementation.

+

+---

+

+## Sample Data

+

+Assume we have a CSV file named `employees.csv` with the following content:

+

+| name | age | salary |

+| ------------- | --- | ------ |

+| John Doe | 28 | 50000 |

+| Jane Smith | 35 | 75000 |

+| Alice Jones | 42 | 120000 |

+| Bob Brown | 25 | 40000 |

+| Charlie Black | 38 | 95000 |

+| Diana White | 31 | 60000 |

+

+---

+

+## Step-by-Step Guide

+

+### 1. Setting Up Spark

+

+First, initialize a Spark session.

+

+```python

+from pyspark.sql import SparkSession

+

+# Initialize Spark Session

+spark = SparkSession.builder \

+ .appName("EmployeeDataProcessing") \

+ .getOrCreate()

+```

+

+### 2. Loading the CSV File

+

+Load the CSV file into a Spark DataFrame. Ensure that the header is correctly inferred and data types are appropriately assigned.

+

+```python

+# Load CSV into DataFrame

+df = spark.read.csv("employees.csv", header=True, inferSchema=True)

+

+# Show the DataFrame

+df.show()

+```

+

+**Output:**

+

+| name | age | salary |

+| ------------- | --- | -------- |

+| John Doe | 28 | 50000.0 |

+| Jane Smith | 35 | 75000.0 |

+| Alice Jones | 42 | 120000.0 |

+| Bob Brown | 25 | 40000.0 |

+| Charlie Black | 38 | 95000.0 |

+| Diana White | 31 | 60000.0 |

+

+### 3. Filtering Employees Above 30 Years

+

+Filter the DataFrame to include only employees older than 30.

+

+```python

+# Filter employees with age > 30

+filtered_df = df.filter(df.age > 30)

+

+# Show filtered DataFrame

+filtered_df.show()

+```

+

+**Filtered Output:**

+

+| name | age | salary |

+| ------------- | --- | -------- |

+| Jane Smith | 35 | 75000.0 |

+| Alice Jones | 42 | 120000.0 |

+| Charlie Black | 38 | 95000.0 |

+| Diana White | 31 | 60000.0 |

+

+### 4. Defining Salary Ranges

+

+To group salaries, define salary ranges. For example:

+

+- **Low:** \$0 - \$50,000

+- **Medium:** \$50,001 - \$100,000

+- **High:** \$100,001 and above

+

+We can use the `when` and `otherwise` functions from `pyspark.sql.functions` to create a new column `salary_range`.

+

+```python

+from pyspark.sql.functions import when

+

+# Define salary ranges

+salary_range_df = filtered_df.withColumn(

+ "salary_range",

+ when(filtered_df.salary <= 50000, "Low")

+ .when((filtered_df.salary > 50000) & (filtered_df.salary <= 100000), "Medium")

+ .otherwise("High")

+)

+

+# Show DataFrame with salary ranges

+salary_range_df.show()

+```

+

+**Output with Salary Range:**

+

+| name | age | salary | salary_range |

+| ------------- | --- | -------- | ------------ |

+| Jane Smith | 35 | 75000.0 | Medium |

+| Alice Jones | 42 | 120000.0 | High |

+| Charlie Black | 38 | 95000.0 | Medium |

+| Diana White | 31 | 60000.0 | Medium |

+

+### 5. Grouping by Salary Range

+

+Group the DataFrame based on the `salary_range` column.

+

+```python

+# Group by salary_range

+grouped_df = salary_range_df.groupBy("salary_range")

+```

+

+### 6. Computing Average Salary for Each Group

+

+Compute the average salary for each salary range group.

+

+```python

+from pyspark.sql.functions import avg

+

+# Compute average salary

+avg_salary_df = grouped_df.agg(avg("salary").alias("average_salary"))

+

+# Show average salaries

+avg_salary_df.show()

+```

+

+**Average Salary Output:**

+

+| salary_range | average_salary |

+| ------------ | ----------------- |

+| Medium | 76666.66666666667 |

+| High | 120000.0 |

+

+_Note: The "Medium" range has three employees with salaries \$75,000, \$95,000, and \$60,000, averaging to \$76,666.67._

+

+---

+

+## Flowchart

+

+Below is a textual representation of the data processing flow:

+

+```

++---------------------+

+| Load CSV |

+| (employees.csv) |

++----------+----------+

+ |

+ v

++----------+----------+

+| Filter Age > 30 |

++----------+----------+

+ |

+ v

++----------+----------+

+| Define Salary Range |

++----------+----------+

+ |

+ v

++----------+----------+

+| Group by Salary Range|

++----------+----------+

+ |

+ v

++----------+----------+

+| Compute Average Salary|

++----------------------+

+```

+

+---

+

+## Final Output

+

+After performing all the operations, the final output will display the average salary for each salary range group.

+

+| salary_range | average_salary |

+| ------------ | ----------------- |

+| Medium | 76666.66666666667 |

+| High | 120000.0 |

+

+---

+

+## Complete Code Example

+

+Here is the complete PySpark code that encapsulates all the steps described above:

+

+```python

+from pyspark.sql import SparkSession

+from pyspark.sql.functions import when, avg

+

+# Initialize Spark Session

+spark = SparkSession.builder \

+ .appName("EmployeeDataProcessing") \

+ .getOrCreate()

+

+# Load CSV into DataFrame

+df = spark.read.csv("employees.csv", header=True, inferSchema=True)

+

+# Show the original DataFrame

+print("Original DataFrame:")

+df.show()

+

+# Filter employees with age > 30

+filtered_df = df.filter(df.age > 30)

+print("Filtered DataFrame (Age > 30):")

+filtered_df.show()

+

+# Define salary ranges

+salary_range_df = filtered_df.withColumn(

+ "salary_range",

+ when(filtered_df.salary <= 50000, "Low")

+ .when((filtered_df.salary > 50000) & (filtered_df.salary <= 100000), "Medium")

+ .otherwise("High")

+)

+print("DataFrame with Salary Range:")

+salary_range_df.show()

+

+# Group by salary_range

+grouped_df = salary_range_df.groupBy("salary_range")

+

+# Compute average salary

+avg_salary_df = grouped_df.agg(avg("salary").alias("average_salary"))

+print("Average Salary by Salary Range:")

+avg_salary_df.show()

+

+# Stop Spark Session

+spark.stop()

+```

+

+**Explanation of the Code:**

+

+1. **Initialize Spark Session:** Starts a new Spark session named "EmployeeDataProcessing".

+2. **Load CSV:** Reads the `employees.csv` file into a DataFrame with inferred schema and headers.

+3. **Display Original Data:** Shows the content of the original DataFrame.

+4. **Filter Data:** Filters out employees older than 30 years.

+5. **Define Salary Range:** Adds a new column `salary_range` based on the defined salary brackets.

+6. **Group Data:** Groups the data by `salary_range`.

+7. **Compute Average Salary:** Calculates the average salary for each salary range group.

+8. **Display Results:** Shows the average salaries.

+9. **Stop Spark Session:** Ends the Spark session.

+

+---

+

+## Conclusion

+

+By following the steps outlined above, you can efficiently process and analyze employee data using Spark DataFrames. This approach leverages Spark's powerful data processing capabilities to filter, group, and aggregate data, providing valuable insights such as average salaries across different salary ranges.

+

+

+

Question 3: Using RDDs in Apache Spark, write a program to filter out all the even numbers from a large dataset of integers and compute the sum of all odd numbers. Compare the performance with a traditional single-node approach.

+

+

+

+

+

Solution: RDDs (Resilient Distributed Datasets) in Apache Spark to filter out Numbers

+

+

+---

+

+## Table of Contents

+

+1. [Overview](#overview)

+2. [Prerequisites](#prerequisites)

+3. [Using RDDs in Apache Spark](#using-rdds-in-apache-spark)

+ - [1. Setting Up Spark](#1-setting-up-spark)

+ - [2. Creating an RDD from a Large Dataset](#2-creating-an-rdd-from-a-large-dataset)

+ - [3. Filtering Even Numbers](#3-filtering-even-numbers)

+ - [4. Summing Odd Numbers](#4-summing-odd-numbers)

+4. [Traditional Single-Node Approach](#traditional-single-node-approach)

+ - [1. Loading the Dataset](#1-loading-the-dataset)

+ - [2. Filtering and Summing Odd Numbers](#2-filtering-and-summing-odd-numbers)

+5. [Performance Comparison](#performance-comparison)

+ - [1. Execution Time](#1-execution-time)

+ - [2. Scalability](#2-scalability)

+ - [3. Fault Tolerance](#3-fault-tolerance)

+6. [Flowchart](#flowchart)

+7. [Complete Code Examples](#complete-code-examples)

+ - [Apache Spark RDD Code (PySpark)](#apache-spark-rdd-code-pyspark)

+ - [Traditional Single-Node Python Code](#traditional-single-node-python-code)

+8. [Conclusion](#conclusion)

+

+---

+

+## Overview

+

+This guide demonstrates how to:

+

+1. **Filter out even numbers** from a large dataset of integers using **Apache Spark's RDDs**.

+2. **Compute the sum** of all odd numbers in the dataset.

+3. **Compare** the Spark-based distributed approach with a **traditional single-node** method in Python.

+

+We'll explore the implementation details, discuss performance aspects, and provide code examples for both approaches.

+

+---

+

+## Prerequisites

+

+- **Apache Spark** installed (version 2.0 or higher recommended).

+- **PySpark** (Python API for Spark) set up.

+- **Python** installed (version 3.x recommended).

+- Basic understanding of **Python** and **Apache Spark** concepts.

+

+---

+

+## Using RDDs in Apache Spark

+

+### 1. Setting Up Spark

+

+Before diving into the code, ensure that Apache Spark and PySpark are properly installed and configured on your system.

+

+```bash

+# Install PySpark using pip if not already installed

+pip install pyspark

+```

+

+### 2. Creating an RDD from a Large Dataset

+

+**RDDs (Resilient Distributed Datasets)** are Spark's fundamental data structure, enabling distributed processing of large datasets across a cluster.

+

+We'll simulate a large dataset of integers using Spark's `range` function, which efficiently generates a distributed dataset.

+

+```python

+from pyspark import SparkContext

+

+# Initialize Spark Context

+sc = SparkContext("local[*]", "SumOddNumbersRDD")

+```

+

+- **`local[*]`**: Runs Spark locally with as many worker threads as logical cores on your machine.

+- **`SumOddNumbersRDD`**: Application name.

+

+### 3. Filtering Even Numbers

+

+To filter out even numbers, we'll use the `filter` transformation, retaining only numbers where the remainder when divided by 2 is not zero.

+

+```python

+# Generate a large dataset of integers (e.g., 1 to 10 million)

+large_dataset = sc.parallelize(range(1, 10000001)) # 10,000,000 integers

+

+# Filter out even numbers

+odd_numbers_rdd = large_dataset.filter(lambda x: x % 2 != 0)

+```

+

+- **`parallelize`**: Distributes the data across the Spark cluster.

+- **`filter`**: Applies a function to each element and retains those for which the function returns `True`.

+

+### 4. Summing Odd Numbers

+

+After filtering, we'll compute the sum of all odd numbers using the `reduce` action, which aggregates the elements of the RDD using a specified binary operator.

+

+```python

+# Sum of odd numbers

+sum_of_odd_numbers = odd_numbers_rdd.reduce(lambda x, y: x + y)

+

+# Print the result

+print(f"Sum of odd numbers: {sum_of_odd_numbers}")

+

+# Stop Spark Context

+sc.stop()

+```

+

+- **`reduce`**: Combines elements of the RDD using the specified function.

+- **Stopping Spark Context**: It's good practice to stop the Spark context to free up resources.

+

+---

+

+## Traditional Single-Node Approach

+

+In contrast to Spark's distributed processing, a traditional single-node approach processes data sequentially on a single machine. Here's how you can achieve the same task using Python.

+

+### 1. Loading the Dataset

+

+We'll generate the same large dataset of integers using Python's `range` function.

+

+```python

+# Generate a large dataset of integers

+large_dataset = range(1, 10000001) # 10,000,000 integers

+```

+

+### 2. Filtering and Summing Odd Numbers

+

+We'll use a generator expression to filter out even numbers and compute the sum of the remaining odd numbers.

+

+```python

+# Filter out even numbers and sum odd numbers

+sum_of_odd_numbers = sum(x for x in large_dataset if x % 2 != 0)

+

+# Print the result

+print(f"Sum of odd numbers: {sum_of_odd_numbers}")

+```

+

+- **Generator Expression**: Efficiently filters and processes data without loading the entire dataset into memory at once.

+

+---

+

+## Performance Comparison

+

+Comparing Apache Spark's RDD approach with the traditional single-node method involves evaluating several factors:

+

+### 1. Execution Time

+

+| **Approach** | **Execution Time (Approx.)** |

+| --------------------------- | ------------------------------------------------------------ |

+| **Spark RDD (Distributed)** | Significantly faster for large datasets (e.g., 10M integers) |

+| **Single-Node Python** | Slower as dataset size increases |

+

+- **Spark RDD** leverages parallelism across multiple cores or nodes, reducing computation time.

+- **Single-Node** processing is limited by the machine's CPU and memory, leading to longer execution times for large datasets.

+

+### 2. Scalability

+

+| **Aspect** | **Spark RDD** | **Single-Node Python** |

+| -------------------- | ------------------------------------------------------- | ------------------------------------------------- |

+| **Data Size** | Easily handles very large datasets (terabytes) | Limited by system memory and storage |

+| **Resource Scaling** | Scales horizontally by adding more nodes to the cluster | Scales vertically by upgrading the single machine |

+| **Performance** | Improves with more nodes | Performance plateaus with hardware limits |

+

+- **Spark RDD** can scale out to handle massive datasets by distributing the workload.

+- **Single-Node** approaches cannot efficiently handle data beyond the machine's capacity.

+

+### 3. Fault Tolerance

+

+| **Aspect** | **Spark RDD** | **Single-Node Python** |

+| ------------------ | ---------------------------------------------------- | ---------------------------------------------------- |

+| **Fault Handling** | Automatically recovers lost partitions using lineage | No built-in fault tolerance; process fails on errors |

+| **Data Recovery** | Resilient to node failures | Vulnerable to single points of failure |

+

+- **Spark RDD** provides robust fault tolerance mechanisms, ensuring data processing can continue despite node failures.

+- **Single-Node** methods lack built-in fault tolerance, making them susceptible to data loss or process interruptions.

+

+---

+

+## Flowchart

+

+Here's a visual representation of both approaches:

+

+### **Apache Spark RDD Approach**

+

+```

++--------------------------+

+| Start Spark Session |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Create RDD (1 to 10M) |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Filter Odd Numbers RDD |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Reduce to Sum Odd Numbers|

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Display Result |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Stop Spark Session |

++--------------------------+

+```

+

+### **Traditional Single-Node Approach**

+

+```

++--------------------------+

+| Start Python Script |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Create Range (1 to 10M)|

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Filter Odd Numbers |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Sum Odd Numbers |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| Display Result |

++-----------+--------------+

+ |

+ v

++-----------+--------------+

+| End Script |

++--------------------------+

+```

+

+---

+

+## Complete Code Examples

+

+### Apache Spark RDD Code (PySpark)

+

+```python

+from pyspark import SparkContext

+import time

+

+def main():

+ # Initialize Spark Context

+ sc = SparkContext("local[*]", "SumOddNumbersRDD")

+

+ # Start timing

+ start_time = time.time()

+

+ # Generate a large dataset of integers (1 to 10,000,000)

+ large_dataset = sc.parallelize(range(1, 10000001))

+

+ # Filter out even numbers

+ odd_numbers_rdd = large_dataset.filter(lambda x: x % 2 != 0)

+

+ # Sum of odd numbers

+ sum_of_odd_numbers = odd_numbers_rdd.reduce(lambda x, y: x + y)

+

+ # End timing

+ end_time = time.time()

+

+ # Print the result and execution time

+ print(f"Sum of odd numbers: {sum_of_odd_numbers}")

+ print(f"Execution Time (Spark RDD): {end_time - start_time:.2f} seconds")

+

+ # Stop Spark Context

+ sc.stop()

+

+if __name__ == "__main__":

+ main()

+```

+

+**Explanation:**

+

+1. **Initialization**: Starts the Spark context with all available cores.

+2. **Timing**: Measures the execution time for performance comparison.

+3. **Data Generation**: Creates an RDD with integers from 1 to 10,000,000.

+4. **Filtering**: Retains only odd numbers using the `filter` transformation.

+5. **Summing**: Aggregates the odd numbers using the `reduce` action.

+6. **Output**: Displays the sum and execution time.

+7. **Termination**: Stops the Spark context to release resources.

+

+### Traditional Single-Node Python Code

+

+```python

+import time

+

+def main():

+ # Start timing

+ start_time = time.time()

+

+ # Generate a large dataset of integers (1 to 10,000,000)

+ large_dataset = range(1, 10000001)

+

+ # Filter out even numbers and sum odd numbers

+ sum_of_odd_numbers = sum(x for x in large_dataset if x % 2 != 0)

+

+ # End timing

+ end_time = time.time()

+

+ # Print the result and execution time

+ print(f"Sum of odd numbers: {sum_of_odd_numbers}")

+ print(f"Execution Time (Single-Node Python): {end_time - start_time:.2f} seconds")

+

+if __name__ == "__main__":

+ main()

+```

+

+**Explanation:**

+

+1. **Timing**: Measures the execution time for performance comparison.

+2. **Data Generation**: Creates a range of integers from 1 to 10,000,000.

+3. **Filtering & Summing**: Uses a generator expression to filter odd numbers and compute their sum.

+4. **Output**: Displays the sum and execution time.

+

+---

+

+## Conclusion

+

+- **Apache Spark's RDD Approach**:

+

+ - **Pros**:

+ - **Speed**: Significantly faster for large datasets due to parallel processing.

+ - **Scalability**: Easily scales horizontally by adding more nodes.

+ - **Fault Tolerance**: Automatically handles node failures and data recovery.

+ - **Cons**:

+ - **Setup Complexity**: Requires configuring Spark and managing a cluster.

+ - **Overhead**: May introduce overhead for smaller datasets.

+

+- **Traditional Single-Node Approach**:

+ - **Pros**:

+ - **Simplicity**: Easy to implement without additional frameworks.

+ - **Low Overhead**: Suitable for smaller datasets with minimal setup.

+ - **Cons**:

+ - **Performance**: Slower for large datasets due to lack of parallelism.

+ - **Scalability**: Limited by the hardware capabilities of a single machine.

+ - **Fault Tolerance**: No built-in mechanisms to handle failures gracefully.

+

+**Recommendation**:

+

+- Use **Apache Spark's RDDs** when dealing with **large-scale data** that requires **efficient processing**, **scalability**, and **fault tolerance**.

+- Opt for a **traditional single-node approach** for **smaller datasets** or when **quick, simple processing** is sufficient without the need for distributed computing.

+

+By leveraging the strengths of both approaches, we can choose the most appropriate method based on the specific data processing needs and resource availability.

+

+

+

Question 4: Explain how Apache Flink handles real-time stream processing and state management. Discuss the concept of time windows (event time, processing time) and give an example where Flink's stream processing can be used to monitor real-time sensor data for anomaly detection.

+

+ Solution: Apache Flink in Real-Time Stream Processing and State Management

+

+Apache Flink is a distributed stream processing framework that excels in processing real-time, unbounded streams of data with low latency and high throughput. It provides powerful capabilities for stateful stream processing, fault tolerance, and event-driven applications. Flink ensures efficient real-time stream processing by managing the state of the application, performing operations like aggregation and windowing, and handling time semantics.

+

+#### **Key Features of Flink's Stream Processing:**

+

+1. **Distributed Stream Processing**: Flink can process streams in parallel across multiple nodes, scaling to handle large volumes of data.

+2. **Low-Latency and High-Throughput**: Flink is optimized for low-latency processing while maintaining high throughput for continuous data streams.

+3. **Event-Driven**: Flink processes data as soon as it arrives, enabling real-time analytics and decision-making.

+4. **Stateful Processing**: Flink allows you to store state across events, enabling complex operations like joins, aggregations, and pattern matching over time.

+

+### **State Management in Flink**

+

+State management is crucial for Flink because many stream processing applications require maintaining information over time (e.g., aggregating data, counting occurrences, etc.). Flink allows state to be stored locally in memory for fast access and backed up periodically to durable storage (like HDFS or S3) for fault tolerance.

+

+- **Keyed State**: When working with partitioned streams, each parallel instance of a stream operator may maintain separate state for different keys. This is called "keyed state," and it allows Flink to scale stateful computations.

+- **Operator State**: In cases where partitioning is not required, Flink can maintain "operator state," which is shared across all instances of an operator.

+

+- **Fault Tolerance**: Flink achieves fault tolerance using **distributed snapshots** (also known as checkpoints). Flink uses **Chandy-Lamport** distributed snapshots to periodically take a consistent snapshot of all operator states. If a failure occurs, the system can restore the state from the last successful checkpoint and continue processing.

+

+### **Time Windows in Flink**

+

+Time windows allow Flink to group events over a period of time for aggregation or other operations. Flink provides two types of time semantics for windowing:

+

+#### 1. **Event Time**

+

+- **Event time** refers to the time when the event occurred, as recorded by the source generating the data (e.g., the timestamp in the log or sensor data).

+- Flink processes events based on their event time, which allows handling out-of-order data (common in real-time systems) by using **watermarks**. Watermarks signify that no more events with a timestamp earlier than a certain value will be processed, allowing windows to close even when data arrives out of order.

+

+#### 2. **Processing Time**

+

+- **Processing time** refers to the time when an event is processed by the Flink system.

+- This is simpler to implement but may lead to inaccuracies, especially when there are delays in data arrival (e.g., network latency or processing bottlenecks).

+

+### **Types of Windows:**

+

+- **Tumbling Windows**: Non-overlapping windows of a fixed size. E.g., a 1-minute tumbling window processes data from minute 0:00 to 0:01, then 0:01 to 0:02, and so on.

+- **Sliding Windows**: Windows that overlap, defined by a window size and a sliding interval. E.g., a sliding window of 1 minute that slides every 30 seconds.

+- **Session Windows**: Dynamic windows that group events based on periods of activity separated by gaps of inactivity (i.e., a session timeout).

+

+### **Example: Real-Time Sensor Data Monitoring with Apache Flink**

+

+Let’s take an example of monitoring sensor data from a network of IoT devices to detect anomalies such as temperature spikes or sudden changes in pressure. The goal is to process this data in real time and identify anomalies as soon as they occur.

+

+#### **Problem Statement**

+

+- **Data Source**: Multiple sensors send temperature and pressure readings continuously.

+- **Goal**: Detect when a sensor reading deviates significantly from its normal range (an anomaly), and trigger an alert.

+

+#### **Flink Implementation**

+

+1. **Ingest the Stream**: Sensor data streams into Flink in real time. Each event contains a sensor ID, timestamp, temperature, and pressure reading.

+

+2. **Event Time Processing**: Since the sensor data might be delayed (due to network latency), we use **event time** semantics to ensure correct ordering of events and handle out-of-order data. This is done by attaching **watermarks** to the stream.

+

+3. **Stateful Stream Processing**: Flink uses **keyed state** to maintain the current running average and standard deviation for each sensor. Each sensor’s readings are keyed by the sensor ID.

+

+4. **Windowing**: To calculate real-time averages and detect anomalies, we can use a **sliding window** with event time. For example, every 5 seconds, Flink processes the data for each sensor over the past 1-minute window and computes the mean and standard deviation.

+

+5. **Anomaly Detection**: For each window, Flink compares the current sensor reading to the average of the window. If the value deviates by a significant threshold (e.g., 3 standard deviations), it flags the event as an anomaly.

+

+6. **Trigger Alerts**: Once an anomaly is detected, Flink can trigger an alert, for example, by sending a message to an alerting system or dashboard.

+

+#### **Code Example** (Pseudocode)

+

+```java

+DataStream sensorStream = env

+ .addSource(new SensorSource()) // Ingest real-time sensor data

+ .assignTimestampsAndWatermarks(new SensorTimeAssigner()); // Assign watermarks for event-time processing

+

+// Key the stream by sensor ID

+KeyedStream keyedStream = sensorStream

+ .keyBy(SensorReading::getSensorId);

+

+// Apply a sliding window and calculate mean and standard deviation

+DataStream anomalies = keyedStream

+ .timeWindow(Time.minutes(1), Time.seconds(5)) // 1-minute window, sliding every 5 seconds

+ .apply(new AnomalyDetectionFunction()); // Detect anomalies based on deviation from mean

+

+// Sink the results (e.g., to a dashboard or alerting system)

+anomalies.addSink(new AlertSink());

+```

+

+In this example:

+

+- **`SensorSource`** is the source that ingests data from the sensors.

+- **`SensorTimeAssigner`** assigns timestamps to events and generates watermarks to handle late data.

+- **`AnomalyDetectionFunction`** processes the data in each window, calculating the mean and standard deviation for each sensor and flagging anomalies.

+- **`AlertSink`** is responsible for sending the detected anomalies to an alerting system.

+

+### **Use Case: Monitoring Sensor Data for Anomalies**

+

+Flink’s stream processing is ideal for real-time anomaly detection in environments like smart cities, factories, or any IoT-driven system where timely detection of issues can prevent failures or optimize processes. For example:

+

+- **Smart Factory**: In a smart factory, sensors monitor equipment temperatures. Flink could process this sensor data in real time, detect overheating, and trigger alerts before equipment failure occurs.

+- **Smart Grid**: In energy management, Flink could monitor power consumption data and detect anomalies that indicate issues like equipment malfunction or energy theft.

+

+### **Conclusion**

+

+Apache Flink is a powerful framework for real-time stream processing, capable of handling high-throughput data streams with event-driven, low-latency processing. With support for state management, windowing, and time semantics (event and processing time), Flink provides a robust solution for applications like real-time sensor monitoring and anomaly detection. By leveraging keyed state, watermarks, and sliding windows, Flink ensures that even in environments with delayed or out-of-order data, real-time insights are always accurate.

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3 Solution.pdf b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3 Solution.pdf

new file mode 100644

index 0000000..4aebb71

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3 Solution.pdf differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3_4.pdf b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3_4.pdf

new file mode 100644

index 0000000..9d29d3e

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 3_4.pdf differ

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 4 Solution.md b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 4 Solution.md

new file mode 100644

index 0000000..f78a62c

--- /dev/null

+++ b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 4 Solution.md

@@ -0,0 +1,548 @@

+

+

Author: Madhurima Rawat

+

+

+

+

Assignment 4

+

+

+

+

Question 1: Explain the architecture and working principles of artificial neural networks (ANNs).

+

+

+Solution: Artificial Neural Networks (ANNs)

+

+

+

+### **Table of Contents**

+

+1. [Introduction](#introduction)

+2. [Architecture of Artificial Neural Networks](#architecture-of-artificial-neural-networks)

+3. [Basic Working Principles of ANNs](#basic-working-principles-of-anns)

+4. [Flowchart of ANN Working Principles](#flowchart-of-ann-working-principles)

+5. [Real-Life Examples of ANNs](#real-life-examples-of-anns)

+6. [Conclusion](#conclusion)

+

+## **Introduction**

+

+Artificial Neural Networks (ANNs) are a key technology in the field of machine learning and artificial intelligence. They are designed to mimic the way human brains process information, making them particularly effective for various tasks like classification, regression, and pattern recognition.

+

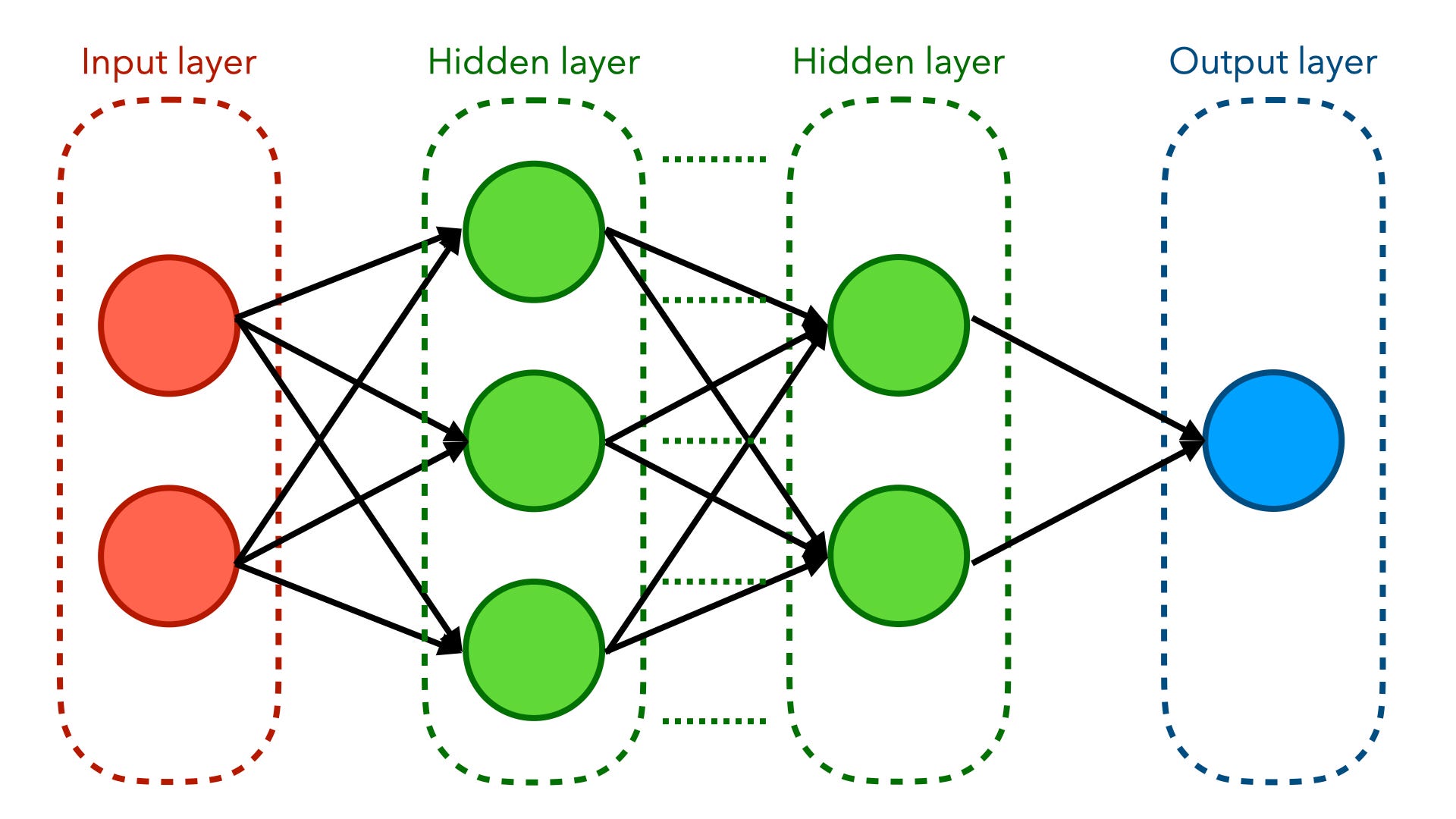

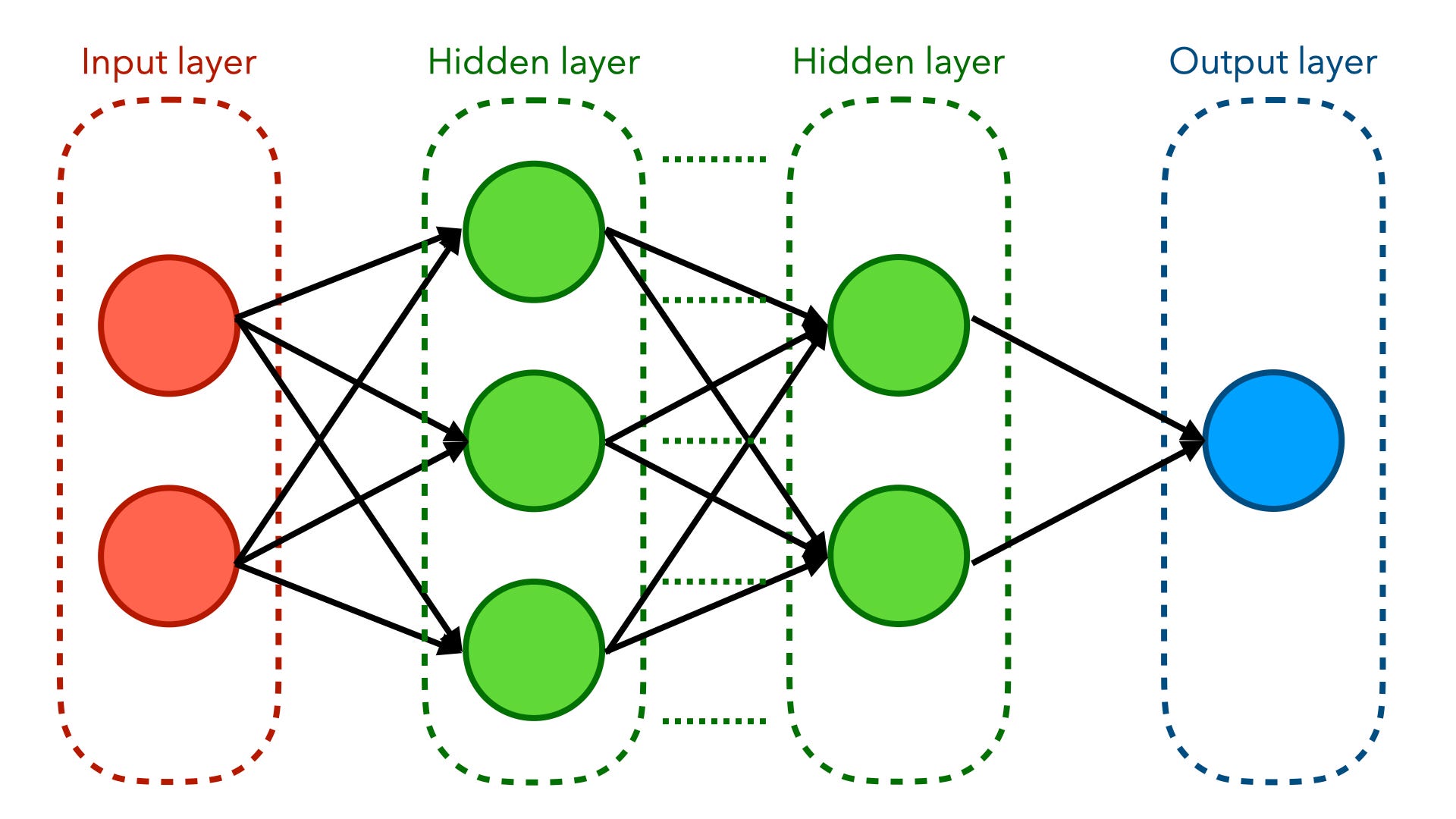

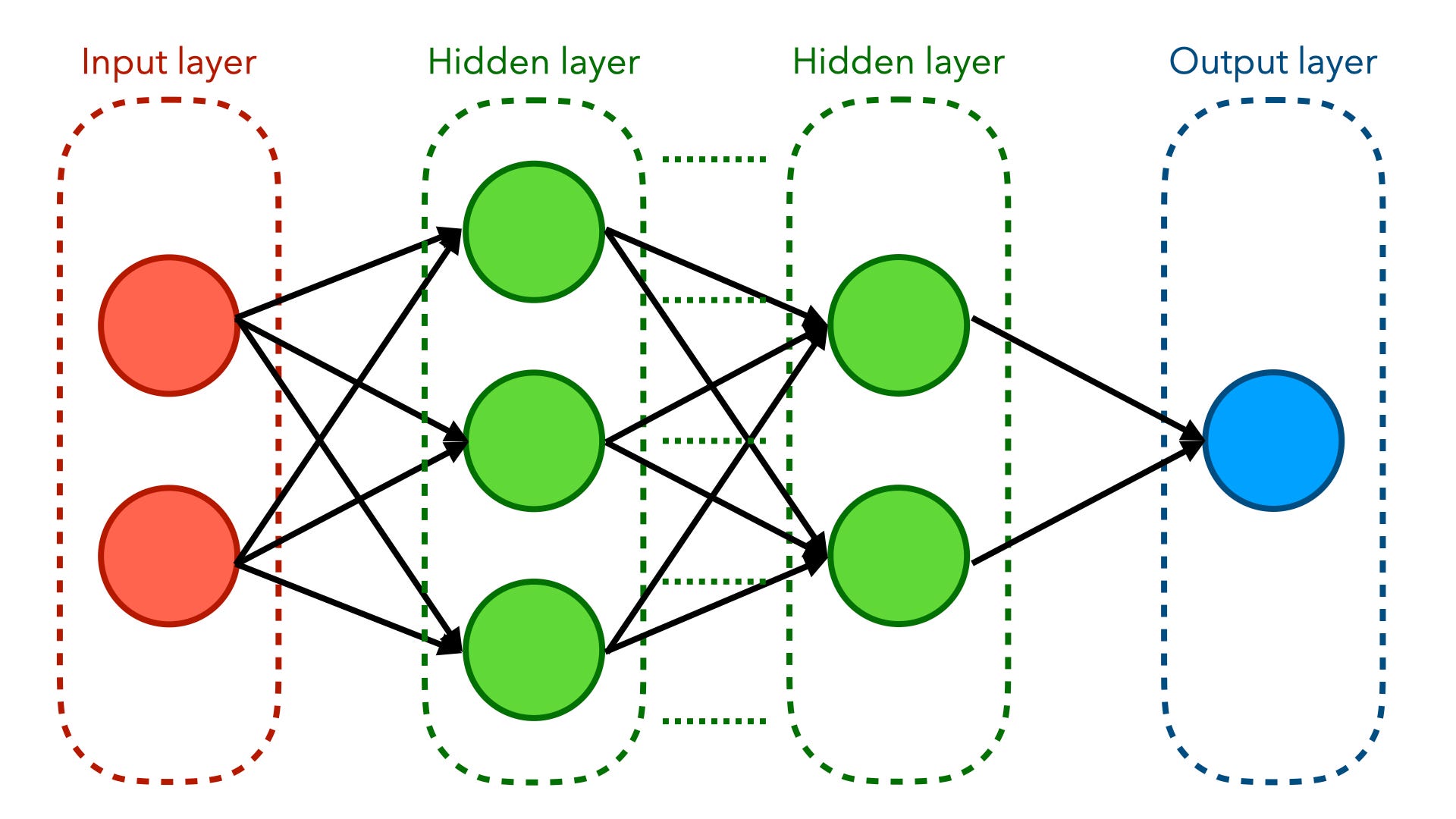

+## **Architecture of Artificial Neural Networks**

+

+An ANN is typically composed of multiple layers of nodes (neurons). The architecture can vary based on the specific application and design choice but generally consists of the following components:

+

+

+

+

Architecture of Artificial Neural Networks

+

+

+1. **Input Layer**:

+

+ - The first layer that receives the input data.

+ - Each neuron in this layer corresponds to one feature of the input data.

+

+2. **Hidden Layers**:

+

+ - Intermediate layers between the input and output layers.

+ - ANNs can have one or more hidden layers, each containing multiple neurons.

+ - The neurons in these layers perform transformations on the input data using activation functions.

+

+3. **Output Layer**:

+ - The final layer that produces the output of the network.

+ - The number of neurons in this layer corresponds to the number of classes (in classification tasks) or outputs (in regression tasks).

+

+## **Basic Working Principles of ANNs**

+

+1. **Initialization**:

+

+ - Weights and biases are initialized (often randomly) for each neuron in the network.

+

+2. **Forward Propagation**:

+

+ - Input data is fed into the input layer.

+ - Each neuron calculates a weighted sum of its inputs (from the previous layer), adds a bias, and applies an activation function (e.g., ReLU, sigmoid).

+ - The outputs of one layer become the inputs for the next layer until the output layer is reached.

+

+3. **Activation Functions**:

+

+ - Activation functions introduce non-linearity to the model, enabling the network to learn complex patterns. Common functions include:

+ - **Sigmoid**: \( f(x) = \frac{1}{1 + e^{-x}} \)

+ - **ReLU (Rectified Linear Unit)**: \( f(x) = \max(0, x) \)

+ - **Tanh**: \( f(x) = \tanh(x) \)

+

+4. **Loss Calculation**:

+

+ - A loss function (e.g., Mean Squared Error for regression, Cross-Entropy for classification) measures the difference between the predicted outputs and the actual target values.

+

+5. **Backward Propagation**:

+

+ - The network uses the calculated loss to adjust the weights and biases through a process called backpropagation. This is done using gradient descent or its variants.

+ - The gradients of the loss concerning each weight are computed, and the weights are updated in the opposite direction of the gradient to minimize the loss.

+

+6. **Iteration**:

+ - The forward and backward propagation steps are repeated for multiple epochs until the model converges or the loss reaches an acceptable level.

+

+## **Flowchart of ANN Working Principles**

+

+Below is a flowchart illustrating the process of an Artificial Neural Network from input to output:

+

+```plaintext

+ +------------------+

+ | Input Layer |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Hidden Layer |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Output Layer |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Loss Function |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Backpropagation |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Update Weights |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Repeat Steps |

+ +------------------+

+ |

+ |

+ v

+ +------------------+

+ | Model Trained |

+ +------------------+

+```

+

+## **Real-Life Examples of ANNs**

+

+1. **Image Recognition**:

+

+ - **Use Case**: ANNs are widely used in applications such as facial recognition systems, object detection, and image classification.

+ - **Example**: Facebook uses ANNs to automatically tag people in photos by recognizing their faces based on a vast dataset of previously tagged images.

+

+2. **Natural Language Processing (NLP)**:

+

+ - **Use Case**: ANNs are employed in chatbots, translation services, and sentiment analysis.

+ - **Example**: Google Translate uses deep learning models to translate text between different languages, understanding context and nuances through ANNs.

+

+3. **Healthcare**:

+

+ - **Use Case**: ANNs assist in diagnosing diseases by analyzing medical images and patient data.

+ - **Example**: Radiology departments utilize ANNs to identify tumors in X-rays or MRI scans, improving diagnostic accuracy and reducing the workload on radiologists.

+

+4. **Finance**:

+

+ - **Use Case**: ANNs help in fraud detection and risk management by analyzing transaction patterns.

+ - **Example**: Credit card companies use ANNs to monitor transactions in real-time, flagging suspicious activities based on learned patterns of normal behavior.

+

+5. **Self-Driving Cars**:

+ - **Use Case**: ANNs are a core component of the perception systems in autonomous vehicles.

+ - **Example**: Companies like Tesla utilize ANNs to process data from cameras and sensors, enabling the vehicle to recognize objects, lane markings, and pedestrians in real time.

+

+## **Conclusion**

+

+Artificial Neural Networks are powerful tools for modeling complex relationships in data. Their layered structure and the use of activation functions allow them to learn and generalize from training data effectively. The iterative process of forward propagation, loss calculation, and backpropagation helps refine the model's weights, leading to improved performance on tasks such as classification, regression, and more.

+

+Understanding ANNs involves grasping their architecture, working principles, and the underlying concepts of optimization and error minimization. With advancements in technology and data availability, ANNs continue to evolve and find applications in various fields, including computer vision, natural language processing, and more.

+

+

+

Question 2: Discuss the key techniques used in NLP such as tokenization, stemming, and word embeddings. Provide an example of how NLP can be applied to a large dataset for sentiment analysis.

+

+

+

+

Solution: Natural Language Processing (NLP)

+

+

+### **Table of Contents**

+

+1. [Introduction](#introduction)

+2. [Key Techniques in NLP](#key-techniques-in-nlp)

+ - [Tokenization](#tokenization)

+ - [Stemming](#stemming)

+ - [Word Embeddings](#word-embeddings)

+3. [Example of Sentiment Analysis](#example-of-sentiment-analysis)

+4. [Flowchart of NLP Process](#flowchart-of-nlp-process)

+5. [Conclusion](#conclusion)

+

+## **Introduction**

+

+Natural Language Processing (NLP) is a field of artificial intelligence that focuses on the interaction between computers and humans through natural language. The goal is to enable machines to understand, interpret, and generate human language in a meaningful way. NLP has various applications, including sentiment analysis, chatbots, translation services, and more.

+

+## **Key Techniques in NLP**

+

+### **Tokenization**

+

+**Definition**: Tokenization is the process of breaking down a text into smaller units, called tokens. These tokens can be words, phrases, or even sentences.

+

+**Techniques**:

+

+- **Word Tokenization**: Splitting text into individual words.

+- **Sentence Tokenization**: Dividing text into sentences.

+

+**Example**:

+

+- Input: "Natural Language Processing is fascinating."

+- Output (Word Tokens): ["Natural", "Language", "Processing", "is", "fascinating."]

+- Output (Sentence Tokens): ["Natural Language Processing is fascinating."]

+

+### **Stemming**

+

+**Definition**: Stemming reduces words to their base or root form by removing prefixes or suffixes. The goal is to reduce inflected words to their word stem.

+

+**Techniques**:

+

+- **Porter Stemmer**: A widely used stemming algorithm.

+- **Snowball Stemmer**: A more advanced stemming algorithm.

+

+**Example**:

+

+- Input: "running", "runner", "ran"

+- Output (Stemmed): "run", "run", "run"

+

+### **Word Embeddings**

+

+**Definition**: Word embeddings are vector representations of words that capture their meanings and relationships in a continuous vector space. They allow words with similar meanings to have similar representations.

+

+**Techniques**:

+

+- **Word2Vec**: A neural network-based model that produces word embeddings.

+- **GloVe (Global Vectors for Word Representation)**: A model that generates word vectors based on word co-occurrence statistics.

+

+**Example**:

+

+- The words "king" and "queen" might have similar vector representations that reflect their relationships.

+

+## **Example of Sentiment Analysis**

+

+**Definition**: Sentiment analysis is the process of determining the emotional tone behind a series of words. It is commonly used to understand opinions in reviews, social media, and other forms of text.

+

+**Application**:

+

+1. **Dataset**: A large dataset of customer reviews from an e-commerce website.

+2. **Objective**: Analyze customer sentiment (positive, negative, neutral) toward products.

+

+**Steps**:

+

+1. **Data Collection**: Gather customer reviews.

+2. **Preprocessing**:

+ - **Tokenization**: Split reviews into tokens (words).

+ - **Stemming**: Reduce words to their base form.

+3. **Feature Extraction**:

+ - Use word embeddings to convert tokens into vectors.

+4. **Model Training**:

+ - Train a classification model (e.g., Logistic Regression, SVM) using labeled sentiment data.

+5. **Prediction**:

+ - Predict sentiment for new, unseen reviews.

+

+**Example**:

+

+- Input: "The product is amazing and works well!"

+- Output: Sentiment = Positive

+

+## **Flowchart of NLP Process**

+

+Here’s a flowchart illustrating the general process of NLP:

+

+```plaintext

+ +-------------------------+

+ | Data Collection |

+ +-------------------------+

+ |

+ |

+ v

+ +-------------------------+

+ | Text Preprocessing |

+ +-------------------------+

+ |

+ |

+ +-------------------------+

+ | Tokenization |

+ +-------------------------+

+ |

+ |

+ +-------------------------+

+ | Stemming |

+ +-------------------------+

+ |

+ |

+ +-------------------------+

+ | Feature Extraction |

+ +-------------------------+

+ |

+ |

+ +-------------------------+

+ | Model Training |

+ +-------------------------+

+ |

+ |

+ +-------------------------+

+ | Prediction |

+ +-------------------------+

+ |

+ |

+ +-------------------------+

+ | Sentiment Analysis Result |

+ +-------------------------+

+```

+

+## **Conclusion**

+

+Natural Language Processing is an essential technology that enables machines to understand and interact with human language. Techniques like tokenization, stemming, and word embeddings are crucial for processing and analyzing textual data. Sentiment analysis, as an application of NLP, showcases how these techniques can be leveraged to gain insights from large datasets. With advancements in machine learning and deep learning, the capabilities of NLP continue to expand, impacting various industries and applications.

+

+Question 3: Explain the concept of ensemble learning in machine learning. Compare and contrast different ensemble techniques like bagging, boosting, and stacking.

+

+Solution: Ensemble Learning in Machine Learning

+

+## **Introduction**

+

+Ensemble learning is a machine learning paradigm that combines multiple individual models (often called "base learners") to create a stronger overall model. The main idea behind ensemble learning is that by aggregating the predictions of several models, we can improve accuracy, robustness, and generalization on unseen data. Ensemble methods can be broadly categorized into two main types: **bagging** and **boosting**.

+

+## **Key Concepts of Ensemble Learning**

+

+1. **Diversity**: The individual models in an ensemble should be diverse. This diversity helps the ensemble make better predictions by minimizing the likelihood of making the same mistakes.

+

+2. **Aggregation**: The predictions of the base models are combined through various techniques to produce the final prediction. Common aggregation methods include averaging, majority voting, and weighted voting.

+

+3. **Bias-Variance Tradeoff**: Ensemble methods help in managing the bias-variance tradeoff. While individual models may exhibit high bias or high variance, combining them can reduce both.

+

+---

+

+## **Comparison of Ensemble Techniques**

+

+### **1. Bagging (Bootstrap Aggregating)**

+

+**Concept**: Bagging involves training multiple models independently on random subsets of the training data (with replacement) and then aggregating their predictions. Each subset is created using a technique called bootstrapping.

+

+**How it Works**:

+

+- Create multiple bootstrapped datasets from the original dataset.

+- Train a base learner (e.g., decision trees) on each bootstrapped dataset.

+- Aggregate the predictions (average for regression, majority voting for classification).

+

+**Advantages**:

+

+- Reduces variance and helps prevent overfitting.

+- Works well with high-variance models (e.g., decision trees).

+

+**Common Algorithms**:

+

+- Random Forest: An ensemble of decision trees using bagging.

+

+**Example**:

+

+- If you have 1000 data points, you might create 10 different subsets (with replacement), train 10 decision trees, and average their predictions for regression tasks.

+

+---

+

+### **2. Boosting**

+

+**Concept**: Boosting is an iterative ensemble technique that focuses on training models sequentially, where each new model is trained to correct the errors made by the previous models.

+

+**How it Works**:

+

+- Start with a weak learner (e.g., a simple decision tree).

+- Train it on the dataset and evaluate its performance.

+- Assign more weight to the misclassified instances and train the next model on this weighted dataset.

+- Combine the predictions of all models, often using a weighted average.

+

+**Advantages**:

+

+- Reduces both bias and variance.

+- Can convert weak learners into a strong learner.

+

+**Common Algorithms**:

+

+- AdaBoost: Adaptive Boosting, focuses on misclassified instances.

+- Gradient Boosting: Builds models in a stage-wise manner, optimizing a loss function.

+

+**Example**:

+

+- In the first round, train a weak model, and in the second round, focus more on the data points that the first model misclassified, thereby improving overall accuracy with each iteration.

+

+---

+

+### **3. Stacking**

+

+**Concept**: Stacking, or stacked generalization, is an ensemble technique that combines different types of models (not just the same type) and uses another model to aggregate their predictions.

+

+**How it Works**:

+

+- Train multiple base models on the training dataset.

+- Use the predictions of these base models as input features to a meta-model (often called the "blender" or "stacker") that makes the final prediction.

+

+**Advantages**:

+

+- Allows leveraging the strengths of different algorithms.

+- Can lead to better performance than any individual model.

+

+**Common Algorithms**:

+

+- Any combination of models can be used (e.g., decision trees, SVMs, neural networks) as base learners, with a linear regression or another model as the meta-learner.

+

+**Example**:

+

+- Train a decision tree, logistic regression, and SVM on the same data, then use their predictions as features for a logistic regression model that predicts the final outcome.

+

+---

+

+## **Comparison Table**

+

+| Aspect | Bagging | Boosting | Stacking |

+| ------------------ | -------------------------------- | -------------------------------------- | ------------------------------------------------- |

+| Training | Parallel | Sequential | Sequential |

+| Model Independence | Models are trained independently | Models depend on previous models | Combines predictions from multiple models |

+| Focus | Reduces variance | Reduces bias | Improves predictions through combination |

+| Common Algorithms | Random Forest | AdaBoost, Gradient Boosting | Any combination of models |

+| Performance | Generally stable performance | Can lead to overfitting without tuning | Usually high, but depends on base and meta models |

+| Complexity | Lower | Higher | Higher |

+

+---

+

+## **Conclusion**

+

+Ensemble learning is a powerful technique in machine learning that enhances the predictive performance of models by combining multiple learners. Techniques like bagging, boosting, and stacking offer distinct advantages and can be applied in various scenarios to improve model robustness, accuracy, and generalization. Understanding these techniques allows practitioners to choose the right approach based on the problem at hand and the characteristics of the dataset.

+

+

+Question 4: Discuss model evaluation techniques, focusing on metrics like accuracy, precision, recall, and the F1 score.

+

+

+ Solution: Model Evaluation Techniques

+

+

+Model evaluation is a crucial step in the machine learning lifecycle, as it helps to assess the performance of a model and its ability to generalize to unseen data. Various metrics can be used to evaluate the effectiveness of classification models, each offering different insights into the model's performance.

+

+## Key Metrics for Evaluation

+

+### 1. **Accuracy**

+

+**Definition**: Accuracy is the ratio of correctly predicted instances (both true positives and true negatives) to the total instances in the dataset.

+

+**Formula**:

+\[

+\text{Accuracy} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{TN} + \text{FP} + \text{FN}}

+\]

+

+Where:

+

+- TP = True Positives (correctly predicted positive instances)

+- TN = True Negatives (correctly predicted negative instances)

+- FP = False Positives (incorrectly predicted positive instances)

+- FN = False Negatives (incorrectly predicted negative instances)

+

+**Advantages**:

+

+- Easy to understand and compute.

+- Useful when the classes are balanced (i.e., the number of positive and negative instances is roughly equal).

+

+**Disadvantages**:

+

+- Can be misleading when the class distribution is imbalanced (i.e., one class significantly outnumbers the other).

+

+**Example**:

+If a model predicts 80 correct labels out of 100 total labels (with no class imbalance), its accuracy would be \( \frac{80}{100} = 0.8 \) or 80%.

+

+---

+

+### 2. **Precision**

+

+**Definition**: Precision, also known as positive predictive value, measures the proportion of true positive predictions among all positive predictions made by the model.

+

+**Formula**:

+\[

+\text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}}

+\]

+

+**Advantages**:

+

+- Useful when the cost of false positives is high (e.g., in spam detection, classifying legitimate emails as spam).

+

+**Disadvantages**:

+

+- Does not consider false negatives, which can be important in certain contexts.

+

+**Example**:

+If a model predicts 40 instances as positive and 30 of them are correct, the precision would be \( \frac{30}{40} = 0.75 \) or 75%.

+

+---

+

+### 3. **Recall**

+

+**Definition**: Recall, also known as sensitivity or true positive rate, measures the proportion of true positive predictions among all actual positive instances.

+

+**Formula**:

+\[

+\text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}}

+\]

+

+**Advantages**:

+

+- Important when the cost of false negatives is high (e.g., in medical diagnosis, missing a positive case can have serious consequences).

+

+**Disadvantages**:

+

+- Does not consider false positives, which may also be significant.

+

+**Example**:

+If there are 50 actual positive instances, and the model correctly identifies 30 of them, the recall would be \( \frac{30}{50} = 0.6 \) or 60%.

+

+---

+

+### 4. **F1 Score**

+

+**Definition**: The F1 score is the harmonic mean of precision and recall, providing a balance between the two metrics. It is particularly useful when dealing with imbalanced datasets.

+

+**Formula**:

+\[

+\text{F1 Score} = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}

+\]

+

+**Advantages**:

+

+- Provides a single score that considers both false positives and false negatives.

+- Useful for uneven class distributions.

+

+**Disadvantages**:

+

+- Less interpretable than precision and recall individually.

+

+**Example**:

+If precision is 0.75 and recall is 0.6, the F1 score would be \( 2 \cdot \frac{0.75 \cdot 0.6}{0.75 + 0.6} \approx 0.6667 \).

+

+---

+

+## Summary of Metrics

+

+| Metric | Definition | Formula | When to Use |

+| --------- | ------------------------------------------------------ | ------------------------------------------------------------------------------------------ | ----------------------------------------------------------------- |

+| Accuracy | Proportion of correct predictions | \(\frac{\text{TP} + \text{TN}}{\text{Total}} \) | Balanced datasets, general performance measure |

+| Precision | Proportion of true positives among predicted positives | \(\frac{\text{TP}}{\text{TP} + \text{FP}} \) | Cost of false positives is high |

+| Recall | Proportion of true positives among actual positives | \(\frac{\text{TP}}{\text{TP} + \text{FN}} \) | Cost of false negatives is high |

+| F1 Score | Harmonic mean of precision and recall | \(2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \) | Imbalanced datasets, when both precision and recall are important |

+

+---

+

+## Conclusion

+

+Model evaluation techniques are essential for understanding the performance of machine learning models. While accuracy provides a broad overview, precision, recall, and the F1 score offer deeper insights into the model's performance, especially in the context of imbalanced datasets. Depending on the specific use case and the consequences of false positives and negatives, practitioners can choose the most appropriate metric to evaluate their models effectively.

diff --git a/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 4 Solution.pdf b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 4 Solution.pdf

new file mode 100644

index 0000000..0d74d8d

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Assignment/Assignment 3_ 4/Assignment 4 Solution.pdf differ

diff --git a/7 SEMESTER/Big Data Analytics/Books/(Wiley & SAS business series) Frank J. Ohlhorst - Big data analytics_ turning big data into big money-Wiley (2012).epub b/7 SEMESTER/Big Data Analytics/Books/(Wiley & SAS business series) Frank J. Ohlhorst - Big data analytics_ turning big data into big money-Wiley (2012).epub

new file mode 100644

index 0000000..4eba9af

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Books/(Wiley & SAS business series) Frank J. Ohlhorst - Big data analytics_ turning big data into big money-Wiley (2012).epub differ

diff --git a/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Big Data Analytics Resources.docx b/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Big Data Analytics Resources.docx

new file mode 100644

index 0000000..acf86ee

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Big Data Analytics Resources.docx differ

diff --git a/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Big Data Analytics Resources.pdf b/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Big Data Analytics Resources.pdf

new file mode 100644

index 0000000..5333fa8

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Big Data Analytics Resources.pdf differ

diff --git a/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Unit 1 Notes.pptx b/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Unit 1 Notes.pptx

new file mode 100644

index 0000000..48cd148

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Notes/Unit 1/Unit 1 Notes.pptx differ

diff --git a/7 SEMESTER/Big Data Analytics/Notes/Unit 2/Unit 2 Notes.pptx b/7 SEMESTER/Big Data Analytics/Notes/Unit 2/Unit 2 Notes.pptx

new file mode 100644

index 0000000..f94ad51

Binary files /dev/null and b/7 SEMESTER/Big Data Analytics/Notes/Unit 2/Unit 2 Notes.pptx differ

+

+ +

+