diff --git a/docs/user-guide/explore/best-practices.md b/docs/user-guide/explore/best-practices.md

new file mode 100644

index 00000000..e4c61452

--- /dev/null

+++ b/docs/user-guide/explore/best-practices.md

@@ -0,0 +1,118 @@

+---

+sidebar_position: 6

+title: Explore Best Practices

+description: Best practices in Log management and Explore

+image: https://dytvr9ot2sszz.cloudfront.net/logz-docs/social-assets/docs-social.jpg

+keywords: [logz.io, explore, dashboard, log analysis, observability]

+---

+

+Once you've sent your data to Logz.io, you can search and query your logs to identify, debug, and monitor issues as quickly and effectively as possible.

+

+Explore supports several ways to query your data, including Simple Search, Lucene (with regex support), and filters.

+

+

+## Simple Search

+

+Simple Search offers an intuitive way to build queries by selecting fields, conditions, and values.

+

+Click the search bar or type to see available fields, add operators, and choose values. To use custom values, type the name and click the + sign. Press Enter to apply the query or Tab to add another condition.

+

+Free-text searches automatically convert into Lucene queries.

+

+## Lucene

+

+Logz.io supports Lucene for more advanced queries.

+

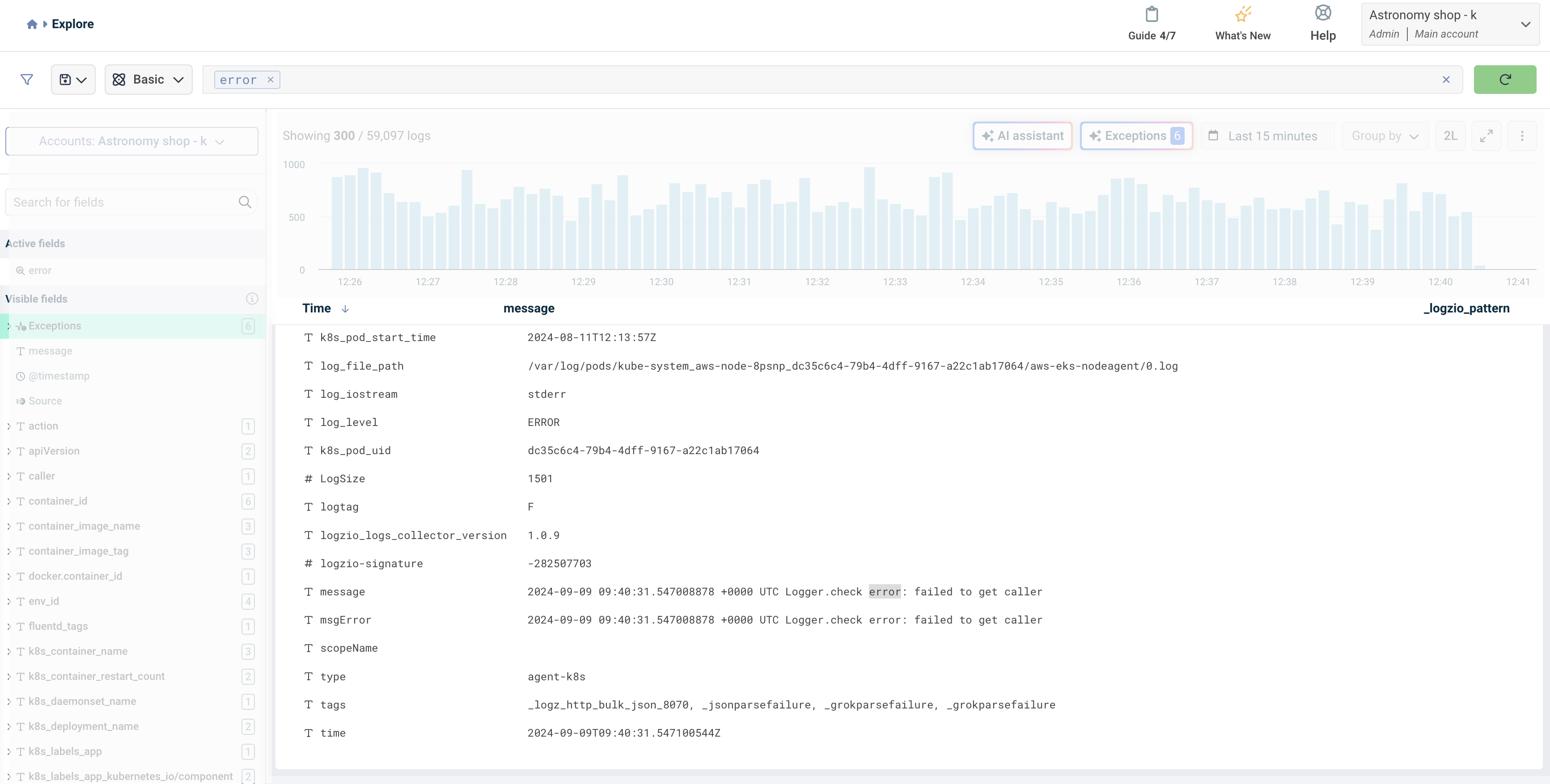

+Search for free text by typing the string you want to find. For example, `error` returns all logs containing this string, while the quoted `"error"` returns only the exact word you're searching for.

+

+

+

+Search for a value in a specific field:

+

+`log_level:ERROR`

+

+Use the boolean operators AND, OR, and NOT to create more complex searches. For example, to find ERROR-level logs that also contain the word `Kubernetes`:

+

+`log_level:ERROR AND Kubernetes`
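If you generate queries programmatically (for example, in a script that prepares alert queries), the boolean operators above can be combined mechanically. The following is a minimal Python sketch, not a Logz.io API: `build_lucene_query` is a hypothetical helper that joins required clauses with AND, groups alternatives with OR, and appends exclusions with NOT. It does not escape or validate the clauses it receives, and the `log_level:WARN` value is only an illustrative assumption.

```python
def build_lucene_query(must=(), any_of=(), must_not=()):
    """Hypothetical helper: combine Lucene clauses with boolean operators.

    must:     clauses that must all match (joined with AND)
    any_of:   alternatives where at least one must match (grouped with OR)
    must_not: clauses to exclude (each appended as AND NOT ...)
    """
    parts = list(must)
    if any_of:
        # Parentheses keep the OR group from binding to the neighboring ANDs.
        parts.append("(" + " OR ".join(any_of) + ")")
    if not parts:
        # A query made only of NOT clauses may match nothing in Lucene,
        # so this sketch requires at least one positive clause.
        raise ValueError("at least one positive clause is required")
    query = " AND ".join(parts)
    for clause in must_not:
        query += f" AND NOT {clause}"
    return query


print(build_lucene_query(must=["log_level:ERROR", "Kubernetes"]))
print(build_lucene_query(
    any_of=["log_level:ERROR", "log_level:WARN"],
    must_not=['name:"agent-k8s"'],
))
```

The first call reproduces the `log_level:ERROR AND Kubernetes` example; the second produces `(log_level:ERROR OR log_level:WARN) AND NOT name:"agent-k8s"`.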

+

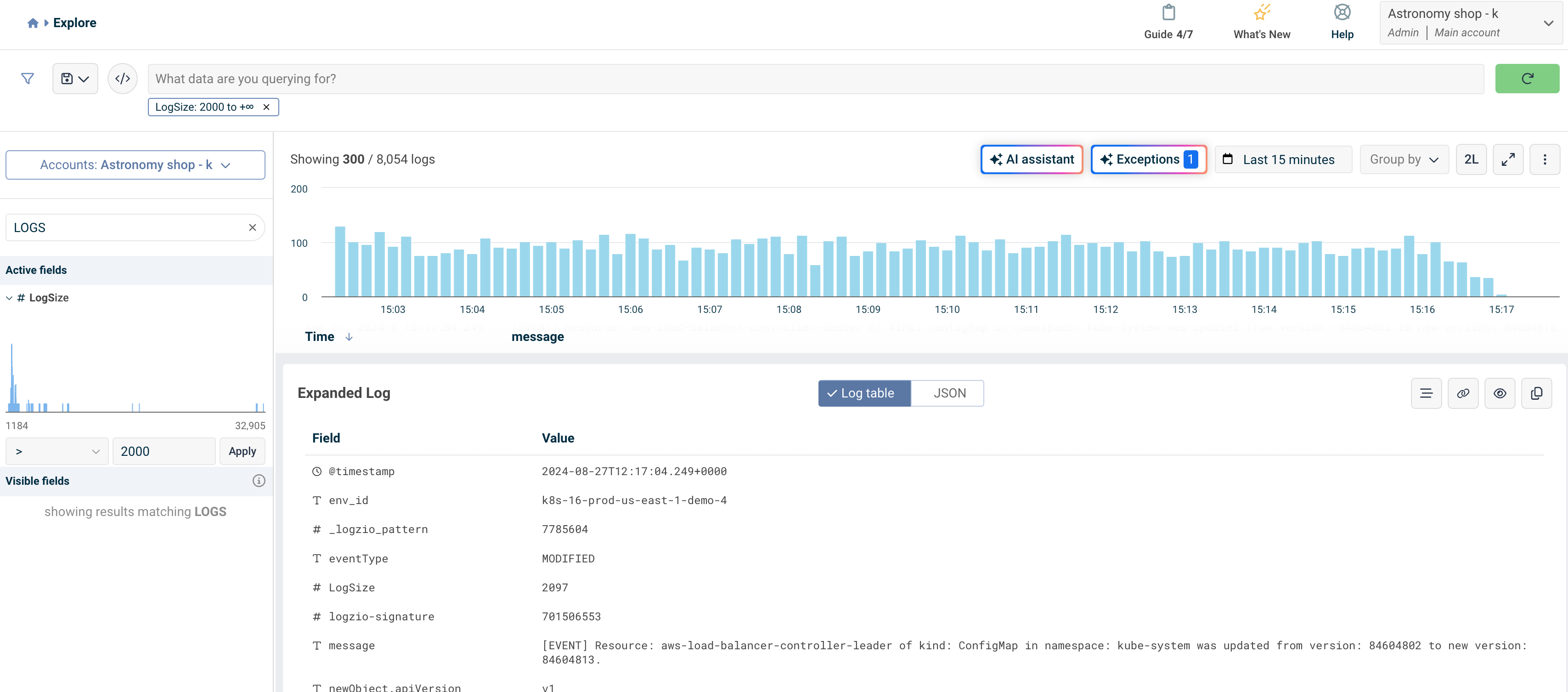

+To perform **range-related searches**, the field must be mapped as a number (long, float, double, etc.). Square brackets make both ends of the range inclusive. For example, to find all logs whose `LogSize` is between 2000 and 3000:

+

+`LogSize:[2000 TO 3000]`

+

+To narrow the search further, combine the range with another condition. For example, to find logs in that size range whose `eventType` is `MODIFIED`:

+

+`LogSize:[2000 TO 3000] AND eventType:MODIFIED`

+

+Or, combine two ranges. For example, to also limit `logzio-signature` to values between 700000000 and 710000000:

+

+`LogSize:[2000 TO 3000] AND logzio-signature:[700000000 TO 710000000]`
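Standard Lucene range syntax (as documented for the OpenSearch and Elasticsearch `query_string` query) also accepts curly braces for exclusive bounds, mixed bounds such as `[2000 TO 3000}`, and `*` for an open end, as in `[2000 TO *]`. The Python sketch below uses a hypothetical `range_clause` helper to show how those pieces fit together; it only builds the query string and assumes the field is already mapped as a number.

```python
def range_clause(field, low=None, high=None, include_low=True, include_high=True):
    """Hypothetical helper: build a Lucene range clause such as LogSize:[2000 TO 3000].

    Square brackets mark an inclusive bound, curly braces an exclusive one,
    and None becomes "*" (unbounded on that side).
    """
    if low is not None and high is not None and low > high:
        raise ValueError("low must not be greater than high")
    lo = "*" if low is None else str(low)
    hi = "*" if high is None else str(high)
    open_bracket = "[" if include_low else "{"
    close_bracket = "]" if include_high else "}"
    return f"{field}:{open_bracket}{lo} TO {hi}{close_bracket}"


print(range_clause("LogSize", 2000, 3000))                      # LogSize:[2000 TO 3000]
print(range_clause("LogSize", 2000, 3000, include_high=False))  # LogSize:[2000 TO 3000}
print(range_clause("LogSize", low=2000))                        # LogSize:[2000 TO *]
```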

+

+To exclude a term from your search, you can use the following syntax:

+

+`LogSize:[2000 TO 3000] AND type NOT (name:"agent-k8s")`

+

+

+## Filters

+

+Use filters to refine your search, whether you're using Simple Search or Lucene. Open a string field to view its values, or open a numeric field to choose a range. For example, the `LogSize` filter lets you select the range of log sizes you're interested in.

+

+

+

+

+

+## Regex in Lucene

+

+:::caution

+Using regex can overload your system and cause performance issues in your account. If you must use regex, apply filters first and use shorter time frames.

+:::

+

+Logz.io uses Apache Lucene's regular expression engine to parse regex queries, supporting both `regexp` and `query_string` queries.

+

+While Lucene's regex supports all Unicode characters, several characters are reserved as operators and cannot be searched on their own:

+

+`. ? + * | { } [ ] ( ) " \`

+

+Depending on which optional operators are enabled, the following characters may also be reserved:

+

+`# @ & < > ~`

+

+To use a reserved character literally, escape it with a backslash or wrap it in double quotes. For example:

+

+`\*` matches a literal `*` sign.

+

+`\#` matches a literal `#` sign.

+

+`\(\)` matches literal parentheses, `()`.
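To escape arbitrary text before placing it inside a regex query, you can backslash every reserved character listed above. The following Python sketch uses a hypothetical `escape_lucene_regex` helper and a hypothetical `message` field. It also escapes the optionally reserved characters, which is harmless for these punctuation characters, since a backslash before them simply matches the character literally.

```python
# Characters that are always reserved in Lucene regex ...
ALWAYS_RESERVED = set('.?+*|{}[]()"\\')
# ... and characters that may be reserved, depending on the optional operators enabled.
OPTIONALLY_RESERVED = set("#@&<>~")


def escape_lucene_regex(text: str) -> str:
    """Hypothetical helper: backslash-escape reserved characters so they match literally."""
    reserved = ALWAYS_RESERVED | OPTIONALLY_RESERVED
    return "".join("\\" + ch if ch in reserved else ch for ch in text)


term = "f(x)*#1"
print(escape_lucene_regex(term))                     # f\(x\)\*\#1
print(f"message:/.*{escape_lucene_regex(term)}.*/")  # message:/.*f\(x\)\*\#1.*/
```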

+

+

+To use regex in a Lucene search query, use the following template:

+

+`fieldName:/.*value.*/`

+

+For example, suppose a field called `sentence` holds the line "The quick brown fox jumps over the lazy dog".

+

+To find one word within that value, such as `fox`, use the following query:

+

+`sentence:/.*fox.*/`
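The `.*` on both sides is needed because Lucene regex patterns are anchored: a pattern must match the entire term, not just part of it. Python's `re.fullmatch` has the same whole-string behavior, so the short sketch below uses it to illustrate the difference. It is only an analogy, since Python's regex dialect is not identical to Lucene's, and it assumes `sentence` is stored as a single term (for example, a keyword field). On an analyzed text field, the pattern is matched against individual tokens instead.

```python
import re

value = "The quick brown fox jumps over the lazy dog"


def lucene_style_match(pattern: str, term: str) -> bool:
    # Anchored matching: the pattern must cover the whole term,
    # which re.fullmatch mimics (unlike re.search, which finds substrings).
    return re.fullmatch(pattern, term) is not None


print(lucene_style_match("fox", value))      # False: "fox" is not the whole value
print(lucene_style_match(".*fox.*", value))  # True: .* covers the text around "fox"
```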

+

+

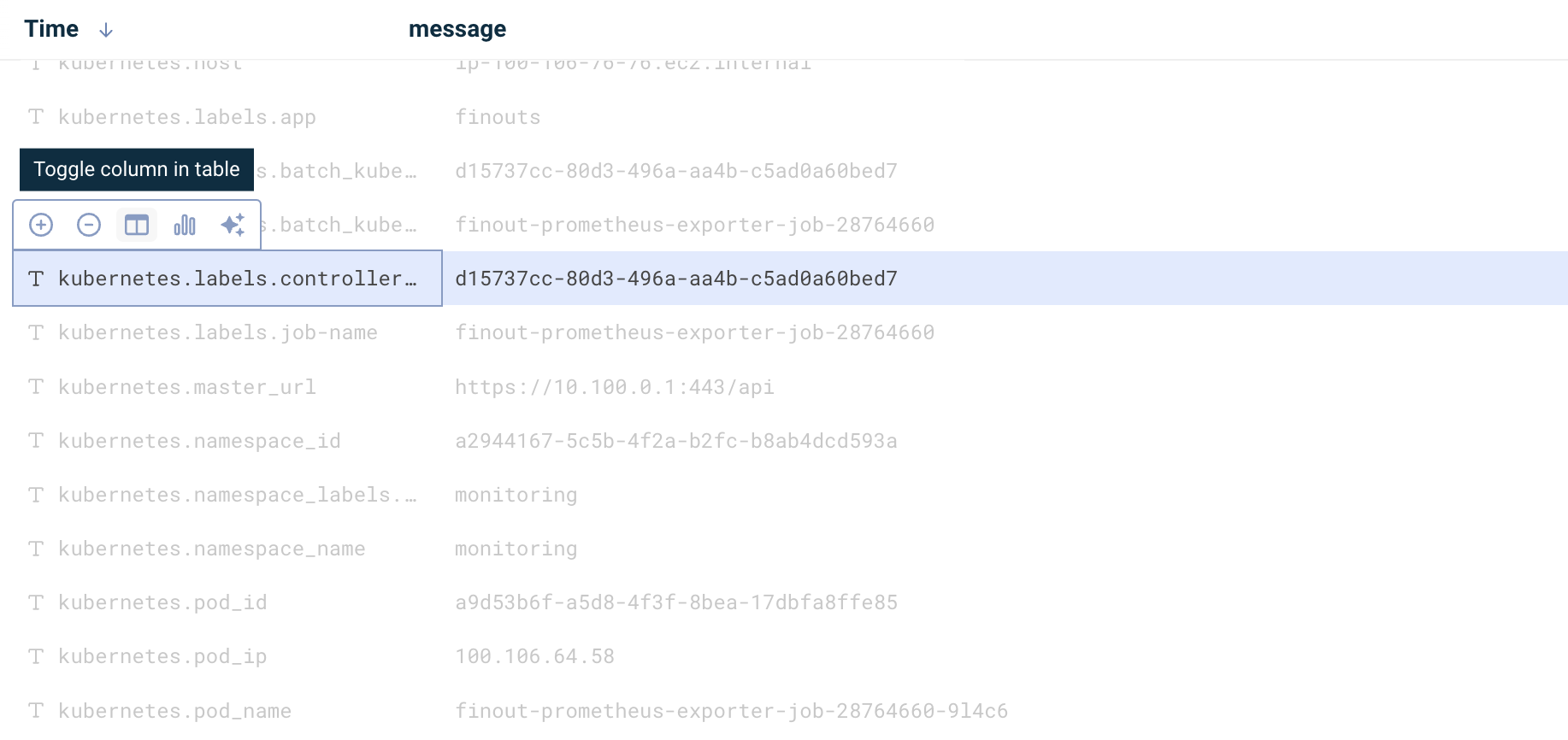

+## Edit log table view

+

+You can add more columns to your log table view.

+

+Find the field you'd like to add, hover over it, and click the **Toggle column in table** button.

+

+

+

+Once added, you can drag it to reposition it, or click the **X** to remove it.

+

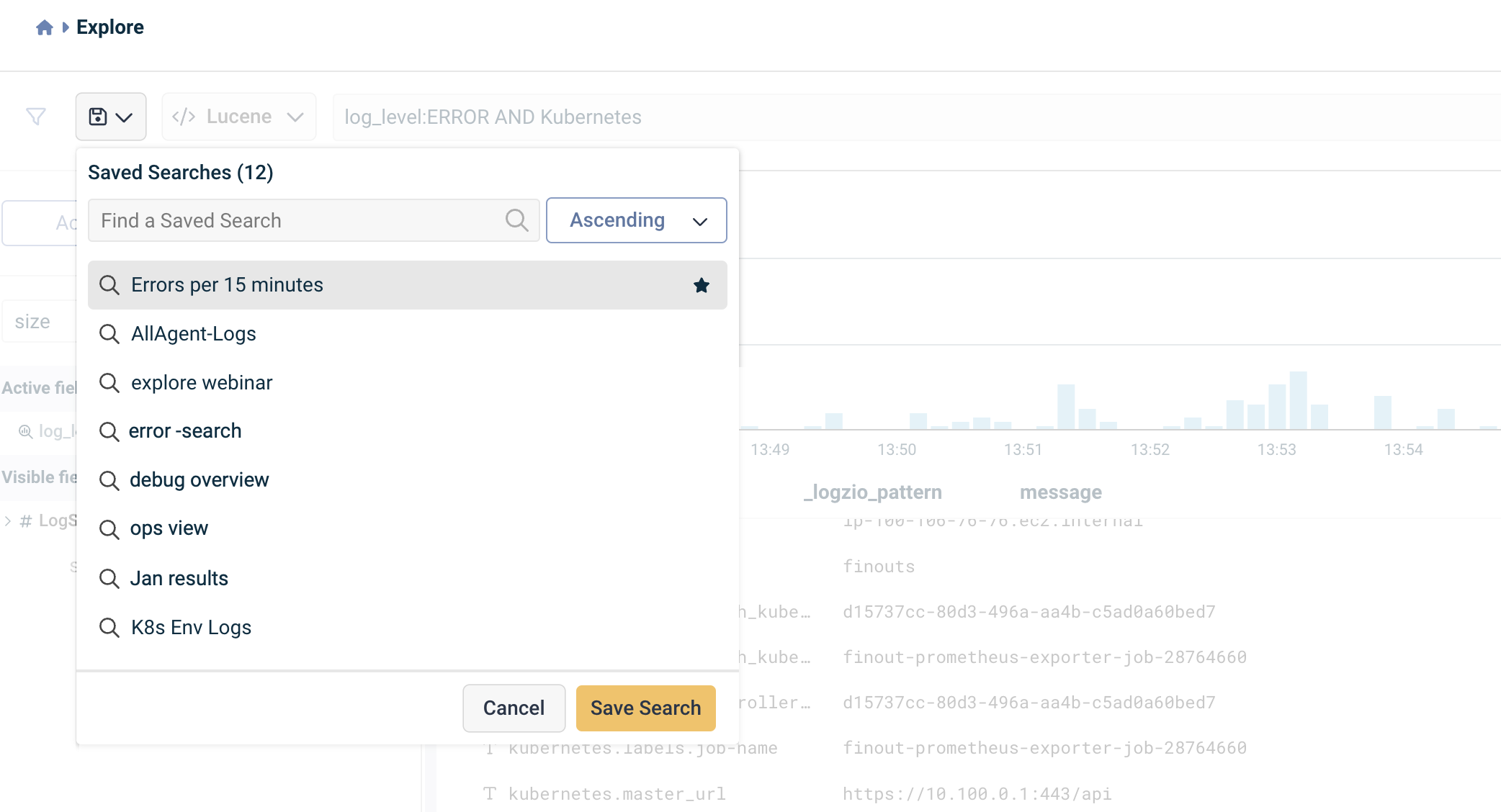

+## Save your query

+

+Save your query to access it quickly whenever you need it. The query itself is saved, while its results update according to the time frame you select.

+

+

+

+

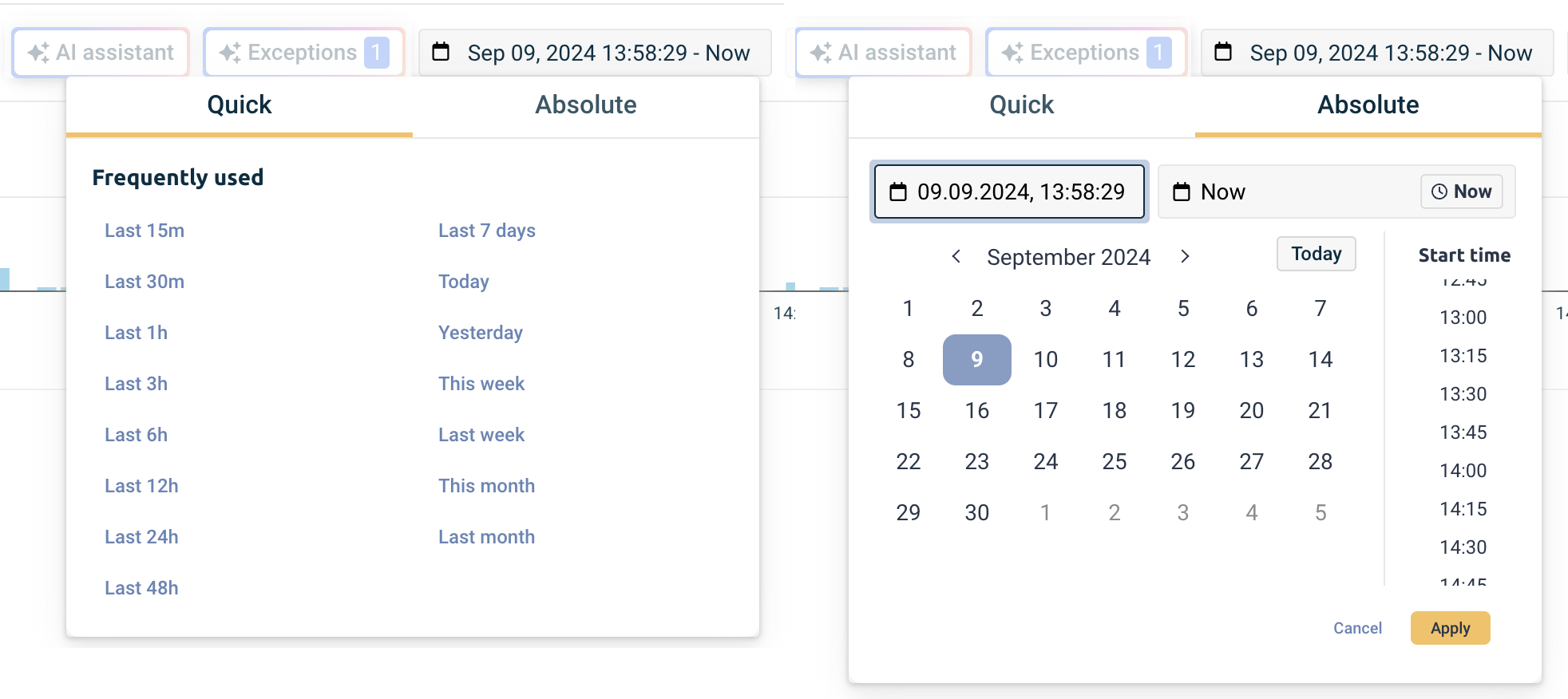

+## Select a time frame

+

+By default, Explore displays results from the last 15 minutes. To change the time frame, click the time picker and choose an option from the quick menu, or switch to the absolute view to select or type a specific time frame.

+

+

\ No newline at end of file

diff --git a/docs/user-guide/quick-start.md b/docs/user-guide/quick-start.md

index 4a4afa89..c5abe904 100644

--- a/docs/user-guide/quick-start.md

+++ b/docs/user-guide/quick-start.md

@@ -8,53 +8,48 @@ keywords: [logs, metrics, traces, logz.io, getting started]

---

+Logz.io is a scalable, end-to-end cloud monitoring service that combines the best open-source tools with a fully managed SaaS platform. It provides unified log, metric, and trace collection with AI/ML-enhanced features for improved troubleshooting, faster response times, and cost management.

-

-Logz.io is an end-to-end cloud monitoring service built for scale. It’s the best-of-breed open source monitoring tools on a fully managed cloud service.

-

-One unified SaaS platform to collect and analyze logs, metrics, and traces, combined with human-powered AI/ML features to improve troubleshooting, reduce response time and help you manage costs.

-

-

-Whether you are a new user or looking for a refresher on Logz.io, you are invited to join one of our engineers for a **[training session on the Logz.io platform](https://logz.io/training/)**!

-

+Whether you’re new to Logz.io or need a refresher, join one of our engineers for a **[training session on the Logz.io platform](https://logz.io/training/)**!

## Send your data to Logz.io

-Once you’ve set up your account, you can start sending your data.

-

-Logz.io provides various tools, integrations, and methods to send data and monitor your Logs, Metrics, Traces, and SIEM.

+After setting up your account, you can start sending your data to Logz.io using various tools, integrations, and methods for monitoring Logs, Metrics, Traces, and SIEM.

-The fastest and most seamless way to send your data is through our **Telemetry Collector**. It lets you easily configure your data-sending process by executing a single line of code, providing a complete observability platform to monitor and improve your logs, metrics, and traces.

+The quickest way to send your data is through our **Telemetry Collector**, which lets you configure data shipping by running a single line of code and provides full observability across your systems.

[**Get started with Telemetry Collector**](https://app.logz.io/#/dashboard/integrations/collectors?tags=Quick%20Setup).

-If you prefer to send your data manually, Logz.io offers numerous methods to do so, and here are some of the more popular ones based on what you’d like to monitor:

+If you prefer a manual approach, Logz.io offers multiple methods tailored to different monitoring needs. Here are some popular options:

|**Logs**|**Metrics**|**Traces**|**Cloud SIEM**|

| --- | --- | --- | --- |

|[Filebeat](https://app.logz.io/#/dashboard/integrations/Filebeat-data)|[.NET](https://app.logz.io/#/dashboard/integrations/dotnet)|[Jaeger installation](https://app.logz.io/#/dashboard/integrations/Jaeger-data)|[Cloudflare](https://app.logz.io/#/dashboard/integrations/Cloudflare-network)

|[S3 Bucket](https://app.logz.io/#/dashboard/integrations/AWS-S3-Bucket)|[Prometheus](https://app.logz.io/#/dashboard/integrations/Prometheus-remote-write)|[OpenTelemetry installation](https://app.logz.io/#/dashboard/integrations/OpenTelemetry-data)|[NGINX](https://app.logz.io/#/dashboard/integrations/Nginx-load)

-|[cURL](https://app.logz.io/#/dashboard/integrations/cURL-data)|[Azure Kubernetes Service](https://app.logz.io/#/dashboard/integrations/Kubernetes)|[Docker](https://app.logz.io/#/dashboard/integrations/Docker)|[Active directory](https://app.logz.io/#/dashboard/integrations/Active-Directory)

-|[JSON uploads](https://app.logz.io/#/dashboard/integrations/JSON)|[Google Kubernetes Engine over OpenTelemetry](https://app.logz.io/#/dashboard/integrations/Kubernetes)|[Kubernetes](https://app.logz.io/#/dashboard/integrations/Kubernetes)|[CloudTrail](https://app.logz.io/#/dashboard/integrations/AWS-CloudTrail)

-|[Docker container](https://app.logz.io/#/dashboard/integrations/Docker)|[Amazon EC2](https://app.logz.io/#/dashboard/integrations/AWS-EC2)|[Go instrumentation](https://app.logz.io/#/dashboard/integrations/GO)|[Auditbeat](https://app.logz.io/#/dashboard/integrations/auditbeat) |

+|[cURL](https://app.logz.io/#/dashboard/integrations/cURL-data)|[Java](https://app.logz.io/#/dashboard/integrations/Java)|[Docker](https://app.logz.io/#/dashboard/integrations/Docker)|[Active Directory](https://app.logz.io/#/dashboard/integrations/Active-Directory)

+|[HTTP uploads](https://app.logz.io/#/dashboard/integrations/HTTP)|[Node.js](https://app.logz.io/#/dashboard/integrations/Node-js)|[Kubernetes](https://app.logz.io/#/dashboard/integrations/Kubernetes)|[CloudTrail](https://app.logz.io/#/dashboard/integrations/AWS-CloudTrail)

+|[Python](https://app.logz.io/#/dashboard/integrations/Python)|[Amazon EC2](https://app.logz.io/#/dashboard/integrations/AWS-EC2)|[Go instrumentation](https://app.logz.io/#/dashboard/integrations/GO)|[Auditbeat](https://app.logz.io/#/dashboard/integrations/auditbeat) |

-Browse the complete list of available shipping methods [here](https://docs.logz.io/docs/category/send-your-data/).

+Browse the complete list of available shipping methods [here](https://app.logz.io/#/dashboard/integrations/collectors).

-To learn more about shipping your data, check out **Shipping Log Data to Logz.io**:

+

+

### Parsing your data

-Logz.io offers automatic parsing [for over 50 log types](https://docs.logz.io/docs/user-guide/data-hub/log-parsing/default-parsing/).

+Logz.io automatically parses [over 50 log types](https://docs.logz.io/docs/user-guide/data-hub/log-parsing/default-parsing/).

+

+If your log type isn't listed, or you want to send custom logs, we offer parsing-as-a-service as part of your subscription. Just reach out to our **Support team** via chat or email us at [help@logz.io](mailto:help@logz.io?subject=Parse%20my%20data) with your request.

-If you can't find your log type, or if you're interested in sending custom logs, Logz.io will parse the logs for you. Parsing-as-a-service is included in your Logz.io subscription; just open a chat with our **Support team** with your request, you can also email us at [help@logz.io](mailto:help@logz.io).

-###### Additional resources

+###### Additional resources

Learn more about sending data to Logz.io:

@@ -63,31 +58,35 @@ Learn more about sending data to Logz.io:

* [Log shipping troubleshooting](https://docs.logz.io/docs/user-guide/log-management/troubleshooting/log-shipping-troubleshooting/)

* [Troubleshooting Filebeat](https://docs.logz.io/docs/user-guide/log-management/troubleshooting/troubleshooting-filebeat/)

-### Explore your data with Logz.io's Log Management platform

+### Navigate your logs with Logz.io Explore

-Logz.io’s **[Log Management](https://app.logz.io/#/dashboard/osd)** is where you can search and query log files. You can use it to identify and analyze your code, and the platform is optimized for debugging and troubleshooting issues as quickly and effectively as possible.

+Logz.io's [Explore](https://app.logz.io/#/dashboard/explore) lets you view, search, and query your data to analyze code, debug issues, and get guidance with its integrated AI Assistant.

-

+

-The following list contains some of the common abilities available in Log Management:

+Key capabilities in Explore include:

-* **[Log Management best practices](https://docs.logz.io/docs/user-guide/log-management/opensearch-dashboards/opensearch-best-practices/)**

+* **[Explore best practices](https://docs.logz.io/docs/user-guide/explore/best-practices)**

* **[Configuring an alert](https://app.logz.io/#/dashboard/alerts/v2019/new)**

+* **[Review and investigate exceptions](https://docs.logz.io/docs/user-guide/explore/exceptions)**

+

+

## Create visualizations with Logz.io's Infrastructure Monitoring

-Monitor your **[Infrastructure Monitoring](https://app.logz.io/#/dashboard/metrics)** to gain a clear picture of the ongoing status of your distributed cloud services at all times.

-Logz.io's Infrastructure Monitoring lets your team curate a handy roster of dashboards to oversee continuous deployment, CI/CD pipelines, prevent outages, manage incidents, and remediate crashes in multi-microservice environments and hybrid infrastructures and complex tech stacks.

+Logz.io's **[Infrastructure Monitoring](https://app.logz.io/#/dashboard/metrics)** provides real-time visibility into the status of your distributed cloud services. It enables your team to create custom dashboards to oversee deployments, manage incidents, and prevent outages in complex environments.

+

-Once you've sent your metrics to Logz.io, you can:

+After sending your metrics to Logz.io, you can:

### Build Metrics visualizations with Logz.io

@@ -97,22 +96,22 @@ Once you've sent your metrics to Logz.io, you can:

You can also:

-* **[Create Metrics related alerts](https://docs.logz.io/docs/user-guide/Infrastructure-monitoring/metrics-alert-manager/)**

-* Work with **[Dashboard variable](https://docs.logz.io/docs/user-guide/infrastructure-monitoring/variables/)** to apply filters on your dashboards and drilldown links

+* **[Create metrics-based alerts](https://docs.logz.io/docs/user-guide/Infrastructure-monitoring/metrics-alert-manager/)**

+* Use **[Dashboard variables](https://docs.logz.io/docs/user-guide/infrastructure-monitoring/variables/)** for filtering and drilldowns

* Mark events on your Metrics dashboard based on data from a logging account, with **[Annotations](https://docs.logz.io/docs/user-guide/infrastructure-monitoring/log-correlations/annotations/)**

-###### Additional resources

+###### Additional resources

* [Sending Prometheus metrics to Logz.io](https://logz.io/learn/sending-prometheus-metrics-to-logzio/)

-## Dive deeper into the code with Logz.io's Distributed Tracing

+## Dive deeper with Logz.io's Distributed Tracing

-Use Logz.io’s **[Distributed Tracing](https://app.logz.io/#/dashboard/jaeger)** to look under the hood at how your microservices behave, and access rich information to improve performance, investigate, and troubleshoot issues.

+Use Logz.io’s **[Distributed Tracing](https://app.logz.io/#/dashboard/jaeger)** to see how your microservices behave, improve performance, and investigate and troubleshoot issues.

-To help you understand how Distributed Tracing can enhance your data, check out the following guides:

+To make the most of Distributed Tracing, check out these guides:

* **[Getting started with Tracing](https://docs.logz.io/docs/user-guide/distributed-tracing/set-up-tracing/get-started-tracing/)**

* **[Sending demo traces with HOTROD](https://docs.logz.io/docs/user-guide/distributed-tracing/set-up-tracing/hotrod/)**

@@ -122,21 +121,21 @@ To help you understand how Distributed Tracing can enhance your data, check out

## Secure your environment with Logz.io's Cloud SIEM

-Logz.io **[Cloud SIEM](https://app.logz.io/#/dashboard/security/summary)** (Security Information and Event Management) aggregates security logs and alerts across distributed environments to allow your team to investigate security incidents from a single observability platform.

+Logz.io **[Cloud SIEM](https://app.logz.io/#/dashboard/security/summary)** (Security Information and Event Management) consolidates security logs and alerts across distributed environments, enabling your team to investigate incidents from a single observability platform.

-Here are some popular Cloud SIEM resources to help you get started:

+Get started with Cloud SIEM:

-* **[Cloud SIEM quick start guide](https://docs.logz.io/docs/category/cloud-siem-quick-start-guide/)**

+* **[Quick start guide](https://docs.logz.io/docs/category/cloud-siem-quick-start-guide/)**

* **[Investigate security events](https://docs.logz.io/docs/user-guide/cloud-siem/investigate-events/security-events/)**

* **[Threat Intelligence feeds](https://docs.logz.io/docs/user-guide/cloud-siem/threat-intelligence/)**

-* **[Configure a security rule](https://docs.logz.io/docs/user-guide/cloud-siem/security-rules/manage-security-rules/)**

+* **[Configure security rules](https://docs.logz.io/docs/user-guide/cloud-siem/security-rules/manage-security-rules/)**

* **[Dashboards and reports](https://docs.logz.io/docs/user-guide/cloud-siem/dashboards/)**

## Manage and optimize your Logz.io account

-Logz.io's account admins can control and edit different elements inside their accounts. These abilities include setting up SSO access, assigning permissions per user, and sharing and managing data.

+Admins can control user permissions, manage accounts, set up SSO, and handle data archiving.

The following list explores the more common use cases for Logz.io's account admins:

@@ -158,9 +157,7 @@ In addition, Logz.io's Data Hub helps you manage and optimize your Logz.io produ

## Get a detailed overview with the Home Dashboard

-Home Dashboard includes your account’s data, logs, metrics, traces, alerts, exceptions, and insights.

-

-You can quickly access the Home Dashboard by clicking on the **Home** icon in the navigation.

+The [Home Dashboard](https://app.logz.io/#/dashboard/home) provides a comprehensive view of your account's data, including logs, metrics, traces, alerts, and insights. Access it by clicking the Home icon in the navigation.

[Learn how to utilize your Home Dashboard](/docs/user-guide/home-dashboard/).

@@ -168,9 +165,9 @@ You can quickly access the Home Dashboard by clicking on the **Home** icon in th

-### 1. Choose elements to view

+### 1. Customize your view

-You can choose which elements you want to view; logs, metrics, traces, number of alerts triggered, and insights gathered within the selected time frame. Click on one of the boxes to add or remove them from your view. The graph and chart will be updated immediately.

+Choose which elements to display, such as logs, metrics, traces, triggered alerts, and insights gathered within the selected time frame. Click a box to add or remove that element from your view; the graph and table update immediately.

For example, clicking on Insights or Exceptions will remove all of them from the graph and the table, allowing you to shift your focus according to your monitoring needs.

@@ -179,26 +176,25 @@ For example, clicking on Insights or Exceptions will remove all of them from the

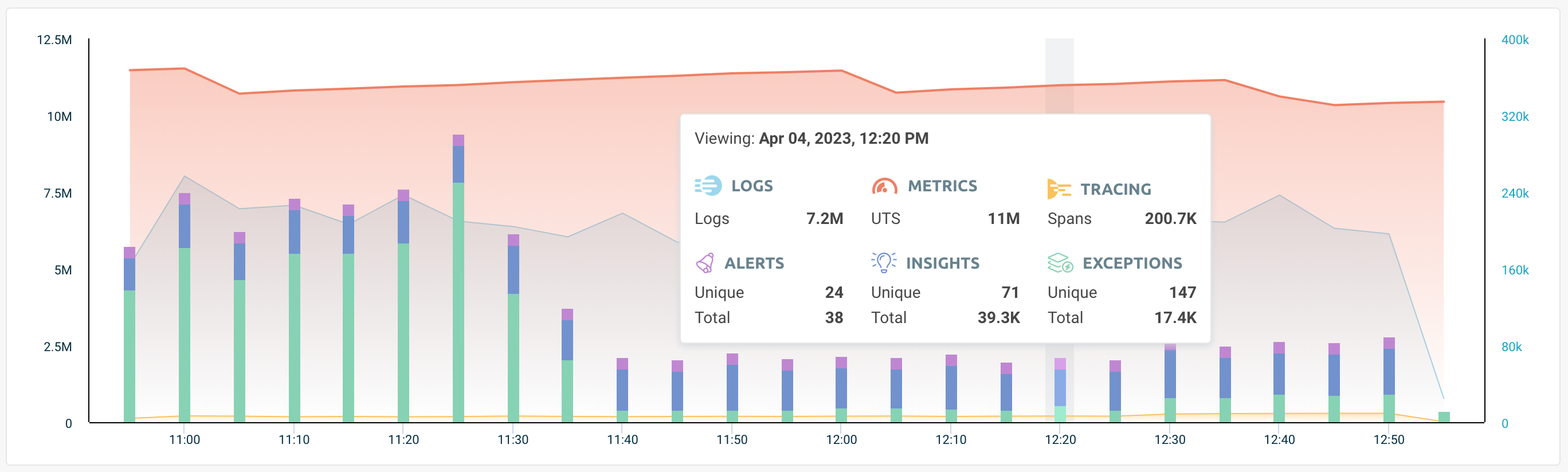

### 2. Graph overview

-This is a visual representation of your account’s data. Hover over the graph to see a breakdown of elements per hour. This view includes the number of overall and unique events.

+This graph visualizes your account's data over time. Hover over it to see an hourly breakdown of overall and unique events.

### 3. Table overview

-At the bottom of the page, you can view your account's data as a table. The data is broken down by events, and you can view each event’s type, severity, number of grouped events, and the date on which the event was last triggered.

+At the bottom of the page, your data is displayed in a table format, breaking down events by type, severity, grouped count, and the date they were last triggered.

-When hovering over one of the events you'll see an **Investigate** button, which opens it in OpenSearch Dashboards, allowing you to drill down further into the issue.

+Hover over an event to reveal the **Investigate** button, which opens the event in OpenSearch Dashboards for deeper analysis.

### 4. Search and access dashboards

-Home Dashboard offers easy access to your logs and metrics dashboards, allowing you to search any available dashboard across your account. Start typing to search throughout your available dashboards, and click on one of the options to open it in a new tab. This view includes which dashboards you've viewed recently, and you can add critical or important dashboards to your favorites for quick access.

+The Home Dashboard provides quick access to your logs and metrics dashboards, enabling you to search across all available dashboards in your account. Simply start typing to find a specific dashboard, and click to open it in a new tab. The view also shows recently accessed dashboards, and you can mark essential ones as favorites for easy access.

### 5. Set your time frame

-The top of the page indicates when the data was last updated, helping you keep up to date with the data.

-

-You can change the time range to view data from the last 24 hours and up until from the last 2 hours. Once you choose a different time frame, Home Dashboard will update to reflect the relevant data.

+The top of the page shows when the data was last updated, helping you stay current.

+You can adjust the time range to view data from the last 2 to 24 hours. The Home Dashboard will then refresh to display data for the selected period.