-

Notifications

You must be signed in to change notification settings - Fork 69

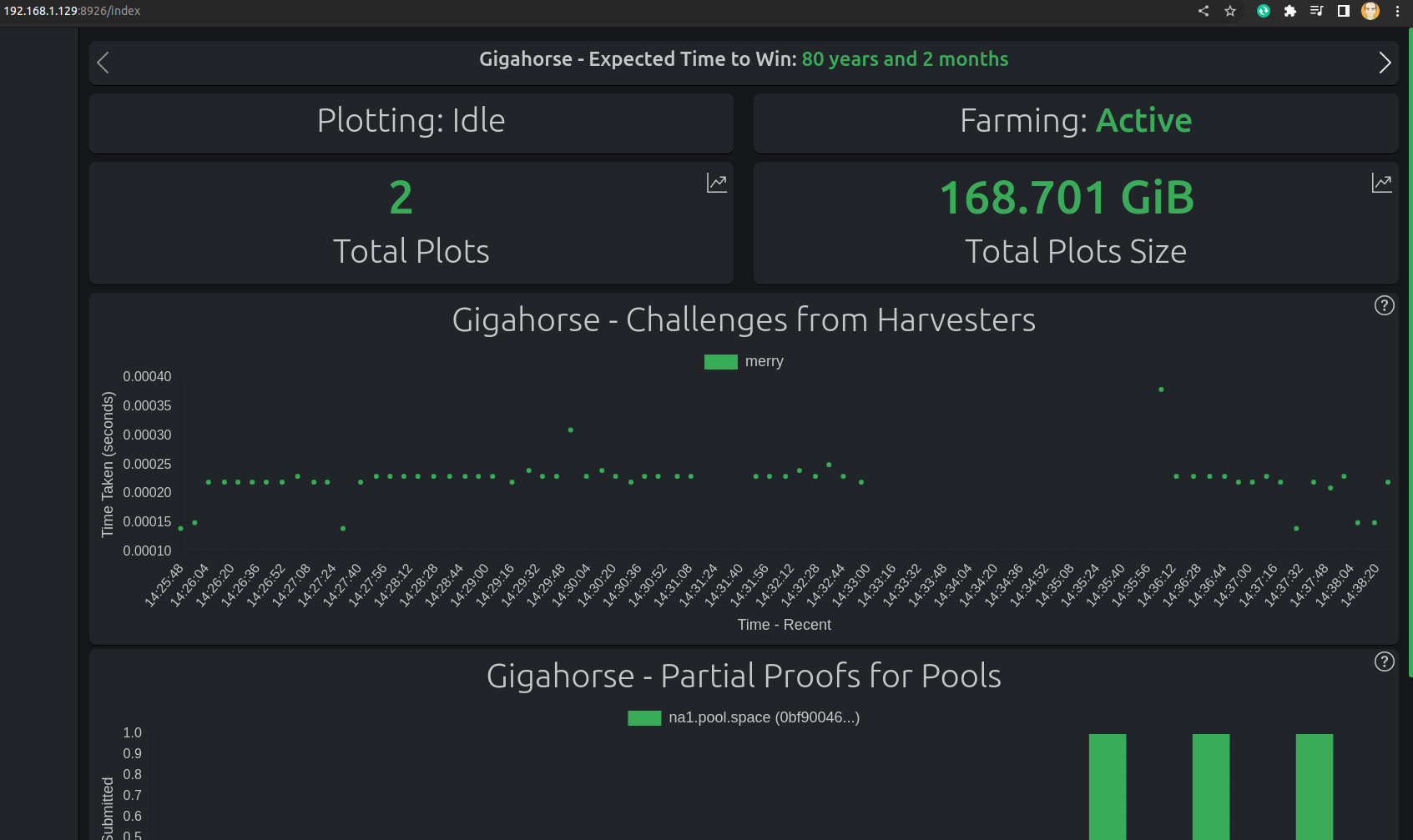

Gigahorse

Start a Discussion or join our Discord for support and to help out.

Madmax's closed-source Gigahorse binaries can farm plots compressed with his proprietary plotter. Gigahorse is available as a pseudo-blockchain within Machinaris: you can create these special plots (which are not cross-compatible with standard Chia) and farm them with an Nvidia GPU passed through to the Machinaris Docker container.

NOTE: The GPU VRAM requirements are cumulative, so if you are farming and plotting on the same machine, you will likely need multiple GPUs.

The Gigahorse plotter supports different modes:

- diskplot - requires only 220 GB of SSD space and minimal RAM.

- gpuplot - requires an Nvidia GPU with 8 GB+ VRAM and either 256 GB, 128 GB, or 64 GB of RAM, depending on which SSDs you provide as temporary storage.

Here's a good sample config to begin plotting with:

```yaml
# Learn more about Plotman at https://github.com/ericaltendorf/plotman
# https://github.com/ericaltendorf/plotman/wiki/Configuration#versions
version: [2]

logging:
  # DO NOT CHANGE THESE IN-CONTAINER PATHS USED BY MACHINARIS!
  plots: /root/.chia/plotman/logs
  transfers: /root/.chia/plotman/logs/archiving
  application: /root/.chia/plotman/logs/plotman.log

user_interface:
  use_stty_size: False

commands:
  interactive:
    autostart_plotting: False
    autostart_archiving: False

# Where to plot and log.
directories:
  # One or more directories to use as tmp dirs for plotting. The
  # scheduler will use all of them and distribute jobs among them.
  # It assumes that IO is independent for each one (i.e., that each
  # one is on a different physical device).
  #
  # If multiple directories share a common prefix, reports will
  # abbreviate and show just the uniquely identifying suffix.
  # REMEMBER ALL PATHS ARE IN-CONTAINER, THEY MAP TO HOST PATHS
  tmp:
    - /plotting

  # Optional: tmp2 directory. If specified, will be passed to the madmax plotter as the '-2' param.
  # If gpu-plotting, this will enable 128 GB partial RAM mode onto this SSD path
  # tmp2: /plotting2

  # Optional: tmp3 directory. If specified, will be passed to the madmax plotter as the '-3' param.
  # If gpu-plotting, this will enable 64 GB partial RAM mode onto this SSD path
  # tmp3: /plotting3

  # One or more directories; the scheduler will use all of them.
  # These again are presumed to be on independent physical devices,
  # so writes (plot jobs) and reads (archivals) can be scheduled
  # to minimize IO contention.
  # REMEMBER ALL PATHS ARE IN-CONTAINER, THEY MAP TO HOST PATHS
  dst:
    - /plots1

# See: https://github.com/guydavis/machinaris/wiki/Plotman#archiving
#archiving:
#  target: rsyncd
#  env:
#    site_root: /mnt/disks
#    user: root
#    host: aragorn
#    rsync_port: 12000
#    site: disks

# Plotting scheduling parameters
scheduling:
  # Run a job on a particular temp dir only if the number of existing jobs
  # before tmpdir_stagger_phase_major tmpdir_stagger_phase_minor
  # is less than tmpdir_stagger_phase_limit.
  # Phase major corresponds to the plot phase, phase minor corresponds to
  # the table or table pair in sequence, phase limit corresponds to
  # the number of plots allowed before [phase major, phase minor]
  # Five is the final move stage of madmax
  tmpdir_stagger_phase_major: 5
  tmpdir_stagger_phase_minor: 0
  # Optional: default is 1
  tmpdir_stagger_phase_limit: 1

  # Don't run more than this many jobs at a time on a single temp dir.
  # Increase for staggered plotting by chia, leave at 2 for madmax sequential plotting
  tmpdir_max_jobs: 2

  # Don't run more than this many jobs at a time in total.
  # Increase for staggered plotting by chia, leave at 2 for madmax sequential plotting
  global_max_jobs: 2

  # Don't run any jobs (across all temp dirs) more often than this, in minutes.
  global_stagger_m: 30

  # How often the daemon wakes to consider starting a new plot job, in seconds.
  polling_time_s: 20

  # Optional: Allows overriding some characteristics of certain tmp
  # directories. This contains a map of tmp directory names to
  # attributes. If a tmp directory and attribute is not listed here,
  # it uses the default attribute setting from the main configuration.
  #
  # Currently support override parameters:
  #   - tmpdir_max_jobs
  #tmp_overrides:
  #  # In this example, /plotting3 is larger than the other tmp
  #  # dirs and it can hold more plots than the default.
  #  /plotting3:
  #    tmpdir_max_jobs: 5

# Configure the plotter. See: https://github.com/guydavis/machinaris/wiki/Chives#plotting-page
plotting:
  # See Keys page, for 'Farmer public key' value
  farmer_pk: REPLACE_WITH_THE_REAL_VALUE
  # See Keys page, for 'Pool public key' value
  pool_pk: REPLACE_WITH_THE_REAL_VALUE
  type: madmax
  madmax:
    # Gigahorse plotter: https://github.com/guydavis/machinaris/wiki/Gigahorse
    k: 32                   # The default size for Chia plot is k32
    n: 1                    # The number of plots to create in a single run (process)
    mode: gpuplot           # Either enable diskplot or gpuplot.
    network_port: 8444      # Use 8444 for Chia
    compression: 7          # Compression level (default = 1, min = 1, max = 20)
    gpu_device: 0           # CUDA device (default = 0)
    gpu_ndevices: 1         # Number of CUDA devices (default = 1)
    gpu_streams: 4          # Number of parallel streams (default = 4, must be >= 2)
    #gpu_shared_memory: 16  # Max shared / pinned memory in GiB (default = unlimited)
```
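For example, on a gpuplot host with 128 GB of RAM, the directories section of the config could enable the 128 GB partial RAM mode by uncommenting tmp2. This is a sketch assuming a second SSD is volume-mounted in-container at /plotting2 (the same hypothetical path as the commented example); with neither tmp2 nor tmp3 set, gpuplot needs the full 256 GB of RAM, and the tmp3 line likewise controls the 64 GB mode.

```yaml
directories:
  tmp:
    - /plotting
  # Assumption: a second SSD, volume-mounted in-container at /plotting2.
  # Passed to the plotter as '-2', enabling 128 GB partial RAM mode when gpu-plotting.
  tmp2: /plotting2
  dst:
    - /plots1
```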

As with Chives and MMX, I strongly recommend keeping your Gigahorse plots in a separate folder on your HOST OS. For example, if I have a drive mounted at /mnt/disks/plots7:

- I place Chia plots directly within /mnt/disks/plots7/
- I place Chives plots within /mnt/disks/plots7/chives/
- I place Gigahorse plots within /mnt/disks/plots7/gigahorse/
- I place MMX plots within /mnt/disks/plots7/mmx/

This is done with volume mounts that differ for each of Chia, Chives, Gigahorse, and MMX. Each container simply reads and writes plots at /plots7 in-container.

While Madmax designed his Gigahorse software to overlay/replace your existing Chia installation, I chose to keep the Docker images for the official Chia Network Inc. software and Madmax's Gigahorse software separate. This is with an eye to a future where incompatibilities may arise, or support and development for Gigahorse may stop altogether. At that time, Chia will still be around.

By keeping the two images separate, and requiring a separate fullnode for Gigahorse as with the other forks, you can always stop running the Gigahorse container in the future with no impact on the main Chia container, an important safeguard given the proprietary nature of Madmax's compressed plots.
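A minimal docker-compose.yml sketch of those volume mounts for the Chia and Gigahorse containers, using the example drive above. The service names and image names are my assumptions for illustration; the point is that each container mounts a different host folder at the same in-container path, /plots7.

```yaml
services:
  machinaris:                # assumed service name for the Chia container
    image: ghcr.io/guydavis/machinaris:latest
    volumes:
      # Chia plots sit directly in the drive's root folder
      - /mnt/disks/plots7:/plots7
  machinaris-gigahorse:      # assumed service name for the Gigahorse container
    image: ghcr.io/guydavis/machinaris-gigahorse:latest
    volumes:
      # Only the gigahorse/ subfolder is mounted into this container
      - /mnt/disks/plots7/gigahorse:/plots7
```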

As shown in Madmax's comparison, you need not use a GPU until higher levels of plot compression.

Passing an Nvidia GPU into the Machinaris Docker container works on Linux and Unraid, and usually on Windows too. The basic GPU config is generated automatically for a fresh install at machinaris.app, and also for workers (via the New Worker wizard on the Workers page). For additional configuration details, see the GPUs page.

You can pass multiple GPUs through to the Docker container. By default (CHIAPOS_MAX_CUDA_DEVICES=0), Gigahorse farming uses all of them, which can conflict with plotting. To have Gigahorse farm with only the fastest GPU, leaving the others free for plotting, set the environment variable CHIAPOS_MAX_CUDA_DEVICES=1. Thanks to @brummerte for this tip on the Discord.

It seems that the Gigahorse harvester, which connects to a Gigahorse fullnode that lacks a running wallet, may not be able to retrieve your pool information on startup the way a regular Chia harvester would. To work around this with Gigahorse harvesters, manually copy the relevant pool_list section from the Settings | Farming page of the Gigahorse fullnode over to each Gigahorse harvester. Thanks to @Gnomuz for this tip on the Discord.
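If you write the docker-compose.yml by hand instead, one generic way to hand an Nvidia GPU to a container is Docker Compose's device reservation syntax, sketched below. This assumes the NVIDIA Container Toolkit is installed on the host; the service name and image tag are assumptions, and the config generated by machinaris.app may use a different but equivalent form.

```yaml
services:
  machinaris-gigahorse:      # assumed service name
    image: ghcr.io/guydavis/machinaris-gigahorse:latest
    deploy:
      resources:
        reservations:
          devices:
            # Reserve all Nvidia GPUs on the host for this container
            - driver: nvidia
              count: all
              capabilities: [gpu]
```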
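A sketch of where that variable goes in the Gigahorse container's docker-compose.yml (service name assumed; other keys omitted):

```yaml
services:
  machinaris-gigahorse:      # assumed service name
    environment:
      # Farm with a single GPU; the default of 0 uses all passed-through GPUs
      - CHIAPOS_MAX_CUDA_DEVICES=1
```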
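For orientation, a sketch of roughly what that section looks like, assuming Gigahorse keeps Chia's config.yaml layout where pool_list sits under the top-level pool key. Every value below is a placeholder; copy the real entries verbatim from your fullnode rather than typing them by hand.

```yaml
pool:
  pool_list:
    # One entry per plot NFT; all values are placeholders
    - launcher_id: 0xLAUNCHER_ID_PLACEHOLDER
      owner_public_key: 0xOWNER_PUBLIC_KEY_PLACEHOLDER
      p2_singleton_puzzle_hash: 0xP2_SINGLETON_PUZZLE_HASH_PLACEHOLDER
      payout_instructions: PAYOUT_INSTRUCTIONS_PLACEHOLDER
      pool_url: https://pool.example.com
      target_puzzle_hash: 0xTARGET_PUZZLE_HASH_PLACEHOLDER
```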

Gigahorse allows a single server to host the GPU that multiple Gigahorse systems use to farm/harvest compressed plots. To enable this mode within Machinaris, set the environment variable gigahorse_recompute_server=true in the docker-compose.yml of the Gigahorse fullnode/farmer system that has the GPU. In that same docker-compose.yml, expose port 11989 so that Gigahorse harvesters can contact this recompute server.

Then, in the docker-compose.yml of each Gigahorse harvester (which has compressed plots but no GPU), set this environment variable:
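A sketch of the relevant keys on the fullnode/farmer side, assuming a service named machinaris-gigahorse; image, volumes, and GPU passthrough are omitted for brevity.

```yaml
services:
  machinaris-gigahorse:      # assumed service name
    environment:
      - mode=fullnode
      # Serve this system's GPU to Gigahorse harvesters on the LAN
      - gigahorse_recompute_server=true
    ports:
      # Recompute server port that harvesters connect to
      - "11989:11989"
```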

CHIAPOS_RECOMPUTE_HOST=1.2.3.4

Replace the placeholder IP address above with the LAN IP of your Gigahorse fullnode/farmer. This will tell the Gigahorse harvester to connect to the Gigahorse recompute server on startup to use its GPU for farming.
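A sketch of the harvester side, again assuming a service named machinaris-gigahorse and omitting the other harvester settings:

```yaml
services:
  machinaris-gigahorse:      # assumed service name
    environment:
      # LAN IP of the Gigahorse fullnode/farmer running the recompute server (placeholder)
      - CHIAPOS_RECOMPUTE_HOST=1.2.3.4
```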

By default, a Gigahorse fullnode will attempt to farm any completed plots, even while you are still plotting new ones. This can exhaust your GPU's VRAM as both processes try to use the card. First, try decreasing gpu_streams from 4 to 2 in the Settings | Plotting config.

If this VRAM issue persists, set mode=plotter in the docker-compose.yml of the Machinaris-Gigahorse container so that you plot exclusively. Once you are done plotting, switch back to mode=fullnode to farm the Gigahorse plots.
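In the sample Plotman config above, that change is a single key under plotting.madmax (other keys unchanged and omitted here):

```yaml
plotting:
  type: madmax
  madmax:
    mode: gpuplot
    gpu_streams: 2  # reduced from the default of 4; must stay >= 2
```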
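A sketch of that switch in the Gigahorse container's docker-compose.yml, assuming a service named machinaris-gigahorse; the container needs to be recreated for the new mode to take effect.

```yaml
services:
  machinaris-gigahorse:      # assumed service name
    environment:
      # Plot only, so farming does not compete for VRAM.
      # Change back to mode=fullnode once plotting is finished.
      - mode=plotter
```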

CHIA NETWORK INC, CHIA™, the CHIA BLOCKCHAIN™, the CHIA PROTOCOL™, CHIALISP™ and the “leaf Logo” (including the leaf logo alone when it refers to or indicates Chia), are trademarks or registered trademarks of Chia Network, Inc., a Delaware corporation. There is no affiliation between the Machinaris project and the main Chia Network project.