+## Summary

+

+This package performs object detection in the ROS2 environment. There are certainly more sophisticated object detection frameworks out there, e.g. [NVIDIA Isaac](https://github.com/NVIDIA-ISAAC-ROS/isaac_ros_object_detection).

+

+The chief virtue of this package is the simplicity of the codebase and use of standardised ROS2 messages, with the goal of being simple to understand and to use.

+

+## Packages

+

+coco_detector: package containing coco_detector_node for listening on ROS2 topic /image and publishing ROS2 Detection2DArray message on topic /detected_objects. Also (by default) publishes Image (with labels and bounding boxes) message on topic /annotated_image. The object detection is performed by PyTorch using MobileNet.

+

+### Tested Hardware

+

+Dell Precision Tower 2210, NVIDIA RTX2070 (GPU is optional)

+

+### Tested Software

+

+Ubuntu 22.04, ROS2 Humble (RoboStack), PyTorch 2.1.2, CUDA 12.2 (CUDA is only needed if you require GPU)

+

+## Installation - historical reference only

+

+NOTE - outdated - not relevant - for historical reference only.

+

+Follow the [RoboStack](https://robostack.github.io/GettingStarted.html) installation instructions to install ROS2.

+

+(Ensure you have also followed the "Installation tools for local development" step in those instructions.)

+

+Follow the [PyTorch](https://pytorch.org/) installation instructions to install PyTorch (selecting the conda option).

+

+```

+mamba activate ros2 # (use the name here you decided to call this conda environment)

+mamba install ros-humble-image-tools

+mamba install ros-humble-vision-msgs

+cd ~

+mkdir -p ros2_ws/src

+cd ros2_ws

+git -C src clone https://github.com/jfrancis71/ros2_coco_detector.git

+colcon build --symlink-install

+```

+You may receive a warning on the colcon build step: "SetuptoolsDeprecationWarning: setup.py install is deprecated". This can be ignored.

+

+The above steps assume a RoboStack mamba/conda ROS2 install. If you use another installation process, replace the RoboStack image-tools and vision-msgs install steps with whichever commands are appropriate for your environment. The image-tools package is not required for coco_detector; it is only used in the steps below for convenient demonstration. However, vision-msgs is required (this is where the ROS2 Detection2DArray message is defined).

+

+## Activate Environment

+

+```

+mamba activate ros2 # (use the name here you decided to call this conda environment)

+cd ~/ros2_ws

+source ./install/setup.bash

+```

+

+## Verify Install

+

+Launch a camera stream:

+```

+ros2 run image_tools cam2image

+```

+

+In another terminal, enter:

+```

+ros2 run coco_detector coco_detector_node

+```

+There will be a short delay the first time the node is run for PyTorch TorchVision to download the neural network. You should see a downloading progress bar. This network is then cached for subsequent runs.

+

+In another terminal, view the detection messages:

+```

+ros2 topic echo /detected_objects

+```

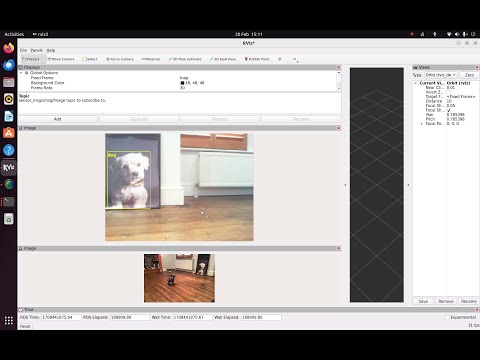

+To view the image stream annotated with the labels and bounding boxes:

+```

+ros2 run image_tools showimage --ros-args -r /image:=/annotated_image

+```

+

+Example Use:

+

+```

+ros2 run coco_detector coco_detector_node --ros-args -p publish_annotated_image:=False -p device:=cuda -p detection_threshold:=0.7

+```

+

+This runs the COCO detector on the default CUDA device (device=cpu by default) without publishing the annotated image (publish_annotated_image is True by default), so only Detection2DArray messages are published on topic /detected_objects. It also sets detection_threshold to 0.7 (it is 0.9 by default). The detection_threshold should be between 0.0 and 1.0; the higher this number, the more detections are rejected. If you see too many false detections, try increasing it.
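The thresholding rule described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the node's code: `Candidate` and `filter_by_threshold` are hypothetical names and the scores are made up. It mirrors the node's rule that a detection is kept when its score is greater than or equal to `detection_threshold`.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    """Hypothetical stand-in for one raw model output (label plus confidence)."""
    label: str
    score: float


def filter_by_threshold(candidates, detection_threshold=0.9):
    """Keep candidates whose score is at least detection_threshold."""
    if not 0.0 <= detection_threshold <= 1.0:
        raise ValueError("detection_threshold should be between 0.0 and 1.0")
    return [c for c in candidates if c.score >= detection_threshold]


# Made-up scores, purely for illustration.
candidates = [
    Candidate("dog", 0.95),
    Candidate("cat", 0.75),
    Candidate("chair", 0.40),
]

# Default threshold (0.9): only the most confident candidate survives.
print([c.label for c in filter_by_threshold(candidates)])       # ['dog']
# Lower threshold (0.7): more candidates pass, including weaker ones.
print([c.label for c in filter_by_threshold(candidates, 0.7)])  # ['dog', 'cat']
```

Lowering the threshold admits more detections at the cost of more false positives; raising it does the opposite.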

+

+

+## Suggested Setup For Mobile Robotics

+

+These suggestions are for a Raspberry Pi 3 Model B+ running ROS2.

+

+As of 16/02/2024, the PyTorch Conda install does not appear to be working for Raspberry Pi 3 Model B+.

+There may be other installation options, but I have not explored them.

+

+As an alternative, if you have a ROS2 workstation connected to the same network, I suggest publishing the compressed image on the Raspberry Pi and running the COCO detector on the workstation.

+

+The setup below uses the ROS2 compressed image transport on both the Raspberry Pi and the workstation. If using RoboStack ROS2 Humble, you can install it on each with:

+

+```mamba install ros-humble-compressed-image-transport```

+

+Raspberry Pi (run each command in a separate terminal):

+

+```ros2 run image_tools cam2image --ros-args -r /image:=/charlie/image```

+

+```ros2 run image_transport republish raw compressed --ros-args -r in:=/charlie/image -r out/compressed:=/charlie/compressed```

+

+Workstation (run each command in a separate terminal):

+

+```ros2 run image_transport republish compressed raw --ros-args -r /in/compressed:=/charlie/compressed -r /out:=/server/image```

+

+```ros2 run coco_detector coco_detector_node --ros-args -r /image:=/server/image```

+

+I have remapped the topic names for clarity and to keep the image topics on the different machines separate. Compression is not necessary, but I get poor performance on my network without it.

+

+Note you could use launch files (for convenience) to run the above nodes. I do not cover that here as it will be specific to your setup.

+

+## Notes

+

+The ROS2 documentation suggests that ObjectHypothesis.class_id should be an identifier that the client then looks up in a database. This seems more complex than I need, so this implementation just places the class label here directly, e.g. class_id = "dog". See the ROS2 Vision Msgs Github link in the external links section below for more details.
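A consumer can therefore read the label straight from the message with no lookup step. The sketch below assumes the field layout the node fills in (`detections[i].results[j].hypothesis.class_id` and `.score`). `summarize_detections` is a hypothetical helper, and the message is faked with `SimpleNamespace` so the example runs without ROS2.

```python
from types import SimpleNamespace


def summarize_detections(detection_array):
    """Return (class_id, score) pairs from a Detection2DArray-like message."""
    pairs = []
    for detection in detection_array.detections:
        for result in detection.results:
            hypothesis = result.hypothesis
            # class_id already holds the human-readable label, e.g. "dog".
            pairs.append((hypothesis.class_id, hypothesis.score))
    return pairs


def fake_detection(class_id, score):
    """Build a stand-in with the same attribute names as a Detection2D message."""
    hypothesis = SimpleNamespace(class_id=class_id, score=score)
    return SimpleNamespace(results=[SimpleNamespace(hypothesis=hypothesis)])


# Illustrative values only; a real message would arrive on /detected_objects.
fake_msg = SimpleNamespace(
    detections=[fake_detection("dog", 0.97), fake_detection("person", 0.93)]
)

for class_id, score in summarize_detections(fake_msg):
    print(f"{class_id}: {score:.2f}")
```

In a real subscriber the same function could be called on the message passed to the callback for /detected_objects.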

+

+## External Links

+

+[COCO Dataset Homepage](https://cocodataset.org/#home)

+

+[Microsoft COCO: Common Objects in Context](http://arxiv.org/abs/1405.0312)

+

+[PyTorch MobileNet](https://pytorch.org/vision/stable/models/generated/torchvision.models.detection.fasterrcnn_mobilenet_v3_large_320_fpn.html)

+

+[MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications](https://arxiv.org/abs/1704.04861)

+

+[ROS2 Vision Msgs Github repo](https://github.com/ros-perception/vision_msgs)

diff --git a/coco_detector/coco_detector/__init__.py b/coco_detector/coco_detector/__init__.py

new file mode 100644

index 0000000..e69de29

diff --git a/coco_detector/coco_detector/coco_detector_node.py b/coco_detector/coco_detector/coco_detector_node.py

new file mode 100644

index 0000000..64934a2

--- /dev/null

+++ b/coco_detector/coco_detector/coco_detector_node.py

@@ -0,0 +1,120 @@

+"""Detects COCO objects in image and publishes in ROS2.

+

+Subscribes to /image and publishes Detection2DArray message on topic /detected_objects.

+Also publishes (by default) annotated image with bounding boxes on /annotated_image.

+Uses PyTorch and FasterRCNN_MobileNet model from torchvision.

+Bounding Boxes use image convention, ie center.y = 0 means top of image.

+"""

+

+import collections

+import numpy as np

+import rclpy

+from rclpy.node import Node

+from sensor_msgs.msg import Image

+from vision_msgs.msg import BoundingBox2D, ObjectHypothesis, ObjectHypothesisWithPose

+from vision_msgs.msg import Detection2D, Detection2DArray

+from cv_bridge import CvBridge

+import torch

+from torchvision.models import detection as detection_model

+from torchvision.utils import draw_bounding_boxes

+

+Detection = collections.namedtuple("Detection", "label, bbox, score")

+

+class CocoDetectorNode(Node):
+    """Detects COCO objects in image and publishes on ROS2.
+
+    Subscribes to /image and publishes Detection2DArray on /detected_objects.
+    Also publishes augmented image with bounding boxes on /annotated_image.
+    """
+
+    # pylint: disable=R0902 disable too many instance variables warning for this class
+    def __init__(self):
+        super().__init__("coco_detector_node")
+        self.declare_parameter('device', 'cpu')
+        self.declare_parameter('detection_threshold', 0.9)
+        self.declare_parameter('publish_annotated_image', True)
+        self.device = self.get_parameter('device').get_parameter_value().string_value
+        self.detection_threshold = \
+            self.get_parameter('detection_threshold').get_parameter_value().double_value
+        self.subscription = self.create_subscription(
+            Image,
+            "/go2_camera/color/image",
+            self.listener_callback,
+            10)
+        self.detected_objects_publisher = \
+            self.create_publisher(Detection2DArray, "detected_objects", 10)
+        if self.get_parameter('publish_annotated_image').get_parameter_value().bool_value:
+            self.annotated_image_publisher = \
+                self.create_publisher(Image, "annotated_image", 10)
+        else:
+            self.annotated_image_publisher = None
+        self.bridge = CvBridge()
+        self.model = detection_model.fasterrcnn_mobilenet_v3_large_320_fpn(
+            weights="FasterRCNN_MobileNet_V3_Large_320_FPN_Weights.COCO_V1",
+            progress=True,
+            weights_backbone="MobileNet_V3_Large_Weights.IMAGENET1K_V1").to(self.device)
+        self.class_labels = \
+            detection_model.FasterRCNN_MobileNet_V3_Large_320_FPN_Weights.DEFAULT.meta["categories"]
+        self.model.eval()
+        self.get_logger().info("Node has started.")
+
+    def mobilenet_to_ros2(self, detection, header):
+        """Converts a Detection tuple(label, bbox, score) to a ROS2 Detection2D message."""
+
+        detection2d = Detection2D()
+        detection2d.header = header
+        object_hypothesis_with_pose = ObjectHypothesisWithPose()
+        object_hypothesis = ObjectHypothesis()
+        object_hypothesis.class_id = self.class_labels[detection.label]
+        object_hypothesis.score = detection.score.detach().item()
+        object_hypothesis_with_pose.hypothesis = object_hypothesis
+        detection2d.results.append(object_hypothesis_with_pose)
+        bounding_box = BoundingBox2D()
+        bounding_box.center.position.x = float((detection.bbox[0] + detection.bbox[2]) / 2)
+        bounding_box.center.position.y = float((detection.bbox[1] + detection.bbox[3]) / 2)
+        bounding_box.center.theta = 0.0
+        bounding_box.size_x = float(2 * (bounding_box.center.position.x - detection.bbox[0]))
+        bounding_box.size_y = float(2 * (bounding_box.center.position.y - detection.bbox[1]))
+        detection2d.bbox = bounding_box
+        return detection2d
+
+    def publish_annotated_image(self, filtered_detections, header, image):
+        """Draws the bounding boxes on the image and publishes to /annotated_image"""
+
+        if len(filtered_detections) > 0:
+            pred_boxes = torch.stack([detection.bbox for detection in filtered_detections])
+            pred_labels = [self.class_labels[detection.label] for detection in filtered_detections]
+            annotated_image = draw_bounding_boxes(torch.tensor(image), pred_boxes,
+                                                  pred_labels, colors="yellow")
+        else:
+            annotated_image = torch.tensor(image)
+        ros2_image_msg = self.bridge.cv2_to_imgmsg(annotated_image.numpy().transpose(1, 2, 0),
+                                                   encoding="rgb8")
+        ros2_image_msg.header = header
+        self.annotated_image_publisher.publish(ros2_image_msg)
+
+    def listener_callback(self, msg):
+        """Reads image and publishes on /detected_objects and /annotated_image."""
+        cv_image = self.bridge.imgmsg_to_cv2(msg, desired_encoding="rgb8")
+        image = cv_image.copy().transpose((2, 0, 1))
+        batch_image = np.expand_dims(image, axis=0)
+        tensor_image = torch.tensor(batch_image/255.0, dtype=torch.float, device=self.device)
+        mobilenet_detections = self.model(tensor_image)[0]  # pylint: disable=E1102 disable not callable warning
+        filtered_detections = [Detection(label_id, box, score) for label_id, box, score in
+                               zip(mobilenet_detections["labels"],
+                                   mobilenet_detections["boxes"],
+                                   mobilenet_detections["scores"]) if score >= self.detection_threshold]
+        detection_array = Detection2DArray()
+        detection_array.header = msg.header
+        detection_array.detections = \
+            [self.mobilenet_to_ros2(detection, msg.header) for detection in filtered_detections]
+        self.detected_objects_publisher.publish(detection_array)
+        if self.annotated_image_publisher is not None:
+            self.publish_annotated_image(filtered_detections, msg.header, image)

+

+

+rclpy.init()

+coco_detector_node = CocoDetectorNode()

+rclpy.spin(coco_detector_node)

+coco_detector_node.destroy_node()

+rclpy.shutdown()
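The bounding-box arithmetic in `mobilenet_to_ros2` can be checked in isolation. The sketch below assumes torchvision's corner format `(x_min, y_min, x_max, y_max)` for detection boxes and uses plain floats instead of tensors. The function names are hypothetical, and the inverse conversion is an addition for consumers that need corners back from a `BoundingBox2D`-style center and size.

```python
def corners_to_center_size(x_min, y_min, x_max, y_max):
    """Convert corner coordinates to (center_x, center_y, size_x, size_y)."""
    center_x = (x_min + x_max) / 2
    center_y = (y_min + y_max) / 2
    # Same formula as the node: twice the distance from center to the min edge,
    # which equals x_max - x_min (and y_max - y_min).
    size_x = 2 * (center_x - x_min)
    size_y = 2 * (center_y - y_min)
    return center_x, center_y, size_x, size_y


def center_size_to_corners(center_x, center_y, size_x, size_y):
    """Invert the conversion: recover (x_min, y_min, x_max, y_max)."""
    half_w = size_x / 2
    half_h = size_y / 2
    return center_x - half_w, center_y - half_h, center_x + half_w, center_y + half_h


# Image convention: y grows downwards, so y_min is the top edge of the box.
box = (10.0, 20.0, 50.0, 100.0)
center_size = corners_to_center_size(*box)
print(center_size)                           # (30.0, 60.0, 40.0, 80.0)
print(center_size_to_corners(*center_size))  # (10.0, 20.0, 50.0, 100.0)
```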

diff --git a/coco_detector/package.xml b/coco_detector/package.xml

new file mode 100644

index 0000000..44f7e83

--- /dev/null

+++ b/coco_detector/package.xml

@@ -0,0 +1,18 @@

+

+

+

+

+
