diff --git a/.github/workflows/pr_flax_dependency_test.yml b/.github/workflows/pr_flax_dependency_test.yml

new file mode 100644

index 000000000000..d7d2a2d4c3d5

--- /dev/null

+++ b/.github/workflows/pr_flax_dependency_test.yml

@@ -0,0 +1,34 @@

+name: Run Flax dependency tests
+
+on:
+  pull_request:
+    branches:
+      - main
+  push:
+    branches:
+      - main
+
+concurrency:
+  group: ${{ github.workflow }}-${{ github.head_ref || github.run_id }}
+  cancel-in-progress: true
+
+jobs:
+  check_flax_dependencies:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v3
+      - name: Set up Python
+        uses: actions/setup-python@v4
+        with:
+          python-version: "3.8"
+      - name: Install dependencies
+        run: |
+          python -m pip install --upgrade pip
+          pip install -e .
+          pip install "jax[cpu]>=0.2.16,!=0.3.2"
+          pip install "flax>=0.4.1"
+          pip install "jaxlib>=0.1.65"
+          pip install pytest
+      - name: Check for soft dependencies
+        run: |
+          pytest tests/others/test_dependencies.py
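The `concurrency.group` key in the workflow above joins the workflow name with `github.head_ref` when it is set (on pull request events, where it is the PR's source branch) and falls back to the unique `github.run_id` otherwise (for example on pushes to `main`, where `head_ref` is empty). With `cancel-in-progress: true`, a newer run for the same PR branch therefore cancels the older one, push runs never share a group, and because the workflow name is part of the key the Flax and Torch workflows do not cancel each other. A minimal Python sketch of how that key resolves, assuming GitHub's `||` returns its left operand when truthy and the right one otherwise; `concurrency_group` is a hypothetical helper, not part of GitHub Actions:

```python
def concurrency_group(workflow: str, head_ref: str, run_id: int) -> str:
    """Mimic `${{ github.workflow }}-${{ github.head_ref || github.run_id }}`.

    Hypothetical helper: `head_ref` is the PR source branch on pull_request
    events and an empty string on push events, so `or` falls back to run_id.
    """
    return f"{workflow}-{head_ref or run_id}"


# Two runs for the same PR branch share a group, so the older one gets cancelled.
print(concurrency_group("Run Flax dependency tests", "fix-vae", 101))  # Run Flax dependency tests-fix-vae
# A push to main has no head_ref, so each run gets its own group.
print(concurrency_group("Run Flax dependency tests", "", 102))  # Run Flax dependency tests-102
```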

diff --git a/.github/workflows/pr_tests.yml b/.github/workflows/pr_tests.yml

index aaaea147f7ab..f7d9dde5258d 100644

--- a/.github/workflows/pr_tests.yml

+++ b/.github/workflows/pr_tests.yml

@@ -72,7 +72,7 @@ jobs:

run: |

apt-get update && apt-get install libsndfile1-dev libgl1 -y

python -m pip install -e .[quality,test]

- python -m pip install git+https://github.com/huggingface/accelerate.git

+ python -m pip install accelerate

- name: Environment

run: |

@@ -115,7 +115,7 @@ jobs:

run: |

python -m pytest -n 2 --max-worker-restart=0 --dist=loadfile \

--make-reports=tests_${{ matrix.config.report }} \

- examples/test_examples.py

+ examples/test_examples.py

- name: Failure short reports

if: ${{ failure() }}

diff --git a/.github/workflows/pr_torch_dependency_test.yml b/.github/workflows/pr_torch_dependency_test.yml

new file mode 100644

index 000000000000..57a7a5c77c74

--- /dev/null

+++ b/.github/workflows/pr_torch_dependency_test.yml

@@ -0,0 +1,32 @@

+name: Run Torch dependency tests
+
+on:
+  pull_request:
+    branches:
+      - main
+  push:
+    branches:
+      - main
+
+concurrency:
+  group: ${{ github.workflow }}-${{ github.head_ref || github.run_id }}
+  cancel-in-progress: true
+
+jobs:
+  check_torch_dependencies:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: actions/checkout@v3
+      - name: Set up Python
+        uses: actions/setup-python@v4
+        with:
+          python-version: "3.8"
+      - name: Install dependencies
+        run: |
+          python -m pip install --upgrade pip
+          pip install -e .
+          pip install torch torchvision torchaudio
+          pip install pytest
+      - name: Check for soft dependencies
+        run: |
+          pytest tests/others/test_dependencies.py
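Both new workflows install `diffusers` together with exactly one optional backend (JAX/Flax or PyTorch) and then run `tests/others/test_dependencies.py`, whose contents are not part of this diff. The general idea behind a soft dependency is that a backend is only imported when it is actually installed. A minimal sketch of such an availability probe using only the standard library; `backend_availability` is a hypothetical helper for illustration and not the test file's actual code:

```python
import importlib.util
from typing import Dict, Iterable


def backend_availability(names: Iterable[str]) -> Dict[str, bool]:
    """Report which optional top-level packages are installed, without importing them."""
    # find_spec returns None for a missing top-level module instead of raising ImportError.
    return {name: importlib.util.find_spec(name) is not None for name in names}


print(backend_availability(["torch", "flax", "jax"]))
```

In the Torch-only job above one would expect `torch` to be reported as available and `flax`/`jax` as missing, and the reverse in the Flax job; code guarded by such a check never hits an `ImportError` for the absent backend.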

diff --git a/docs/README.md b/docs/README.md

index 30e5d430765e..f85032c68931 100644

--- a/docs/README.md

+++ b/docs/README.md

@@ -16,7 +16,7 @@ limitations under the License.

# Generating the documentation

-To generate the documentation, you first have to build it. Several packages are necessary to build the doc,

+To generate the documentation, you first have to build it. Several packages are necessary to build the doc,

you can install them with the following command, at the root of the code repository:

```bash

@@ -142,7 +142,7 @@ This will include every public method of the pipeline that is documented, as wel

- __call__

- enable_attention_slicing

- disable_attention_slicing

- - enable_xformers_memory_efficient_attention

+ - enable_xformers_memory_efficient_attention

- disable_xformers_memory_efficient_attention

```

@@ -154,7 +154,7 @@ Values that should be put in `code` should either be surrounded by backticks: \`

and objects like True, None, or any strings should usually be put in `code`.

When mentioning a class, function, or method, it is recommended to use our syntax for internal links so that our tool

-adds a link to its documentation with this syntax: \[\`XXXClass\`\] or \[\`function\`\]. This requires the class or

+adds a link to its documentation with this syntax: \[\`XXXClass\`\] or \[\`function\`\]. This requires the class or

function to be in the main package.

If you want to create a link to some internal class or function, you need to

diff --git a/docs/TRANSLATING.md b/docs/TRANSLATING.md

index 32cd95f2ade9..b5a88812f30a 100644

--- a/docs/TRANSLATING.md

+++ b/docs/TRANSLATING.md

@@ -38,7 +38,7 @@ Here, `LANG-ID` should be one of the ISO 639-1 or ISO 639-2 language codes -- se

The fun part comes - translating the text!

-The first thing we recommend is translating the part of the `_toctree.yml` file that corresponds to your doc chapter. This file is used to render the table of contents on the website.

+The first thing we recommend is translating the part of the `_toctree.yml` file that corresponds to your doc chapter. This file is used to render the table of contents on the website.

> 🙋 If the `_toctree.yml` file doesn't yet exist for your language, you can create one by copy-pasting from the English version and deleting the sections unrelated to your chapter. Just make sure it exists in the `docs/source/LANG-ID/` directory!

diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 3626db3f7b58..a0c6159991b5 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -72,6 +72,8 @@

title: Overview

- local: using-diffusers/sdxl

title: Stable Diffusion XL

+ - local: using-diffusers/lcm

+ title: Latent Consistency Models

- local: using-diffusers/kandinsky

title: Kandinsky

- local: using-diffusers/controlnet

@@ -133,7 +135,7 @@

- local: optimization/memory

title: Reduce memory usage

- local: optimization/torch2.0

- title: Torch 2.0

+ title: PyTorch 2.0

- local: optimization/xformers

title: xFormers

- local: optimization/tome

@@ -200,6 +202,8 @@

title: AsymmetricAutoencoderKL

- local: api/models/autoencoder_tiny

title: Tiny AutoEncoder

+ - local: api/models/consistency_decoder_vae

+ title: ConsistencyDecoderVAE

- local: api/models/transformer2d

title: Transformer2D

- local: api/models/transformer_temporal

@@ -344,6 +348,8 @@

title: Overview

- local: api/schedulers/cm_stochastic_iterative

title: CMStochasticIterativeScheduler

+ - local: api/schedulers/consistency_decoder

+ title: ConsistencyDecoderScheduler

- local: api/schedulers/ddim_inverse

title: DDIMInverseScheduler

- local: api/schedulers/ddim

diff --git a/docs/source/en/api/models/consistency_decoder_vae.md b/docs/source/en/api/models/consistency_decoder_vae.md

new file mode 100644

index 000000000000..b45f7fa059dc

--- /dev/null

+++ b/docs/source/en/api/models/consistency_decoder_vae.md

@@ -0,0 +1,18 @@

+# Consistency Decoder

+

+The consistency decoder can be used to decode the latents from the denoising UNet in the [`StableDiffusionPipeline`]. This decoder was introduced in the [DALL-E 3 technical report](https://openai.com/dall-e-3).

+

+The original codebase can be found at [openai/consistencydecoder](https://github.com/openai/consistencydecoder).

+

+

+

+Inference is currently only supported for 2 iterations.

+

+

+

+This model could not have been contributed without the help of [madebyollin](https://github.com/madebyollin) and [mrsteyk](https://github.com/mrsteyk) in [this issue](https://github.com/openai/consistencydecoder/issues/1).

+

+## ConsistencyDecoderVAE

+[[autodoc]] ConsistencyDecoderVAE

+ - all

+ - decode

diff --git a/docs/source/en/api/schedulers/consistency_decoder.md b/docs/source/en/api/schedulers/consistency_decoder.md

new file mode 100644

index 000000000000..6c937b913279

--- /dev/null

+++ b/docs/source/en/api/schedulers/consistency_decoder.md

@@ -0,0 +1,9 @@

+# ConsistencyDecoderScheduler

+

+This scheduler is part of the [`ConsistencyDecoderVAE`] and was introduced in [DALL-E 3](https://openai.com/dall-e-3).

+

+The original codebase can be found at [openai/consistency_models](https://github.com/openai/consistency_models).

+

+

+## ConsistencyDecoderScheduler

+[[autodoc]] schedulers.scheduling_consistency_decoder.ConsistencyDecoderScheduler

\ No newline at end of file

diff --git a/docs/source/en/conceptual/ethical_guidelines.md b/docs/source/en/conceptual/ethical_guidelines.md

index fe1d849f44ff..86176bcaa33e 100644

--- a/docs/source/en/conceptual/ethical_guidelines.md

+++ b/docs/source/en/conceptual/ethical_guidelines.md

@@ -14,7 +14,7 @@ specific language governing permissions and limitations under the License.

## Preamble

-[Diffusers](https://huggingface.co/docs/diffusers/index) provides pre-trained diffusion models and serves as a modular toolbox for inference and training.

+[Diffusers](https://huggingface.co/docs/diffusers/index) provides pre-trained diffusion models and serves as a modular toolbox for inference and training.

Given its real-world applications and potential negative impacts on society, we think it is important to provide the project with ethical guidelines for the development, users’ contributions, and usage of the Diffusers library.

@@ -46,7 +46,7 @@ The following ethical guidelines apply generally, but we will primarily implemen

## Examples of implementations: Safety features and Mechanisms

-The team works daily to make the technical and non-technical tools available to deal with the potential ethical and social risks associated with diffusion technology. Moreover, the community's input is invaluable in ensuring these features' implementation and raising awareness with us.

+The team works daily to make the technical and non-technical tools available to deal with the potential ethical and social risks associated with diffusion technology. Moreover, the community's input is invaluable in ensuring these features' implementation and raising awareness with us.

- [**Community tab**](https://huggingface.co/docs/hub/repositories-pull-requests-discussions): it enables the community to discuss and better collaborate on a project.

@@ -60,4 +60,4 @@ The team works daily to make the technical and non-technical tools available to

- **Staged release on the Hub**: in particularly sensitive situations, access to some repositories should be restricted. This staged release is an intermediary step that allows the repository’s authors to have more control over its use.

-- **Licensing**: [OpenRAILs](https://huggingface.co/blog/open_rail), a new type of licensing, allow us to ensure free access while having a set of restrictions that ensure more responsible use.

+- **Licensing**: [OpenRAILs](https://huggingface.co/blog/open_rail), a new type of licensing, allow us to ensure free access while having a set of restrictions that ensure more responsible use.

diff --git a/docs/source/en/conceptual/evaluation.md b/docs/source/en/conceptual/evaluation.md

index 997c5f4016dc..848eec8620cd 100644

--- a/docs/source/en/conceptual/evaluation.md

+++ b/docs/source/en/conceptual/evaluation.md

@@ -12,9 +12,9 @@ specific language governing permissions and limitations under the License.

# Evaluating Diffusion Models

-

-  -

+

+

-

+

+  +

Evaluation of generative models like [Stable Diffusion](https://huggingface.co/docs/diffusers/stable_diffusion) is subjective in nature. But as practitioners and researchers, we often have to make careful choices amongst many different possibilities. So, when working with different generative models (like GANs, Diffusion, etc.), how do we choose one over the other?

@@ -23,7 +23,7 @@ However, quantitative metrics don't necessarily correspond to image quality. So,

of both qualitative and quantitative evaluations provides a stronger signal when choosing one model

over the other.

-In this document, we provide a non-exhaustive overview of qualitative and quantitative methods to evaluate Diffusion models. For quantitative methods, we specifically focus on how to implement them alongside `diffusers`.

+In this document, we provide a non-exhaustive overview of qualitative and quantitative methods to evaluate Diffusion models. For quantitative methods, we specifically focus on how to implement them alongside `diffusers`.

The methods shown in this document can also be used to evaluate different [noise schedulers](https://huggingface.co/docs/diffusers/main/en/api/schedulers/overview) keeping the underlying generation model fixed.

@@ -38,9 +38,9 @@ We cover Diffusion models with the following pipelines:

## Qualitative Evaluation

Qualitative evaluation typically involves human assessment of generated images. Quality is measured across aspects such as compositionality, image-text alignment, and spatial relations. Common prompts provide a degree of uniformity for subjective metrics.

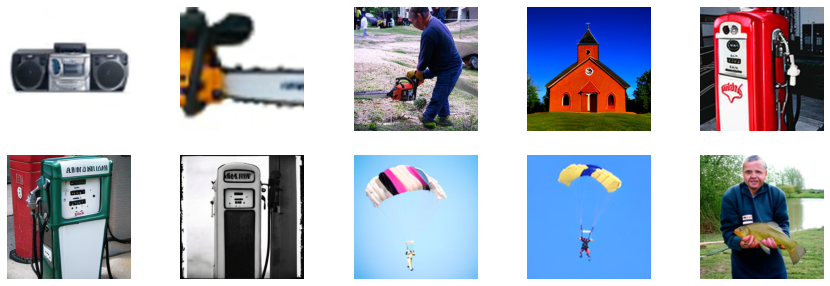

-DrawBench and PartiPrompts are prompt datasets used for qualitative benchmarking. DrawBench and PartiPrompts were introduced by [Imagen](https://imagen.research.google/) and [Parti](https://parti.research.google/) respectively.

+DrawBench and PartiPrompts are prompt datasets used for qualitative benchmarking. DrawBench and PartiPrompts were introduced by [Imagen](https://imagen.research.google/) and [Parti](https://parti.research.google/) respectively.

-From the [official Parti website](https://parti.research.google/):

+From the [official Parti website](https://parti.research.google/):

> PartiPrompts (P2) is a rich set of over 1600 prompts in English that we release as part of this work. P2 can be used to measure model capabilities across various categories and challenge aspects.

@@ -52,13 +52,13 @@ PartiPrompts has the following columns:

- Category of the prompt (such as “Abstract”, “World Knowledge”, etc.)

- Challenge reflecting the difficulty (such as “Basic”, “Complex”, “Writing & Symbols”, etc.)

-These benchmarks allow for side-by-side human evaluation of different image generation models.

+These benchmarks allow for side-by-side human evaluation of different image generation models.

For this, the 🧨 Diffusers team has built **Open Parti Prompts**, which is a community-driven qualitative benchmark based on Parti Prompts to compare state-of-the-art open-source diffusion models:

- [Open Parti Prompts Game](https://huggingface.co/spaces/OpenGenAI/open-parti-prompts): For 10 parti prompts, 4 generated images are shown and the user selects the image that suits the prompt best.

- [Open Parti Prompts Leaderboard](https://huggingface.co/spaces/OpenGenAI/parti-prompts-leaderboard): The leaderboard comparing the currently best open-sourced diffusion models to each other.
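Each round of the Open Parti Prompts Game described above reduces to a single vote: which of the 4 shown images best fits the prompt. As an illustration only, such votes could be tallied into a per-model win rate (wins divided by the number of times a model was shown). The vote format and model names below are hypothetical, and this is not necessarily how the leaderboard computes its ranking:

```python
from collections import Counter
from typing import Dict, Iterable, Sequence, Tuple

# (models whose images were shown, model whose image the user picked)
Vote = Tuple[Sequence[str], str]


def win_rates(votes: Iterable[Vote]) -> Dict[str, float]:
    shown: Counter = Counter()
    wins: Counter = Counter()
    for models, chosen in votes:
        if chosen not in models:
            raise ValueError(f"chosen model {chosen!r} was not among the shown models {list(models)}")
        shown.update(models)  # every displayed model gets one appearance
        wins[chosen] += 1
    return {model: wins[model] / shown[model] for model in shown}


votes = [
    (("model-a", "model-b", "model-c", "model-d"), "model-a"),
    (("model-a", "model-b", "model-c", "model-d"), "model-b"),
    (("model-a", "model-b", "model-c", "model-d"), "model-a"),
]
print(win_rates(votes))
```

Normalizing by appearances rather than raw wins keeps the comparison fair if some models are shown more often than others.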

-To manually compare images, let’s see how we can use `diffusers` on a couple of PartiPrompts.

+To manually compare images, let’s see how we can use `diffusers` on a couple of PartiPrompts.

Below we show some prompts sampled across different challenges: Basic, Complex, Linguistic Structures, Imagination, and Writing & Symbols. Here we are using PartiPrompts as a [dataset](https://huggingface.co/datasets/nateraw/parti-prompts).
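Sampling one prompt per challenge, as described above, only needs the prompt text and its `Challenge` column. A minimal sketch over in-memory rows, assuming each row is a dict with `Prompt`, `Category`, and `Challenge` keys; the example rows are made up for illustration, and with the real dataset the rows would first be loaded (for instance with `datasets.load_dataset`):

```python
import random
from collections import defaultdict
from typing import Dict, List


def sample_per_challenge(rows: List[Dict[str, str]], challenges: List[str], seed: int = 0) -> Dict[str, str]:
    """Pick one prompt for each requested challenge, reproducibly."""
    by_challenge: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        by_challenge[row["Challenge"]].append(row["Prompt"])
    missing = [c for c in challenges if not by_challenge[c]]
    if missing:
        raise ValueError(f"no prompts found for challenges: {missing}")
    rng = random.Random(seed)  # fixed seed so every model is evaluated on the same prompts
    return {challenge: rng.choice(by_challenge[challenge]) for challenge in challenges}


# Made-up rows, shaped like the columns listed above.
rows = [
    {"Prompt": "a red cube on top of a blue sphere", "Category": "Abstract", "Challenge": "Basic"},
    {"Prompt": "a green apple", "Category": "Produce & Plants", "Challenge": "Basic"},
    {"Prompt": "a storefront sign that reads 'open'", "Category": "Artifacts", "Challenge": "Writing & Symbols"},
]
print(sample_per_challenge(rows, ["Basic", "Writing & Symbols"]))
```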

@@ -92,16 +92,16 @@ images = sd_pipeline(sample_prompts, num_images_per_prompt=1, generator=generato

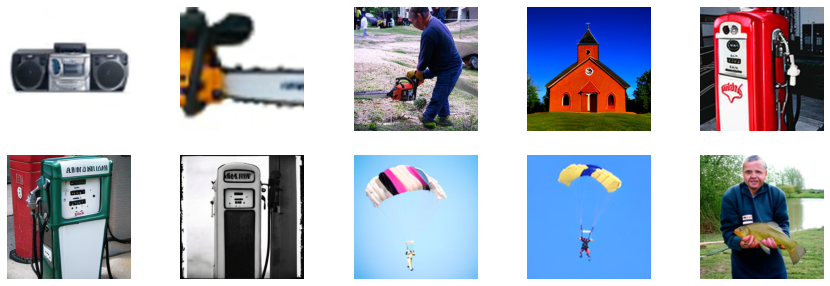

-We can also set `num_images_per_prompt` accordingly to compare different images for the same prompt. Running the same pipeline but with a different checkpoint ([v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)), yields:

+We can also set `num_images_per_prompt` accordingly to compare different images for the same prompt. Running the same pipeline but with a different checkpoint ([v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)), yields:

Once several images are generated from all the prompts using multiple models (under evaluation), these results are presented to human evaluators for scoring. For

-more details on the DrawBench and PartiPrompts benchmarks, refer to their respective papers.

+more details on the DrawBench and PartiPrompts benchmarks, refer to their respective papers.

-

+

-It is useful to look at some inference samples while a model is training to measure the

+It is useful to look at some inference samples while a model is training to measure the

training progress. In our [training scripts](https://github.com/huggingface/diffusers/tree/main/examples/), we support this utility with additional support for

logging to TensorBoard and Weights & Biases.

@@ -177,7 +177,7 @@ generator = torch.manual_seed(seed)

images = sd_pipeline(prompts, num_images_per_prompt=1, generator=generator, output_type="np").images

```

-Then we load the [v1-5 checkpoint](https://huggingface.co/runwayml/stable-diffusion-v1-5) to generate images:

+Then we load the [v1-5 checkpoint](https://huggingface.co/runwayml/stable-diffusion-v1-5) to generate images:

```python

model_ckpt_1_5 = "runwayml/stable-diffusion-v1-5"

@@ -205,7 +205,7 @@ It seems like the [v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)

By construction, there are some limitations in this score. The captions in the training dataset

were crawled from the web and extracted from `alt` and similar tags associated with an image on the internet.

They are not necessarily representative of what a human being would use to describe an image. Hence we

-had to "engineer" some prompts here.

+had to "engineer" some prompts here.

@@ -551,15 +551,15 @@ FID results tend to be fragile as they depend on a lot of factors:

* The implementation accuracy of the computation.

* The image format (not the same if we start from PNGs vs JPGs).

-Keeping that in mind, FID is often most useful when comparing similar runs, but it is

-hard to reproduce paper results unless the authors carefully disclose the FID

+Keeping that in mind, FID is often most useful when comparing similar runs, but it is

+hard to reproduce paper results unless the authors carefully disclose the FID

measurement code.

-These points apply to other related metrics too, such as KID and IS.

+These points apply to other related metrics too, such as KID and IS.

-As a final step, let's visually inspect the `fake_images`.

+As a final step, let's visually inspect the `fake_images`.

+


diff --git a/docs/source/en/conceptual/philosophy.md b/docs/source/en/conceptual/philosophy.md

index 909ed6bc193d..c7b96abd7f11 100644

--- a/docs/source/en/conceptual/philosophy.md

+++ b/docs/source/en/conceptual/philosophy.md

@@ -27,18 +27,18 @@ In a nutshell, Diffusers is built to be a natural extension of PyTorch. Therefor

## Simple over easy

-As PyTorch states, **explicit is better than implicit** and **simple is better than complex**. This design philosophy is reflected in multiple parts of the library:

+As PyTorch states, **explicit is better than implicit** and **simple is better than complex**. This design philosophy is reflected in multiple parts of the library:

- We follow PyTorch's API with methods like [`DiffusionPipeline.to`](https://huggingface.co/docs/diffusers/main/en/api/diffusion_pipeline#diffusers.DiffusionPipeline.to) to let the user handle device management.

- Raising concise error messages is preferred to silently correcting erroneous input. Diffusers aims at teaching the user, rather than making the library as easy to use as possible.

- Complex model vs. scheduler logic is exposed instead of magically handled inside. Schedulers/Samplers are separated from diffusion models with minimal dependencies on each other. This forces the user to write the unrolled denoising loop. However, the separation allows for easier debugging and gives the user more control over adapting the denoising process or switching out diffusion models or schedulers.

-- Separately trained components of the diffusion pipeline, *e.g.* the text encoder, the unet, and the variational autoencoder, each have their own model class. This forces the user to handle the interaction between the different model components, and the serialization format separates the model components into different files. However, this allows for easier debugging and customization. DreamBooth or Textual Inversion training

+- Separately trained components of the diffusion pipeline, *e.g.* the text encoder, the unet, and the variational autoencoder, each have their own model class. This forces the user to handle the interaction between the different model components, and the serialization format separates the model components into different files. However, this allows for easier debugging and customization. DreamBooth or Textual Inversion training

is very simple thanks to Diffusers' ability to separate single components of the diffusion pipeline.
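To make the model/scheduler split concrete, here is a toy, framework-free sketch of the unrolled denoising loop that the user writes. `ToyDDIMScheduler` and `oracle_noise_model` are hypothetical stand-ins: the scheduler implements a deterministic DDIM-style update (eta = 0) on scalar samples, and its `set_timesteps` / `step(model_output, timestep, sample)` methods only loosely mirror the shape of a Diffusers scheduler. The "model" cheats by knowing the clean value, which makes the loop easy to check: the final sample should recover it.

```python
import math
from typing import List


class ToyDDIMScheduler:
    """Hypothetical deterministic DDIM-style scheduler (eta = 0) over scalar samples."""

    def __init__(self, num_train_timesteps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02):
        self.num_train_timesteps = num_train_timesteps
        # Linear beta schedule and its cumulative product alpha_bar_t.
        self.alphas_cumprod: List[float] = []
        prod = 1.0
        for i in range(num_train_timesteps):
            beta = beta_start + (beta_end - beta_start) * i / (num_train_timesteps - 1)
            prod *= 1.0 - beta
            self.alphas_cumprod.append(prod)
        self.timesteps: List[int] = []
        self.step_ratio = 1

    def set_timesteps(self, num_inference_steps: int) -> None:
        self.step_ratio = self.num_train_timesteps // num_inference_steps
        # Evenly spaced timesteps, from noisiest to cleanest.
        self.timesteps = list(range(0, self.num_train_timesteps, self.step_ratio))[::-1]

    def step(self, model_output: float, timestep: int, sample: float) -> float:
        alpha_bar = self.alphas_cumprod[timestep]
        prev_t = timestep - self.step_ratio
        alpha_bar_prev = self.alphas_cumprod[prev_t] if prev_t >= 0 else 1.0
        # Predict the clean sample, then re-noise it to the previous timestep's noise level.
        pred_x0 = (sample - math.sqrt(1.0 - alpha_bar) * model_output) / math.sqrt(alpha_bar)
        return math.sqrt(alpha_bar_prev) * pred_x0 + math.sqrt(1.0 - alpha_bar_prev) * model_output


def oracle_noise_model(sample: float, timestep: int, x0: float, scheduler: ToyDDIMScheduler) -> float:
    """Hypothetical 'perfect' model: returns the noise that explains `sample` given the clean value x0."""
    alpha_bar = scheduler.alphas_cumprod[timestep]
    return (sample - math.sqrt(alpha_bar) * x0) / math.sqrt(1.0 - alpha_bar)


# The unrolled denoising loop: model and scheduler never call each other directly.
scheduler = ToyDDIMScheduler()
scheduler.set_timesteps(10)
x0 = 0.5
sample = 1.7  # stands in for the initial Gaussian noise
for t in scheduler.timesteps:
    noise_pred = oracle_noise_model(sample, t, x0, scheduler)
    sample = scheduler.step(noise_pred, t, sample)
print(sample)
```

Because the loop is written out by the user, swapping the scheduler or the model only touches one line each, which is the debugging and customization benefit the list above describes.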

## Tweakable, contributor-friendly over abstraction

-For large parts of the library, Diffusers adopts an important design principle of the [Transformers library](https://github.com/huggingface/transformers), which is to prefer copy-pasted code over hasty abstractions. This design principle is very opinionated and stands in stark contrast to popular design principles such as [Don't repeat yourself (DRY)](https://en.wikipedia.org/wiki/Don%27t_repeat_yourself).

+For large parts of the library, Diffusers adopts an important design principle of the [Transformers library](https://github.com/huggingface/transformers), which is to prefer copy-pasted code over hasty abstractions. This design principle is very opinionated and stands in stark contrast to popular design principles such as [Don't repeat yourself (DRY)](https://en.wikipedia.org/wiki/Don%27t_repeat_yourself).

In short, just like Transformers does for modeling files, Diffusers prefers to keep an extremely low level of abstraction and very self-contained code for pipelines and schedulers.

-Functions, long code blocks, and even classes can be copied across multiple files which at first can look like a bad, sloppy design choice that makes the library unmaintainable.

+Functions, long code blocks, and even classes can be copied across multiple files which at first can look like a bad, sloppy design choice that makes the library unmaintainable.

**However**, this design has proven to be extremely successful for Transformers and makes a lot of sense for community-driven, open-source machine learning libraries because:

- Machine Learning is an extremely fast-moving field in which paradigms, model architectures, and algorithms are changing rapidly, which therefore makes it very difficult to define long-lasting code abstractions.

- Machine Learning practitioners like to be able to quickly tweak existing code for ideation and research and therefore prefer self-contained code over one that contains many abstractions.

@@ -47,10 +47,10 @@ Functions, long code blocks, and even classes can be copied across multiple file

At Hugging Face, we call this design the **single-file policy** which means that almost all of the code of a certain class should be written in a single, self-contained file. To read more about the philosophy, you can have a look

at [this blog post](https://huggingface.co/blog/transformers-design-philosophy).

-In Diffusers, we follow this philosophy for both pipelines and schedulers, but only partly for diffusion models. The reason we don't follow this design fully for diffusion models is because almost all diffusion pipelines, such

+In Diffusers, we follow this philosophy for both pipelines and schedulers, but only partly for diffusion models. The reason we don't follow this design fully for diffusion models is because almost all diffusion pipelines, such

as [DDPM](https://huggingface.co/docs/diffusers/api/pipelines/ddpm), [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion/overview#stable-diffusion-pipelines), [unCLIP (DALL·E 2)](https://huggingface.co/docs/diffusers/api/pipelines/unclip) and [Imagen](https://imagen.research.google/) all rely on the same diffusion model, the [UNet](https://huggingface.co/docs/diffusers/api/models/unet2d-cond).

-Great, now you should have generally understood why 🧨 Diffusers is designed the way it is 🤗.

+Great, now you should have generally understood why 🧨 Diffusers is designed the way it is 🤗.

We try to apply these design principles consistently across the library. Nevertheless, there are some minor exceptions to the philosophy or some unlucky design choices. If you have feedback regarding the design, we would ❤️ to hear it [directly on GitHub](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

## Design Philosophy in Details

@@ -89,7 +89,7 @@ The following design principles are followed:

- Models should by default have the highest precision and lowest performance setting.

- To integrate new model checkpoints whose general architecture can be classified as an architecture that already exists in Diffusers, the existing model architecture shall be adapted to make it work with the new checkpoint. One should only create a new file if the model architecture is fundamentally different.

- Models should be designed to be easily extendable to future changes. This can be achieved by limiting public function arguments, configuration arguments, and "foreseeing" future changes, *e.g.* it is usually better to add `string` "...type" arguments that can easily be extended to new future types instead of boolean `is_..._type` arguments. Only the minimum amount of changes shall be made to existing architectures to make a new model checkpoint work.

-- The model design is a difficult trade-off between keeping code readable and concise and supporting many model checkpoints. For most parts of the modeling code, classes shall be adapted for new model checkpoints, while there are some exceptions where it is preferred to add new classes to make sure the code is kept concise and

+- The model design is a difficult trade-off between keeping code readable and concise and supporting many model checkpoints. For most parts of the modeling code, classes shall be adapted for new model checkpoints, while there are some exceptions where it is preferred to add new classes to make sure the code is kept concise and

readable long-term, such as [UNet blocks](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/unet_2d_blocks.py) and [Attention processors](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention_processor.py).
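As an illustration of the string-versus-boolean guideline, here is a minimal plain-Python sketch. The registry and `resolve_activation` are hypothetical names, not Diffusers code (Diffusers model configs do use string arguments such as `act_fn="silu"` in a similar spirit). Supporting a new type only means adding a registry entry, while the function signature stays the same:

```python
import math
from typing import Callable, Dict

# Hypothetical registry: adding a new activation type is a one-line change here.
_ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "relu": lambda x: max(0.0, x),
    "silu": lambda x: x / (1.0 + math.exp(-x)),
    "tanh": math.tanh,
}


def resolve_activation(act_fn: str = "silu") -> Callable[[float], float]:
    """Return the activation registered under `act_fn`, or raise a helpful error."""
    if act_fn not in _ACTIVATIONS:
        raise ValueError(f"Unsupported act_fn {act_fn!r}; expected one of {sorted(_ACTIVATIONS)}")
    return _ACTIVATIONS[act_fn]


# The boolean alternative, e.g. resolve(is_relu_type=True, is_tanh_type=False),
# needs a new parameter and new conflict checks for every type that is added.
print(resolve_activation("relu")(-2.0))  # 0.0
```

Because the argument is a plain string, it can also be stored in a configuration file and extended later without breaking existing configs.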

### Schedulers

@@ -97,9 +97,9 @@ readable long-term, such as [UNet blocks](https://github.com/huggingface/diffuse

Schedulers are responsible for guiding the denoising process for inference as well as for defining a noise schedule for training. They are designed as individual classes with loadable configuration files and strongly follow the **single-file policy**.

The following design principles are followed:

-- All schedulers are found in [`src/diffusers/schedulers`](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers).

-- Schedulers are **not** allowed to import from large utils files and shall be kept very self-contained.

-- One scheduler Python file corresponds to one scheduler algorithm (as might be defined in a paper).

+- All schedulers are found in [`src/diffusers/schedulers`](https://github.com/huggingface/diffusers/tree/main/src/diffusers/schedulers).

+- Schedulers are **not** allowed to import from large utils files and shall be kept very self-contained.

+- One scheduler Python file corresponds to one scheduler algorithm (as might be defined in a paper).

- If schedulers share similar functionalities, we can make use of the `# Copied from` mechanism.

- Schedulers all inherit from `SchedulerMixin` and `ConfigMixin`.

- Schedulers can be easily swapped out with the [`ConfigMixin.from_config`](https://huggingface.co/docs/diffusers/main/en/api/configuration#diffusers.ConfigMixin.from_config) method as explained in detail [here](../using-diffusers/schedulers.md).

diff --git a/docs/source/en/optimization/coreml.md b/docs/source/en/optimization/coreml.md

index ab96eea0fb04..62809305bfb0 100644

--- a/docs/source/en/optimization/coreml.md

+++ b/docs/source/en/optimization/coreml.md

@@ -31,7 +31,7 @@ Thankfully, Apple engineers developed [a conversion tool](https://github.com/app

Before you convert a model, though, take a moment to explore the Hugging Face Hub – chances are the model you're interested in is already available in Core ML format:

- the [Apple](https://huggingface.co/apple) organization includes Stable Diffusion versions 1.4, 1.5, 2.0 base, and 2.1 base

-- [coreml](https://huggingface.co/coreml) organization includes custom DreamBoothed and finetuned models

+- the [coreml community](https://huggingface.co/coreml-community) organization includes custom fine-tuned models

- use this [filter](https://huggingface.co/models?pipeline_tag=text-to-image&library=coreml&p=2&sort=likes) to return all available Core ML checkpoints

If you can't find the model you're interested in, we recommend you follow the instructions for [Converting Models to Core ML](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml) by Apple.

@@ -90,7 +90,6 @@ snapshot_download(repo_id, allow_patterns=f"{variant}/*", local_dir=model_path,

print(f"Model downloaded at {model_path}")

```

-

### Inference[[python-inference]]

Once you have downloaded a snapshot of the model, you can test it using Apple's Python script.

@@ -99,7 +98,7 @@ Once you have downloaded a snapshot of the model, you can test it using Apple's

python -m python_coreml_stable_diffusion.pipeline --prompt "a photo of an astronaut riding a horse on mars" -i models/coreml-stable-diffusion-v1-4_original_packages -o output --compute-unit CPU_AND_GPU --seed 93

```

-`` should point to the checkpoint you downloaded in the step above, and `--compute-unit` indicates the hardware you want to allow for inference. It must be one of the following options: `ALL`, `CPU_AND_GPU`, `CPU_ONLY`, `CPU_AND_NE`. You may also provide an optional output path, and a seed for reproducibility.

+Pass the path of the downloaded checkpoint to the script with the `-i` flag. `--compute-unit` indicates the hardware you want to allow for inference. It must be one of the following options: `ALL`, `CPU_AND_GPU`, `CPU_ONLY`, `CPU_AND_NE`. You may also provide an optional output path with `-o` and a seed with `--seed` for reproducibility.

The inference script assumes you're using the original version of the Stable Diffusion model, `CompVis/stable-diffusion-v1-4`. If you use another model, you *have* to specify its Hub ID on the command line with the `--model-version` option. This works both for models that are already supported and for custom models you trained or fine-tuned yourself.

@@ -109,7 +108,6 @@ For example, if you want to use [`runwayml/stable-diffusion-v1-5`](https://huggi

python -m python_coreml_stable_diffusion.pipeline --prompt "a photo of an astronaut riding a horse on mars" --compute-unit ALL -o output --seed 93 -i models/coreml-stable-diffusion-v1-5_original_packages --model-version runwayml/stable-diffusion-v1-5

```
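If you drive the Core ML script from Python, a small helper can validate `--compute-unit` before launching it. The sketch below only assembles an argument list from the flags used in the commands above (`--prompt`, `-i`, `-o`, `--compute-unit`, `--seed`, `--model-version`); `build_coreml_command` is a hypothetical helper, and it always passes `-o` to be safe. You could run the result with `subprocess.run(cmd, check=True)` once Apple's package is installed:

```python
import shlex
from typing import List, Optional

# Allowed values for --compute-unit, as listed in this guide.
COMPUTE_UNITS = ("ALL", "CPU_AND_GPU", "CPU_ONLY", "CPU_AND_NE")


def build_coreml_command(
    prompt: str,
    checkpoint_dir: str,
    output_dir: str,
    compute_unit: str = "ALL",
    seed: Optional[int] = None,
    model_version: Optional[str] = None,
) -> List[str]:
    """Hypothetical helper: build the argv for Apple's inference script without running it."""
    if compute_unit not in COMPUTE_UNITS:
        raise ValueError(f"compute_unit must be one of {COMPUTE_UNITS}, got {compute_unit!r}")
    cmd = [
        "python", "-m", "python_coreml_stable_diffusion.pipeline",
        "--prompt", prompt,
        "-i", checkpoint_dir,
        "-o", output_dir,
        "--compute-unit", compute_unit,
    ]
    if seed is not None:
        cmd += ["--seed", str(seed)]
    if model_version is not None:
        # Needed for any checkpoint other than CompVis/stable-diffusion-v1-4.
        cmd += ["--model-version", model_version]
    return cmd


cmd = build_coreml_command(
    "a photo of an astronaut riding a horse on mars",
    "models/coreml-stable-diffusion-v1-5_original_packages",
    "output",
    compute_unit="ALL",
    seed=93,
    model_version="runwayml/stable-diffusion-v1-5",
)
print(shlex.join(cmd))  # shell-quoted, ready to paste into a terminal
```

Keeping the command as a list (rather than one string) avoids quoting problems with prompts that contain spaces.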

-

## Core ML inference in Swift

Running inference in Swift is slightly faster than in Python because the models are already compiled in the `mlmodelc` format. This is noticeable on app startup when the model is loaded but shouldn’t be noticeable if you run several generations afterward.

@@ -149,7 +147,6 @@ You have to specify in `--resource-path` one of the checkpoints downloaded in th

For more details, please refer to the [instructions in Apple's repo](https://github.com/apple/ml-stable-diffusion).

-

## Supported Diffusers Features

The Core ML models and inference code don't support many of the features, options, and flexibility of 🧨 Diffusers. These are some of the limitations to keep in mind:

@@ -158,10 +155,10 @@ The Core ML models and inference code don't support many of the features, option

- Only two schedulers have been ported to Swift, the default one used by Stable Diffusion and `DPMSolverMultistepScheduler`, which we ported to Swift from our `diffusers` implementation. We recommend you use `DPMSolverMultistepScheduler`, since it produces the same quality in about half the steps.

- Negative prompts, classifier-free guidance scale, and image-to-image tasks are available in the inference code. Advanced features such as depth guidance, ControlNet, and latent upscalers are not available yet.

-Apple's [conversion and inference repo](https://github.com/apple/ml-stable-diffusion) and our own [swift-coreml-diffusers](https://github.com/huggingface/swift-coreml-diffusers) repos are intended as technology demonstrators to enable other developers to build upon.

+Apple's [conversion and inference repo](https://github.com/apple/ml-stable-diffusion) and our own [swift-coreml-diffusers](https://github.com/huggingface/swift-coreml-diffusers) repos are intended as technology demonstrators for other developers to build upon.

-If you feel strongly about any missing features, please feel free to open a feature request or, better yet, a contribution PR :)

+If you feel strongly about any missing features, please feel free to open a feature request or, better yet, a contribution PR 🙂.

## Native Diffusers Swift app

-One easy way to run Stable Diffusion on your own Apple hardware is to use [our open-source Swift repo](https://github.com/huggingface/swift-coreml-diffusers), based on `diffusers` and Apple's conversion and inference repo. You can study the code, compile it with [Xcode](https://developer.apple.com/xcode/) and adapt it for your own needs. For your convenience, there's also a [standalone Mac app in the App Store](https://apps.apple.com/app/diffusers/id1666309574), so you can play with it without having to deal with the code or IDE. If you are a developer and have determined that Core ML is the best solution to build your Stable Diffusion app, then you can use the rest of this guide to get started with your project. We can't wait to see what you'll build :)

+One easy way to run Stable Diffusion on your own Apple hardware is to use [our open-source Swift repo](https://github.com/huggingface/swift-coreml-diffusers), based on `diffusers` and Apple's conversion and inference repo. You can study the code, compile it with [Xcode](https://developer.apple.com/xcode/) and adapt it for your own needs. For your convenience, there's also a [standalone Mac app in the App Store](https://apps.apple.com/app/diffusers/id1666309574), so you can play with it without having to deal with the code or IDE. If you are a developer and have determined that Core ML is the best solution to build your Stable Diffusion app, then you can use the rest of this guide to get started with your project. We can't wait to see what you'll build 🙂.

diff --git a/docs/source/en/optimization/fp16.md b/docs/source/en/optimization/fp16.md

index 2ac16786eb46..61bc5569c53c 100644

--- a/docs/source/en/optimization/fp16.md

+++ b/docs/source/en/optimization/fp16.md

@@ -12,7 +12,7 @@ specific language governing permissions and limitations under the License.

# Speed up inference

-There are several ways to optimize 🤗 Diffusers for inference speed. As a general rule of thumb, we recommend using either [xFormers](xformers) or `torch.nn.functional.scaled_dot_product_attention` in PyTorch 2.0 for their memory-efficient attention.

+There are several ways to optimize 🤗 Diffusers for inference speed. As a general rule of thumb, we recommend using either [xFormers](xformers) or `torch.nn.functional.scaled_dot_product_attention` in PyTorch 2.0 for their memory-efficient attention.

@@ -64,5 +64,5 @@ image = pipe(prompt).images[0]

Don't use [`torch.autocast`](https://pytorch.org/docs/stable/amp.html#torch.autocast) in any of the pipelines as it can lead to black images and is always slower than pure float16 precision.

-

-

\ No newline at end of file

+

+

diff --git a/docs/source/en/optimization/habana.md b/docs/source/en/optimization/habana.md

index c78c8ca3a1be..8a06210996f3 100644

--- a/docs/source/en/optimization/habana.md

+++ b/docs/source/en/optimization/habana.md

@@ -55,8 +55,7 @@ outputs = pipeline(

)

```

-For more information, check out 🤗 Optimum Habana's [documentation](https://huggingface.co/docs/optimum/habana/usage_guides/stable_diffusion) and the [example](https://github.com/huggingface/optimum-habana/tree/main/examples/stable-diffusion) provided in the official Github repository.

-

+For more information, check out 🤗 Optimum Habana's [documentation](https://huggingface.co/docs/optimum/habana/usage_guides/stable_diffusion) and the [example](https://github.com/huggingface/optimum-habana/tree/main/examples/stable-diffusion) provided in the official GitHub repository.

## Benchmark

diff --git a/docs/source/en/optimization/memory.md b/docs/source/en/optimization/memory.md

index c91fed1b2784..281b65df8d8c 100644

--- a/docs/source/en/optimization/memory.md

+++ b/docs/source/en/optimization/memory.md

@@ -1,3 +1,15 @@

+

+

# Reduce memory usage

A barrier to using diffusion models is the large amount of memory required. To overcome this challenge, there are several memory-reducing techniques you can use to run even some of the largest models on free-tier or consumer GPUs. Some of these techniques can even be combined to further reduce memory usage.

@@ -18,10 +30,9 @@ The results below are obtained from generating a single 512x512 image from the p

| traced UNet | 3.21s | x2.96 |

| memory-efficient attention | 2.63s | x3.61 |

-

## Sliced VAE

-Sliced VAE enables decoding large batches of images with limited VRAM or batches with 32 images or more by decoding the batches of latents one image at a time. You'll likely want to couple this with [`~ModelMixin.enable_xformers_memory_efficient_attention`] to further reduce memory use.

+Sliced VAE enables decoding large batches of images with limited VRAM, or batches of 32 images or more, by decoding the latents one image at a time. If you have xFormers installed, you'll likely want to couple this with [`~ModelMixin.enable_xformers_memory_efficient_attention`] to reduce memory use further.

To use sliced VAE, call [`~StableDiffusionPipeline.enable_vae_slicing`] on your pipeline before inference:

@@ -38,6 +49,7 @@ pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

pipe.enable_vae_slicing()

+# pipe.enable_xformers_memory_efficient_attention()  # uncomment if xFormers is installed

images = pipe([prompt] * 32).images

```
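Conceptually, slicing trades one large decoder call for a loop of batch-size-1 calls, so peak activation memory scales with one image instead of the whole batch. Here is a framework-free sketch where `decode` is a hypothetical stand-in for the VAE decoder; it illustrates the idea and is not the Diffusers implementation:

```python
from typing import Callable, List, Sequence, TypeVar

LatentT = TypeVar("LatentT")  # latent type
ImageT = TypeVar("ImageT")  # decoded image type


def decode_sliced(
    latents: Sequence[LatentT], decode: Callable[[List[LatentT]], List[ImageT]]
) -> List[ImageT]:
    """Decode a batch one latent at a time and concatenate the results in order."""
    images: List[ImageT] = []
    for latent in latents:
        # Each call sees a batch of size 1 instead of the full batch.
        images.extend(decode([latent]))
    return images


batch_sizes_seen: List[int] = []


def fake_decode(batch: List[float]) -> List[float]:
    # Stand-in decoder that records how many latents it received per call.
    batch_sizes_seen.append(len(batch))
    return [2.0 * z for z in batch]


print(decode_sliced([0.5, 1.0, 1.5], fake_decode))  # [1.0, 2.0, 3.0]
```

The output is identical to decoding the whole batch at once; only the number of decoder calls (and therefore peak memory) changes.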

@@ -45,7 +57,7 @@ You may see a small performance boost in VAE decoding on multi-image batches, an

## Tiled VAE

-Tiled VAE processing also enables working with large images on limited VRAM (for example, generating 4k images on 8GB of VRAM) by splitting the image into overlapping tiles, decoding the tiles, and then blending the outputs together to compose the final image. You should also used tiled VAE with [`~ModelMixin.enable_xformers_memory_efficient_attention`] to further reduce memory use.

+Tiled VAE processing also enables working with large images on limited VRAM (for example, generating 4k images on 8GB of VRAM) by splitting the image into overlapping tiles, decoding the tiles, and then blending the outputs together to compose the final image. If you have xFormers installed, you should also use tiled VAE with [`~ModelMixin.enable_xformers_memory_efficient_attention`] to reduce memory use further.

To use tiled VAE processing, call [`~StableDiffusionPipeline.enable_vae_tiling`] on your pipeline before inference:

@@ -62,7 +74,7 @@ pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe = pipe.to("cuda")

prompt = "a beautiful landscape photograph"

pipe.enable_vae_tiling()

-pipe.enable_xformers_memory_efficient_attention()

+# pipe.enable_xformers_memory_efficient_attention()  # uncomment if xFormers is installed

image = pipe([prompt], width=3840, height=2224, num_inference_steps=20).images[0]

```
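To make the tiling description concrete, here is a 1D, plain-Python analogy: it splits a sequence into overlapping tiles, decodes each tile with a hypothetical `decode` callable, and cross-fades the overlapping region with linearly ramped weights. It assumes `decode` preserves length (a real VAE decoder upsamples and works on 2D tiles), so treat it as an illustration of the blending idea rather than Diffusers' code:

```python
from typing import Callable, List


def decode_tiled_1d(
    signal: List[float],
    tile: int,
    overlap: int,
    decode: Callable[[List[float]], List[float]],
) -> List[float]:
    """Decode overlapping tiles of `signal` and cross-fade them linearly where they overlap.

    Assumes `decode` returns a list of the same length as its input.
    """
    if tile <= 0 or not 0 <= overlap < tile:
        raise ValueError("need tile > 0 and 0 <= overlap < tile")
    stride = tile - overlap
    out: List[float] = []
    # Tile start positions; the last tile is the first one that reaches the end of `signal`.
    for start in range(0, max(len(signal) - overlap, 1), stride):
        piece = decode(signal[start:start + tile])
        n_blend = len(out) - start  # samples already written that this tile also covers
        for i in range(n_blend):
            w = i / n_blend  # weight ramps from the previous tile (0) toward the new tile
            out[start + i] = out[start + i] * (1 - w) + piece[i] * w
        out.extend(piece[n_blend:])
    return out


tile_ids = iter(range(100))


def constant_per_tile(xs: List[float]) -> List[float]:
    # Each tile decodes to a different constant so the blended seams are visible.
    return [float(next(tile_ids))] * len(xs)


print(decode_tiled_1d([0.0] * 8, tile=4, overlap=2, decode=constant_per_tile))
# [0.0, 0.0, 0.0, 0.5, 1.0, 1.5, 2.0, 2.0]
```

Without the cross-fade, the values would jump abruptly at each tile boundary, which is what shows up as visible seams in a tiled image.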

@@ -98,24 +110,6 @@ Consider using [model offloading](#model-offloading) if you want to optimize for

-CPU offloading can also be chained with attention slicing to reduce memory consumption to less than 2GB.

-

-```Python

-import torch

-from diffusers import StableDiffusionPipeline

-

-pipe = StableDiffusionPipeline.from_pretrained(

- "runwayml/stable-diffusion-v1-5",

- torch_dtype=torch.float16,

- use_safetensors=True,

-)

-

-prompt = "a photo of an astronaut riding a horse on mars"

-pipe.enable_sequential_cpu_offload()

-

-image = pipe(prompt).images[0]

-```

-

When using [`~StableDiffusionPipeline.enable_sequential_cpu_offload`], don't move the pipeline to CUDA beforehand or else the gain in memory consumption will only be minimal (see this [issue](https://github.com/huggingface/diffusers/issues/1934) for more information).

@@ -145,23 +139,6 @@ Enable model offloading by calling [`~StableDiffusionPipeline.enable_model_cpu_o

import torch

from diffusers import StableDiffusionPipeline

-pipe = StableDiffusionPipeline.from_pretrained(

- "runwayml/stable-diffusion-v1-5",

- torch_dtype=torch.float16,

- use_safetensors=True,

-)

-

-prompt = "a photo of an astronaut riding a horse on mars"

-pipe.enable_model_cpu_offload()

-image = pipe(prompt).images[0]

-```

-

-Model offloading can also be combined with attention slicing for additional memory savings.

-

-```Python

-import torch

-from diffusers import StableDiffusionPipeline

-

pipe = StableDiffusionPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5",

torch_dtype=torch.float16,

@@ -170,14 +147,12 @@ pipe = StableDiffusionPipeline.from_pretrained(

prompt = "a photo of an astronaut riding a horse on mars"

pipe.enable_model_cpu_offload()

-

image = pipe(prompt).images[0]

```

-In order to properly offload models after they're called, it is required to run the entire pipeline and models are called in the pipeline's expected order. Exercise caution if models are reused outside the context of the pipeline after hooks have been installed. See [Removing Hooks](https://huggingface.co/docs/accelerate/en/package_reference/big_modeling#accelerate.hooks.remove_hook_from_module)

-for more information.

+To properly offload models after they're called, you must run the entire pipeline, with the models called in the pipeline's expected order. Exercise caution if models are reused outside the context of the pipeline after hooks have been installed. See [Removing Hooks](https://huggingface.co/docs/accelerate/en/package_reference/big_modeling#accelerate.hooks.remove_hook_from_module) for more information.

[`~StableDiffusionPipeline.enable_model_cpu_offload`] is a stateful operation that installs hooks on the models and state on the pipeline.

@@ -303,7 +278,7 @@ unet_traced = torch.jit.load("unet_traced.pt")

class TracedUNet(torch.nn.Module):

def __init__(self):

super().__init__()

- self.in_channels = pipe.unet.in_channels

+ self.in_channels = pipe.unet.config.in_channels

self.device = pipe.unet.device

def forward(self, latent_model_input, t, encoder_hidden_states):

@@ -319,7 +294,7 @@ with torch.inference_mode():

## Memory-efficient attention

-Recent work on optimizing bandwidth in the attention block has generated huge speed-ups and reductions in GPU memory usage. The most recent type of memory-efficient attention is [Flash Attention](https://arxiv.org/pdf/2205.14135.pdf) (you can check out the original code at [HazyResearch/flash-attention](https://github.com/HazyResearch/flash-attention)).

+Recent work on optimizing bandwidth in the attention block has generated huge speed-ups and reductions in GPU memory usage. A prominent example of memory-efficient attention is [Flash Attention](https://arxiv.org/abs/2205.14135) (you can check out the original code at [HazyResearch/flash-attention](https://github.com/HazyResearch/flash-attention)).

@@ -354,4 +329,4 @@ with torch.inference_mode():

# pipe.disable_xformers_memory_efficient_attention()

```

-The iteration speed when using `xformers` should match the iteration speed of Torch 2.0 as described [here](torch2.0).

+The iteration speed when using `xformers` should match the iteration speed of PyTorch 2.0's scaled dot-product attention, as described [here](torch2.0).

diff --git a/docs/source/en/optimization/mps.md b/docs/source/en/optimization/mps.md

index 138c85b51184..f5ce3332fc90 100644

--- a/docs/source/en/optimization/mps.md

+++ b/docs/source/en/optimization/mps.md

@@ -31,6 +31,8 @@ pipe = pipe.to("mps")

pipe.enable_attention_slicing()

prompt = "a photo of an astronaut riding a horse on mars"

+image = pipe(prompt).images[0]

+image

```

@@ -48,10 +50,10 @@ If you're using **PyTorch 1.13**, you need to "prime" the pipeline with an addit

pipe.enable_attention_slicing()

prompt = "a photo of an astronaut riding a horse on mars"

-# First-time "warmup" pass if PyTorch version is 1.13

+ # First-time "warmup" pass if PyTorch version is 1.13

+ _ = pipe(prompt, num_inference_steps=1)

-# Results match those from the CPU device after the warmup pass.

+ # Results match those from the CPU device after the warmup pass.

image = pipe(prompt).images[0]

```

@@ -63,6 +65,7 @@ To prevent this from happening, we recommend *attention slicing* to reduce memor

```py

from diffusers import DiffusionPipeline

+import torch

pipeline = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, variant="fp16", use_safetensors=True).to("mps")

pipeline.enable_attention_slicing()

diff --git a/docs/source/en/optimization/onnx.md b/docs/source/en/optimization/onnx.md

index 20104b555543..4d352480a007 100644

--- a/docs/source/en/optimization/onnx.md

+++ b/docs/source/en/optimization/onnx.md

@@ -10,13 +10,12 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

specific language governing permissions and limitations under the License.

-->

-

# ONNX Runtime

🤗 [Optimum](https://github.com/huggingface/optimum) provides a Stable Diffusion pipeline compatible with ONNX Runtime. You'll need to install 🤗 Optimum with the following command for ONNX Runtime support:

```bash

-pip install optimum["onnxruntime"]

+pip install -q optimum["onnxruntime"]

```

This guide will show you how to use the Stable Diffusion and Stable Diffusion XL (SDXL) pipelines with ONNX Runtime.

@@ -50,7 +49,7 @@ optimum-cli export onnx --model runwayml/stable-diffusion-v1-5 sd_v15_onnx/

Then to perform inference (you don't have to specify `export=True` again):

-```python

+```python

from optimum.onnxruntime import ORTStableDiffusionPipeline

model_id = "sd_v15_onnx"

diff --git a/docs/source/en/optimization/open_vino.md b/docs/source/en/optimization/open_vino.md

index 606c2207bcda..29299786118a 100644

--- a/docs/source/en/optimization/open_vino.md

+++ b/docs/source/en/optimization/open_vino.md

@@ -10,14 +10,13 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

specific language governing permissions and limitations under the License.

-->

-

# OpenVINO

-🤗 [Optimum](https://github.com/huggingface/optimum-intel) provides Stable Diffusion pipelines compatible with OpenVINO to perform inference on a variety of Intel processors (see the [full list]((https://docs.openvino.ai/latest/openvino_docs_OV_UG_supported_plugins_Supported_Devices.html)) of supported devices).

+🤗 [Optimum](https://github.com/huggingface/optimum-intel) provides Stable Diffusion pipelines compatible with OpenVINO to perform inference on a variety of Intel processors (see the [full list](https://docs.openvino.ai/latest/openvino_docs_OV_UG_supported_plugins_Supported_Devices.html) of supported devices).

You'll need to install 🤗 Optimum Intel with the `--upgrade-strategy eager` option to ensure [`optimum-intel`](https://github.com/huggingface/optimum-intel) is using the latest version:

-```

+```bash

pip install --upgrade-strategy eager optimum["openvino"]

```

diff --git a/docs/source/en/optimization/tome.md b/docs/source/en/optimization/tome.md

index 66d69c6900cc..34726a4c79c2 100644

--- a/docs/source/en/optimization/tome.md

+++ b/docs/source/en/optimization/tome.md

@@ -14,18 +14,25 @@ specific language governing permissions and limitations under the License.

[Token merging](https://huggingface.co/papers/2303.17604) (ToMe) progressively merges redundant tokens/patches in the forward pass of a Transformer-based network, which can reduce the inference latency of [`StableDiffusionPipeline`].

+Install ToMe from `pip`:

+

+```bash

+pip install tomesd

+```

+

You can use ToMe from the [`tomesd`](https://github.com/dbolya/tomesd) library with the [`apply_patch`](https://github.com/dbolya/tomesd?tab=readme-ov-file#usage) function:

```diff

-from diffusers import StableDiffusionPipeline

-import tomesd

+ from diffusers import StableDiffusionPipeline

+ import torch

+ import tomesd

-pipeline = StableDiffusionPipeline.from_pretrained(

- "runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True,

-).to("cuda")

+ pipeline = StableDiffusionPipeline.from_pretrained(

+ "runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True,

+ ).to("cuda")

+ tomesd.apply_patch(pipeline, ratio=0.5)

-image = pipeline("a photo of an astronaut riding a horse on mars").images[0]

+ image = pipeline("a photo of an astronaut riding a horse on mars").images[0]

```

The `apply_patch` function exposes a number of [arguments](https://github.com/dbolya/tomesd#usage) to help strike a balance between pipeline inference speed and the quality of the generated tokens. The most important argument is `ratio`, which controls the number of tokens that are merged during the forward pass.

diff --git a/docs/source/en/optimization/torch2.0.md b/docs/source/en/optimization/torch2.0.md

index 1e07b876514f..4775fda0fcf9 100644

--- a/docs/source/en/optimization/torch2.0.md

+++ b/docs/source/en/optimization/torch2.0.md

@@ -10,7 +10,7 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

specific language governing permissions and limitations under the License.

-->

-# Torch 2.0

+# PyTorch 2.0

🤗 Diffusers supports the latest optimizations from [PyTorch 2.0](https://pytorch.org/get-started/pytorch-2.0/) which include:

@@ -48,7 +48,6 @@ In some cases - such as making the pipeline more deterministic or converting it

```diff

import torch

from diffusers import DiffusionPipeline

- from diffusers.models.attention_processor import AttnProcessor

pipe = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True).to("cuda")

+ pipe.unet.set_default_attn_processor()

@@ -110,17 +109,14 @@ for _ in range(3):

### Stable Diffusion image-to-image

-```python

+```python

from diffusers import StableDiffusionImg2ImgPipeline

-import requests

+from diffusers.utils import load_image

import torch

-from PIL import Image

-from io import BytesIO

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

-response = requests.get(url)

-init_image = Image.open(BytesIO(response.content)).convert("RGB")

+init_image = load_image(url)

init_image = init_image.resize((512, 512))

path = "runwayml/stable-diffusion-v1-5"

@@ -143,25 +139,16 @@ for _ in range(3):

### Stable Diffusion inpainting

-```python

+```python

from diffusers import StableDiffusionInpaintPipeline

-import requests

+from diffusers.utils import load_image

import torch

-from PIL import Image

-from io import BytesIO

-

-url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

-

-def download_image(url):

- response = requests.get(url)

- return Image.open(BytesIO(response.content)).convert("RGB")

-

img_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo.png"

mask_url = "https://raw.githubusercontent.com/CompVis/latent-diffusion/main/data/inpainting_examples/overture-creations-5sI6fQgYIuo_mask.png"

-init_image = download_image(img_url).resize((512, 512))

-mask_image = download_image(mask_url).resize((512, 512))

+init_image = load_image(img_url).resize((512, 512))

+mask_image = load_image(mask_url).resize((512, 512))

path = "runwayml/stable-diffusion-inpainting"

@@ -183,17 +170,14 @@ for _ in range(3):

### ControlNet

-```python

+```python

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

-import requests

+from diffusers.utils import load_image

import torch

-from PIL import Image

-from io import BytesIO

url = "https://raw.githubusercontent.com/CompVis/stable-diffusion/main/assets/stable-samples/img2img/sketch-mountains-input.jpg"

-response = requests.get(url)

-init_image = Image.open(BytesIO(response.content)).convert("RGB")

+init_image = load_image(url)

init_image = init_image.resize((512, 512))

path = "runwayml/stable-diffusion-v1-5"

@@ -221,26 +205,26 @@ for _ in range(3):

### DeepFloyd IF text-to-image + upscaling

-```python

+```python

from diffusers import DiffusionPipeline

import torch

run_compile = True # Set True / False

-pipe = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16, use_safetensors=True)

-pipe.to("cuda")

+pipe_1 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-I-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16, use_safetensors=True)

+pipe_1.to("cuda")

pipe_2 = DiffusionPipeline.from_pretrained("DeepFloyd/IF-II-M-v1.0", variant="fp16", text_encoder=None, torch_dtype=torch.float16, use_safetensors=True)

pipe_2.to("cuda")

pipe_3 = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-x4-upscaler", torch_dtype=torch.float16, use_safetensors=True)

pipe_3.to("cuda")

-pipe.unet.to(memory_format=torch.channels_last)

+pipe_1.unet.to(memory_format=torch.channels_last)

pipe_2.unet.to(memory_format=torch.channels_last)

pipe_3.unet.to(memory_format=torch.channels_last)

if run_compile:

- pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

+ pipe_1.unet = torch.compile(pipe_1.unet, mode="reduce-overhead", fullgraph=True)

pipe_2.unet = torch.compile(pipe_2.unet, mode="reduce-overhead", fullgraph=True)

pipe_3.unet = torch.compile(pipe_3.unet, mode="reduce-overhead", fullgraph=True)

@@ -250,9 +234,9 @@ prompt_embeds = torch.randn((1, 2, 4096), dtype=torch.float16)

neg_prompt_embeds = torch.randn((1, 2, 4096), dtype=torch.float16)

for _ in range(3):

- image = pipe(prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images

- image_2 = pipe_2(image=image, prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images

- image_3 = pipe_3(prompt=prompt, image=image, noise_level=100).images

+ image_1 = pipe_1(prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images

+ image_2 = pipe_2(image=image_1, prompt_embeds=prompt_embeds, negative_prompt_embeds=neg_prompt_embeds, output_type="pt").images

+ image_3 = pipe_3(prompt=prompt, image=image_1, noise_level=100).images

```

@@ -426,9 +410,9 @@ In the following tables, we report our findings in terms of the *number of itera

| IF | 9.26 | 9.2 | ❌ | 13.31 |

| SDXL - txt2img | 0.52 | 0.53 | - | - |

-## Notes

+## Notes

-* Follow this [PR](https://github.com/huggingface/diffusers/pull/3313) for more details on the environment used for conducting the benchmarks.

+* See this [PR](https://github.com/huggingface/diffusers/pull/3313) for more details on the environment used to run the benchmarks.

* For the DeepFloyd IF pipeline with batch sizes > 1, we only used a batch size > 1 for the first IF pipeline (text-to-image generation) and NOT for upscaling. That means the two upscaling pipelines received a batch size of 1.

*Thanks to [Horace He](https://github.com/Chillee) from the PyTorch team for helping us improve our support of `torch.compile()` in Diffusers.*

diff --git a/docs/source/en/quicktour.md b/docs/source/en/quicktour.md

index c5ead9829cdc..89792d5c05b3 100644

--- a/docs/source/en/quicktour.md

+++ b/docs/source/en/quicktour.md

@@ -257,7 +257,7 @@ To predict a slightly less noisy image, pass the following to the scheduler's [`

torch.Size([1, 3, 256, 256])

```

-The `less_noisy_sample` can be passed to the next `timestep` where it'll get even less noisy! Let's bring it all together now and visualize the entire denoising process.

+The `less_noisy_sample` can be passed to the next `timestep` where it'll get even less noisy! Let's bring it all together now and visualize the entire denoising process.

First, create a function that postprocesses and displays the denoised image as a `PIL.Image`:

diff --git a/docs/source/en/stable_diffusion.md b/docs/source/en/stable_diffusion.md

index 06eb5bf15f23..c0298eeeb3c1 100644

--- a/docs/source/en/stable_diffusion.md

+++ b/docs/source/en/stable_diffusion.md

@@ -9,12 +9,12 @@ Unless required by applicable law or agreed to in writing, software distributed

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

-

+

# Effective and efficient diffusion

[[open-in-colab]]

-Getting the [`DiffusionPipeline`] to generate images in a certain style or include what you want can be tricky. Often times, you have to run the [`DiffusionPipeline`] several times before you end up with an image you're happy with. But generating something out of nothing is a computationally intensive process, especially if you're running inference over and over again.

+Getting the [`DiffusionPipeline`] to generate images in a certain style or include what you want can be tricky. Oftentimes, you have to run the [`DiffusionPipeline`] several times before you end up with an image you're happy with. But generating something out of nothing is a computationally intensive process, especially if you're running inference over and over again.

This is why it's important to get the most *computational* (speed) and *memory* (GPU vRAM) efficiency from the pipeline to reduce the time between inference cycles so you can iterate faster.

@@ -68,7 +68,7 @@ image

-This process took ~30 seconds on a T4 GPU (it might be faster if your allocated GPU is better than a T4). By default, the [`DiffusionPipeline`] runs inference with full `float32` precision for 50 inference steps. You can speed this up by switching to a lower precision like `float16` or running fewer inference steps.

+This process took ~30 seconds on a T4 GPU (it might be faster if your allocated GPU is better than a T4). By default, the [`DiffusionPipeline`] runs inference with full `float32` precision for 50 inference steps. You can speed this up by switching to a lower precision like `float16` or running fewer inference steps.

Let's start by loading the model in `float16` and generating an image:

diff --git a/docs/source/en/training/lora.md b/docs/source/en/training/lora.md

index 28a9adf3ec61..7c13b7af9d7d 100644

--- a/docs/source/en/training/lora.md

+++ b/docs/source/en/training/lora.md

@@ -113,14 +113,15 @@ Load the LoRA weights from your finetuned model *on top of the base model weight

```py

>>> pipe.unet.load_attn_procs(lora_model_path)

>>> pipe.to("cuda")

-# use half the weights from the LoRA finetuned model and half the weights from the base model

+# use half the weights from the LoRA finetuned model and half the weights from the base model

>>> image = pipe(

... "A pokemon with blue eyes.", num_inference_steps=25, guidance_scale=7.5, cross_attention_kwargs={"scale": 0.5}

... ).images[0]

-# use the weights from the fully finetuned LoRA model

->>> image = pipe("A pokemon with blue eyes.", num_inference_steps=25, guidance_scale=7.5).images[0]

+# OR, use the weights from the fully finetuned LoRA model

+# >>> image = pipe("A pokemon with blue eyes.", num_inference_steps=25, guidance_scale=7.5).images[0]

+

>>> image.save("blue_pokemon.png")

```

@@ -225,17 +226,18 @@ Load the LoRA weights from your finetuned DreamBooth model *on top of the base m

```py

>>> pipe.unet.load_attn_procs(lora_model_path)

>>> pipe.to("cuda")

-# use half the weights from the LoRA finetuned model and half the weights from the base model

+# use half the weights from the LoRA finetuned model and half the weights from the base model

>>> image = pipe(

... "A picture of a sks dog in a bucket.",

... num_inference_steps=25,

... guidance_scale=7.5,

... cross_attention_kwargs={"scale": 0.5},

... ).images[0]

-# use the weights from the fully finetuned LoRA model

->>> image = pipe("A picture of a sks dog in a bucket.", num_inference_steps=25, guidance_scale=7.5).images[0]

+# OR, use the weights from the fully finetuned LoRA model

+# >>> image = pipe("A picture of a sks dog in a bucket.", num_inference_steps=25, guidance_scale=7.5).images[0]

+

>>> image.save("bucket-dog.png")

```
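Under the standard LoRA formulation, where an adapted linear layer computes `W @ x + scale * B @ (A @ x)`, a `scale` of 0.5 makes each layer's effective weight exactly the midpoint between the base weight (`scale=0.0`) and the fully fine-tuned LoRA weight (`scale=1.0`), which is what the "half the weights" comments above describe. The NumPy sketch below checks this for a single layer; the matrices, shapes, and the `effective_weight` helper are hypothetical illustrations, not Diffusers internals.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank = 8, 6, 2

W = rng.standard_normal((d_out, d_in))  # frozen base weight
A = rng.standard_normal((rank, d_in))   # hypothetical LoRA down-projection
B = rng.standard_normal((d_out, rank))  # hypothetical LoRA up-projection
x = rng.standard_normal(d_in)


def effective_weight(scale):
    """Weight a LoRA-adapted linear layer effectively applies at a given scale."""
    return W + scale * (B @ A)


base_w = effective_weight(0.0)  # LoRA switched off: the base model weight
full_w = effective_weight(1.0)  # full LoRA update
half_w = effective_weight(0.5)

# scale=0.5 is the midpoint of the base and fully fine-tuned weights
print(np.allclose(half_w, 0.5 * base_w + 0.5 * full_w))  # True
# applying the low-rank update at runtime matches using the effective weight
print(np.allclose(W @ x + 0.5 * (B @ (A @ x)), half_w @ x))  # True
```

Because the update is linear in `scale`, any value between 0 and 1 interpolates the weights in the same way.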

diff --git a/docs/source/en/tutorials/using_peft_for_inference.md b/docs/source/en/tutorials/using_peft_for_inference.md

index 2e3337519caa..da69b712a989 100644

--- a/docs/source/en/tutorials/using_peft_for_inference.md

+++ b/docs/source/en/tutorials/using_peft_for_inference.md

@@ -12,9 +12,9 @@ specific language governing permissions and limitations under the License.

[[open-in-colab]]

-# Inference with PEFT

+# Load LoRAs for inference

-There are many adapters trained in different styles to achieve different effects. You can even combine multiple adapters to create new and unique images. With the 🤗 [PEFT](https://huggingface.co/docs/peft/index) integration in 🤗 Diffusers, it is really easy to load and manage adapters for inference. In this guide, you'll learn how to use different adapters with [Stable Diffusion XL (SDXL)](../api/pipelines/stable_diffusion/stable_diffusion_xl) for inference.

+There are many adapters (with LoRAs being the most common type) trained in different styles to achieve different effects. You can even combine multiple adapters to create new and unique images. With the 🤗 [PEFT](https://huggingface.co/docs/peft/index) integration in 🤗 Diffusers, it is really easy to load and manage adapters for inference. In this guide, you'll learn how to use different adapters with [Stable Diffusion XL (SDXL)](../api/pipelines/stable_diffusion/stable_diffusion_xl) for inference.

Throughout this guide, you'll use LoRA as the main adapter technique, so we'll use the terms LoRA and adapter interchangeably. You should have some familiarity with LoRA, and if you don't, we welcome you to check out the [LoRA guide](https://huggingface.co/docs/peft/conceptual_guides/lora).

@@ -22,9 +22,8 @@ Let's first install all the required libraries.

```bash

!pip install -q transformers accelerate

-# Will be updated once the stable releases are done.

-!pip install -q git+https://github.com/huggingface/peft.git

-!pip install -q git+https://github.com/huggingface/diffusers.git

+!pip install peft

+!pip install diffusers

```

Now, let's load a pipeline with an SDXL checkpoint:

@@ -165,3 +164,22 @@ list_adapters_component_wise = pipe.get_list_adapters()

list_adapters_component_wise

{"text_encoder": ["toy", "pixel"], "unet": ["toy", "pixel"], "text_encoder_2": ["toy", "pixel"]}

```

+

+## Fusing adapters into the model

+

+You can use PEFT to fuse multiple adapters directly into the model weights (both UNet and text encoder) with the [`~diffusers.loaders.LoraLoaderMixin.fuse_lora`] method, which can speed up inference and lower VRAM usage. Call [`~diffusers.loaders.LoraLoaderMixin.unfuse_lora`] to restore the original weights.

+

+```py

+pipe.load_lora_weights("nerijs/pixel-art-xl", weight_name="pixel-art-xl.safetensors", adapter_name="pixel")

+pipe.load_lora_weights("CiroN2022/toy-face", weight_name="toy_face_sdxl.safetensors", adapter_name="toy")

+

+pipe.set_adapters(["pixel", "toy"], adapter_weights=[0.5, 1.0])

+# Fuse the LoRAs into the model weights (UNet and text encoder)

+pipe.fuse_lora()

+

+prompt = "toy_face of a hacker with a hoodie, pixel art"

+image = pipe(prompt, num_inference_steps=30, generator=torch.manual_seed(0)).images[0]

+

+# Unfuse the LoRAs to restore the original model weights

+pipe.unfuse_lora()

+```
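Fusing is possible because each LoRA update is additive: a weighted adapter contributes `weight * (B @ A)` to a layer's weight, so the updates can be folded into the base weight once instead of being computed as separate branches on every forward pass. The NumPy sketch below illustrates that arithmetic for a single linear layer, assuming two hypothetical adapters weighted like `adapter_weights=[0.5, 1.0]` above; the matrices, shapes, and helper names are illustrative only, and this is not how `fuse_lora` is implemented internally.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, rank = 8, 6, 2

W = rng.standard_normal((d_out, d_in))  # base weight
W_original = W.copy()
# two hypothetical adapters, each stored as a pair of low-rank matrices (B, A)
adapters = {
    "pixel": (rng.standard_normal((d_out, rank)), rng.standard_normal((rank, d_in))),
    "toy": (rng.standard_normal((d_out, rank)), rng.standard_normal((rank, d_in))),
}
adapter_weights = {"pixel": 0.5, "toy": 1.0}


def unfused_forward(x):
    """Base layer plus every weighted adapter, computed as separate branches."""
    out = W_original @ x
    for name, (B, A) in adapters.items():
        out = out + adapter_weights[name] * (B @ (A @ x))
    return out


def merged_delta():
    """Sum of the weighted low-rank updates as one dense matrix."""
    return sum(adapter_weights[n] * (B @ A) for n, (B, A) in adapters.items())


x = rng.standard_normal(d_in)
expected = unfused_forward(x)

W = W + merged_delta()  # "fuse": fold the updates into the weight
fused_out = W @ x       # a single matmul, no adapter branches
W = W - merged_delta()  # "unfuse": subtract the same updates again

print(np.allclose(fused_out, expected))  # True
print(np.allclose(W, W_original))        # True
```

Unfusing recovers the original weight only up to floating-point rounding, which is why the check uses `np.allclose` rather than exact equality.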

diff --git a/docs/source/en/using-diffusers/callback.md b/docs/source/en/using-diffusers/callback.md

index 2293dc1f60c6..b4f16bda55eb 100644

--- a/docs/source/en/using-diffusers/callback.md

+++ b/docs/source/en/using-diffusers/callback.md

@@ -10,18 +10,18 @@ an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express o

specific language governing permissions and limitations under the License.

-->

-# Using callback

+# Using callback

[[open-in-colab]]

-Most 🤗 Diffusers pipeline now accept a `callback_on_step_end` argument that allows you to change the default behavior of denoising loop with custom defined functions. Here is an example of a callback function we can write to disable classifier free guidance after 40% of inference steps to save compute with minimum tradeoff in performance.

+Most 🤗 Diffusers pipelines now accept a `callback_on_step_end` argument that allows you to change the default behavior of the denoising loop with custom-defined functions. Here is an example of a callback function we can write to disable classifier-free guidance after 40% of inference steps to save compute with a minimal tradeoff in performance.

```python

-def callback_dynamic_cfg(pipe, step_index, timestep, callback_kwargs):

+def callback_dynamic_cfg(pipe, step_index, timestep, callback_kwargs):

# adjust the batch_size of prompt_embeds according to guidance_scale

if step_index == int(pipe.num_timesteps * 0.4):

prompt_embeds = callback_kwargs["prompt_embeds"]

- prompt_embeds =prompt_embeds.chunk(2)[-1]

+ prompt_embeds = prompt_embeds.chunk(2)[-1]

# update guidance_scale and prompt_embeds

pipe._guidance_scale = 0.0

@@ -34,9 +34,9 @@ Your callback function has below arguments:

* `step_index` and `timestep` tell you where you are in the denoising loop. In our example, we use `step_index` to decide when to turn off CFG.

* `callback_kwargs` is a dict that contains tensor variables you can modify during the denoising loop. It only includes variables specified in the `callback_on_step_end_tensor_inputs` argument passed to the pipeline's `__call__` method. Different pipelines may use different sets of variables so please check the pipeline class's `_callback_tensor_inputs` attribute for the list of variables that you can modify. Common variables include `latents` and `prompt_embeds`. In our example, we need to adjust the batch size of `prompt_embeds` after setting `guidance_scale` to be `0` in order for it to work properly.

-You can pass the callback function as `callback_on_step_end` argument to the pipeline along with `callback_on_step_end_tensor_inputs`.

+You can pass the callback function as the `callback_on_step_end` argument to the pipeline along with `callback_on_step_end_tensor_inputs`.

-```

+```python

import torch

from diffusers import StableDiffusionPipeline

@@ -46,7 +46,7 @@ pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

generator = torch.Generator(device="cuda").manual_seed(1)

-out= pipe(prompt, generator=generator, callback_on_step_end = callback_custom_cfg, callback_on_step_end_tensor_inputs=['prompt_embeds'])

+out = pipe(prompt, generator=generator, callback_on_step_end=callback_dynamic_cfg, callback_on_step_end_tensor_inputs=['prompt_embeds'])

out.images[0].save("out_custom_cfg.png")

```

@@ -55,6 +55,6 @@ Your callback function will be executed at the end of each denoising step and mo

-Currently we only support `callback_on_step_end`. If you have a solid use case and require a callback function with a different execution point, please open an [feature request](https://github.com/huggingface/diffusers/issues/new/choose) so we can add it!

+Currently, we only support `callback_on_step_end`. If you have a solid use case and require a callback function with a different execution point, please open a [Feature Request](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&projects=&template=feature_request.md&title=) so we can add it!

-

\ No newline at end of file

+
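To see the callback contract without loading a model, here is a toy sketch of the denoising loop: the callback runs at the end of each step, receives `(pipe, step_index, timestep, callback_kwargs)`, and must return `callback_kwargs`. `ToyPipeline`, `toy_dynamic_cfg`, and the string "embeddings" are hypothetical stand-ins for a real pipeline and tensors. The sketch assumes, as in Stable Diffusion pipelines, that the unconditional embeddings are concatenated before the text-conditioned ones, which is why keeping the second half mirrors `prompt_embeds.chunk(2)[-1]`.

```python
class ToyPipeline:
    """Hypothetical stand-in that only mimics the attributes the callback touches."""

    def __init__(self, num_timesteps, guidance_scale=7.5):
        self.num_timesteps = num_timesteps
        self._guidance_scale = guidance_scale
        self.history = []  # (step_index, guidance_scale, batch size of prompt_embeds)

    def __call__(self, prompt_embeds, callback_on_step_end=None):
        callback_kwargs = {"prompt_embeds": prompt_embeds}
        for step_index in range(self.num_timesteps):
            timestep = self.num_timesteps - 1 - step_index  # toy descending timestep
            # a real pipeline would run the UNet and scheduler step here
            self.history.append(
                (step_index, self._guidance_scale, len(callback_kwargs["prompt_embeds"]))
            )
            if callback_on_step_end is not None:
                # runs at the end of each step and returns the (possibly modified) kwargs
                callback_kwargs = callback_on_step_end(self, step_index, timestep, callback_kwargs)
        return callback_kwargs


def toy_dynamic_cfg(pipe, step_index, timestep, callback_kwargs):
    """Toy version of callback_dynamic_cfg: turn off CFG after 40% of the steps."""
    if step_index == int(pipe.num_timesteps * 0.4):
        prompt_embeds = callback_kwargs["prompt_embeds"]
        # keep only the second (text-conditioned) half, like prompt_embeds.chunk(2)[-1]
        callback_kwargs["prompt_embeds"] = prompt_embeds[len(prompt_embeds) // 2 :]
        pipe._guidance_scale = 0.0
    return callback_kwargs


pipe = ToyPipeline(num_timesteps=10)
result = pipe(["negative", "positive"], callback_on_step_end=toy_dynamic_cfg)
print(result["prompt_embeds"])           # ['positive']
print(pipe.history[4], pipe.history[5])  # (4, 7.5, 2) (5, 0.0, 1)
```

Steps 0 to 4 run with guidance and a doubled batch; from step 5 onward, only the conditional embeddings remain and guidance is off.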

diff --git a/docs/source/en/using-diffusers/conditional_image_generation.md b/docs/source/en/using-diffusers/conditional_image_generation.md

index d07658e4f58e..9832f53cffe6 100644

--- a/docs/source/en/using-diffusers/conditional_image_generation.md