
-audio_sample = ds[3]

+## MagicMix

-text = audio_sample["text"].lower()

-speech_data = audio_sample["audio"]["array"]

+[MagicMix](https://huggingface.co/papers/2210.16056) is a pipeline that mixes an image and a text prompt to generate a new image that preserves the structure of the original image. The `mix_factor` determines how much influence the prompt has on the layout generation, `kmin` controls the number of steps in the content generation process, and `kmax` determines how much of the original image's layout information is kept.
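As a rough, dependency-free illustration of how the three parameters could interact, the sketch below assumes that `kmax` and `kmin` are fractions of the total number of denoising steps. Denoising starts from the original image noised to the `kmax` level, the steps down to `kmin` are layout steps in which the denoised latent is blended with a re-noised copy of the image latent using `mix_factor`, and the remaining steps are ordinary prompt-conditioned content steps. The helpers `magicmix_phases` and `blend_layout` are hypothetical and are not part of the `magic_mix` community pipeline; latents are modeled as plain lists of floats.

```python
def magicmix_phases(num_steps, kmin, kmax):
    """Split denoising step indices into layout and content phases (sketch).

    Assumes step index 0 is the noisiest step and 0 <= kmin < kmax <= 1.
    """
    if not 0.0 <= kmin < kmax <= 1.0:
        raise ValueError("expected 0 <= kmin < kmax <= 1")
    # Denoising starts at the noise level given by kmax; earlier steps are skipped.
    layout_start = num_steps - round(kmax * num_steps)
    # The last round(kmin * num_steps) steps generate content from the prompt only.
    content_start = num_steps - round(kmin * num_steps)
    layout_steps = list(range(layout_start, content_start))
    content_steps = list(range(content_start, num_steps))
    return layout_steps, content_steps


def blend_layout(denoised, noised_image, mix_factor):
    """Blend prompt-driven latents with re-noised image latents, elementwise (hypothetical)."""
    return [mix_factor * d + (1.0 - mix_factor) * n for d, n in zip(denoised, noised_image)]


layout, content = magicmix_phases(50, kmin=0.3, kmax=0.5)
print(len(layout), len(content))  # 10 15
print(blend_layout([1.0, 0.0], [0.0, 1.0], mix_factor=0.5))  # [0.5, 0.5]
```

With 50 steps, `kmin=0.3`, and `kmax=0.5`, this sketch skips the first 25 steps, runs 10 layout steps, and finishes with 15 content steps; raising `mix_factor` gives the prompt-driven latent more weight during the layout phase.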

-model = WhisperForConditionalGeneration.from_pretrained("openai/whisper-small").to(device)

-processor = WhisperProcessor.from_pretrained("openai/whisper-small")

+```py

+from diffusers import DiffusionPipeline, DDIMScheduler

+from diffusers.utils import load_image

-diffuser_pipeline = DiffusionPipeline.from_pretrained(

+pipeline = DiffusionPipeline.from_pretrained(

"CompVis/stable-diffusion-v1-4",

- custom_pipeline="speech_to_image_diffusion",

- speech_model=model,

- speech_processor=processor,

- torch_dtype=torch.float16,

- use_safetensors=True,

-)

-

-diffuser_pipeline.enable_attention_slicing()

-diffuser_pipeline = diffuser_pipeline.to(device)

+ custom_pipeline="magic_mix",

+ scheduler=DDIMScheduler.from_pretrained("CompVis/stable-diffusion-v1-4", subfolder="scheduler"),

+).to("cuda")

-output = diffuser_pipeline(speech_data)

-plt.imshow(output.images[0])

+img = load_image("https://user-images.githubusercontent.com/59410571/209578593-141467c7-d831-4792-8b9a-b17dc5e47816.jpg")

+mix_img = pipeline(img, prompt="bed", kmin=0.3, kmax=0.5, mix_factor=0.5)

+mix_img

```

-This example produces the following image:

-

\ No newline at end of file


\ No newline at end of file

diff --git a/docs/source/en/using-diffusers/loading_adapters.md b/docs/source/en/using-diffusers/loading_adapters.md

new file mode 100644

index 000000000000..0514688721d1

--- /dev/null

+++ b/docs/source/en/using-diffusers/loading_adapters.md

@@ -0,0 +1,300 @@

+

+

+# Load adapters

+

+[[open-in-colab]]

+

+There are several [training](../training/overview) techniques for personalizing diffusion models to generate images of a specific subject or images in certain styles. Each of these training methods produces a different type of adapter. Some of the adapters generate an entirely new model, while other adapters only modify a smaller set of embeddings or weights. This means the loading process for each adapter is also different.

+

+This guide will show you how to load DreamBooth, textual inversion, and LoRA weights.

+

+

+*Figures: image prompt; image and text prompt mix*

+

+

+## Textual inversion

+

+[Textual inversion](https://textual-inversion.github.io/) is very similar to DreamBooth, and it can also personalize a diffusion model to generate certain concepts (styles, objects) from just a few images. This method works by training and finding new embeddings that represent the images you provide with a special word in the prompt. As a result, the diffusion model weights stay the same, and the training process produces a relatively tiny (a few KBs) file.

+

+Because textual inversion creates embeddings, it cannot be used on its own like DreamBooth and requires another model.
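To see why the resulting file is so small, consider that textual inversion only learns a new entry in the text encoder's token-embedding table. The sketch below models that table as a plain Python dictionary. It assumes a 768-dimensional embedding (the hidden size of the CLIP ViT-L/14 text encoder used by Stable Diffusion v1.x) stored in `float32`; the token `<my-concept>` and the helper `add_concept` are hypothetical.

```python
import random

EMBED_DIM = 768        # assumed text-encoder hidden size (CLIP ViT-L/14)
BYTES_PER_FLOAT32 = 4

# Toy token-embedding table: token -> vector. Existing entries are never modified.
embeddings = {"cat": [0.0] * EMBED_DIM, "dog": [0.0] * EMBED_DIM}


def add_concept(table, token, vector):
    """Register a learned embedding under a new special token (hypothetical helper)."""
    if token in table:
        raise ValueError(f"{token!r} already exists in the vocabulary")
    if len(vector) != EMBED_DIM:
        raise ValueError("embedding has the wrong dimension")
    table[token] = vector


random.seed(0)
learned = [random.gauss(0.0, 0.02) for _ in range(EMBED_DIM)]  # stand-in for a trained vector
add_concept(embeddings, "<my-concept>", learned)

size_bytes = EMBED_DIM * BYTES_PER_FLOAT32
print(size_bytes)  # 3072 bytes, about 3 KB for a single learned vector
```

This is also why textual inversion needs a base model: the file only adds a vocabulary entry, while everything that turns that entry into an image still comes from the pretrained pipeline.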

+

+```py

+from diffusers import AutoPipelineForText2Image

+import torch

+

+pipeline = AutoPipelineForText2Image.from_pretrained("runwayml/stable-diffusion-v1-5", torch_dtype=torch.float16).to("cuda")

+```

+

+Now you can load the textual inversion embeddings with the [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] method and generate some images. Let's load the [sd-concepts-library/gta5-artwork](https://huggingface.co/sd-concepts-library/gta5-artwork) embeddings; you'll need to include the concept's special word in your prompt to trigger it.

+

+

+Textual inversion can also be trained on undesirable things to create *negative embeddings* that discourage a model from generating images with those undesirable things, like blurry images or extra fingers on a hand. This can be an easy way to quickly improve your prompt. You'll also load the embeddings with [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`], but this time, you'll need two more parameters:

+

+- `weight_name`: specifies the weight file to load if the file was saved in the 🤗 Diffusers format with a specific name or if the file is stored in the A1111 format

+- `token`: specifies the special word to use in the prompt to trigger the embeddings

+

+Let's load the [sayakpaul/EasyNegative-test](https://huggingface.co/sayakpaul/EasyNegative-test) embeddings:

+

+```py

+pipeline.load_textual_inversion(

+ "sayakpaul/EasyNegative-test", weight_name="EasyNegative.safetensors", token="EasyNegative"

+)

+```

+

+Now you can use the `token` to generate an image with the negative embeddings:

+

+```py

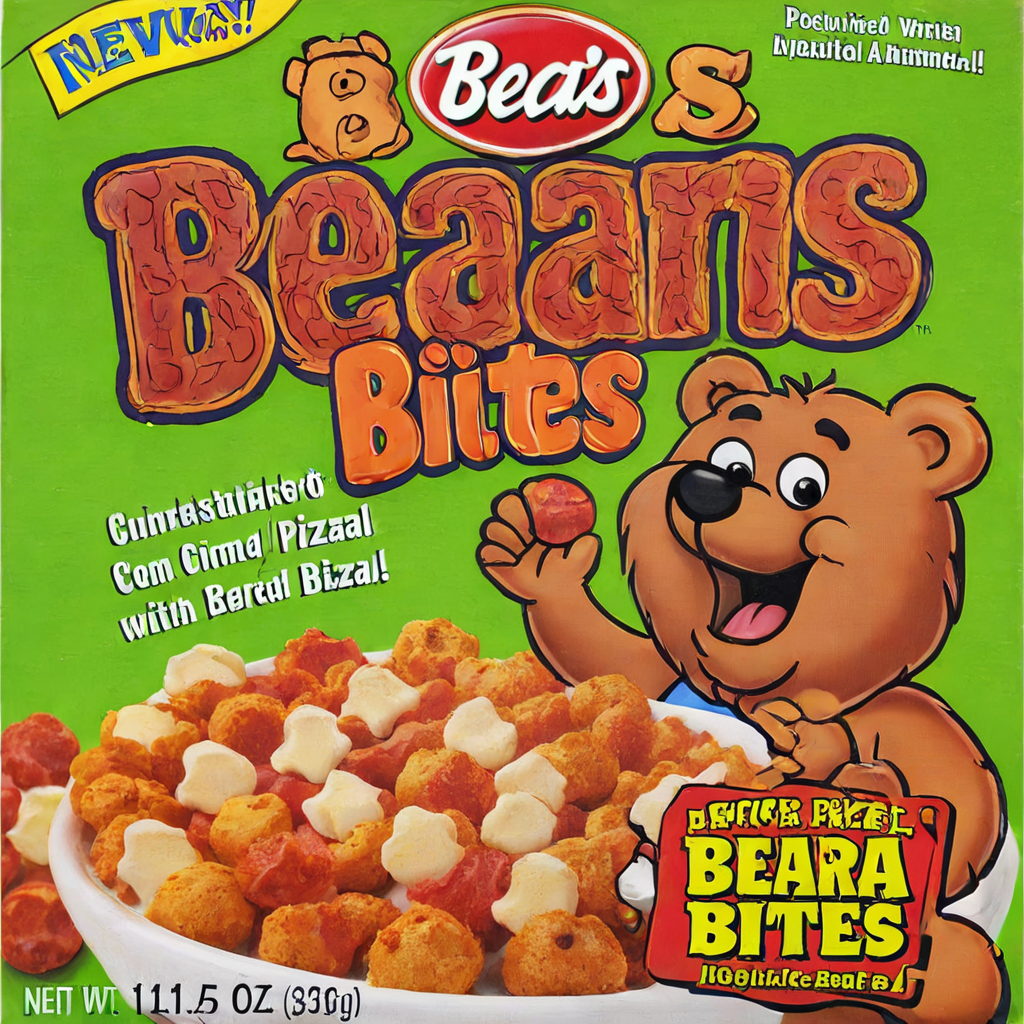

+prompt = "A cute brown bear eating a slice of pizza, stunning color scheme, masterpiece, illustration, EasyNegative"

+negative_prompt = "EasyNegative"

+

+image = pipeline(prompt, negative_prompt=negative_prompt, num_inference_steps=50).images[0]

+```
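Why does placing `EasyNegative` in `negative_prompt` help? In Stable Diffusion pipelines, the negative prompt's embeddings take the place of the unconditional (empty-prompt) embeddings in classifier-free guidance, so each denoising step pushes the prediction away from whatever the negative embedding describes. The scalar sketch below shows only that guidance arithmetic; `guided_noise` is a hypothetical name and the inputs are toy values, not real noise predictions.

```python
def guided_noise(noise_negative, noise_positive, guidance_scale=7.5):
    """Classifier-free guidance where the negative prompt replaces the unconditional branch."""
    return [
        n + guidance_scale * (p - n)  # start from the negative prediction, move toward the positive one
        for n, p in zip(noise_negative, noise_positive)
    ]


# Toy per-element noise predictions for the negative and positive prompts.
negative = [0.0, 1.0]
positive = [1.0, 1.0]
print(guided_noise(negative, positive))  # [7.5, 1.0]
```

With `guidance_scale=1.0` the result equals the positive prediction; larger values move the output further away from the negative one, and elements where both predictions agree are left unchanged.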

+


+

+

+## LoRA

+

+[Low-Rank Adaptation (LoRA)](https://huggingface.co/papers/2106.09685) is a popular training technique because it is fast and generates smaller file sizes (a couple hundred MBs). Like the other methods in this guide, LoRA can train a model to learn new styles from just a few images. It works by inserting new weights into the diffusion model and then only the new weights are trained instead of the entire model. This makes LoRAs faster to train and easier to store.
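The smaller file size follows from the low-rank structure: instead of updating a full `d × k` weight matrix `W`, LoRA trains two small matrices `B` (`d × r`) and `A` (`r × k`) and adds their scaled product to the frozen `W`. The pure-Python sketch below counts the trainable parameters and applies the update to a tiny matrix. The sizes (768 × 768, rank 4) are illustrative assumptions, and the `alpha / rank` scaling follows the LoRA paper's convention rather than a specific 🤗 Diffusers code path.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * B @ A as a new matrix; W itself is not modified."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d, k, rank):
    """Parameters trained by full finetuning vs. by a rank-`rank` LoRA."""
    return d * k, rank * (d + k)


full, lora = trainable_params(768, 768, rank=4)
print(full, lora)  # 589824 6144

# Tiny example: a frozen 2x2 weight with a rank-1 update.
w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [2.0]]      # d x r
a = [[0.5, 0.5]]        # r x k
print(lora_merge(w, b, a, alpha=1.0, rank=1))  # [[1.5, 0.5], [1.0, 2.0]]
```

Under these assumptions the LoRA trains fewer than 1.1% of the layer's parameters, which is what keeps the saved file small.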

+


+

+

+The [`~loaders.LoraLoaderMixin.load_lora_weights`] method loads LoRA weights into both the UNet and text encoder. It is the preferred way for loading LoRAs because it can handle cases where:

+

+- the LoRA weights don't have separate identifiers for the UNet and text encoder

+- the LoRA weights have separate identifiers for the UNet and text encoder
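To make the two cases concrete, here is a hypothetical sketch of how a loader could route a flat LoRA state dict: keys that carry a `unet.` or `text_encoder.` prefix are split by component, and keys without such an identifier are assumed to belong to the UNet. The prefixes, the fallback rule, and the function name are assumptions for illustration; the real key handling in `load_lora_weights` supports more formats than this.

```python
def split_lora_state_dict(state_dict):
    """Group LoRA weights by the component named in each key's prefix (hypothetical sketch)."""
    groups = {"unet": {}, "text_encoder": {}}
    for key, value in state_dict.items():
        component, _, rest = key.partition(".")
        if component in groups and rest:
            # Key has an explicit identifier: strip it and file under that component.
            groups[component][rest] = value
        else:
            # No component identifier: assume the weight targets the UNet.
            groups["unet"][key] = value
    return groups


# Illustrative key names only; real LoRA checkpoints use longer module paths.
state_dict = {
    "unet.mid_block.lora.up.weight": 1,
    "text_encoder.layers.0.lora.down.weight": 2,
    "down_blocks.0.lora.up.weight": 3,
}
groups = split_lora_state_dict(state_dict)
print(sorted(groups["unet"]))  # ['down_blocks.0.lora.up.weight', 'mid_block.lora.up.weight']
```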

+

+But if you only need to load LoRA weights into the UNet, then you can use the [`~loaders.UNet2DConditionLoadersMixin.load_attn_procs`] method. Let's load the [jbilcke-hf/sdxl-cinematic-1](https://huggingface.co/jbilcke-hf/sdxl-cinematic-1) LoRA:

+

+```py

+from diffusers import AutoPipelineForText2Image

+import torch

+

+pipeline = AutoPipelineForText2Image.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16).to("cuda")

+pipeline.unet.load_attn_procs("jbilcke-hf/sdxl-cinematic-1", weight_name="pytorch_lora_weights.safetensors")

+

+# use cnmt in the prompt to trigger the LoRA

+prompt = "A cute cnmt eating a slice of pizza, stunning color scheme, masterpiece, illustration"

+image = pipeline(prompt).images[0]

+```

+


+

+

+

+

+## Supported pipelines

+

+| Pipeline | Paper/Repository | Tasks |

+|---|---|:---:|

+| [alt_diffusion](./api/pipelines/alt_diffusion) | [AltCLIP: Altering the Language Encoder in CLIP for Extended Language Capabilities](https://arxiv.org/abs/2211.06679) | Image-to-Image Text-Guided Generation |

+| [audio_diffusion](./api/pipelines/audio_diffusion) | [Audio Diffusion](https://github.com/teticio/audio-diffusion.git) | Unconditional Audio Generation |

+| [controlnet](./api/pipelines/controlnet) | [Adding Conditional Control to Text-to-Image Diffusion Models](https://arxiv.org/abs/2302.05543) | Image-to-Image Text-Guided Generation |

+| [cycle_diffusion](./api/pipelines/cycle_diffusion) | [Unifying Diffusion Models' Latent Space, with Applications to CycleDiffusion and Guidance](https://arxiv.org/abs/2210.05559) | Image-to-Image Text-Guided Generation |

+| [dance_diffusion](./api/pipelines/dance_diffusion) | [Dance Diffusion](https://github.com/williamberman/diffusers.git) | Unconditional Audio Generation |

+| [ddpm](./api/pipelines/ddpm) | [Denoising Diffusion Probabilistic Models](https://arxiv.org/abs/2006.11239) | Unconditional Image Generation |

+| [ddim](./api/pipelines/ddim) | [Denoising Diffusion Implicit Models](https://arxiv.org/abs/2010.02502) | Unconditional Image Generation |

+| [if](./if) | [**IF**](./api/pipelines/if) | Image Generation |

+| [if_img2img](./if) | [**IF**](./api/pipelines/if) | Image-to-Image Generation |

+| [if_inpainting](./if) | [**IF**](./api/pipelines/if) | Image-to-Image Generation |

+| [latent_diffusion](./api/pipelines/latent_diffusion) | [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752)| Text-to-Image Generation |

+| [latent_diffusion](./api/pipelines/latent_diffusion) | [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752)| Super Resolution Image-to-Image |

+| [latent_diffusion_uncond](./api/pipelines/latent_diffusion_uncond) | [High-Resolution Image Synthesis with Latent Diffusion Models](https://arxiv.org/abs/2112.10752) | Unconditional Image Generation |

+| [paint_by_example](./api/pipelines/paint_by_example) | [Paint by Example: Exemplar-based Image Editing with Diffusion Models](https://arxiv.org/abs/2211.13227) | Image-Guided Image Inpainting |

+| [pndm](./api/pipelines/pndm) | [Pseudo Numerical Methods for Diffusion Models on Manifolds](https://arxiv.org/abs/2202.09778) | Unconditional Image Generation |

+| [score_sde_ve](./api/pipelines/score_sde_ve) | [Score-Based Generative Modeling through Stochastic Differential Equations](https://openreview.net/forum?id=PxTIG12RRHS) | Unconditional Image Generation |

+| [score_sde_vp](./api/pipelines/score_sde_vp) | [Score-Based Generative Modeling through Stochastic Differential Equations](https://openreview.net/forum?id=PxTIG12RRHS) | Unconditional Image Generation |

+| [semantic_stable_diffusion](./api/pipelines/semantic_stable_diffusion) | [Semantic Guidance](https://arxiv.org/abs/2301.12247) | Text-Guided Generation |

+| [stable_diffusion_adapter](./api/pipelines/stable_diffusion/adapter) | [**T2I-Adapter**](https://arxiv.org/abs/2302.08453) | Image-to-Image Text-Guided Generation |

+| [stable_diffusion_text2img](./api/pipelines/stable_diffusion/text2img) | [Stable Diffusion](https://stability.ai/blog/stable-diffusion-public-release) | Text-to-Image Generation |

+| [stable_diffusion_img2img](./api/pipelines/stable_diffusion/img2img) | [Stable Diffusion](https://stability.ai/blog/stable-diffusion-public-release) | Image-to-Image Text-Guided Generation |

+| [stable_diffusion_inpaint](./api/pipelines/stable_diffusion/inpaint) | [Stable Diffusion](https://stability.ai/blog/stable-diffusion-public-release) | Text-Guided Image Inpainting |

+| [stable_diffusion_panorama](./api/pipelines/stable_diffusion/panorama) | [MultiDiffusion](https://multidiffusion.github.io/) | Text-to-Panorama Generation |

+| [stable_diffusion_pix2pix](./api/pipelines/stable_diffusion/pix2pix) | [InstructPix2Pix: Learning to Follow Image Editing Instructions](https://arxiv.org/abs/2211.09800) | Text-Guided Image Editing |

+| [stable_diffusion_pix2pix_zero](./api/pipelines/stable_diffusion/pix2pix_zero) | [Zero-shot Image-to-Image Translation](https://pix2pixzero.github.io/) | Text-Guided Image Editing |

+| [stable_diffusion_attend_and_excite](./api/pipelines/stable_diffusion/attend_and_excite) | [Attend-and-Excite: Attention-Based Semantic Guidance for Text-to-Image Diffusion Models](https://arxiv.org/abs/2301.13826) | Text-to-Image Generation |

+| [stable_diffusion_self_attention_guidance](./api/pipelines/stable_diffusion/self_attention_guidance) | [Improving Sample Quality of Diffusion Models Using Self-Attention Guidance](https://arxiv.org/abs/2210.00939) | Text-to-Image Generation, Unconditional Image Generation |

+| [stable_diffusion_image_variation](./stable_diffusion/image_variation) | [Stable Diffusion Image Variations](https://github.com/LambdaLabsML/lambda-diffusers#stable-diffusion-image-variations) | Image-to-Image Generation |

+| [stable_diffusion_latent_upscale](./stable_diffusion/latent_upscale) | [Stable Diffusion Latent Upscaler](https://twitter.com/StabilityAI/status/1590531958815064065) | Text-Guided Super Resolution Image-to-Image |

+| [stable_diffusion_model_editing](./api/pipelines/stable_diffusion/model_editing) | [Editing Implicit Assumptions in Text-to-Image Diffusion Models](https://time-diffusion.github.io/) | Text-to-Image Model Editing |

+| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [Stable Diffusion 2](https://stability.ai/blog/stable-diffusion-v2-release) | Text-to-Image Generation |

+| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [Stable Diffusion 2](https://stability.ai/blog/stable-diffusion-v2-release) | Text-Guided Image Inpainting |

+| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [Depth-Conditional Stable Diffusion](https://github.com/Stability-AI/stablediffusion#depth-conditional-stable-diffusion) | Depth-to-Image Generation |

+| [stable_diffusion_2](./api/pipelines/stable_diffusion_2) | [Stable Diffusion 2](https://stability.ai/blog/stable-diffusion-v2-release) | Text-Guided Super Resolution Image-to-Image |

+| [stable_diffusion_safe](./api/pipelines/stable_diffusion_safe) | [Safe Stable Diffusion](https://arxiv.org/abs/2211.05105) | Text-Guided Generation |

+| [stable_unclip](./stable_unclip) | Stable unCLIP | Text-to-Image Generation |

+| [stable_unclip](./stable_unclip) | Stable unCLIP | Image-to-Image Text-Guided Generation |

+| [stochastic_karras_ve](./api/pipelines/stochastic_karras_ve) | [Elucidating the Design Space of Diffusion-Based Generative Models](https://arxiv.org/abs/2206.00364) | Unconditional Image Generation |

+| [text_to_video_sd](./api/pipelines/text_to_video) | [Modelscope's Text-to-video-synthesis Model in Open Domain](https://modelscope.cn/models/damo/text-to-video-synthesis/summary) | Text-to-Video Generation |

+| [unclip](./api/pipelines/unclip) | [Hierarchical Text-Conditional Image Generation with CLIP Latents](https://arxiv.org/abs/2204.06125) (implementation by [kakaobrain](https://github.com/kakaobrain/karlo)) | Text-to-Image Generation |

+| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Text-to-Image Generation |

+| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Image Variations Generation |

+| [versatile_diffusion](./api/pipelines/versatile_diffusion) | [Versatile Diffusion: Text, Images and Variations All in One Diffusion Model](https://arxiv.org/abs/2211.08332) | Dual Image and Text Guided Generation |

+| [vq_diffusion](./api/pipelines/vq_diffusion) | [Vector Quantized Diffusion Model for Text-to-Image Synthesis](https://arxiv.org/abs/2111.14822) | Text-to-Image Generation |

+| [stable_diffusion_ldm3d](./api/pipelines/stable_diffusion/ldm3d_diffusion) | [LDM3D: Latent Diffusion Model for 3D](https://arxiv.org/abs/2305.10853) | Text-to-Image and Depth Generation |

diff --git a/docs/source/ja/installation.md b/docs/source/ja/installation.md

new file mode 100644

index 000000000000..dbfd19d6cb7a

--- /dev/null

+++ b/docs/source/ja/installation.md

@@ -0,0 +1,145 @@

+

+

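+# Installation
+
+Install 🤗 Diffusers for whichever deep learning library you're working with.
+
+🤗 Diffusers is tested on Python 3.8+, PyTorch 1.7.0+, and Flax. Follow the installation instructions below for the deep learning library you are using:
+
+- [PyTorch](https://pytorch.org/get-started/locally/) installation instructions
+- [Flax](https://flax.readthedocs.io/en/latest/) installation instructions
+
+## Install with pip
+
+You should install 🤗 Diffusers in a [virtual environment](https://docs.python.org/3/library/venv.html).
+If you're not familiar with Python virtual environments, take a look at this [guide](https://packaging.python.org/guides/installing-using-pip-and-virtual-environments/).
+A virtual environment makes it easier to manage different projects and avoids compatibility issues between dependencies.
+
+Start by creating a virtual environment in your project directory: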

+

+```bash

+python -m venv .env

+```

+

+Activate the virtual environment:

+

+```bash

+source .env/bin/activate

+```

+

+🤗 Diffusers also depends on the 🤗 Transformers library, and you can install both with the following command:

+

+

+

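+Tutorials
+ Learn the fundamental skills you need to start generating outputs, build your own diffusion system, and train a diffusion model. We recommend starting here if you're using 🤗 Diffusers for the first time!

+How-to guides
+ Practical guides to help you load pipelines, models, and schedulers. You'll also learn how to use pipelines for specific tasks, control how outputs are generated, optimize for inference speed, and use different training techniques.

+Conceptual guides
+ Understand why the library was designed the way it was, and learn more about the ethical guidelines and safety implementations for using the library.

+Reference
+ Technical descriptions of how 🤗 Diffusers classes and methods work.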

+

+

+You can save the image with the `save` method:

+

+```python

+>>> image.save("image_of_squirrel_painting.png")

+```

+

+### Local pipeline
+
+You can also use the pipeline locally. The only difference is that you need to download the weights first:

+

+```bash

+git lfs install
+git clone https://huggingface.co/runwayml/stable-diffusion-v1-5

+```

+

+Then load the saved weights into the pipeline:

+

+```python

+>>> pipeline = DiffusionPipeline.from_pretrained("./stable-diffusion-v1-5", use_safetensors=True)

+```

+

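+Now you can run the pipeline as you would in the section above.
+
+### Swapping schedulers
+
+Different schedulers come with different denoising speeds and quality trade-offs. The best way to find out which one works best for you is to try them out! One of the main features of 🧨 Diffusers is that it lets you easily switch between schedulers. For example, to replace the default [`PNDMScheduler`] with the [`EulerDiscreteScheduler`], load it with the [`~diffusers.ConfigMixin.from_config`] method: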

+

+```py

+>>> from diffusers import EulerDiscreteScheduler

+

+>>> pipeline = DiffusionPipeline.from_pretrained("runwayml/stable-diffusion-v1-5", use_safetensors=True)

+>>> pipeline.scheduler = EulerDiscreteScheduler.from_config(pipeline.scheduler.config)

+```

+

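+Try generating an image with the new scheduler and see if you notice a difference!
+
+In the next section, you'll take a closer look at the components (the model and scheduler) that make up the [`DiffusionPipeline`] and learn how to use these components to generate an image of a cat.
+
+## Models
+
+Most models take a noisy sample, and at each timestep they predict the *noise residual* (other models learn to predict the previous sample directly, or the velocity or [`v-prediction`](https://github.com/huggingface/diffusers/blob/5e5ce13e2f89ac45a0066cb3f369462a3cf1d9ef/src/diffusers/schedulers/scheduling_ddim.py#L110)). You can mix and match models to create other diffusion systems.
+
+Models are initialized with the [`~ModelMixin.from_pretrained`] method, which also caches the model weights locally so loading is faster the next time. For this quicktour, you'll load the [`UNet2DModel`], a basic image generation model with a checkpoint trained on cat images: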

+

+```py

+>>> from diffusers import UNet2DModel

+

+>>> repo_id = "google/ddpm-cat-256"

+>>> model = UNet2DModel.from_pretrained(repo_id, use_safetensors=True)

+```

+

+To access the model parameters, call `model.config`:

+

+```py

+>>> model.config

+```

+

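+The model configuration is a 🧊 frozen 🧊 dictionary, which means those parameters can't be changed after the model is created. This is intentional and ensures that the parameters used to define the model architecture at the start remain the same. Other parameters can still be adjusted during generation.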
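The frozen behavior can be illustrated with the standard library's read-only `types.MappingProxyType`. This is only an analogy: 🤗 Diffusers uses its own frozen dictionary class for configs, and the keys and values below are illustrative rather than read from a real checkpoint.

```python
from types import MappingProxyType

# Illustrative subset of a UNet config; the values are examples only.
_config = {"sample_size": 256, "in_channels": 3, "layers_per_block": 2}
config = MappingProxyType(_config)  # read-only view of the dict

print(config["sample_size"])  # 256

try:
    config["sample_size"] = 512  # assignment through the read-only view is rejected
except TypeError as err:
    print("cannot modify config:", err)

# To describe a differently sized model, copy the values into a new dict instead.
new_config = {**config, "sample_size": 512}
print(new_config["sample_size"])  # 512
```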

+

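+Some of the most important parameters are:
+
+* `sample_size`: the height and width of the input sample.
+* `in_channels`: the number of input channels of the input sample.
+* `down_block_types` and `up_block_types`: the types of downsampling and upsampling blocks used to create the UNet architecture.
+* `block_out_channels`: the number of output channels of the downsampling blocks; also used, in reverse order, for the number of input channels of the upsampling blocks.
+* `layers_per_block`: the number of ResNet blocks present in each UNet block.
+
+To use the model for generation, create a tensor in the shape of an image filled with random values from a standard normal distribution. It needs a `batch` axis because the model can receive multiple random noise samples, a `channel` axis corresponding to the number of input channels, and two axes of size `sample_size` for the height and width of the image: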

+

+```py

+>>> import torch

+

+>>> torch.manual_seed(0)

+

+>>> noisy_sample = torch.randn(1, model.config.in_channels, model.config.sample_size, model.config.sample_size)

+>>> noisy_sample.shape

+torch.Size([1, 3, 256, 256])

+```

+

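+For generation, pass the noisy image and a `timestep` to the model. The `timestep` indicates how noisy the input image is, which helps the model determine its position in the diffusion process. Use the `sample` attribute of the output to get the model's prediction: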

+

+```py

+>>> with torch.no_grad():

+...     noisy_residual = model(sample=noisy_sample, timestep=2).sample

+```

+

+To generate actual examples, though, you'll need a scheduler to guide the denoising process. In the next section, you'll learn how to couple the model with a scheduler.
+
+## Schedulers

+

+Schedulers manage going from a noisy sample to a less noisy sample given the model output (in this case, `noisy_residual`).
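As a sketch of what a single scheduler step does, the function below implements the deterministic DDIM update (η = 0) for scalar values: it first estimates the clean sample from the noisy sample and the predicted noise, then re-noises that estimate to the previous, less noisy level. The `alpha_bar` values are cumulative products of the noise schedule and are chosen by hand here; real schedulers such as [`DDIMScheduler`] compute them from their config and operate on tensors.

```python
import math


def ddim_step(sample, noise_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM step (eta = 0) on scalar values."""
    # Estimate x_0 from x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * eps
    pred_x0 = (sample - math.sqrt(1 - alpha_bar_t) * noise_pred) / math.sqrt(alpha_bar_t)
    # Move the estimate to the less noisy level a_prev, reusing the predicted noise direction
    return math.sqrt(alpha_bar_prev) * pred_x0 + math.sqrt(1 - alpha_bar_prev) * noise_pred


# Build a noisy sample from a known clean value and noise, then step back.
x0, eps = 0.5, 0.2
alpha_bar_t = 0.64
x_t = math.sqrt(alpha_bar_t) * x0 + math.sqrt(1 - alpha_bar_t) * eps
x_prev = ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev=1.0)
print(round(x_prev, 6))  # 0.5
```

Because the "model" here predicts the exact noise, stepping to `alpha_bar_prev=1.0` recovers the clean value; a real model's prediction is only approximate, which is why schedulers take many small steps.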

+

+


+

+

+## Next steps
+
+Hopefully, you generated some cool images with 🧨 Diffusers in this quicktour! For your next steps, you can:
+
+* Train or finetune a model to generate your own images in the [training](./tutorials/basic_training) tutorial.
+* See the official and community [training or finetuning scripts](https://github.com/huggingface/diffusers/tree/main/examples#-diffusers-examples) for a variety of use cases.
+* Learn more about loading, accessing, changing, and comparing schedulers in the [Using different Schedulers](./using-diffusers/schedulers) guide.
+* Explore prompt engineering, speed and memory optimizations, and tips and tricks for generating higher-quality images with the [Stable Diffusion](./stable_diffusion) guide.
+* Dive deeper into speeding up 🧨 Diffusers with the guides on [optimized PyTorch on a GPU](./optimization/fp16), [Stable Diffusion on Apple Silicon (M1/M2)](./optimization/mps), and [ONNX Runtime](./optimization/onnx).

diff --git a/docs/source/ja/stable_diffusion.md b/docs/source/ja/stable_diffusion.md

new file mode 100644

index 000000000000..fb5afc49435b

--- /dev/null

+++ b/docs/source/ja/stable_diffusion.md

@@ -0,0 +1,260 @@

+

+

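+# Effective and efficient diffusion
+
+[[open-in-colab]]
+
+Getting the [`DiffusionPipeline`] to generate images in a certain style or include what you want can be tricky. Often, you have to run the [`DiffusionPipeline`] several times before you end up with an image you're happy with. But generating something out of nothing is a computationally intensive process, especially if you're running inference over and over again.
+
+This is why it's important to get the most *computational* (speed) and *memory* (GPU RAM) efficiency from the pipeline to reduce the time between inference cycles so you can iterate faster.
+
+This tutorial walks you through how to generate faster and better with the [`DiffusionPipeline`].
+
+Begin by loading the [`runwayml/stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) model: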

+

+```python

+from diffusers import DiffusionPipeline

+

+model_id = "runwayml/stable-diffusion-v1-5"

+pipeline = DiffusionPipeline.from_pretrained(model_id, use_safetensors=True)

+```

+

+The example prompt you'll use is a portrait of an old warrior chief, but feel free to use your own prompt:

+

+```python

+prompt = "portrait photo of a old warrior chief"

+```

+

+## Speed

+


+

+

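+That process took ~30 seconds on a T4 GPU (it might be faster if your allocated GPU is better than a T4). By default, the [`DiffusionPipeline`] runs inference with full `float32` precision for 50 inference steps. You can speed this up by switching to a lower precision like `float16` or by running fewer inference steps.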
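Part of the benefit of `float16` comes from halving the bytes per weight. The arithmetic below is a rough sketch for the weights only (activations, the text encoder, and the VAE are ignored), and it assumes the roughly 860M-parameter UNet reported for Stable Diffusion v1.

```python
BYTES_PER_ELEMENT = {"float32": 4, "float16": 2}


def weight_memory_gb(num_params, dtype):
    """Approximate memory needed to hold the weights, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_ELEMENT[dtype] / 1e9


unet_params = 860_000_000  # approximate UNet size for Stable Diffusion v1 (assumption)
for dtype in ("float32", "float16"):
    print(dtype, round(weight_memory_gb(unet_params, dtype), 2), "GB")
# float32 3.44 GB
# float16 1.72 GB
```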

+

+Let's start by loading the model in `float16` and generating an image:

+

+```python

+import torch

+

+pipeline = DiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16, use_safetensors=True)

+pipeline = pipeline.to("cuda")

+generator = torch.Generator("cuda").manual_seed(0)

+image = pipeline(prompt, generator=generator).images[0]

+image

+```

+


+

+

+This time, it only took ~11 seconds to generate the image, which is almost 3x faster than before!

+


+

+

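+We managed to cut the inference time to just 4 seconds! ⚡️
+
+## Memory
+
+The other key to improving pipeline performance is consuming less memory. The easiest way to find out how many images you can generate at once is to try out different batch sizes until you get an `OutOfMemoryError` (OOM).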
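Trying batch sizes by hand works, but the search can also be automated. The sketch below doubles the batch size until a run fails with an out-of-memory error and returns the last size that succeeded. `run_batch` is a hypothetical callable you would supply (for example, a wrapper around `pipeline(**get_inputs(batch_size=n))`), and the exception types to catch are passed in; with PyTorch on CUDA that would typically be `torch.cuda.OutOfMemoryError`.

```python
def find_max_batch_size(run_batch, start=1, limit=64, oom_errors=(MemoryError,)):
    """Double the batch size until `run_batch` raises an OOM error; return the last success."""
    best = 0
    batch_size = start
    while batch_size <= limit:
        try:
            run_batch(batch_size)
        except oom_errors:
            break  # this size does not fit; keep the previous one
        best = batch_size
        batch_size *= 2
    return best


# Stand-in for a real pipeline call: pretend anything above 6 images runs out of memory.
def fake_run(batch_size):
    if batch_size > 6:
        raise MemoryError(f"batch of {batch_size} does not fit")


print(find_max_batch_size(fake_run))  # 4
```

Because the batch size only doubles, the result is `start` times a power of two; a finer search between the last success and the first failure would narrow it down further.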

+

+Create a function that generates a batch of images from a list of prompts and `Generators`. Make sure to assign each `Generator` a seed so you can reuse it if it produces a good result.

+

+```python

+def get_inputs(batch_size=1):

+ generator = [torch.Generator("cuda").manual_seed(i) for i in range(batch_size)]

+ prompts = batch_size * [prompt]

+ num_inference_steps = 20

+

+ return {"prompt": prompts, "generator": generator, "num_inference_steps": num_inference_steps}

+```

+

+Start with `batch_size=4` and see how much memory you've consumed:

+

+```python

+from diffusers.utils import make_image_grid

+

+images = pipeline(**get_inputs(batch_size=4)).images

+make_image_grid(images, 2, 2)

+```

+

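+Unless you have a GPU with more RAM, the code above probably returned an `OOM` error! Most of the memory is taken up by the cross-attention layers. Instead of running this operation in a batch, you can run it sequentially to save a significant amount of memory. All you have to do is configure the pipeline to use the [`~DiffusionPipeline.enable_attention_slicing`] function: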

+

+```python

+pipeline.enable_attention_slicing()

+```
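To get a feel for why slicing helps, the arithmetic below estimates the size of the attention-score matrices. It assumes a 512 × 512 image (a 64 × 64 latent, so 4,096 tokens in the highest-resolution attention layers), 8 attention heads, `float16` scores, and a batch of 8 prompts doubled to 16 by classifier-free guidance; these are illustrative numbers, not measurements. Computing every head and batch item at once materializes all the matrices simultaneously, while processing one slice at a time only needs one matrix in memory.

```python
def attention_scores_bytes(num_tokens, num_heads, batch, bytes_per_element=2):
    """Memory for the (tokens x tokens) score matrices of every head and batch item."""
    return num_tokens * num_tokens * num_heads * batch * bytes_per_element


MIB = 1024 ** 2
tokens = 64 * 64          # 64x64 latent for a 512x512 image (assumption)
heads, batch = 8, 16      # 8 heads; 8 prompts x 2 for classifier-free guidance (assumptions)

all_at_once = attention_scores_bytes(tokens, heads, batch)
one_slice = attention_scores_bytes(tokens, num_heads=1, batch=1)
print(all_at_once // MIB, "MiB without slicing")  # 4096 MiB without slicing
print(one_slice // MIB, "MiB per slice")          # 32 MiB per slice
```

The trade-off is speed: running the slices sequentially does the same amount of work with less parallelism, so it is mainly worth enabling when memory is the bottleneck.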

+

+Now try increasing the `batch_size` to 8!

+

+```python

+images = pipeline(**get_inputs(batch_size=8)).images

+make_image_grid(images, rows=2, cols=4)

+```

+


+

+

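+Whereas before you couldn't even generate a batch of 4 images, now you can generate a batch of 8 images at ~3.5 seconds per image! This is probably the fastest you can go on a T4 GPU without sacrificing quality.
+
+## Quality
+
+In the last two sections, you learned how to optimize the speed of your pipeline by using `fp16`, reducing the number of inference steps by using a more performant scheduler, and enabling attention slicing to reduce memory consumption. Now you're going to focus on how to improve the quality of generated images.
+
+### Better checkpoints
+
+The most obvious step is to use better checkpoints. The Stable Diffusion model is a good starting point, and since its official launch, several improved versions have also been released. However, using a newer version doesn't automatically mean you'll get better results. You'll still have to experiment with different checkpoints yourself, and do a little research (such as using [negative prompts](https://minimaxir.com/2022/11/stable-diffusion-negative-prompt/)) to get the best results.
+
+As the field grows, there are more and more high-quality checkpoints finetuned to produce certain styles. Try exploring the [Hub](https://huggingface.co/models?library=diffusers&sort=downloads) and [Diffusers Gallery](https://huggingface.co/spaces/huggingface-projects/diffusers-gallery) to find one you're interested in!
+
+### Better pipeline components
+
+You can also try replacing the current pipeline components with a newer version. Let's try loading the latest [autoencoder](https://huggingface.co/stabilityai/stable-diffusion-2-1/tree/main/vae) from Stability AI into the pipeline and generate some images: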

+

+```python

+from diffusers import AutoencoderKL

+

+vae = AutoencoderKL.from_pretrained("stabilityai/sd-vae-ft-mse", torch_dtype=torch.float16).to("cuda")

+pipeline.vae = vae

+images = pipeline(**get_inputs(batch_size=8)).images

+make_image_grid(images, rows=2, cols=4)

+```

+


+

+

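+### Better prompt engineering
+
+The text prompt you use to generate an image is so important that it has its own field of study, called *prompt engineering*. Some considerations to keep in mind during prompt engineering are:
+
+- How is the image, or images similar to the one I want to generate, stored on the internet?
+- What additional details can I give that steer the model toward the style I want?
+
+With this in mind, let's improve the prompt to include color and higher-quality details: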

+

+```python

+prompt += ", tribal panther make up, blue on red, side profile, looking away, serious eyes"

+prompt += " 50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta"

+```

+

+Let's generate a batch of images with the new prompt:

+

+```python

+images = pipeline(**get_inputs(batch_size=8)).images

+make_image_grid(images, rows=2, cols=4)

+```

+


+

+

+Pretty impressive! Let's tweak the second image, which corresponds to the `Generator` with a seed of `1`, a bit more by adding some text about the age of the subject:

+

+```python

+prompts = [

+ "portrait photo of the oldest warrior chief, tribal panther make up, blue on red, side profile, looking away, serious eyes 50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta",

+ "portrait photo of a old warrior chief, tribal panther make up, blue on red, side profile, looking away, serious eyes 50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta",

+ "portrait photo of a warrior chief, tribal panther make up, blue on red, side profile, looking away, serious eyes 50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta",

+ "portrait photo of a young warrior chief, tribal panther make up, blue on red, side profile, looking away, serious eyes 50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta",

+]

+

+generator = [torch.Generator("cuda").manual_seed(1) for _ in range(len(prompts))]

+images = pipeline(prompt=prompts, generator=generator, num_inference_steps=25).images

+make_image_grid(images, 2, 2)

+```
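When you only want to vary one attribute, such as the subject's age, building the prompts from a shared style suffix can be less error-prone than copying the full strings. The sketch below reproduces the four prompts used in the previous example; `build_prompts` and `STYLE` are hypothetical names, and the resulting list can be passed to the pipeline together with one `Generator` per prompt as before.

```python
STYLE = (
    "tribal panther make up, blue on red, side profile, looking away, serious eyes "
    "50mm portrait photography, hard rim lighting photography--beta --ar 2:3 --beta --upbeta"
)


def build_prompts(subjects, style=STYLE):
    """Combine each subject description with the same style suffix."""
    return [f"portrait photo of {subject}, {style}" for subject in subjects]


prompts = build_prompts([
    "the oldest warrior chief",
    "a old warrior chief",
    "a warrior chief",
    "a young warrior chief",
])
print(len(prompts))  # 4
```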

+


+

+

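+## Next steps
+
+In this tutorial, you learned how to optimize a [`DiffusionPipeline`] for computational and memory efficiency, as well as how to improve the quality of generated outputs. If you're interested in making your pipeline even faster, take a look at the following resources:
+
+- Learn how [PyTorch 2.0](./optimization/torch2.0) and [`torch.compile`](https://pytorch.org/docs/stable/generated/torch.compile.html) can speed up inference by 5-300%. On an A100 GPU, inference can be up to 50% faster!
+- If you can't use PyTorch 2, we recommend installing [xFormers](./optimization/xformers). Its memory-efficient attention mechanism works well with PyTorch 1.13.1 for faster speed and reduced memory consumption.
+- Other optimization techniques, such as model offloading, are covered in [this guide](./optimization/fp16).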

diff --git a/examples/community/README.md b/examples/community/README.md

index 1073240d8b94..b4fb44c25385 100755

--- a/examples/community/README.md

+++ b/examples/community/README.md

@@ -45,6 +45,7 @@ FABRIC - Stable Diffusion with feedback Pipeline | pipeline supports feedback fr

sketch inpaint - Inpainting with non-inpaint Stable Diffusion | sketch inpaint much like in automatic1111 | [Masked Im2Im Stable Diffusion Pipeline](#stable-diffusion-masked-im2im) | - | [Anatoly Belikov](https://github.com/noskill) |

prompt-to-prompt | change parts of a prompt and retain image structure (see [paper page](https://prompt-to-prompt.github.io/)) | [Prompt2Prompt Pipeline](#prompt2prompt-pipeline) | - | [Umer H. Adil](https://twitter.com/UmerHAdil) |

| Latent Consistency Pipeline | Implementation of [Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference](https://arxiv.org/abs/2310.04378) | [Latent Consistency Pipeline](#latent-consistency-pipeline) | - | [Simian Luo](https://github.com/luosiallen) |

+| Latent Consistency Img2img Pipeline | Img2img pipeline for Latent Consistency Models | [Latent Consistency Img2Img Pipeline](#latent-consistency-img2img-pipeline) | - | [Logan Zoellner](https://github.com/nagolinc) |

To load a custom pipeline you just need to pass the `custom_pipeline` argument to `DiffusionPipeline`, as one of the files in `diffusers/examples/community`. Feel free to send a PR with your own pipelines, we will merge them quickly.

@@ -2185,3 +2186,35 @@ images = pipe(prompt=prompt, num_inference_steps=num_inference_steps, guidance_s

For any questions or feedback, feel free to reach out to [Simian Luo](https://github.com/luosiallen).

You can also try this pipeline directly in the [🚀 official spaces](https://huggingface.co/spaces/SimianLuo/Latent_Consistency_Model).

+

+

+

+### Latent Consistency Img2img Pipeline

+

+This pipeline extends the Latent Consistency Pipeline to allow it to take an input image.

+

+```py

+from diffusers import DiffusionPipeline

+import torch

+

+pipe = DiffusionPipeline.from_pretrained("SimianLuo/LCM_Dreamshaper_v7", custom_pipeline="latent_consistency_img2img")

+

+# To save GPU memory, torch.float16 can be used, but it may compromise image quality.

+pipe.to(torch_device="cuda", torch_dtype=torch.float32)

+```

+

+Then run inference with as few as 4 steps:

+

+```py

+from PIL import Image
+
+prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"
+
+input_image = Image.open("myimg.png")
+
+# strength=0 leaves the image unchanged; strength=1 completely overwrites it
+strength = 0.5
+
+# Can be set to 1~50 steps. LCM supports fast inference even with <= 4 steps. Recommended: 1~8 steps.
+num_inference_steps = 4

+

+images = pipe(prompt=prompt, image=input_image, strength=strength, num_inference_steps=num_inference_steps, guidance_scale=8.0, lcm_origin_steps=50, output_type="pil").images

+```
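In the standard 🤗 Diffusers img2img pipelines, `strength` also decides how many of the requested steps actually run: denoising starts part-way through the schedule, so about `int(num_inference_steps * strength)` steps are executed. The sketch below reproduces that convention from `StableDiffusionImg2ImgPipeline`; whether `latent_consistency_img2img` computes its timesteps exactly this way is an assumption, so check the pipeline source if the exact count matters. `img2img_steps` is a hypothetical helper.

```python
def img2img_steps(num_inference_steps, strength):
    """Denoising steps run for a given strength (StableDiffusionImg2ImgPipeline convention)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)  # steps skipped at the noisy end
    return num_inference_steps - t_start


for s in (0.0, 0.5, 1.0):
    print(s, img2img_steps(4, s))
# 0.0 0
# 0.5 2
# 1.0 4
```

Under this convention, `num_inference_steps=4` with `strength=0.5` would run only two denoising steps, so a very low step count combined with a low strength leaves little room for the prompt to change the image.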

diff --git a/examples/community/latent_consistency_img2img.py b/examples/community/latent_consistency_img2img.py

new file mode 100644

index 000000000000..cc40d41eab6e

--- /dev/null

+++ b/examples/community/latent_consistency_img2img.py

@@ -0,0 +1,829 @@

+# Copyright 2023 Stanford University Team and The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+# DISCLAIMER: This code is strongly influenced by https://github.com/pesser/pytorch_diffusion

+# and https://github.com/hojonathanho/diffusion

+

+import math

+from dataclasses import dataclass

+from typing import Any, Dict, List, Optional, Tuple, Union

+

+import numpy as np

+import PIL.Image

+import torch

+from transformers import CLIPImageProcessor, CLIPTextModel, CLIPTokenizer

+

+from diffusers import AutoencoderKL, ConfigMixin, DiffusionPipeline, SchedulerMixin, UNet2DConditionModel, logging

+from diffusers.configuration_utils import register_to_config

+from diffusers.image_processor import PipelineImageInput, VaeImageProcessor

+from diffusers.pipelines.stable_diffusion import StableDiffusionPipelineOutput

+from diffusers.pipelines.stable_diffusion.safety_checker import StableDiffusionSafetyChecker

+from diffusers.utils import BaseOutput

+from diffusers.utils.torch_utils import randn_tensor

+

+

+logger = logging.get_logger(__name__) # pylint: disable=invalid-name

+

+

+class LatentConsistencyModelImg2ImgPipeline(DiffusionPipeline):

+ _optional_components = ["scheduler"]

+

+ def __init__(

+ self,

+ vae: AutoencoderKL,

+ text_encoder: CLIPTextModel,

+ tokenizer: CLIPTokenizer,

+ unet: UNet2DConditionModel,

+ scheduler: "LCMSchedulerWithTimestamp",

+ safety_checker: StableDiffusionSafetyChecker,

+ feature_extractor: CLIPImageProcessor,

+ requires_safety_checker: bool = True,

+ ):

+ super().__init__()

+

+ scheduler = (

+ scheduler

+ if scheduler is not None

+ else LCMSchedulerWithTimestamp(

+ beta_start=0.00085, beta_end=0.0120, beta_schedule="scaled_linear", prediction_type="epsilon"

+ )

+ )

+

+ self.register_modules(

+ vae=vae,

+ text_encoder=text_encoder,

+ tokenizer=tokenizer,

+ unet=unet,

+ scheduler=scheduler,

+ safety_checker=safety_checker,

+ feature_extractor=feature_extractor,

+ )

+ self.vae_scale_factor = 2 ** (len(self.vae.config.block_out_channels) - 1)

+ self.image_processor = VaeImageProcessor(vae_scale_factor=self.vae_scale_factor)

+

+ def _encode_prompt(

+ self,

+ prompt,

+ device,

+ num_images_per_prompt,

+ prompt_embeds: Optional[torch.FloatTensor] = None,

+ ):

+ r"""

+ Encodes the prompt into text encoder hidden states.

+ Args:

+ prompt (`str` or `List[str]`, *optional*):

+ prompt to be encoded

+ device (`torch.device`):

+ torch device

+ num_images_per_prompt (`int`):

+ number of images that should be generated per prompt

+ prompt_embeds (`torch.FloatTensor`, *optional*):

+ Pre-generated text embeddings. Can be used to easily tweak text inputs, *e.g.* prompt weighting. If not

+ provided, text embeddings will be generated from `prompt` input argument.

+ """

+

+ if prompt is not None and isinstance(prompt, str):

+ pass

+ elif prompt is not None and isinstance(prompt, list):

+ len(prompt)

+ else:

+ prompt_embeds.shape[0]

+

+ if prompt_embeds is None:

+ text_inputs = self.tokenizer(

+ prompt,

+ padding="max_length",

+ max_length=self.tokenizer.model_max_length,

+ truncation=True,

+ return_tensors="pt",

+ )

+ text_input_ids = text_inputs.input_ids

+ untruncated_ids = self.tokenizer(prompt, padding="longest", return_tensors="pt").input_ids

+

+ if untruncated_ids.shape[-1] >= text_input_ids.shape[-1] and not torch.equal(

+ text_input_ids, untruncated_ids

+ ):

+ removed_text = self.tokenizer.batch_decode(

+ untruncated_ids[:, self.tokenizer.model_max_length - 1 : -1]

+ )

+ logger.warning(

+ "The following part of your input was truncated because CLIP can only handle sequences up to"

+ f" {self.tokenizer.model_max_length} tokens: {removed_text}"

+ )

+

+ if hasattr(self.text_encoder.config, "use_attention_mask") and self.text_encoder.config.use_attention_mask:

+ attention_mask = text_inputs.attention_mask.to(device)

+ else:

+ attention_mask = None

+

+ prompt_embeds = self.text_encoder(

+ text_input_ids.to(device),

+ attention_mask=attention_mask,

+ )

+ prompt_embeds = prompt_embeds[0]

+

+ if self.text_encoder is not None:

+ prompt_embeds_dtype = self.text_encoder.dtype

+ elif self.unet is not None:

+ prompt_embeds_dtype = self.unet.dtype

+ else:

+ prompt_embeds_dtype = prompt_embeds.dtype

+

+ prompt_embeds = prompt_embeds.to(dtype=prompt_embeds_dtype, device=device)

+

+ bs_embed, seq_len, _ = prompt_embeds.shape

+ # duplicate text embeddings for each generation per prompt, using mps friendly method

+ prompt_embeds = prompt_embeds.repeat(1, num_images_per_prompt, 1)

+ prompt_embeds = prompt_embeds.view(bs_embed * num_images_per_prompt, seq_len, -1)

+

+ # Don't need to get uncond prompt embedding because of LCM Guided Distillation

+ return prompt_embeds

+

+ def run_safety_checker(self, image, device, dtype):

+ if self.safety_checker is None:

+ has_nsfw_concept = None

+ else:

+ if torch.is_tensor(image):

+ feature_extractor_input = self.image_processor.postprocess(image, output_type="pil")

+ else:

+ feature_extractor_input = self.image_processor.numpy_to_pil(image)

+ safety_checker_input = self.feature_extractor(feature_extractor_input, return_tensors="pt").to(device)

+ image, has_nsfw_concept = self.safety_checker(

+ images=image, clip_input=safety_checker_input.pixel_values.to(dtype)

+ )

+ return image, has_nsfw_concept

+

+ def prepare_latents(

+ self,

+ image,

+ timestep,

+ batch_size,

+ num_channels_latents,

+ height,

+ width,

+ dtype,

+ device,

+ latents=None,

+ generator=None,

+ ):

+ shape = (batch_size, num_channels_latents, height // self.vae_scale_factor, width // self.vae_scale_factor)

+

+ if not isinstance(image, (torch.Tensor, PIL.Image.Image, list)):

+ raise ValueError(

+ f"`image` has to be of type `torch.Tensor`, `PIL.Image.Image` or list but is {type(image)}"

+ )

+

+ image = image.to(device=device, dtype=dtype)

+

+ # batch_size = batch_size * num_images_per_prompt

+

+ if image.shape[1] == 4:

+ init_latents = image

+

+ else:

+ if isinstance(generator, list) and len(generator) != batch_size:

+ raise ValueError(

+ f"You have passed a list of generators of length {len(generator)}, but requested an effective batch"

+ f" size of {batch_size}. Make sure the batch size matches the length of the generators."

+ )

+

+ elif isinstance(generator, list):

+ init_latents = [

+ self.vae.encode(image[i : i + 1]).latent_dist.sample(generator[i]) for i in range(batch_size)

+ ]

+ init_latents = torch.cat(init_latents, dim=0)

+ else:

+ init_latents = self.vae.encode(image).latent_dist.sample(generator)

+

+ init_latents = self.vae.config.scaling_factor * init_latents

+

+ if batch_size > init_latents.shape[0] and batch_size % init_latents.shape[0] == 0:

+ # expand init_latents for batch_size

+ deprecation_message = (

+ f"You have passed {batch_size} text prompts (`prompt`), but only {init_latents.shape[0]} initial"

+ " images (`image`). Initial images are now being duplicated to match the number of text prompts. Note"

+ " that this behavior is deprecated and will be removed in version 1.0.0. Please make sure to update"

+ " your script to pass as many initial images as text prompts to suppress this warning."

+ )

+ # deprecate("len(prompt) != len(image)", "1.0.0", deprecation_message, standard_warn=False)

+ additional_image_per_prompt = batch_size // init_latents.shape[0]

+ init_latents = torch.cat([init_latents] * additional_image_per_prompt, dim=0)

+ elif batch_size > init_latents.shape[0] and batch_size % init_latents.shape[0] != 0:

+ raise ValueError(

+ f"Cannot duplicate `image` of batch size {init_latents.shape[0]} to {batch_size} text prompts."

+ )

+ else:

+ init_latents = torch.cat([init_latents], dim=0)

+

+ shape = init_latents.shape

+ noise = randn_tensor(shape, generator=generator, device=device, dtype=dtype)

+

+ # get latents

+ init_latents = self.scheduler.add_noise(init_latents, noise, timestep)

+ latents = init_latents

+

+ return latents

+

+ if latents is None:

+ latents = torch.randn(shape, dtype=dtype).to(device)

+ else:

+ latents = latents.to(device)

+ # scale the initial noise by the standard deviation required by the scheduler

+ latents = latents * self.scheduler.init_noise_sigma

+ return latents

+

+ def get_w_embedding(self, w, embedding_dim=512, dtype=torch.float32):

+ """

+ see https://github.com/google-research/vdm/blob/dc27b98a554f65cdc654b800da5aa1846545d41b/model_vdm.py#L298

+ Args:

+ w: torch.Tensor: 1-D tensor of guidance scales to embed (scaled by 1000 before embedding)

+ embedding_dim: int: dimension of the embeddings to generate

+ dtype: data type of the generated embeddings

+ Returns:

+ embedding vectors with shape `(len(w), embedding_dim)`

+ """

+ assert len(w.shape) == 1

+ w = w * 1000.0

+

+ half_dim = embedding_dim // 2

+ emb = torch.log(torch.tensor(10000.0)) / (half_dim - 1)

+ emb = torch.exp(torch.arange(half_dim, dtype=dtype) * -emb)

+ emb = w.to(dtype)[:, None] * emb[None, :]

+ emb = torch.cat([torch.sin(emb), torch.cos(emb)], dim=1)

+ if embedding_dim % 2 == 1: # zero pad

+ emb = torch.nn.functional.pad(emb, (0, 1))

+ assert emb.shape == (w.shape[0], embedding_dim)

+ return emb

+

+ def get_timesteps(self, num_inference_steps, strength, device):

+ # get the original timestep using init_timestep

+ init_timestep = min(int(num_inference_steps * strength), num_inference_steps)

+

+ t_start = max(num_inference_steps - init_timestep, 0)

+ timesteps = self.scheduler.timesteps[t_start * self.scheduler.order :]

+

+ return timesteps, num_inference_steps - t_start

+

+ @torch.no_grad()

+ def __call__(

+ self,

+ prompt: Union[str, List[str]] = None,

+ image: PipelineImageInput = None,

+ strength: float = 0.8,

+ height: Optional[int] = 768,

+ width: Optional[int] = 768,

+ guidance_scale: float = 7.5,

+ num_images_per_prompt: Optional[int] = 1,

+ latents: Optional[torch.FloatTensor] = None,

+ num_inference_steps: int = 4,

+ lcm_origin_steps: int = 50,

+ prompt_embeds: Optional[torch.FloatTensor] = None,

+ output_type: Optional[str] = "pil",

+ return_dict: bool = True,

+ cross_attention_kwargs: Optional[Dict[str, Any]] = None,

+ ):

+ # 0. Default height and width to unet

+ height = height or self.unet.config.sample_size * self.vae_scale_factor

+ width = width or self.unet.config.sample_size * self.vae_scale_factor

+

+ # 2. Define call parameters

+ if prompt is not None and isinstance(prompt, str):

+ batch_size = 1

+ elif prompt is not None and isinstance(prompt, list):

+ batch_size = len(prompt)

+ else:

+ batch_size = prompt_embeds.shape[0]

+

+ device = self._execution_device

+ # do_classifier_free_guidance = guidance_scale > 0.0 # In LCM Implementation: cfg_noise = noise_cond + cfg_scale * (noise_cond - noise_uncond) , (cfg_scale > 0.0 using CFG)

+

+ # 3. Encode input prompt

+ prompt_embeds = self._encode_prompt(

+ prompt,

+ device,

+ num_images_per_prompt,

+ prompt_embeds=prompt_embeds,

+ )

+

+ # 3.5 encode image

+ image = self.image_processor.preprocess(image)

+

+ # 4. Prepare timesteps

+ self.scheduler.set_timesteps(strength, num_inference_steps, lcm_origin_steps)

+ # timesteps = self.scheduler.timesteps

+ # timesteps, num_inference_steps = self.get_timesteps(num_inference_steps, 1.0, device)

+ timesteps = self.scheduler.timesteps

+ latent_timestep = timesteps[:1].repeat(batch_size * num_images_per_prompt)

+

+ logger.debug(f"timesteps: {timesteps}")

+

+ # 5. Prepare latent variable

+ num_channels_latents = self.unet.config.in_channels

+ latents = self.prepare_latents(

+ image,

+ latent_timestep,

+ batch_size * num_images_per_prompt,

+ num_channels_latents,

+ height,

+ width,

+ prompt_embeds.dtype,

+ device,

+ latents,

+ )

+ bs = batch_size * num_images_per_prompt

+

+ # 6. Get Guidance Scale Embedding

+ w = torch.tensor(guidance_scale).repeat(bs)

+ w_embedding = self.get_w_embedding(w, embedding_dim=256).to(device=device, dtype=latents.dtype)

+

+ # 7. LCM MultiStep Sampling Loop:

+ with self.progress_bar(total=num_inference_steps) as progress_bar:

+ for i, t in enumerate(timesteps):

+ ts = torch.full((bs,), t, device=device, dtype=torch.long)

+ latents = latents.to(prompt_embeds.dtype)

+

+ # model prediction (v-prediction, eps, x)

+ model_pred = self.unet(

+ latents,

+ ts,

+ timestep_cond=w_embedding,

+ encoder_hidden_states=prompt_embeds,

+ cross_attention_kwargs=cross_attention_kwargs,

+ return_dict=False,

+ )[0]

+

+ # compute the previous noisy sample x_t -> x_t-1

+ latents, denoised = self.scheduler.step(model_pred, i, t, latents, return_dict=False)

+

+ # # call the callback, if provided

+ # if i == len(timesteps) - 1:

+ progress_bar.update()

+

+ denoised = denoised.to(prompt_embeds.dtype)

+ if not output_type == "latent":

+ image = self.vae.decode(denoised / self.vae.config.scaling_factor, return_dict=False)[0]

+ image, has_nsfw_concept = self.run_safety_checker(image, device, prompt_embeds.dtype)

+ else:

+ image = denoised

+ has_nsfw_concept = None

+

+ if has_nsfw_concept is None:

+ do_denormalize = [True] * image.shape[0]

+ else:

+ do_denormalize = [not has_nsfw for has_nsfw in has_nsfw_concept]

+

+ image = self.image_processor.postprocess(image, output_type=output_type, do_denormalize=do_denormalize)

+

+ if not return_dict:

+ return (image, has_nsfw_concept)

+

+ return StableDiffusionPipelineOutput(images=image, nsfw_content_detected=has_nsfw_concept)

+

+

+@dataclass

+# Copied from diffusers.schedulers.scheduling_ddpm.DDPMSchedulerOutput with DDPM->DDIM

+class LCMSchedulerOutput(BaseOutput):

+ """

+ Output class for the scheduler's `step` function output.

+ Args:

+ prev_sample (`torch.FloatTensor` of shape `(batch_size, num_channels, height, width)` for images):

+ Computed sample `(x_{t-1})` of previous timestep. `prev_sample` should be used as next model input in the

+ denoising loop.

+ denoised (`torch.FloatTensor` of shape `(batch_size, num_channels, height, width)` for images):

+ The predicted denoised sample `(x_{0})` based on the model output from the current timestep.

+ `denoised` can be used to preview progress or for guidance.

+ """

+

+ prev_sample: torch.FloatTensor

+ denoised: Optional[torch.FloatTensor] = None

+

+

+# Copied from diffusers.schedulers.scheduling_ddpm.betas_for_alpha_bar

+def betas_for_alpha_bar(

+ num_diffusion_timesteps,

+ max_beta=0.999,

+ alpha_transform_type="cosine",

+):

+ """

+ Create a beta schedule that discretizes the given alpha_t_bar function, which defines the cumulative product of

+ (1-beta) over time from t = [0,1].

+ Contains a function alpha_bar that takes an argument t and transforms it to the cumulative product of (1-beta) up

+ to that part of the diffusion process.

+ Args:

+ num_diffusion_timesteps (`int`): the number of betas to produce.

+ max_beta (`float`): the maximum beta to use; use values lower than 1 to

+ prevent singularities.

+ alpha_transform_type (`str`, *optional*, defaults to `cosine`): the type of noise schedule for alpha_bar.

+ Choose from `cosine` or `exp`

+ Returns:

+ betas (`np.ndarray`): the betas used by the scheduler to step the model outputs

+ """

+ if alpha_transform_type == "cosine":

+

+ def alpha_bar_fn(t):

+ return math.cos((t + 0.008) / 1.008 * math.pi / 2) ** 2

+

+ elif alpha_transform_type == "exp":

+

+ def alpha_bar_fn(t):

+ return math.exp(t * -12.0)

+

+ else:

+ raise ValueError(f"Unsupported alpha_transform_type: {alpha_transform_type}")

+

+ betas = []

+ for i in range(num_diffusion_timesteps):

+ t1 = i / num_diffusion_timesteps

+ t2 = (i + 1) / num_diffusion_timesteps

+ betas.append(min(1 - alpha_bar_fn(t2) / alpha_bar_fn(t1), max_beta))

+ return torch.tensor(betas, dtype=torch.float32)

+

+

+def rescale_zero_terminal_snr(betas):

+ """

+ Rescales betas to have zero terminal SNR, based on https://arxiv.org/pdf/2305.08891.pdf (Algorithm 1)

+ Args:

+ betas (`torch.FloatTensor`):

+ the betas that the scheduler is being initialized with.

+ Returns:

+ `torch.FloatTensor`: rescaled betas with zero terminal SNR

+ """

+ # Convert betas to alphas_bar_sqrt

+ alphas = 1.0 - betas

+ alphas_cumprod = torch.cumprod(alphas, dim=0)

+ alphas_bar_sqrt = alphas_cumprod.sqrt()

+

+ # Store old values.

+ alphas_bar_sqrt_0 = alphas_bar_sqrt[0].clone()

+ alphas_bar_sqrt_T = alphas_bar_sqrt[-1].clone()

+

+ # Shift so the last timestep is zero.

+ alphas_bar_sqrt -= alphas_bar_sqrt_T

+

+ # Scale so the first timestep is back to the old value.

+ alphas_bar_sqrt *= alphas_bar_sqrt_0 / (alphas_bar_sqrt_0 - alphas_bar_sqrt_T)

+

+ # Convert alphas_bar_sqrt to betas

+ alphas_bar = alphas_bar_sqrt**2 # Revert sqrt

+ alphas = alphas_bar[1:] / alphas_bar[:-1] # Revert cumprod

+ alphas = torch.cat([alphas_bar[0:1], alphas])

+ betas = 1 - alphas

+

+ return betas

+

+

+class LCMSchedulerWithTimestamp(SchedulerMixin, ConfigMixin):

+ """

+ This class modifies `LCMScheduler` to add a `strength` argument to `set_timesteps` for image-to-image generation.

+

+

+ `LCMScheduler` extends the denoising procedure introduced in denoising diffusion probabilistic models (DDPMs) with

+ non-Markovian guidance.

+ This model inherits from [`SchedulerMixin`] and [`ConfigMixin`]. Check the superclass documentation for the generic

+ methods the library implements for all schedulers such as loading and saving.

+ Args:

+ num_train_timesteps (`int`, defaults to 1000):

+ The number of diffusion steps to train the model.

+ beta_start (`float`, defaults to 0.0001):

+ The starting `beta` value of inference.

+ beta_end (`float`, defaults to 0.02):

+ The final `beta` value.

+ beta_schedule (`str`, defaults to `"linear"`):

+ The beta schedule, a mapping from a beta range to a sequence of betas for stepping the model. Choose from

+ `linear`, `scaled_linear`, or `squaredcos_cap_v2`.

+ trained_betas (`np.ndarray`, *optional*):

+ Pass an array of betas directly to the constructor to bypass `beta_start` and `beta_end`.

+ clip_sample (`bool`, defaults to `True`):

+ Clip the predicted sample for numerical stability.

+ clip_sample_range (`float`, defaults to 1.0):

+ The maximum magnitude for sample clipping. Valid only when `clip_sample=True`.

+ set_alpha_to_one (`bool`, defaults to `True`):

+ Each diffusion step uses the alphas product value at that step and at the previous one. For the final step

+ there is no previous alpha. When this option is `True` the previous alpha product is fixed to `1`,

+ otherwise it uses the alpha value at step 0.

+ steps_offset (`int`, defaults to 0):

+ An offset added to the inference steps. You can use a combination of `steps_offset=1` and

+ `set_alpha_to_one=False` to make the last step use step 0 for the previous alpha product like in Stable

+ Diffusion.

+ prediction_type (`str`, defaults to `epsilon`, *optional*):

+ Prediction type of the scheduler function; can be `epsilon` (predicts the noise of the diffusion process),

+ `sample` (directly predicts the denoised sample) or `v_prediction` (see section 2.4 of [Imagen

+ Video](https://imagen.research.google/video/paper.pdf) paper).

+ thresholding (`bool`, defaults to `False`):

+ Whether to use the "dynamic thresholding" method. This is unsuitable for latent-space diffusion models such

+ as Stable Diffusion.

+ dynamic_thresholding_ratio (`float`, defaults to 0.995):

+ The ratio for the dynamic thresholding method. Valid only when `thresholding=True`.

+ sample_max_value (`float`, defaults to 1.0):

+ The threshold value for dynamic thresholding. Valid only when `thresholding=True`.

+ timestep_spacing (`str`, defaults to `"leading"`):

+ The way the timesteps should be scaled. Refer to Table 2 of the [Common Diffusion Noise Schedules and

+ Sample Steps are Flawed](https://huggingface.co/papers/2305.08891) for more information.

+ rescale_betas_zero_snr (`bool`, defaults to `False`):

+ Whether to rescale the betas to have zero terminal SNR. This enables the model to generate very bright and

+ dark samples instead of limiting it to samples with medium brightness. Loosely related to

+ [`--offset_noise`](https://github.com/huggingface/diffusers/blob/74fd735eb073eb1d774b1ab4154a0876eb82f055/examples/dreambooth/train_dreambooth.py#L506).

+ """

+

+ # _compatibles = [e.name for e in KarrasDiffusionSchedulers]

+ order = 1

+

+ @register_to_config

+ def __init__(

+ self,

+ num_train_timesteps: int = 1000,

+ beta_start: float = 0.0001,

+ beta_end: float = 0.02,

+ beta_schedule: str = "linear",

+ trained_betas: Optional[Union[np.ndarray, List[float]]] = None,

+ clip_sample: bool = True,

+ set_alpha_to_one: bool = True,

+ steps_offset: int = 0,

+ prediction_type: str = "epsilon",

+ thresholding: bool = False,

+ dynamic_thresholding_ratio: float = 0.995,

+ clip_sample_range: float = 1.0,

+ sample_max_value: float = 1.0,

+ timestep_spacing: str = "leading",

+ rescale_betas_zero_snr: bool = False,

+ ):

+ if trained_betas is not None:

+ self.betas = torch.tensor(trained_betas, dtype=torch.float32)

+ elif beta_schedule == "linear":

+ self.betas = torch.linspace(beta_start, beta_end, num_train_timesteps, dtype=torch.float32)

+ elif beta_schedule == "scaled_linear":

+ # this schedule is very specific to the latent diffusion model.

+ self.betas = (

+ torch.linspace(beta_start**0.5, beta_end**0.5, num_train_timesteps, dtype=torch.float32) ** 2

+ )

+ elif beta_schedule == "squaredcos_cap_v2":

+ # Glide cosine schedule

+ self.betas = betas_for_alpha_bar(num_train_timesteps)

+ else:

+ raise NotImplementedError(f"{beta_schedule} is not implemented for {self.__class__}")

+

+ # Rescale for zero SNR

+ if rescale_betas_zero_snr:

+ self.betas = rescale_zero_terminal_snr(self.betas)

+

+ self.alphas = 1.0 - self.betas

+ self.alphas_cumprod = torch.cumprod(self.alphas, dim=0)

+

+ # At every step in ddim, we are looking into the previous alphas_cumprod

+ # For the final step, there is no previous alphas_cumprod because we are already at 0

+ # `set_alpha_to_one` decides whether we set this parameter simply to one or

+ # whether we use the final alpha of the "non-previous" one.

+ self.final_alpha_cumprod = torch.tensor(1.0) if set_alpha_to_one else self.alphas_cumprod[0]

+

+ # standard deviation of the initial noise distribution

+ self.init_noise_sigma = 1.0

+

+ # settable values

+ self.num_inference_steps = None

+ self.timesteps = torch.from_numpy(np.arange(0, num_train_timesteps)[::-1].copy().astype(np.int64))

+

+ def scale_model_input(self, sample: torch.FloatTensor, timestep: Optional[int] = None) -> torch.FloatTensor:

+ """

+ Ensures interchangeability with schedulers that need to scale the denoising model input depending on the

+ current timestep.

+ Args:

+ sample (`torch.FloatTensor`):

+ The input sample.

+ timestep (`int`, *optional*):

+ The current timestep in the diffusion chain.

+ Returns:

+ `torch.FloatTensor`:

+ A scaled input sample.

+ """

+ return sample

+

+ def _get_variance(self, timestep, prev_timestep):

+ alpha_prod_t = self.alphas_cumprod[timestep]

+ alpha_prod_t_prev = self.alphas_cumprod[prev_timestep] if prev_timestep >= 0 else self.final_alpha_cumprod

+ beta_prod_t = 1 - alpha_prod_t

+ beta_prod_t_prev = 1 - alpha_prod_t_prev

+

+ variance = (beta_prod_t_prev / beta_prod_t) * (1 - alpha_prod_t / alpha_prod_t_prev)

+

+ return variance

+

+ # Copied from diffusers.schedulers.scheduling_ddpm.DDPMScheduler._threshold_sample

+ def _threshold_sample(self, sample: torch.FloatTensor) -> torch.FloatTensor:

+ """

+ "Dynamic thresholding: At each sampling step we set s to a certain percentile absolute pixel value in xt0 (the

+ prediction of x_0 at timestep t), and if s > 1, then we threshold xt0 to the range [-s, s] and then divide by

+ s. Dynamic thresholding pushes saturated pixels (those near -1 and 1) inwards, thereby actively preventing

+ pixels from saturation at each step. We find that dynamic thresholding results in significantly better

+ photorealism as well as better image-text alignment, especially when using very large guidance weights."

+ https://arxiv.org/abs/2205.11487

+ """

+ dtype = sample.dtype

+ batch_size, channels, height, width = sample.shape

+

+ if dtype not in (torch.float32, torch.float64):

+ sample = sample.float() # upcast for quantile calculation, and clamp not implemented for cpu half

+

+ # Flatten sample for doing quantile calculation along each image

+ sample = sample.reshape(batch_size, channels * height * width)

+

+ abs_sample = sample.abs() # "a certain percentile absolute pixel value"

+

+ s = torch.quantile(abs_sample, self.config.dynamic_thresholding_ratio, dim=1)

+ s = torch.clamp(

+ s, min=1, max=self.config.sample_max_value

+ ) # When clamped to min=1, equivalent to standard clipping to [-1, 1]

+

+ s = s.unsqueeze(1) # (batch_size, 1) because clamp will broadcast along dim=0

+ sample = torch.clamp(sample, -s, s) / s # "we threshold xt0 to the range [-s, s] and then divide by s"

+

+ sample = sample.reshape(batch_size, channels, height, width)

+ sample = sample.to(dtype)

+

+ return sample

+

+ def set_timesteps(

+ self, strength, num_inference_steps: int, lcm_origin_steps: int, device: Union[str, torch.device] = None

+ ):

+ """

+ Sets the discrete timesteps used for the diffusion chain (to be run before inference).

+ Args:

+ strength (`float`):

+ Fraction of the original LCM training schedule to keep. Lower values start denoising from a less noisy

+ timestep and preserve more of the input image.

+ num_inference_steps (`int`):

+ The number of diffusion steps used when generating samples with a pre-trained model.

+ lcm_origin_steps (`int`):

+ The number of timesteps in the original LCM training schedule.

+ device (`str` or `torch.device`, *optional*):

+ The device to which the timesteps are moved.

+ """

+

+ if num_inference_steps > self.config.num_train_timesteps:

+ raise ValueError(

+ f"`num_inference_steps`: {num_inference_steps} cannot be larger than `self.config.num_train_timesteps`:"

+ f" {self.config.num_train_timesteps} as the unet model trained with this scheduler can only handle"

+ f" a maximum of {self.config.num_train_timesteps} timesteps."

+ )

+

+ self.num_inference_steps = num_inference_steps

+

+ # LCM Timesteps Setting: # Linear Spacing

+ c = self.config.num_train_timesteps // lcm_origin_steps

+ lcm_origin_timesteps = (

+ np.asarray(list(range(1, int(lcm_origin_steps * strength) + 1))) * c - 1

+ ) # LCM Training Steps Schedule

+ skipping_step = len(lcm_origin_timesteps) // num_inference_steps

+ timesteps = lcm_origin_timesteps[::-skipping_step][:num_inference_steps] # LCM Inference Steps Schedule

+

+ self.timesteps = torch.from_numpy(timesteps.copy()).to(device)

+

+ def get_scalings_for_boundary_condition_discrete(self, t):

+ self.sigma_data = 0.5 # Default: 0.5

+

+ # By dividing 0.1: This is almost a delta function at t=0.

+ c_skip = self.sigma_data**2 / ((t / 0.1) ** 2 + self.sigma_data**2)

+ c_out = (t / 0.1) / ((t / 0.1) ** 2 + self.sigma_data**2) ** 0.5

+ return c_skip, c_out

+

+ def step(

+ self,

+ model_output: torch.FloatTensor,

+ timeindex: int,

+ timestep: int,

+ sample: torch.FloatTensor,

+ eta: float = 0.0,

+ use_clipped_model_output: bool = False,

+ generator=None,

+ variance_noise: Optional[torch.FloatTensor] = None,

+ return_dict: bool = True,

+ ) -> Union[LCMSchedulerOutput, Tuple]:

+ """

+ Predict the sample from the previous timestep by reversing the SDE. This function propagates the diffusion

+ process from the learned model outputs (most often the predicted noise).

+ Args:

+ model_output (`torch.FloatTensor`):

+ The direct output from learned diffusion model.

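+ timeindex (`int`):

+ The index of the current timestep in `self.timesteps`, used to look up the following timestep in the schedule.

+ timestep (`int`):

+ The current discrete timestep in the diffusion chain.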

+ sample (`torch.FloatTensor`):

+ A current instance of a sample created by the diffusion process.

+ eta (`float`):

+ The weight of noise for added noise in diffusion step.

+ use_clipped_model_output (`bool`, defaults to `False`):

+ If `True`, computes "corrected" `model_output` from the clipped predicted original sample. Necessary

+ because predicted original sample is clipped to [-1, 1] when `self.config.clip_sample` is `True`. If no

+ clipping has happened, "corrected" `model_output` would coincide with the one provided as input and

+ `use_clipped_model_output` has no effect.

+ generator (`torch.Generator`, *optional*):

+ A random number generator.

+ variance_noise (`torch.FloatTensor`):

+ Alternative to generating noise with `generator` by directly providing the noise for the variance

+ itself. Useful for methods such as [`CycleDiffusion`].

+ return_dict (`bool`, *optional*, defaults to `True`):

+ Whether or not to return a [`~schedulers.scheduling_lcm.LCMSchedulerOutput`] or `tuple`.

+ Returns:

+ [`~schedulers.scheduling_utils.LCMSchedulerOutput`] or `tuple`:

+ If return_dict is `True`, [`~schedulers.scheduling_lcm.LCMSchedulerOutput`] is returned, otherwise a

+ tuple is returned where the first element is the sample tensor.

+ """

+ if self.num_inference_steps is None:

+ raise ValueError(

+ "Number of inference steps is 'None', you need to run 'set_timesteps' after creating the scheduler"

+ )

+

+ # 1. get previous step value

+ prev_timeindex = timeindex + 1

+ if prev_timeindex < len(self.timesteps):

+ prev_timestep = self.timesteps[prev_timeindex]

+ else:

+ prev_timestep = timestep

+

+ # 2. compute alphas, betas

+ alpha_prod_t = self.alphas_cumprod[timestep]

+ alpha_prod_t_prev = self.alphas_cumprod[prev_timestep] if prev_timestep >= 0 else self.final_alpha_cumprod

+

+ beta_prod_t = 1 - alpha_prod_t

+ beta_prod_t_prev = 1 - alpha_prod_t_prev

+

+ # 3. Get scalings for boundary conditions

+ c_skip, c_out = self.get_scalings_for_boundary_condition_discrete(timestep)

+

+ # 4. Different Parameterization:

+ parameterization = self.config.prediction_type

+

+ if parameterization == "epsilon": # noise-prediction

+ pred_x0 = (sample - beta_prod_t.sqrt() * model_output) / alpha_prod_t.sqrt()

+

+ elif parameterization == "sample": # x-prediction

+ pred_x0 = model_output

+

+ elif parameterization == "v_prediction": # v-prediction

+ pred_x0 = alpha_prod_t.sqrt() * sample - beta_prod_t.sqrt() * model_output

+

+ # 5. Denoise model output using boundary conditions

+ denoised = c_out * pred_x0 + c_skip * sample

+

+ # 6. Sample z ~ N(0, I), for multistep inference

+ # Noise is not used for one-step sampling.

+ if len(self.timesteps) > 1:

+ noise = torch.randn(model_output.shape).to(model_output.device)

+ prev_sample = alpha_prod_t_prev.sqrt() * denoised + beta_prod_t_prev.sqrt() * noise

+ else:

+ prev_sample = denoised

+

+ if not return_dict:

+ return (prev_sample, denoised)

+

+ return LCMSchedulerOutput(prev_sample=prev_sample, denoised=denoised)

+

+ # Copied from diffusers.schedulers.scheduling_ddpm.DDPMScheduler.add_noise

+ def add_noise(

+ self,

+ original_samples: torch.FloatTensor,

+ noise: torch.FloatTensor,

+ timesteps: torch.IntTensor,

+ ) -> torch.FloatTensor:

+ # Make sure alphas_cumprod and timestep have same device and dtype as original_samples

+ alphas_cumprod = self.alphas_cumprod.to(device=original_samples.device, dtype=original_samples.dtype)

+ timesteps = timesteps.to(original_samples.device)

+

+ sqrt_alpha_prod = alphas_cumprod[timesteps] ** 0.5

+ sqrt_alpha_prod = sqrt_alpha_prod.flatten()

+ while len(sqrt_alpha_prod.shape) < len(original_samples.shape):

+ sqrt_alpha_prod = sqrt_alpha_prod.unsqueeze(-1)

+

+ sqrt_one_minus_alpha_prod = (1 - alphas_cumprod[timesteps]) ** 0.5

+ sqrt_one_minus_alpha_prod = sqrt_one_minus_alpha_prod.flatten()

+ while len(sqrt_one_minus_alpha_prod.shape) < len(original_samples.shape):

+ sqrt_one_minus_alpha_prod = sqrt_one_minus_alpha_prod.unsqueeze(-1)

+

+ noisy_samples = sqrt_alpha_prod * original_samples + sqrt_one_minus_alpha_prod * noise

+ return noisy_samples

+

+ # Copied from diffusers.schedulers.scheduling_ddpm.DDPMScheduler.get_velocity

+ def get_velocity(

+ self, sample: torch.FloatTensor, noise: torch.FloatTensor, timesteps: torch.IntTensor

+ ) -> torch.FloatTensor:

+ # Make sure alphas_cumprod and timestep have same device and dtype as sample

+ alphas_cumprod = self.alphas_cumprod.to(device=sample.device, dtype=sample.dtype)

+ timesteps = timesteps.to(sample.device)

+

+ sqrt_alpha_prod = alphas_cumprod[timesteps] ** 0.5

+ sqrt_alpha_prod = sqrt_alpha_prod.flatten()

+ while len(sqrt_alpha_prod.shape) < len(sample.shape):

+ sqrt_alpha_prod = sqrt_alpha_prod.unsqueeze(-1)

+

+ sqrt_one_minus_alpha_prod = (1 - alphas_cumprod[timesteps]) ** 0.5

+ sqrt_one_minus_alpha_prod = sqrt_one_minus_alpha_prod.flatten()

+ while len(sqrt_one_minus_alpha_prod.shape) < len(sample.shape):

+ sqrt_one_minus_alpha_prod = sqrt_one_minus_alpha_prod.unsqueeze(-1)

+

+ velocity = sqrt_alpha_prod * noise - sqrt_one_minus_alpha_prod * sample

+ return velocity

+

+ def __len__(self):

+ return self.config.num_train_timesteps
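The `set_timesteps` method of `LCMSchedulerWithTimestamp` in `latent_consistency_img2img.py` truncates the original LCM training schedule by `strength` before subsampling it to `num_inference_steps`. The sketch below re-implements that arithmetic in plain Python (no NumPy or PyTorch) so the resulting schedule can be inspected; the helper name `lcm_img2img_timesteps` is hypothetical and this is an illustration, not part of the pipeline.

```python
def lcm_img2img_timesteps(num_train_timesteps, lcm_origin_steps, num_inference_steps, strength):
    """Plain-Python mirror of the set_timesteps arithmetic (hypothetical helper)."""
    # Spacing of the original LCM training schedule, e.g. 1000 // 50 = 20.
    c = num_train_timesteps // lcm_origin_steps
    # Keep only the first `strength` fraction of that schedule: c - 1, 2c - 1, ...
    origin = [k * c - 1 for k in range(1, int(lcm_origin_steps * strength) + 1)]
    # Walk it backwards from the noisiest kept timestep, taking every skipping_step-th entry.
    skipping_step = len(origin) // num_inference_steps
    return origin[::-skipping_step][:num_inference_steps]


print(lcm_img2img_timesteps(1000, 50, 4, 0.8))  # [799, 599, 399, 199]
print(lcm_img2img_timesteps(1000, 50, 4, 1.0))  # [999, 759, 519, 279]
```

With the pipeline defaults (`num_train_timesteps=1000`, `lcm_origin_steps=50`, `num_inference_steps=4`, `strength=0.8`), the first timestep is 799, which is also the `latent_timestep` the pipeline passes to `add_noise` when noising the input image. If `int(lcm_origin_steps * strength)` is smaller than `num_inference_steps`, `skipping_step` becomes 0 and the slice raises a `ValueError`, in this sketch and in the original NumPy slicing alike.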

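`LCMSchedulerWithTimestamp.step` combines the model's x0 estimate with the current sample using consistency-model boundary-condition scalings (`c_skip`, `c_out`), with `sigma_data = 0.5` and the timestep divided by 0.1. The following is a minimal scalar sketch of that computation for the `epsilon` parameterization, written in plain Python with hypothetical helper names (`boundary_scalings`, `lcm_denoise_epsilon`); it only illustrates why the result reduces to the input sample at `t = 0` and to the x0 estimate at large `t`.

```python
import math


def boundary_scalings(t, sigma_data=0.5):
    """Scalar mirror of get_scalings_for_boundary_condition_discrete (hypothetical helper)."""
    scaled_t = t / 0.1  # dividing by 0.1 makes c_skip drop from 1 to ~0 very quickly after t = 0
    c_skip = sigma_data**2 / (scaled_t**2 + sigma_data**2)
    c_out = scaled_t / (scaled_t**2 + sigma_data**2) ** 0.5
    return c_skip, c_out


def lcm_denoise_epsilon(sample, eps_pred, alpha_prod_t, t):
    """One consistency 'denoise' for epsilon prediction on plain floats (illustrative only)."""
    c_skip, c_out = boundary_scalings(t)
    # Invert x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps to estimate x0.
    pred_x0 = (sample - math.sqrt(1 - alpha_prod_t) * eps_pred) / math.sqrt(alpha_prod_t)
    return c_out * pred_x0 + c_skip * sample


# At t = 0 the boundary condition returns the input sample unchanged.
print(boundary_scalings(0))  # (1.0, 0.0)

# At a large timestep the output is essentially the x0 estimate.
x0, eps, alpha_bar = 0.5, -1.2, 0.01
x_t = math.sqrt(alpha_bar) * x0 + math.sqrt(1 - alpha_bar) * eps
print(round(lcm_denoise_epsilon(x_t, eps, alpha_bar, 999), 6))  # 0.5
```

In the actual scheduler these quantities are tensors looked up from `alphas_cumprod`, and for multistep sampling the returned `denoised` is re-noised to the next timestep in the schedule with fresh Gaussian noise.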
diff --git a/examples/dreambooth/train_dreambooth.py b/examples/dreambooth/train_dreambooth.py

index 6ad79a47deb5..6d59ee4de383 100644

--- a/examples/dreambooth/train_dreambooth.py

+++ b/examples/dreambooth/train_dreambooth.py

@@ -1167,7 +1167,7 @@ def compute_text_embeddings(prompt):

if args.resume_from_checkpoint != "latest":

path = os.path.basename(args.resume_from_checkpoint)

else:

- # Get the mos recent checkpoint

+ # Get the most recent checkpoint

dirs = os.listdir(args.output_dir)

dirs = [d for d in dirs if d.startswith("checkpoint")]

dirs = sorted(dirs, key=lambda x: int(x.split("-")[1]))

@@ -1364,7 +1364,7 @@ def compute_text_embeddings(prompt):

if global_step >= args.max_train_steps:

break

- # Create the pipeline using using the trained modules and save it.

+ # Create the pipeline using the trained modules and save it.

accelerator.wait_for_everyone()

if accelerator.is_main_process:

pipeline_args = {}
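The first hunk of this file resolves `--resume_from_checkpoint latest` by listing `args.output_dir`, keeping entries that start with "checkpoint", and sorting on the integer step after the dash. The following sketch reproduces that selection logic on its own. The function name `latest_checkpoint` and the `None` fallback for an empty directory are assumptions; the script's handling of the no-checkpoint case is not shown in this hunk.

```python
import os
import tempfile

def latest_checkpoint(output_dir: str):
    """Return the newest "checkpoint-<step>" entry in output_dir, or None (hypothetical helper)."""
    dirs = [d for d in os.listdir(output_dir) if d.startswith("checkpoint")]
    # Sort numerically on the step suffix; a plain string sort would rank
    # "checkpoint-500" after "checkpoint-1500".
    dirs = sorted(dirs, key=lambda x: int(x.split("-")[1]))
    return dirs[-1] if dirs else None

with tempfile.TemporaryDirectory() as tmp:
    for step in (500, 1000, 1500):
        os.mkdir(os.path.join(tmp, f"checkpoint-{step}"))
    os.mkdir(os.path.join(tmp, "logs"))  # ignored: no "checkpoint" prefix
    print(latest_checkpoint(tmp))  # checkpoint-1500
```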

diff --git a/examples/text_to_image/train_text_to_image_flax.py b/examples/text_to_image/train_text_to_image_flax.py

index ac3afcbaba12..63ea53c52a11 100644

--- a/examples/text_to_image/train_text_to_image_flax.py

+++ b/examples/text_to_image/train_text_to_image_flax.py

@@ -208,6 +208,12 @@ def parse_args():

),

)

parser.add_argument("--local_rank", type=int, default=-1, help="For distributed training: local_rank")

+ parser.add_argument(

+ "--from_pt",

+ action="store_true",

+ default=False,

+ help="Flag to indicate whether to convert models from PyTorch.",

+ )

args = parser.parse_args()

env_local_rank = int(os.environ.get("LOCAL_RANK", -1))

@@ -374,16 +380,31 @@ def collate_fn(examples):

# Load models and create wrapper for stable diffusion

tokenizer = CLIPTokenizer.from_pretrained(

- args.pretrained_model_name_or_path, revision=args.revision, subfolder="tokenizer"

+ args.pretrained_model_name_or_path,

+ from_pt=args.from_pt,

+ revision=args.revision,

+ subfolder="tokenizer",

)

text_encoder = FlaxCLIPTextModel.from_pretrained(

- args.pretrained_model_name_or_path, revision=args.revision, subfolder="text_encoder", dtype=weight_dtype

+ args.pretrained_model_name_or_path,

+ from_pt=args.from_pt,

+ revision=args.revision,

+ subfolder="text_encoder",

+ dtype=weight_dtype,

)

vae, vae_params = FlaxAutoencoderKL.from_pretrained(

- args.pretrained_model_name_or_path, revision=args.revision, subfolder="vae", dtype=weight_dtype

+ args.pretrained_model_name_or_path,

+ from_pt=args.from_pt,

+ revision=args.revision,

+ subfolder="vae",

+ dtype=weight_dtype,

)

unet, unet_params = FlaxUNet2DConditionModel.from_pretrained(

- args.pretrained_model_name_or_path, revision=args.revision, subfolder="unet", dtype=weight_dtype

+ args.pretrained_model_name_or_path,

+ from_pt=args.from_pt,

+ revision=args.revision,

+ subfolder="unet",

+ dtype=weight_dtype,

)

# Optimization
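The new `--from_pt` option is a `store_true` switch: it is `False` unless the flag appears on the command line, and the parsed value is then forwarded as `from_pt=args.from_pt` to each `from_pretrained` call. The following sketch exercises only the argument definition, with hypothetical command lines passed directly to `parse_args`.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument(
    "--from_pt",
    action="store_true",
    default=False,
    help="Flag to indicate whether to convert models from PyTorch.",
)

print(parser.parse_args([]).from_pt)             # False
print(parser.parse_args(["--from_pt"]).from_pt)  # True
```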

diff --git a/src/diffusers/__init__.py b/src/diffusers/__init__.py

index 42f352c029c8..9d146ac233c2 100644

--- a/src/diffusers/__init__.py

+++ b/src/diffusers/__init__.py

@@ -142,6 +142,7 @@

"KarrasVeScheduler",

"KDPM2AncestralDiscreteScheduler",

"KDPM2DiscreteScheduler",

+ "LCMScheduler",

"PNDMScheduler",

"RePaintScheduler",

"SchedulerMixin",

@@ -226,6 +227,7 @@

"KandinskyV22Pipeline",

"KandinskyV22PriorEmb2EmbPipeline",

"KandinskyV22PriorPipeline",

+ "LatentConsistencyModelPipeline",

"LDMTextToImagePipeline",

"MusicLDMPipeline",

"PaintByExamplePipeline",

@@ -499,6 +501,7 @@

KarrasVeScheduler,

KDPM2AncestralDiscreteScheduler,

KDPM2DiscreteScheduler,

+ LCMScheduler,

PNDMScheduler,

RePaintScheduler,

SchedulerMixin,

@@ -564,6 +567,7 @@

KandinskyV22Pipeline,

KandinskyV22PriorEmb2EmbPipeline,

KandinskyV22PriorPipeline,

+ LatentConsistencyModelPipeline,

LDMTextToImagePipeline,

MusicLDMPipeline,

PaintByExamplePipeline,

diff --git a/src/diffusers/configuration_utils.py b/src/diffusers/configuration_utils.py

index 9bc25155a0b6..a67fa9d41ca5 100644

--- a/src/diffusers/configuration_utils.py

+++ b/src/diffusers/configuration_utils.py

@@ -485,10 +485,18 @@ def extract_init_dict(cls, config_dict, **kwargs):

# remove attributes from orig class that cannot be expected

orig_cls_name = config_dict.pop("_class_name", cls.__name__)

- if orig_cls_name != cls.__name__ and hasattr(diffusers_library, orig_cls_name):

+ if (

+ isinstance(orig_cls_name, str)

+ and orig_cls_name != cls.__name__

+ and hasattr(diffusers_library, orig_cls_name)

+ ):

orig_cls = getattr(diffusers_library, orig_cls_name)

unexpected_keys_from_orig = cls._get_init_keys(orig_cls) - expected_keys

config_dict = {k: v for k, v in config_dict.items() if k not in unexpected_keys_from_orig}

+ elif not isinstance(orig_cls_name, str) and not isinstance(orig_cls_name, (list, tuple)):

+ raise ValueError(

+ "Make sure that the `_class_name` is of type string or list of string (for custom pipelines)."

+ )

# remove private attributes

config_dict = {k: v for k, v in config_dict.items() if not k.startswith("_")}
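The change to `extract_init_dict` checks `isinstance(orig_cls_name, str)` before calling `hasattr(diffusers_library, orig_cls_name)`. This matters because `hasattr` raises `TypeError` when the attribute name is not a string, and custom pipelines may store `_class_name` as a list. The following simplified sketch isolates that branch. The function name `resolve_orig_class` is hypothetical, and the `known_classes` set stands in for the `hasattr(diffusers_library, ...)` lookup. Note that the new `elif` condition is equivalent to `not isinstance(orig_cls_name, (str, list, tuple))`.

```python
from typing import Optional, Set

def resolve_orig_class(config_dict: dict, cls_name: str, known_classes: Set[str]) -> Optional[str]:
    """Simplified sketch of the `_class_name` branch (hypothetical helper)."""
    orig_cls_name = config_dict.pop("_class_name", cls_name)
    if isinstance(orig_cls_name, str) and orig_cls_name != cls_name and orig_cls_name in known_classes:
        # The real code looks this class up and drops init keys that `cls` does not expect.
        return orig_cls_name
    elif not isinstance(orig_cls_name, (str, list, tuple)):
        raise ValueError(
            "Make sure that the `_class_name` is of type string or list of string (for custom pipelines)."
        )
    # Same class, unknown class name, or a list/tuple (custom pipeline): nothing to filter.
    return None

known = {"DDIMScheduler", "PNDMScheduler"}
print(resolve_orig_class({"_class_name": "PNDMScheduler"}, "DDIMScheduler", known))  # PNDMScheduler
print(resolve_orig_class({"_class_name": ["my_repo", "MyPipeline"]}, "DiffusionPipeline", known))  # None
```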

diff --git a/src/diffusers/loaders.py b/src/diffusers/loaders.py

index e36088e4645d..67043866be6e 100644

--- a/src/diffusers/loaders.py

+++ b/src/diffusers/loaders.py

@@ -3087,13 +3087,13 @@ def from_single_file(cls, pretrained_model_link_or_path, **kwargs):

Examples:

```py

- from diffusers import StableDiffusionControlnetPipeline, ControlNetModel

+ from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

url = "https://huggingface.co/lllyasviel/ControlNet-v1-1/blob/main/control_v11p_sd15_canny.pth" # can also be a local path

model = ControlNetModel.from_single_file(url)

url = "https://huggingface.co/runwayml/stable-diffusion-v1-5/blob/main/v1-5-pruned.safetensors" # can also be a local path

- pipe = StableDiffusionControlnetPipeline.from_single_file(url, controlnet=controlnet)

+ pipe = StableDiffusionControlNetPipeline.from_single_file(url, controlnet=model)

```

"""

# import here to avoid circular dependency

@@ -3171,7 +3171,7 @@ def from_single_file(cls, pretrained_model_link_or_path, **kwargs):

)

if torch_dtype is not None:

- controlnet.to(torch_dtype=torch_dtype)

+ controlnet.to(dtype=torch_dtype)

return controlnet

diff --git a/src/diffusers/models/activations.py b/src/diffusers/models/activations.py

index e66d90040fd2..8b75162ba597 100644

--- a/src/diffusers/models/activations.py

+++ b/src/diffusers/models/activations.py

@@ -21,6 +21,15 @@

from .lora import LoRACompatibleLinear

+ACTIVATION_FUNCTIONS = {

+ "swish": nn.SiLU(),

+ "silu": nn.SiLU(),

+ "mish": nn.Mish(),

+ "gelu": nn.GELU(),

+ "relu": nn.ReLU(),

+}

+

+

def get_activation(act_fn: str) -> nn.Module:

"""Helper function to get activation function from string.

@@ -30,14 +39,10 @@ def get_activation(act_fn: str) -> nn.Module:

Returns:

nn.Module: Activation function.

"""

- if act_fn in ["swish", "silu"]:

- return nn.SiLU()

- elif act_fn == "mish":

- return nn.Mish()

- elif act_fn == "gelu":

- return nn.GELU()

- elif act_fn == "relu":

- return nn.ReLU()

+

+ act_fn = act_fn.lower()

+ if act_fn in ACTIVATION_FUNCTIONS:

+ return ACTIVATION_FUNCTIONS[act_fn]

else:

raise ValueError(f"Unsupported activation function: {act_fn}")
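The refactored `get_activation` lowercases the name and looks it up in a module-level table instead of an if/elif chain. The following plain-Python sketch uses scalar functions as stand-ins for the `nn.Module` instances; the math is written out by hand and is an assumption of this sketch, not the torch implementation. One design consequence carries over: the real table holds instances created once at import time, so repeated `get_activation("silu")` calls return the same `nn.SiLU` object. This is harmless for these parameter-free activations.

```python
import math
from typing import Callable, Dict

def _silu(x: float) -> float:
    return x / (1.0 + math.exp(-x))

# Scalar stand-ins for the shared nn.Module instances in the real table.
ACTIVATION_FUNCTIONS: Dict[str, Callable[[float], float]] = {
    "swish": _silu,
    "silu": _silu,
    "mish": lambda x: x * math.tanh(math.log1p(math.exp(x))),  # x * tanh(softplus(x))
    "gelu": lambda x: 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0))),  # exact (erf) GELU
    "relu": lambda x: max(0.0, x),
}

def get_activation(act_fn: str) -> Callable[[float], float]:
    act_fn = act_fn.lower()  # so "SiLU", "GELU", ... are accepted too
    if act_fn in ACTIVATION_FUNCTIONS:
        return ACTIVATION_FUNCTIONS[act_fn]
    raise ValueError(f"Unsupported activation function: {act_fn}")

print(get_activation("ReLU")(-2.0))                       # 0.0
print(get_activation("silu") is get_activation("SiLU"))  # True: same shared object
```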

diff --git a/src/diffusers/models/attention_processor.py b/src/diffusers/models/attention_processor.py

index 9856f3c7739c..efed305a0e96 100644

--- a/src/diffusers/models/attention_processor.py

+++ b/src/diffusers/models/attention_processor.py

@@ -40,14 +40,50 @@ class Attention(nn.Module):

A cross attention layer.

Parameters:

- query_dim (`int`): The number of channels in the query.

+ query_dim (`int`):

+ The number of channels in the query.

cross_attention_dim (`int`, *optional*):