diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..5c4c9a5

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,135 @@

+# Byte-compiled / optimized / DLL files

+__pycache__/

+*.py[cod]

+*$py.class

+*.ipynb

+

+# C extensions

+*.so

+

+# Distribution / packaging

+.Python

+build/

+develop-eggs/

+dist/

+downloads/

+eggs/

+.eggs/

+lib/

+lib64/

+parts/

+sdist/

+var/

+wheels/

+*.egg-info/

+.installed.cfg

+*.egg

+MANIFEST

+

+# PyInstaller

+# Usually these files are written by a python script from a template

+# before PyInstaller builds the exe, so as to inject date/other infos into it.

+*.manifest

+*.spec

+

+# Installer logs

+pip-log.txt

+pip-delete-this-directory.txt

+

+# Unit test / coverage reports

+htmlcov/

+.tox/

+.coverage

+.coverage.*

+.cache

+nosetests.xml

+coverage.xml

+*.cover

+.hypothesis/

+.pytest_cache/

+

+# Translations

+*.mo

+*.pot

+

+# Django stuff:

+*.log

+local_settings.py

+db.sqlite3

+

+# Flask stuff:

+instance/

+.webassets-cache

+

+# Scrapy stuff:

+.scrapy

+

+# Sphinx documentation

+docs/en/_build/

+docs/zh_cn/_build/

+

+# PyBuilder

+target/

+

+# Jupyter Notebook

+.ipynb_checkpoints

+

+# pyenv

+.python-version

+

+# celery beat schedule file

+celerybeat-schedule

+

+# SageMath parsed files

+*.sage.py

+

+# Environments

+.env

+.venv

+env/

+venv/

+ENV/

+env.bak/

+venv.bak/

+

+# Spyder project settings

+.spyderproject

+.spyproject

+

+# Rope project settings

+.ropeproject

+

+# mkdocs documentation

+/site

+

+# mypy

+.mypy_cache/

+

+# cython generated cpp

+data

+.vscode

+.idea

+

+# custom

+*.pkl

+*.pkl.json

+*.log.json

+work_dirs/

+exps/

+*~

+mmdet3d/.mim

+

+# Pytorch

+*.pth

+

+# demo

+*.jpg

+*.png

+data/s3dis/Stanford3dDataset_v1.2_Aligned_Version/

+data/scannet/scans/

+data/sunrgbd/OFFICIAL_SUNRGBD/

+*.obj

+*.ply

+

+# Waymo evaluation

+mmdet3d/core/evaluation/waymo_utils/compute_detection_metrics_main

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

new file mode 100644

index 0000000..790bfb1

--- /dev/null

+++ b/.pre-commit-config.yaml

@@ -0,0 +1,50 @@

+repos:

+ - repo: https://github.com/PyCQA/flake8

+ rev: 3.8.3

+ hooks:

+ - id: flake8

+ - repo: https://github.com/PyCQA/isort

+ rev: 5.10.1

+ hooks:

+ - id: isort

+ - repo: https://github.com/pre-commit/mirrors-yapf

+ rev: v0.30.0

+ hooks:

+ - id: yapf

+ - repo: https://github.com/pre-commit/pre-commit-hooks

+ rev: v3.1.0

+ hooks:

+ - id: trailing-whitespace

+ - id: check-yaml

+ - id: end-of-file-fixer

+ - id: requirements-txt-fixer

+ - id: double-quote-string-fixer

+ - id: check-merge-conflict

+ - id: fix-encoding-pragma

+ args: ["--remove"]

+ - id: mixed-line-ending

+ args: ["--fix=lf"]

+ - repo: https://github.com/codespell-project/codespell

+ rev: v2.1.0

+ hooks:

+ - id: codespell

+ - repo: https://github.com/executablebooks/mdformat

+ rev: 0.7.14

+ hooks:

+ - id: mdformat

+ args: [ "--number" ]

+ additional_dependencies:

+ - mdformat-gfm

+ - mdformat_frontmatter

+ - linkify-it-py

+ - repo: https://github.com/myint/docformatter

+ rev: v1.3.1

+ hooks:

+ - id: docformatter

+ args: ["--in-place", "--wrap-descriptions", "79"]

+ - repo: https://github.com/open-mmlab/pre-commit-hooks

+ rev: v0.2.0 # Use the ref you want to point at

+ hooks:

+ - id: check-algo-readme

+ - id: check-copyright

+ args: ["mmdet3d"] # replace the dir_to_check with your expected directory to check

diff --git a/.readthedocs.yml b/.readthedocs.yml

new file mode 100644

index 0000000..49178bb

--- /dev/null

+++ b/.readthedocs.yml

@@ -0,0 +1,10 @@

+version: 2

+

+formats: all

+

+python:

+ version: 3.7

+ install:

+ - requirements: requirements/docs.txt

+ - requirements: requirements/runtime.txt

+ - requirements: requirements/readthedocs.txt

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000..e4cf43e

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,159 @@

+# Attribution-NonCommercial 4.0 International

+

+> *Creative Commons Corporation (“Creative Commons”) is not a law firm and does not provide legal services or legal advice. Distribution of Creative Commons public licenses does not create a lawyer-client or other relationship. Creative Commons makes its licenses and related information available on an “as-is” basis. Creative Commons gives no warranties regarding its licenses, any material licensed under their terms and conditions, or any related information. Creative Commons disclaims all liability for damages resulting from their use to the fullest extent possible.*

+>

+> ### Using Creative Commons Public Licenses

+>

+> Creative Commons public licenses provide a standard set of terms and conditions that creators and other rights holders may use to share original works of authorship and other material subject to copyright and certain other rights specified in the public license below. The following considerations are for informational purposes only, are not exhaustive, and do not form part of our licenses.

+>

+> * __Considerations for licensors:__ Our public licenses are intended for use by those authorized to give the public permission to use material in ways otherwise restricted by copyright and certain other rights. Our licenses are irrevocable. Licensors should read and understand the terms and conditions of the license they choose before applying it. Licensors should also secure all rights necessary before applying our licenses so that the public can reuse the material as expected. Licensors should clearly mark any material not subject to the license. This includes other CC-licensed material, or material used under an exception or limitation to copyright. [More considerations for licensors](http://wiki.creativecommons.org/Considerations_for_licensors_and_licensees#Considerations_for_licensors).

+>

+> * __Considerations for the public:__ By using one of our public licenses, a licensor grants the public permission to use the licensed material under specified terms and conditions. If the licensor’s permission is not necessary for any reason–for example, because of any applicable exception or limitation to copyright–then that use is not regulated by the license. Our licenses grant only permissions under copyright and certain other rights that a licensor has authority to grant. Use of the licensed material may still be restricted for other reasons, including because others have copyright or other rights in the material. A licensor may make special requests, such as asking that all changes be marked or described. Although not required by our licenses, you are encouraged to respect those requests where reasonable. [More considerations for the public](http://wiki.creativecommons.org/Considerations_for_licensors_and_licensees#Considerations_for_licensees).

+

+## Creative Commons Attribution-NonCommercial 4.0 International Public License

+

+By exercising the Licensed Rights (defined below), You accept and agree to be bound by the terms and conditions of this Creative Commons Attribution-NonCommercial 4.0 International Public License ("Public License"). To the extent this Public License may be interpreted as a contract, You are granted the Licensed Rights in consideration of Your acceptance of these terms and conditions, and the Licensor grants You such rights in consideration of benefits the Licensor receives from making the Licensed Material available under these terms and conditions.

+

+### Section 1 – Definitions.

+

+a. __Adapted Material__ means material subject to Copyright and Similar Rights that is derived from or based upon the Licensed Material and in which the Licensed Material is translated, altered, arranged, transformed, or otherwise modified in a manner requiring permission under the Copyright and Similar Rights held by the Licensor. For purposes of this Public License, where the Licensed Material is a musical work, performance, or sound recording, Adapted Material is always produced where the Licensed Material is synched in timed relation with a moving image.

+

+b. __Adapter's License__ means the license You apply to Your Copyright and Similar Rights in Your contributions to Adapted Material in accordance with the terms and conditions of this Public License.

+

+c. __Copyright and Similar Rights__ means copyright and/or similar rights closely related to copyright including, without limitation, performance, broadcast, sound recording, and Sui Generis Database Rights, without regard to how the rights are labeled or categorized. For purposes of this Public License, the rights specified in Section 2(b)(1)-(2) are not Copyright and Similar Rights.

+

+d. __Effective Technological Measures__ means those measures that, in the absence of proper authority, may not be circumvented under laws fulfilling obligations under Article 11 of the WIPO Copyright Treaty adopted on December 20, 1996, and/or similar international agreements.

+

+e. __Exceptions and Limitations__ means fair use, fair dealing, and/or any other exception or limitation to Copyright and Similar Rights that applies to Your use of the Licensed Material.

+

+f. __Licensed Material__ means the artistic or literary work, database, or other material to which the Licensor applied this Public License.

+

+g. __Licensed Rights__ means the rights granted to You subject to the terms and conditions of this Public License, which are limited to all Copyright and Similar Rights that apply to Your use of the Licensed Material and that the Licensor has authority to license.

+

+h. __Licensor__ means the individual(s) or entity(ies) granting rights under this Public License.

+

+i. __NonCommercial__ means not primarily intended for or directed towards commercial advantage or monetary compensation. For purposes of this Public License, the exchange of the Licensed Material for other material subject to Copyright and Similar Rights by digital file-sharing or similar means is NonCommercial provided there is no payment of monetary compensation in connection with the exchange.

+

+j. __Share__ means to provide material to the public by any means or process that requires permission under the Licensed Rights, such as reproduction, public display, public performance, distribution, dissemination, communication, or importation, and to make material available to the public including in ways that members of the public may access the material from a place and at a time individually chosen by them.

+

+k. __Sui Generis Database Rights__ means rights other than copyright resulting from Directive 96/9/EC of the European Parliament and of the Council of 11 March 1996 on the legal protection of databases, as amended and/or succeeded, as well as other essentially equivalent rights anywhere in the world.

+

+l. __You__ means the individual or entity exercising the Licensed Rights under this Public License. Your has a corresponding meaning.

+

+### Section 2 – Scope.

+

+a. ___License grant.___

+

+ 1. Subject to the terms and conditions of this Public License, the Licensor hereby grants You a worldwide, royalty-free, non-sublicensable, non-exclusive, irrevocable license to exercise the Licensed Rights in the Licensed Material to:

+

+ A. reproduce and Share the Licensed Material, in whole or in part, for NonCommercial purposes only; and

+

+ B. produce, reproduce, and Share Adapted Material for NonCommercial purposes only.

+

+ 2. __Exceptions and Limitations.__ For the avoidance of doubt, where Exceptions and Limitations apply to Your use, this Public License does not apply, and You do not need to comply with its terms and conditions.

+

+ 3. __Term.__ The term of this Public License is specified in Section 6(a).

+

+ 4. __Media and formats; technical modifications allowed.__ The Licensor authorizes You to exercise the Licensed Rights in all media and formats whether now known or hereafter created, and to make technical modifications necessary to do so. The Licensor waives and/or agrees not to assert any right or authority to forbid You from making technical modifications necessary to exercise the Licensed Rights, including technical modifications necessary to circumvent Effective Technological Measures. For purposes of this Public License, simply making modifications authorized by this Section 2(a)(4) never produces Adapted Material.

+

+ 5. __Downstream recipients.__

+

+ A. __Offer from the Licensor – Licensed Material.__ Every recipient of the Licensed Material automatically receives an offer from the Licensor to exercise the Licensed Rights under the terms and conditions of this Public License.

+

+ B. __No downstream restrictions.__ You may not offer or impose any additional or different terms or conditions on, or apply any Effective Technological Measures to, the Licensed Material if doing so restricts exercise of the Licensed Rights by any recipient of the Licensed Material.

+

+ 6. __No endorsement.__ Nothing in this Public License constitutes or may be construed as permission to assert or imply that You are, or that Your use of the Licensed Material is, connected with, or sponsored, endorsed, or granted official status by, the Licensor or others designated to receive attribution as provided in Section 3(a)(1)(A)(i).

+

+b. ___Other rights.___

+

+ 1. Moral rights, such as the right of integrity, are not licensed under this Public License, nor are publicity, privacy, and/or other similar personality rights; however, to the extent possible, the Licensor waives and/or agrees not to assert any such rights held by the Licensor to the limited extent necessary to allow You to exercise the Licensed Rights, but not otherwise.

+

+ 2. Patent and trademark rights are not licensed under this Public License.

+

+ 3. To the extent possible, the Licensor waives any right to collect royalties from You for the exercise of the Licensed Rights, whether directly or through a collecting society under any voluntary or waivable statutory or compulsory licensing scheme. In all other cases the Licensor expressly reserves any right to collect such royalties, including when the Licensed Material is used other than for NonCommercial purposes.

+

+### Section 3 – License Conditions.

+

+Your exercise of the Licensed Rights is expressly made subject to the following conditions.

+

+a. ___Attribution.___

+

+ 1. If You Share the Licensed Material (including in modified form), You must:

+

+ A. retain the following if it is supplied by the Licensor with the Licensed Material:

+

+ i. identification of the creator(s) of the Licensed Material and any others designated to receive attribution, in any reasonable manner requested by the Licensor (including by pseudonym if designated);

+

+ ii. a copyright notice;

+

+ iii. a notice that refers to this Public License;

+

+ iv. a notice that refers to the disclaimer of warranties;

+

+ v. a URI or hyperlink to the Licensed Material to the extent reasonably practicable;

+

+ B. indicate if You modified the Licensed Material and retain an indication of any previous modifications; and

+

+ C. indicate the Licensed Material is licensed under this Public License, and include the text of, or the URI or hyperlink to, this Public License.

+

+ 2. You may satisfy the conditions in Section 3(a)(1) in any reasonable manner based on the medium, means, and context in which You Share the Licensed Material. For example, it may be reasonable to satisfy the conditions by providing a URI or hyperlink to a resource that includes the required information.

+

+ 3. If requested by the Licensor, You must remove any of the information required by Section 3(a)(1)(A) to the extent reasonably practicable.

+

+ 4. If You Share Adapted Material You produce, the Adapter's License You apply must not prevent recipients of the Adapted Material from complying with this Public License.

+

+### Section 4 – Sui Generis Database Rights.

+

+Where the Licensed Rights include Sui Generis Database Rights that apply to Your use of the Licensed Material:

+

+a. for the avoidance of doubt, Section 2(a)(1) grants You the right to extract, reuse, reproduce, and Share all or a substantial portion of the contents of the database for NonCommercial purposes only;

+

+b. if You include all or a substantial portion of the database contents in a database in which You have Sui Generis Database Rights, then the database in which You have Sui Generis Database Rights (but not its individual contents) is Adapted Material; and

+

+c. You must comply with the conditions in Section 3(a) if You Share all or a substantial portion of the contents of the database.

+

+For the avoidance of doubt, this Section 4 supplements and does not replace Your obligations under this Public License where the Licensed Rights include other Copyright and Similar Rights.

+

+### Section 5 – Disclaimer of Warranties and Limitation of Liability.

+

+a. __Unless otherwise separately undertaken by the Licensor, to the extent possible, the Licensor offers the Licensed Material as-is and as-available, and makes no representations or warranties of any kind concerning the Licensed Material, whether express, implied, statutory, or other. This includes, without limitation, warranties of title, merchantability, fitness for a particular purpose, non-infringement, absence of latent or other defects, accuracy, or the presence or absence of errors, whether or not known or discoverable. Where disclaimers of warranties are not allowed in full or in part, this disclaimer may not apply to You.__

+

+b. __To the extent possible, in no event will the Licensor be liable to You on any legal theory (including, without limitation, negligence) or otherwise for any direct, special, indirect, incidental, consequential, punitive, exemplary, or other losses, costs, expenses, or damages arising out of this Public License or use of the Licensed Material, even if the Licensor has been advised of the possibility of such losses, costs, expenses, or damages. Where a limitation of liability is not allowed in full or in part, this limitation may not apply to You.__

+

+c. The disclaimer of warranties and limitation of liability provided above shall be interpreted in a manner that, to the extent possible, most closely approximates an absolute disclaimer and waiver of all liability.

+

+### Section 6 – Term and Termination.

+

+a. This Public License applies for the term of the Copyright and Similar Rights licensed here. However, if You fail to comply with this Public License, then Your rights under this Public License terminate automatically.

+

+b. Where Your right to use the Licensed Material has terminated under Section 6(a), it reinstates:

+

+ 1. automatically as of the date the violation is cured, provided it is cured within 30 days of Your discovery of the violation; or

+

+ 2. upon express reinstatement by the Licensor.

+

+ For the avoidance of doubt, this Section 6(b) does not affect any right the Licensor may have to seek remedies for Your violations of this Public License.

+

+c. For the avoidance of doubt, the Licensor may also offer the Licensed Material under separate terms or conditions or stop distributing the Licensed Material at any time; however, doing so will not terminate this Public License.

+

+d. Sections 1, 5, 6, 7, and 8 survive termination of this Public License.

+

+### Section 7 – Other Terms and Conditions.

+

+a. The Licensor shall not be bound by any additional or different terms or conditions communicated by You unless expressly agreed.

+

+b. Any arrangements, understandings, or agreements regarding the Licensed Material not stated herein are separate from and independent of the terms and conditions of this Public License.

+

+### Section 8 – Interpretation.

+

+a. For the avoidance of doubt, this Public License does not, and shall not be interpreted to, reduce, limit, restrict, or impose conditions on any use of the Licensed Material that could lawfully be made without permission under this Public License.

+

+b. To the extent possible, if any provision of this Public License is deemed unenforceable, it shall be automatically reformed to the minimum extent necessary to make it enforceable. If the provision cannot be reformed, it shall be severed from this Public License without affecting the enforceability of the remaining terms and conditions.

+

+c. No term or condition of this Public License will be waived and no failure to comply consented to unless expressly agreed to by the Licensor.

+

+d. Nothing in this Public License constitutes or may be interpreted as a limitation upon, or waiver of, any privileges and immunities that apply to the Licensor or You, including from the legal processes of any jurisdiction or authority.

+

+> Creative Commons is not a party to its public licenses. Notwithstanding, Creative Commons may elect to apply one of its public licenses to material it publishes and in those instances will be considered the “Licensor.” Except for the limited purpose of indicating that material is shared under a Creative Commons public license or as otherwise permitted by the Creative Commons policies published at [creativecommons.org/policies](http://creativecommons.org/policies), Creative Commons does not authorize the use of the trademark “Creative Commons” or any other trademark or logo of Creative Commons without its prior written consent including, without limitation, in connection with any unauthorized modifications to any of its public licenses or any other arrangements, understandings, or agreements concerning use of licensed material. For the avoidance of doubt, this paragraph does not form part of the public licenses.

+>

+> Creative Commons may be contacted at creativecommons.org

\ No newline at end of file

diff --git a/MANIFEST.in b/MANIFEST.in

new file mode 100644

index 0000000..7b9cae6

--- /dev/null

+++ b/MANIFEST.in

@@ -0,0 +1,5 @@

+include mmdet3d/.mim/model-index.yml

+include requirements/*.txt

+recursive-include mmdet3d/.mim/ops *.cpp *.cu *.h *.cc

+recursive-include mmdet3d/.mim/configs *.py *.yml

+recursive-include mmdet3d/.mim/tools *.sh *.py

diff --git a/README.md b/README.md

new file mode 100644

index 0000000..f0e531a

--- /dev/null

+++ b/README.md

@@ -0,0 +1,78 @@

+## Top-Down Beats Bottom-Up in 3D Instance Segmentation

+

+**News**:

+ * :fire: February, 2023. Source code has been published.

+

+This repository contains an implementation of TD3D, a 3D instance segmentation method introduced in our paper:

+

+> **Top-Down Beats Bottom-Up in 3D Instance Segmentation**

+> [Maksim Kolodiazhnyi](https://github.com/col14m),

+> [Danila Rukhovich](https://github.com/filaPro),

+> [Anna Vorontsova](https://github.com/highrut),

+> [Anton Konushin](https://scholar.google.com/citations?user=ZT_k-wMAAAAJ)

+>

+> Samsung AI Center Moscow

+>

+

+### Installation

+For convenience, we provide a [Dockerfile](docker/Dockerfile).

+

+Alternatively, you can install all required packages manually. This implementation is based on [mmdetection3d](https://github.com/open-mmlab/mmdetection3d) framework.

+

+Please refer to the original installation guide [getting_started.md](docs/getting_started.md), including MinkowskiEngine installation, replacing open-mmlab/mmdetection3d with samsunglabs/td3d.

+

+Most of the `TD3D`-related code locates in the following files:

+[detectors/td3d_instance_segmentor.py](mmdet3d/models/detectors/td3d_instance_segmentor.py),

+[necks/ngfc_neck.py](mmdet3d/models/necks/ngfc_neck.py),

+[decode_heads/td3d_instance_head.py](mmdet3d/models/decode_heads/td3d_instance_head.py).

+

+### Getting Started

+

+Please see [getting_started.md](docs/getting_started.md) for basic usage examples.

+We follow the `mmdetection3d` data preparation protocol described in [scannet](data/scannet), [s3dis](data/s3dis).

+

+

+**Training**

+

+To start training, run [train](tools/train.py) with `TD3D` [configs](configs/td3d_is):

+```shell

+python tools/train.py configs/td3d_is/td3d_is_scannet-3d-18class.py

+```

+

+**Testing**

+

+Test pre-trained model using [test](tools/test.py) with `TD3D` [configs](configs/td3d_is). For best quality on Scannet and S3DIS, set `score_thr` to `0.1` and `nms_pre` to `1200` in configs. For best quality on Scannet200, set `score_thr` to `0.07` and `nms_pre` to `300`:

+```shell

+python tools/test.py configs/td3d_is/td3d_is_scannet-3d-18class.py \

+ work_dirs/td3d_is_scannet-3d-18class/latest.pth --eval mAP

+```

+

+**Visualization**

+

+Visualizations can be created with [test](tools/test.py) script.

+For better visualizations, you may set `score_thr` to `0.20` and `nms_pre` to `200` in configs:

+```shell

+python tools/test.py configs/td3d_is/td3d_is_scannet-3d-18class.py \

+ work_dirs/td3d_is_scannet-3d-18class/latest.pth --eval mAP --show \

+ --show-dir work_dirs/td3d_is_scannet-3d-18class

+```

+

+### Models (quality on validation subset)

+

+| Dataset | mAP@0.25 | mAP@0.5 | mAP | Download |

+|:-------:|:--------:|:-------:|:--------:|:--------:|

+| ScanNet | 81.3 | 71.1 | 46.2 | [model]() | [config]() |

+| S3DIS (5 area) | 82.8 | 66.5 | 47.4 | [model]() | [config]() |

+| S3DIS (5 area)

(ScanNet pretrain) | 85.6 | 75.5 | 61.1 | [model]() | [config]() |

+| Scannet200 | 39.7 | 33.3 | 22.2 | [model]() | [config]() |

+

+

+

+

diff --git a/configs/3dssd/3dssd_4x4_kitti-3d-car.py b/configs/3dssd/3dssd_4x4_kitti-3d-car.py

new file mode 100644

index 0000000..bcc8c82

--- /dev/null

+++ b/configs/3dssd/3dssd_4x4_kitti-3d-car.py

@@ -0,0 +1,121 @@

+_base_ = [

+ '../_base_/models/3dssd.py', '../_base_/datasets/kitti-3d-car.py',

+ '../_base_/default_runtime.py'

+]

+

+# dataset settings

+dataset_type = 'KittiDataset'

+data_root = 'data/kitti/'

+class_names = ['Car']

+point_cloud_range = [0, -40, -5, 70, 40, 3]

+input_modality = dict(use_lidar=True, use_camera=False)

+db_sampler = dict(

+ data_root=data_root,

+ info_path=data_root + 'kitti_dbinfos_train.pkl',

+ rate=1.0,

+ prepare=dict(filter_by_difficulty=[-1], filter_by_min_points=dict(Car=5)),

+ classes=class_names,

+ sample_groups=dict(Car=15))

+

+file_client_args = dict(backend='disk')

+# Uncomment the following if use ceph or other file clients.

+# See https://mmcv.readthedocs.io/en/latest/api.html#mmcv.fileio.FileClient

+# for more details.

+# file_client_args = dict(

+# backend='petrel', path_mapping=dict(data='s3://kitti_data/'))

+

+train_pipeline = [

+ dict(

+ type='LoadPointsFromFile',

+ coord_type='LIDAR',

+ load_dim=4,

+ use_dim=4,

+ file_client_args=file_client_args),

+ dict(

+ type='LoadAnnotations3D',

+ with_bbox_3d=True,

+ with_label_3d=True,

+ file_client_args=file_client_args),

+ dict(type='PointsRangeFilter', point_cloud_range=point_cloud_range),

+ dict(type='ObjectRangeFilter', point_cloud_range=point_cloud_range),

+ dict(type='ObjectSample', db_sampler=db_sampler),

+ dict(type='RandomFlip3D', flip_ratio_bev_horizontal=0.5),

+ dict(

+ type='ObjectNoise',

+ num_try=100,

+ translation_std=[1.0, 1.0, 0],

+ global_rot_range=[0.0, 0.0],

+ rot_range=[-1.0471975511965976, 1.0471975511965976]),

+ dict(

+ type='GlobalRotScaleTrans',

+ rot_range=[-0.78539816, 0.78539816],

+ scale_ratio_range=[0.9, 1.1]),

+ # 3DSSD can get a higher performance without this transform

+ # dict(type='BackgroundPointsFilter', bbox_enlarge_range=(0.5, 2.0, 0.5)),

+ dict(type='PointSample', num_points=16384),

+ dict(type='DefaultFormatBundle3D', class_names=class_names),

+ dict(type='Collect3D', keys=['points', 'gt_bboxes_3d', 'gt_labels_3d'])

+]

+

+test_pipeline = [

+ dict(

+ type='LoadPointsFromFile',

+ coord_type='LIDAR',

+ load_dim=4,

+ use_dim=4,

+ file_client_args=file_client_args),

+ dict(

+ type='MultiScaleFlipAug3D',

+ img_scale=(1333, 800),

+ pts_scale_ratio=1,

+ flip=False,

+ transforms=[

+ dict(

+ type='GlobalRotScaleTrans',

+ rot_range=[0, 0],

+ scale_ratio_range=[1., 1.],

+ translation_std=[0, 0, 0]),

+ dict(type='RandomFlip3D'),

+ dict(

+ type='PointsRangeFilter', point_cloud_range=point_cloud_range),

+ dict(type='PointSample', num_points=16384),

+ dict(

+ type='DefaultFormatBundle3D',

+ class_names=class_names,

+ with_label=False),

+ dict(type='Collect3D', keys=['points'])

+ ])

+]

+

+data = dict(

+ samples_per_gpu=4,

+ workers_per_gpu=4,

+ train=dict(dataset=dict(pipeline=train_pipeline)),

+ val=dict(pipeline=test_pipeline),

+ test=dict(pipeline=test_pipeline))

+

+evaluation = dict(interval=2)

+

+# model settings

+model = dict(

+ bbox_head=dict(

+ num_classes=1,

+ bbox_coder=dict(

+ type='AnchorFreeBBoxCoder', num_dir_bins=12, with_rot=True)))

+

+# optimizer

+lr = 0.002 # max learning rate

+optimizer = dict(type='AdamW', lr=lr, weight_decay=0)

+optimizer_config = dict(grad_clip=dict(max_norm=35, norm_type=2))

+lr_config = dict(policy='step', warmup=None, step=[45, 60])

+# runtime settings

+runner = dict(type='EpochBasedRunner', max_epochs=80)

+

+# yapf:disable

+log_config = dict(

+ interval=30,

+ hooks=[

+ dict(type='TextLoggerHook'),

+ dict(type='TensorboardLoggerHook')

+ ])

+# yapf:enable

diff --git a/configs/3dssd/README.md b/configs/3dssd/README.md

new file mode 100644

index 0000000..4feb6d7

--- /dev/null

+++ b/configs/3dssd/README.md

@@ -0,0 +1,45 @@

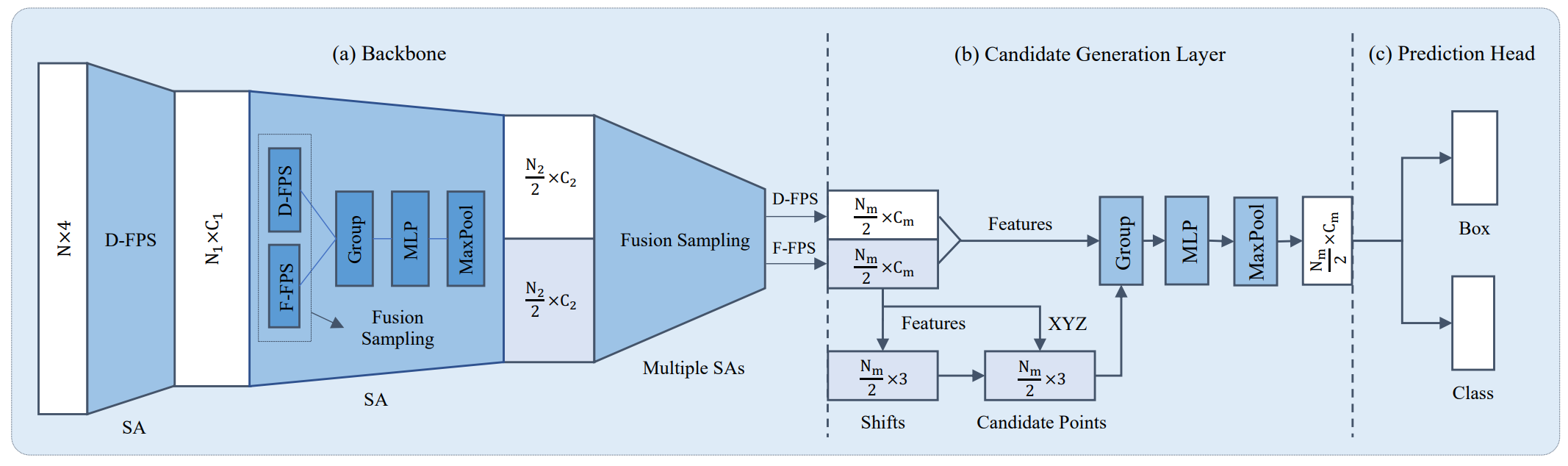

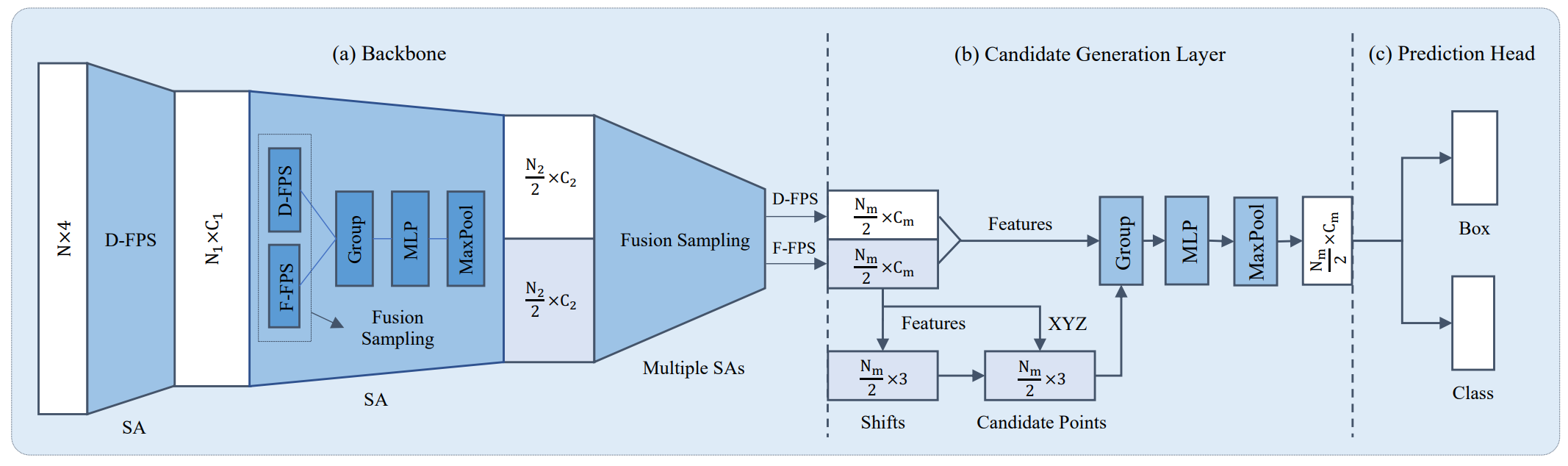

+# 3DSSD: Point-based 3D Single Stage Object Detector

+

+> [3DSSD: Point-based 3D Single Stage Object Detector](https://arxiv.org/abs/2002.10187)

+

+

+

+## Abstract

+

+Currently, there have been many kinds of voxel-based 3D single stage detectors, while point-based single stage methods are still underexplored. In this paper, we first present a lightweight and effective point-based 3D single stage object detector, named 3DSSD, achieving a good balance between accuracy and efficiency. In this paradigm, all upsampling layers and refinement stage, which are indispensable in all existing point-based methods, are abandoned to reduce the large computation cost. We novelly propose a fusion sampling strategy in downsampling process to make detection on less representative points feasible. A delicate box prediction network including a candidate generation layer, an anchor-free regression head with a 3D center-ness assignment strategy is designed to meet with our demand of accuracy and speed. Our paradigm is an elegant single stage anchor-free framework, showing great superiority to other existing methods. We evaluate 3DSSD on widely used KITTI dataset and more challenging nuScenes dataset. Our method outperforms all state-of-the-art voxel-based single stage methods by a large margin, and has comparable performance to two stage point-based methods as well, with inference speed more than 25 FPS, 2x faster than former state-of-the-art point-based methods.

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+ (diff to make it use the same method for benchmarking speed - click to expand)

+

+

+ ```diff

+ diff --git a/tools/train_utils/train_utils.py b/tools/train_utils/train_utils.py

+ index 91f21dd..021359d 100644

+ --- a/tools/train_utils/train_utils.py

+ +++ b/tools/train_utils/train_utils.py

+ @@ -2,6 +2,7 @@ import torch

+ import os

+ import glob

+ import tqdm

+ +import datetime

+ from torch.nn.utils import clip_grad_norm_

+

+

+ @@ -13,7 +14,10 @@ def train_one_epoch(model, optimizer, train_loader, model_func, lr_scheduler, ac

+ if rank == 0:

+ pbar = tqdm.tqdm(total=total_it_each_epoch, leave=leave_pbar, desc='train', dynamic_ncols=True)

+

+ + start_time = None

+ for cur_it in range(total_it_each_epoch):

+ + if cur_it > 49 and start_time is None:

+ + start_time = datetime.datetime.now()

+ try:

+ batch = next(dataloader_iter)

+ except StopIteration:

+ @@ -55,9 +59,11 @@ def train_one_epoch(model, optimizer, train_loader, model_func, lr_scheduler, ac

+ tb_log.add_scalar('learning_rate', cur_lr, accumulated_iter)

+ for key, val in tb_dict.items():

+ tb_log.add_scalar('train_' + key, val, accumulated_iter)

+ + endtime = datetime.datetime.now()

+ + speed = (endtime - start_time).seconds / (total_it_each_epoch - 50)

+ if rank == 0:

+ pbar.close()

+ - return accumulated_iter

+ + return accumulated_iter, speed

+

+

+ def train_model(model, optimizer, train_loader, model_func, lr_scheduler, optim_cfg,

+ @@ -65,6 +71,7 @@ def train_model(model, optimizer, train_loader, model_func, lr_scheduler, optim_

+ lr_warmup_scheduler=None, ckpt_save_interval=1, max_ckpt_save_num=50,

+ merge_all_iters_to_one_epoch=False):

+ accumulated_iter = start_iter

+ + speeds = []

+ with tqdm.trange(start_epoch, total_epochs, desc='epochs', dynamic_ncols=True, leave=(rank == 0)) as tbar:

+ total_it_each_epoch = len(train_loader)

+ if merge_all_iters_to_one_epoch:

+ @@ -82,7 +89,7 @@ def train_model(model, optimizer, train_loader, model_func, lr_scheduler, optim_

+ cur_scheduler = lr_warmup_scheduler

+ else:

+ cur_scheduler = lr_scheduler

+ - accumulated_iter = train_one_epoch(

+ + accumulated_iter, speed = train_one_epoch(

+ model, optimizer, train_loader, model_func,

+ lr_scheduler=cur_scheduler,

+ accumulated_iter=accumulated_iter, optim_cfg=optim_cfg,

+ @@ -91,7 +98,7 @@ def train_model(model, optimizer, train_loader, model_func, lr_scheduler, optim_

+ total_it_each_epoch=total_it_each_epoch,

+ dataloader_iter=dataloader_iter

+ )

+ -

+ + speeds.append(speed)

+ # save trained model

+ trained_epoch = cur_epoch + 1

+ if trained_epoch % ckpt_save_interval == 0 and rank == 0:

+ @@ -107,6 +114,8 @@ def train_model(model, optimizer, train_loader, model_func, lr_scheduler, optim_

+ save_checkpoint(

+ checkpoint_state(model, optimizer, trained_epoch, accumulated_iter), filename=ckpt_name,

+ )

+ + print(speed)

+ + print(f'*******{sum(speeds) / len(speeds)}******')

+

+

+ def model_state_to_cpu(model_state):

+ ```

+

+

+

+### VoteNet

+

+- __MMDetection3D__: With release v0.1.0, run

+

+ ```bash

+ ./tools/dist_train.sh configs/votenet/votenet_16x8_sunrgbd-3d-10class.py 8 --no-validate

+ ```

+

+- __votenet__: At commit [2f6d6d3](https://github.com/facebookresearch/votenet/tree/2f6d6d36ff98d96901182e935afe48ccee82d566), run

+

+ ```bash

+ python train.py --dataset sunrgbd --batch_size 16

+ ```

+

+ Then benchmark the test speed by running

+

+ ```bash

+ python eval.py --dataset sunrgbd --checkpoint_path log_sunrgbd/checkpoint.tar --batch_size 1 --dump_dir eval_sunrgbd --cluster_sampling seed_fps --use_3d_nms --use_cls_nms --per_class_proposal

+ ```

+

+ Note that eval.py is modified to compute inference time.

+

+

+

+ (diff to benchmark the similar models - click to expand)

+

+

+ ```diff

+ diff --git a/eval.py b/eval.py

+ index c0b2886..04921e9 100644

+ --- a/eval.py

+ +++ b/eval.py

+ @@ -10,6 +10,7 @@ import os

+ import sys

+ import numpy as np

+ from datetime import datetime

+ +import time

+ import argparse

+ import importlib

+ import torch

+ @@ -28,7 +29,7 @@ parser.add_argument('--checkpoint_path', default=None, help='Model checkpoint pa

+ parser.add_argument('--dump_dir', default=None, help='Dump dir to save sample outputs [default: None]')

+ parser.add_argument('--num_point', type=int, default=20000, help='Point Number [default: 20000]')

+ parser.add_argument('--num_target', type=int, default=256, help='Point Number [default: 256]')

+ -parser.add_argument('--batch_size', type=int, default=8, help='Batch Size during training [default: 8]')

+ +parser.add_argument('--batch_size', type=int, default=1, help='Batch Size during training [default: 8]')

+ parser.add_argument('--vote_factor', type=int, default=1, help='Number of votes generated from each seed [default: 1]')

+ parser.add_argument('--cluster_sampling', default='vote_fps', help='Sampling strategy for vote clusters: vote_fps, seed_fps, random [default: vote_fps]')

+ parser.add_argument('--ap_iou_thresholds', default='0.25,0.5', help='A list of AP IoU thresholds [default: 0.25,0.5]')

+ @@ -132,6 +133,7 @@ CONFIG_DICT = {'remove_empty_box': (not FLAGS.faster_eval), 'use_3d_nms': FLAGS.

+ # ------------------------------------------------------------------------- GLOBAL CONFIG END

+

+ def evaluate_one_epoch():

+ + time_list = list()

+ stat_dict = {}

+ ap_calculator_list = [APCalculator(iou_thresh, DATASET_CONFIG.class2type) \

+ for iou_thresh in AP_IOU_THRESHOLDS]

+ @@ -144,6 +146,8 @@ def evaluate_one_epoch():

+

+ # Forward pass

+ inputs = {'point_clouds': batch_data_label['point_clouds']}

+ + torch.cuda.synchronize()

+ + start_time = time.perf_counter()

+ with torch.no_grad():

+ end_points = net(inputs)

+

+ @@ -161,6 +165,12 @@ def evaluate_one_epoch():

+

+ batch_pred_map_cls = parse_predictions(end_points, CONFIG_DICT)

+ batch_gt_map_cls = parse_groundtruths(end_points, CONFIG_DICT)

+ + torch.cuda.synchronize()

+ + elapsed = time.perf_counter() - start_time

+ + time_list.append(elapsed)

+ +

+ + if len(time_list==200):

+ + print("average inference time: %4f"%(sum(time_list[5:])/len(time_list[5:])))

+ for ap_calculator in ap_calculator_list:

+ ap_calculator.step(batch_pred_map_cls, batch_gt_map_cls)

+

+ ```

+

+### PointPillars-car

+

+- __MMDetection3D__: With release v0.1.0, run

+

+ ```bash

+ ./tools/dist_train.sh configs/benchmark/hv_pointpillars_secfpn_3x8_100e_det3d_kitti-3d-car.py 8 --no-validate

+ ```

+

+- __Det3D__: At commit [519251e](https://github.com/poodarchu/Det3D/tree/519251e72a5c1fdd58972eabeac67808676b9bb7), use `kitti_point_pillars_mghead_syncbn.py` and run

+

+ ```bash

+ ./tools/scripts/train.sh --launcher=slurm --gpus=8

+ ```

+

+ Note that the config in train.sh is modified to train point pillars.

+

+

+

+ (diff to benchmark the similar models - click to expand)

+

+

+ ```diff

+ diff --git a/tools/scripts/train.sh b/tools/scripts/train.sh

+ index 3a93f95..461e0ea 100755

+ --- a/tools/scripts/train.sh

+ +++ b/tools/scripts/train.sh

+ @@ -16,9 +16,9 @@ then

+ fi

+

+ # Voxelnet

+ -python -m torch.distributed.launch --nproc_per_node=8 ./tools/train.py examples/second/configs/ kitti_car_vfev3_spmiddlefhd_rpn1_mghead_syncbn.py --work_dir=$SECOND_WORK_DIR

+ +# python -m torch.distributed.launch --nproc_per_node=8 ./tools/train.py examples/second/configs/ kitti_car_vfev3_spmiddlefhd_rpn1_mghead_syncbn.py --work_dir=$SECOND_WORK_DIR

+ # python -m torch.distributed.launch --nproc_per_node=8 ./tools/train.py examples/cbgs/configs/ nusc_all_vfev3_spmiddleresnetfhd_rpn2_mghead_syncbn.py --work_dir=$NUSC_CBGS_WORK_DIR

+ # python -m torch.distributed.launch --nproc_per_node=8 ./tools/train.py examples/second/configs/ lyft_all_vfev3_spmiddleresnetfhd_rpn2_mghead_syncbn.py --work_dir=$LYFT_CBGS_WORK_DIR

+

+ # PointPillars

+ -# python -m torch.distributed.launch --nproc_per_node=8 ./tools/train.py ./examples/point_pillars/configs/ original_pp_mghead_syncbn_kitti.py --work_dir=$PP_WORK_DIR

+ +python -m torch.distributed.launch --nproc_per_node=8 ./tools/train.py ./examples/point_pillars/configs/ kitti_point_pillars_mghead_syncbn.py

+ ```

+

+

+

+### PointPillars-3class

+

+- __MMDetection3D__: With release v0.1.0, run

+

+ ```bash

+ ./tools/dist_train.sh configs/benchmark/hv_pointpillars_secfpn_4x8_80e_pcdet_kitti-3d-3class.py 8 --no-validate

+ ```

+

+- __OpenPCDet__: At commit [b32fbddb](https://github.com/open-mmlab/OpenPCDet/tree/b32fbddbe06183507bad433ed99b407cbc2175c2), run

+

+ ```bash

+ cd tools

+ sh scripts/slurm_train.sh ${PARTITION} ${JOB_NAME} 8 --cfg_file ./cfgs/kitti_models/pointpillar.yaml --batch_size 32 --workers 32 --epochs 80

+ ```

+

+### SECOND

+

+For SECOND, we mean the [SECONDv1.5](https://github.com/traveller59/second.pytorch/blob/master/second/configs/all.fhd.config) that was first implemented in [second.Pytorch](https://github.com/traveller59/second.pytorch). Det3D's implementation of SECOND uses its self-implemented Multi-Group Head, so its speed is not compatible with other codebases.

+

+- __MMDetection3D__: With release v0.1.0, run

+

+ ```bash

+ ./tools/dist_train.sh configs/benchmark/hv_second_secfpn_4x8_80e_pcdet_kitti-3d-3class.py 8 --no-validate

+ ```

+

+- __OpenPCDet__: At commit [b32fbddb](https://github.com/open-mmlab/OpenPCDet/tree/b32fbddbe06183507bad433ed99b407cbc2175c2), run

+

+ ```bash

+ cd tools

+ sh ./scripts/slurm_train.sh ${PARTITION} ${JOB_NAME} 8 --cfg_file ./cfgs/kitti_models/second.yaml --batch_size 32 --workers 32 --epochs 80

+ ```

+

+### Part-A2

+

+- __MMDetection3D__: With release v0.1.0, run

+

+ ```bash

+ ./tools/dist_train.sh configs/benchmark/hv_PartA2_secfpn_4x8_cyclic_80e_pcdet_kitti-3d-3class.py 8 --no-validate

+ ```

+

+- __OpenPCDet__: At commit [b32fbddb](https://github.com/open-mmlab/OpenPCDet/tree/b32fbddbe06183507bad433ed99b407cbc2175c2), train the model by running

+

+ ```bash

+ cd tools

+ sh ./scripts/slurm_train.sh ${PARTITION} ${JOB_NAME} 8 --cfg_file ./cfgs/kitti_models/PartA2.yaml --batch_size 32 --workers 32 --epochs 80

+ ```

diff --git a/docs/en/changelog.md b/docs/en/changelog.md

new file mode 100644

index 0000000..748aa94

--- /dev/null

+++ b/docs/en/changelog.md

@@ -0,0 +1,822 @@

+## Changelog

+

+### v1.0.0rc3 (8/6/2022)

+

+#### Highlights

+

+- Support [SA-SSD](https://openaccess.thecvf.com/content_CVPR_2020/papers/He_Structure_Aware_Single-Stage_3D_Object_Detection_From_Point_Cloud_CVPR_2020_paper.pdf)

+

+#### New Features

+

+- Support [SA-SSD](https://openaccess.thecvf.com/content_CVPR_2020/papers/He_Structure_Aware_Single-Stage_3D_Object_Detection_From_Point_Cloud_CVPR_2020_paper.pdf) (#1337)

+

+#### Improvements

+

+- Add Chinese documentation for vision-only 3D detection (#1438)

+- Update CenterPoint pretrained models that are compatible with refactored coordinate systems (#1450)

+- Configure myst-parser to parse anchor tag in the documentation (#1488)

+- Replace markdownlint with mdformat for avoiding installing ruby (#1489)

+- Add missing `gt_names` when getting annotation info in Custom3DDataset (#1519)

+- Support S3DIS full ceph training (#1542)

+- Rewrite the installation and FAQ documentation (#1545)

+

+#### Bug Fixes

+

+- Fix the incorrect registry name when building RoI extractors (#1460)

+- Fix the potential problems caused by the registry scope update when composing pipelines (#1466) and using CocoDataset (#1536)

+- Fix the missing selection with `order` in the [box3d_nms](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/core/post_processing/box3d_nms.py) introduced by [#1403](https://github.com/open-mmlab/mmdetection3d/pull/1403) (#1479)

+- Update the [PointPillars config](https://github.com/open-mmlab/mmdetection3d/blob/master/configs/pointpillars/hv_pointpillars_secfpn_6x8_160e_kitti-3d-car.py) to make it consistent with the log (#1486)

+- Fix heading anchor in documentation (#1490)

+- Fix the compatibility of mmcv in the dockerfile (#1508)

+- Make overwrite_spconv packaged when building whl (#1516)

+- Fix the requirement of mmcv and mmdet (#1537)

+- Update configs of PartA2 and support its compatibility with spconv 2.0 (#1538)

+

+#### Contributors

+

+A total of 13 developers contributed to this release.

+

+@Xiangxu-0103, @ZCMax, @jshilong, @filaPro, @atinfinity, @Tai-Wang, @wenbo-yu, @yi-chen-isuzu, @ZwwWayne, @wchen61, @VVsssssk, @AlexPasqua, @lianqing11

+

+### v1.0.0rc2 (1/5/2022)

+

+#### Highlights

+

+- Support spconv 2.0

+- Support MinkowskiEngine with MinkResNet

+- Support training models on custom datasets with only point clouds

+- Update Registry to distinguish the scope of built functions

+- Replace mmcv.iou3d with a set of bird-eye-view (BEV) operators to unify the operations of rotated boxes

+

+#### New Features

+

+- Add loader arguments in the configuration files (#1388)

+- Support [spconv 2.0](https://github.com/traveller59/spconv) when the package is installed. Users can still use spconv 1.x in MMCV with CUDA 9.0 (only cost more memory) without losing the compatibility of model weights between two versions (#1421)

+- Support MinkowskiEngine with MinkResNet (#1422)

+

+#### Improvements

+

+- Add the documentation for model deployment (#1373, #1436)

+- Add Chinese documentation of

+ - Speed benchmark (#1379)

+ - LiDAR-based 3D detection (#1368)

+ - LiDAR 3D segmentation (#1420)

+ - Coordinate system refactoring (#1384)

+- Support training models on custom datasets with only point clouds (#1393)

+- Replace mmcv.iou3d with a set of bird-eye-view (BEV) operators to unify the operations of rotated boxes (#1403, #1418)

+- Update Registry to distinguish the scope of building functions (#1412, #1443)

+- Replace recommonmark with myst_parser for documentation rendering (#1414)

+

+#### Bug Fixes

+

+- Fix the show pipeline in the [browse_dataset.py](https://github.com/open-mmlab/mmdetection3d/blob/master/tools/misc/browse_dataset.py) (#1376)

+- Fix missing __init__ files after coordinate system refactoring (#1383)

+- Fix the incorrect yaw in the visualization caused by coordinate system refactoring (#1407)

+- Fix `NaiveSyncBatchNorm1d` and `NaiveSyncBatchNorm2d` to support non-distributed cases and more general inputs (#1435)

+

+#### Contributors

+

+A total of 11 developers contributed to this release.

+

+@ZCMax, @ZwwWayne, @Tai-Wang, @VVsssssk, @HanaRo, @JoeyforJoy, @ansonlcy, @filaPro, @jshilong, @Xiangxu-0103, @deleomike

+

+### v1.0.0rc1 (1/4/2022)

+

+#### Compatibility

+

+- We migrate all the mmdet3d ops to mmcv and do not need to compile them when installing mmdet3d.

+- To fix the imprecise timestamp and optimize its saving method, we reformat the point cloud data during Waymo data conversion. The data conversion time is also optimized significantly by supporting parallel processing. Please re-generate KITTI format Waymo data if necessary. See more details in the [compatibility documentation](https://github.com/open-mmlab/mmdetection3d/blob/master/docs/en/compatibility.md).

+- We update some of the model checkpoints after the refactor of coordinate systems. Please stay tuned for the release of the remaining model checkpoints.

+

+| | Fully Updated | Partially Updated | In Progress | No Influcence |

+| ------------- | :-----------: | :---------------: | :---------: | :-----------: |

+| SECOND | | ✓ | | |

+| PointPillars | | ✓ | | |

+| FreeAnchor | ✓ | | | |

+| VoteNet | ✓ | | | |

+| H3DNet | ✓ | | | |

+| 3DSSD | | ✓ | | |

+| Part-A2 | ✓ | | | |

+| MVXNet | ✓ | | | |

+| CenterPoint | | | ✓ | |

+| SSN | ✓ | | | |

+| ImVoteNet | ✓ | | | |

+| FCOS3D | | | | ✓ |

+| PointNet++ | | | | ✓ |

+| Group-Free-3D | | | | ✓ |

+| ImVoxelNet | ✓ | | | |

+| PAConv | | | | ✓ |

+| DGCNN | | | | ✓ |

+| SMOKE | | | | ✓ |

+| PGD | | | | ✓ |

+| MonoFlex | | | | ✓ |

+

+#### Highlights

+

+- Migrate all the mmdet3d ops to mmcv

+- Support parallel waymo data converter

+- Add ScanNet instance segmentation dataset with metrics

+- Better compatibility for windows with CI support, op migration and bug fixes

+- Support loading annotations from Ceph

+

+#### New Features

+

+- Add ScanNet instance segmentation dataset with metrics (#1230)

+- Support different random seeds for different ranks (#1321)

+- Support loading annotations from Ceph (#1325)

+- Support resuming from the latest checkpoint automatically (#1329)

+- Add windows CI (#1345)

+

+#### Improvements

+

+- Update the table format and OpenMMLab project orders in [README.md](https://github.com/open-mmlab/mmdetection3d/blob/master/README.md) (#1272, #1283)

+- Migrate all the mmdet3d ops to mmcv (#1240, #1286, #1290, #1333)

+- Add `with_plane` flag in the KITTI data conversion (#1278)

+- Update instructions and links in the documentation (#1300, 1309, #1319)

+- Support parallel Waymo dataset converter and ground truth database generator (#1327)

+- Add quick installation commands to [getting_started.md](https://github.com/open-mmlab/mmdetection3d/blob/master/docs/en/getting_started.md) (#1366)

+

+#### Bug Fixes

+

+- Update nuimages configs to use new nms config style (#1258)

+- Fix the usage of np.long for windows compatibility (#1270)

+- Fix the incorrect indexing in `BasePoints` (#1274)

+- Fix the incorrect indexing in the [pillar_scatter.forward_single](https://github.com/open-mmlab/mmdetection3d/blob/dev/mmdet3d/models/middle_encoders/pillar_scatter.py#L38) (#1280)

+- Fix unit tests that use GPUs (#1301)

+- Fix incorrect feature dimensions in `DynamicPillarFeatureNet` caused by previous upgrading of `PillarFeatureNet` (#1302)

+- Remove the `CameraPoints` constraint in `PointSample` (#1314)

+- Fix imprecise timestamps saving of Waymo dataset (#1327)

+

+#### Contributors

+

+A total of 9 developers contributed to this release.

+

+@ZCMax, @ZwwWayne, @wHao-Wu, @Tai-Wang, @wangruohui, @zjwzcx, @Xiangxu-0103, @EdAyers, @hongye-dev, @zhanggefan

+

+### v1.0.0rc0 (18/2/2022)

+

+#### Compatibility

+

+- We refactor our three coordinate systems to make their rotation directions and origins more consistent, and further remove unnecessary hacks in different datasets and models. Therefore, please re-generate data infos or convert the old version to the new one with our provided scripts. We will also provide updated checkpoints in the next version. Please refer to the [compatibility documentation](https://github.com/open-mmlab/mmdetection3d/blob/v1.0.0.dev0/docs/en/compatibility.md) for more details.

+- Unify the camera keys for consistent transformation between coordinate systems on different datasets. The modification changes the key names to `lidar2img`, `depth2img`, `cam2img`, etc., for easier understanding. Customized codes using legacy keys may be influenced.

+- The next release will begin to move files of CUDA ops to [MMCV](https://github.com/open-mmlab/mmcv). It will influence the way to import related functions. We will not break the compatibility but will raise a warning first and please prepare to migrate it.

+

+#### Highlights

+

+- Support new monocular 3D detectors: [PGD](https://github.com/open-mmlab/mmdetection3d/tree/v1.0.0.dev0/configs/pgd), [SMOKE](https://github.com/open-mmlab/mmdetection3d/tree/v1.0.0.dev0/configs/smoke), [MonoFlex](https://github.com/open-mmlab/mmdetection3d/tree/v1.0.0.dev0/configs/monoflex)

+- Support a new LiDAR-based detector: [PointRCNN](https://github.com/open-mmlab/mmdetection3d/tree/v1.0.0.dev0/configs/point_rcnn)

+- Support a new backbone: [DGCNN](https://github.com/open-mmlab/mmdetection3d/tree/v1.0.0.dev0/configs/dgcnn)

+- Support 3D object detection on the S3DIS dataset

+- Support compilation on Windows

+- Full benchmark for PAConv on S3DIS

+- Further enhancement for documentation, especially on the Chinese documentation

+

+#### New Features

+

+- Support 3D object detection on the S3DIS dataset (#835)

+- Support PointRCNN (#842, #843, #856, #974, #1022, #1109, #1125)

+- Support DGCNN (#896)

+- Support PGD (#938, #940, #948, #950, #964, #1014, #1065, #1070, #1157)

+- Support SMOKE (#939, #955, #959, #975, #988, #999, #1029)

+- Support MonoFlex (#1026, #1044, #1114, #1115, #1183)

+- Support CPU Training (#1196)

+

+#### Improvements

+

+- Support point sampling based on distance metric (#667, #840)

+- Refactor coordinate systems (#677, #774, #803, #899, #906, #912, #968, #1001)

+- Unify camera keys in PointFusion and transformations between different systems (#791, #805)

+- Refine documentation (#792, #827, #829, #836, #849, #854, #859, #1111, #1113, #1116, #1121, #1132, #1135, #1185, #1193, #1226)

+- Add a script to support benchmark regression (#808)

+- Benchmark PAConvCUDA on S3DIS (#847)

+- Support to download pdf and epub documentation (#850)

+- Change the `repeat` setting in Group-Free-3D configs to reduce training epochs (#855)

+- Support KITTI AP40 evaluation metric (#927)

+- Add the mmdet3d2torchserve tool for SECOND (#977)

+- Add code-spell pre-commit hook and fix typos (#995)

+- Support the latest numba version (#1043)

+- Set a default seed to use when the random seed is not specified (#1072)

+- Distribute mix-precision models to each algorithm folder (#1074)

+- Add abstract and a representative figure for each algorithm (#1086)

+- Upgrade pre-commit hook (#1088, #1217)

+- Support augmented data and ground truth visualization (#1092)

+- Add local yaw property for `CameraInstance3DBoxes` (#1130)

+- Lock the required numba version to 0.53.0 (#1159)

+- Support the usage of plane information for KITTI dataset (#1162)

+- Deprecate the support for "python setup.py test" (#1164)

+- Reduce the number of multi-process threads to accelerate training (#1168)

+- Support 3D flip augmentation for semantic segmentation (#1181)

+- Update README format for each model (#1195)

+

+#### Bug Fixes

+

+- Fix compiling errors on Windows (#766)

+- Fix the deprecated nms setting in the ImVoteNet config (#828)

+- Use the latest `wrap_fp16_model` import from mmcv (#861)

+- Remove 2D annotations generation on Lyft (#867)

+- Update index files for the Chinese documentation to be consistent with the English version (#873)

+- Fix the nested list transpose in the CenterPoint head (#879)

+- Fix deprecated pretrained model loading for RegNet (#889)

+- Fix the incorrect dimension indices of rotations and testing config in the CenterPoint test time augmentation (#892)

+- Fix and improve visualization tools (#956, #1066, #1073)

+- Fix PointPillars FLOPs calculation error (#1075)

+- Fix missing dimension information in the SUN RGB-D data generation (#1120)

+- Fix incorrect anchor range settings in the PointPillars [config](https://github.com/open-mmlab/mmdetection3d/blob/master/configs/_base_/models/hv_pointpillars_secfpn_kitti.py) for KITTI (#1163)

+- Fix incorrect model information in the RegNet metafile (#1184)

+- Fix bugs in non-distributed multi-gpu training and testing (#1197)

+- Fix a potential assertion error when generating corners from an empty box (#1212)

+- Upgrade bazel version according to the requirement of Waymo Devkit (#1223)

+

+#### Contributors

+

+A total of 12 developers contributed to this release.

+

+@THU17cyz, @wHao-Wu, @wangruohui, @Wuziyi616, @filaPro, @ZwwWayne, @Tai-Wang, @DCNSW, @xieenze, @robin-karlsson0, @ZCMax, @Otteri

+

+### v0.18.1 (1/2/2022)

+

+#### Improvements

+

+- Support Flip3D augmentation in semantic segmentation task (#1182)

+- Update regnet metafile (#1184)

+- Add point cloud annotation tools introduction in FAQ (#1185)

+- Add missing explanations of `cam_intrinsic` in the nuScenes dataset doc (#1193)

+

+#### Bug Fixes

+

+- Deprecate the support for "python setup.py test" (#1164)

+- Fix the rotation matrix while rotation axis=0 (#1182)

+- Fix the bug in non-distributed multi-gpu training/testing (#1197)

+- Fix a potential bug when generating corners for empty bounding boxes (#1212)

+

+#### Contributors

+

+A total of 4 developers contributed to this release.

+

+@ZwwWayne, @ZCMax, @Tai-Wang, @wHao-Wu

+

+### v0.18.0 (1/1/2022)

+

+#### Highlights

+

+- Update the required minimum version of mmdet and mmseg

+

+#### Improvements

+

+- Use the official markdownlint hook and add codespell hook for pre-committing (#1088)

+- Improve CI operation (#1095, #1102, #1103)

+- Use shared menu content from OpenMMLab's theme and remove duplicated contents from config (#1111)

+- Refactor the structure of documentation (#1113, #1121)

+- Update the required minimum version of mmdet and mmseg (#1147)

+

+#### Bug Fixes

+

+- Fix symlink failure on Windows (#1096)

+- Fix the upper bound of mmcv version in the mminstall requirements (#1104)

+- Fix API documentation compilation and mmcv build errors (#1116)

+- Fix figure links and pdf documentation compilation (#1132, #1135)

+

+#### Contributors

+

+A total of 4 developers contributed to this release.

+

+@ZwwWayne, @ZCMax, @Tai-Wang, @wHao-Wu

+

+### v0.17.3 (1/12/2021)

+

+#### Improvements

+

+- Change the default show value to `False` in show_result function to avoid unnecessary errors (#1034)

+- Improve the visualization of detection results with colorized points in [single_gpu_test](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/apis/test.py#L11) (#1050)

+- Clean unnecessary custom_imports in entrypoints (#1068)

+

+#### Bug Fixes

+

+- Update mmcv version in the Dockerfile (#1036)

+- Fix the memory-leak problem when loading checkpoints in [init_model](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/apis/inference.py#L36) (#1045)

+- Fix incorrect velocity indexing when formatting boxes on nuScenes (#1049)

+- Explicitly set cuda device ID in [init_model](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/apis/inference.py#L36) to avoid memory allocation on unexpected devices (#1056)

+- Fix PointPillars FLOPs calculation error (#1076)

+

+#### Contributors

+

+A total of 5 developers contributed to this release.

+

+@wHao-Wu, @Tai-Wang, @ZCMax, @MilkClouds, @aldakata

+

+### v0.17.2 (1/11/2021)

+

+#### Improvements

+

+- Update Group-Free-3D and FCOS3D bibtex (#985)

+- Update the solutions for incompatibility of pycocotools in the FAQ (#993)

+- Add Chinese documentation for the KITTI (#1003) and Lyft (#1010) dataset tutorial

+- Add the H3DNet checkpoint converter for incompatible keys (#1007)

+

+#### Bug Fixes

+

+- Update mmdetection and mmsegmentation version in the Dockerfile (#992)

+- Fix links in the Chinese documentation (#1015)

+

+#### Contributors

+

+A total of 4 developers contributed to this release.

+

+@Tai-Wang, @wHao-Wu, @ZwwWayne, @ZCMax

+

+### v0.17.1 (1/10/2021)

+

+#### Highlights

+

+- Support a faster but non-deterministic version of hard voxelization

+- Completion of dataset tutorials and the Chinese documentation

+- Improved the aesthetics of the documentation format

+

+#### Improvements

+

+- Add Chinese documentation for training on customized datasets and designing customized models (#729, #820)

+- Support a faster but non-deterministic version of hard voxelization (#904)

+- Update paper titles and code details for metafiles (#917)

+- Add a tutorial for KITTI dataset (#953)

+- Use Pytorch sphinx theme to improve the format of documentation (#958)

+- Use the docker to accelerate CI (#971)

+

+#### Bug Fixes

+

+- Fix the sphinx version used in the documentation (#902)

+- Fix a dynamic scatter bug that discards the first voxel by mistake when all input points are valid (#915)

+- Fix the inconsistent variable names used in the [unit test](https://github.com/open-mmlab/mmdetection3d/blob/master/tests/test_models/test_voxel_encoder/test_voxel_generator.py) for voxel generator (#919)

+- Upgrade to use `build_prior_generator` to replace the legacy `build_anchor_generator` (#941)

+- Fix a minor bug caused by a too small difference set in the FreeAnchor Head (#944)

+

+#### Contributors

+

+A total of 8 developers contributed to this release.

+

+@DCNSW, @zhanggefan, @mickeyouyou, @ZCMax, @wHao-Wu, @tojimahammatov, @xiliu8006, @Tai-Wang

+

+### v0.17.0 (1/9/2021)

+

+#### Compatibility

+

+- Unify the camera keys for consistent transformation between coordinate systems on different datasets. The modification change the key names to `lidar2img`, `depth2img`, `cam2img`, etc. for easier understanding. Customized codes using legacy keys may be influenced.

+- The next release will begin to move files of CUDA ops to [MMCV](https://github.com/open-mmlab/mmcv). It will influence the way to import related functions. We will not break the compatibility but will raise a warning first and please prepare to migrate it.

+

+#### Highlights

+

+- Support 3D object detection on the S3DIS dataset

+- Support compilation on Windows

+- Full benchmark for PAConv on S3DIS

+- Further enhancement for documentation, especially on the Chinese documentation

+

+#### New Features

+

+- Support 3D object detection on the S3DIS dataset (#835)

+

+#### Improvements

+

+- Support point sampling based on distance metric (#667, #840)

+- Update PointFusion to support unified camera keys (#791)

+- Add Chinese documentation for customized dataset (#792), data pipeline (#827), customized runtime (#829), 3D Detection on ScanNet (#836), nuScenes (#854) and Waymo (#859)

+- Unify camera keys used in transformation between different systems (#805)

+- Add a script to support benchmark regression (#808)

+- Benchmark PAConvCUDA on S3DIS (#847)

+- Add a tutorial for 3D detection on the Lyft dataset (#849)

+- Support to download pdf and epub documentation (#850)

+- Change the `repeat` setting in Group-Free-3D configs to reduce training epochs (#855)

+

+#### Bug Fixes

+

+- Fix compiling errors on Windows (#766)

+- Fix the deprecated nms setting in the ImVoteNet config (#828)

+- Use the latest `wrap_fp16_model` import from mmcv (#861)

+- Remove 2D annotations generation on Lyft (#867)

+- Update index files for the Chinese documentation to be consistent with the English version (#873)

+- Fix the nested list transpose in the CenterPoint head (#879)

+- Fix deprecated pretrained model loading for RegNet (#889)

+

+#### Contributors

+

+A total of 11 developers contributed to this release.

+

+@THU17cyz, @wHao-Wu, @wangruohui, @Wuziyi616, @filaPro, @ZwwWayne, @Tai-Wang, @DCNSW, @xieenze, @robin-karlsson0, @ZCMax

+

+### v0.16.0 (1/8/2021)

+

+#### Compatibility

+

+- Remove the rotation and dimension hack in the monocular 3D detection on nuScenes by applying corresponding transformation in the pre-processing and post-processing. The modification only influences nuScenes coco-style json files. Please re-run the data preparation scripts if necessary. See more details in the PR #744.

+- Add a new pre-processing module for the ScanNet dataset in order to support multi-view detectors. Please run the updated scripts to extract the RGB data and its annotations. See more details in the PR #696.

+

+#### Highlights

+

+- Support to use [MIM](https://github.com/open-mmlab/mim) with pip installation

+- Support PAConv [models and benchmarks](https://github.com/open-mmlab/mmdetection3d/tree/master/configs/paconv) on S3DIS

+- Enhance the documentation especially on dataset tutorials

+

+#### New Features

+

+- Support RGB images on ScanNet for multi-view detectors (#696)

+- Support FLOPs and number of parameters calculation (#736)

+- Support to use [MIM](https://github.com/open-mmlab/mim) with pip installation (#782)

+- Support PAConv models and benchmarks on the S3DIS dataset (#783, #809)

+

+#### Improvements

+

+- Refactor Group-Free-3D to make it inherit BaseModule from MMCV (#704)

+- Modify the initialization methods of FCOS3D to be consistent with the refactored approach (#705)

+- Benchmark the Group-Free-3D [models](https://github.com/open-mmlab/mmdetection3d/tree/master/configs/groupfree3d) on ScanNet (#710)

+- Add Chinese documentation for Getting Started (#725), FAQ (#730), Model Zoo (#735), Demo (#745), Quick Run (#746), Data Preparation (#787) and Configs (#788)

+- Add documentation for semantic segmentation on ScanNet and S3DIS (#743, #747, #806, #807)

+- Add a parameter `max_keep_ckpts` to limit the maximum number of saved Group-Free-3D checkpoints (#765)

+- Add documentation for 3D detection on SUN RGB-D and nuScenes (#770, #793)

+- Remove mmpycocotools in the Dockerfile (#785)

+

+#### Bug Fixes

+

+- Fix versions of OpenMMLab dependencies (#708)

+- Convert `rt_mat` to `torch.Tensor` in coordinate transformation for compatibility (#709)

+- Fix the `bev_range` initialization in `ObjectRangeFilter` according to the `gt_bboxes_3d` type (#717)

+- Fix Chinese documentation and incorrect doc format due to the incompatible Sphinx version (#718)

+- Fix a potential bug when setting `interval == 1` in [analyze_logs.py](https://github.com/open-mmlab/mmdetection3d/blob/master/tools/analysis_tools/analyze_logs.py) (#720)

+- Update the structure of Chinese documentation (#722)

+- Fix FCOS3D FPN BC-Breaking caused by the code refactoring in MMDetection (#739)

+- Fix wrong `in_channels` when `with_distance=True` in the [Dynamic VFE Layers](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/models/voxel_encoders/voxel_encoder.py#L87) (#749)

+- Fix the dimension and yaw hack of FCOS3D on nuScenes (#744, #794, #795, #818)

+- Fix the missing default `bbox_mode` in the `show_multi_modality_result` (#825)

+

+#### Contributors

+

+A total of 12 developers contributed to this release.

+

+@yinchimaoliang, @gopi231091, @filaPro, @ZwwWayne, @ZCMax, @hjin2902, @wHao-Wu, @Wuziyi616, @xiliu8006, @THU17cyz, @DCNSW, @Tai-Wang

+

+### v0.15.0 (1/7/2021)

+

+#### Compatibility

+

+In order to fix the problem that the priority of EvalHook is too low, all hook priorities have been re-adjusted in 1.3.8, so MMDetection 2.14.0 needs to rely on the latest MMCV 1.3.8 version. For related information, please refer to [#1120](https://github.com/open-mmlab/mmcv/pull/1120), for related issues, please refer to [#5343](https://github.com/open-mmlab/mmdetection/issues/5343).

+

+#### Highlights

+

+- Support [PAConv](https://arxiv.org/abs/2103.14635)

+- Support monocular/multi-view 3D detector [ImVoxelNet](https://arxiv.org/abs/2106.01178) on KITTI

+- Support Transformer-based 3D detection method [Group-Free-3D](https://arxiv.org/abs/2104.00678) on ScanNet

+- Add documentation for tasks including LiDAR-based 3D detection, vision-only 3D detection and point-based 3D semantic segmentation

+- Add dataset documents like ScanNet

+

+#### New Features

+

+- Support Group-Free-3D on ScanNet (#539)

+- Support PAConv modules (#598, #599)

+- Support ImVoxelNet on KITTI (#627, #654)

+

+#### Improvements

+

+- Add unit tests for pipeline functions `LoadImageFromFileMono3D`, `ObjectNameFilter` and `ObjectRangeFilter` (#615)

+- Enhance [IndoorPatchPointSample](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/datasets/pipelines/transforms_3d.py) (#617)

+- Refactor model initialization methods based MMCV (#622)

+- Add Chinese docs (#629)

+- Add documentation for LiDAR-based 3D detection (#642)

+- Unify intrinsic and extrinsic matrices for all datasets (#653)

+- Add documentation for point-based 3D semantic segmentation (#663)

+- Add documentation of ScanNet for 3D detection (#664)

+- Refine docs for tutorials (#666)

+- Add documentation for vision-only 3D detection (#669)

+- Refine docs for Quick Run and Useful Tools (#686)

+

+#### Bug Fixes

+

+- Fix the bug of [BackgroundPointsFilter](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/datasets/pipelines/transforms_3d.py) using the bottom center of ground truth (#609)

+- Fix [LoadMultiViewImageFromFiles](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/datasets/pipelines/loading.py) to unravel stacked multi-view images to list to be consistent with DefaultFormatBundle (#611)

+- Fix the potential bug in [analyze_logs](https://github.com/open-mmlab/mmdetection3d/blob/master/tools/analysis_tools/analyze_logs.py) when the training resumes from a checkpoint or is stopped before evaluation (#634)

+- Fix test commands in docs and make some refinements (#635)

+- Fix wrong config paths in unit tests (#641)

+

+### v0.14.0 (1/6/2021)

+

+#### Highlights

+

+- Support the point cloud segmentation method [PointNet++](https://arxiv.org/abs/1706.02413)

+

+#### New Features

+

+- Support PointNet++ (#479, #528, #532, #541)

+- Support RandomJitterPoints transform for point cloud segmentation (#584)

+- Support RandomDropPointsColor transform for point cloud segmentation (#585)

+

+#### Improvements

+

+- Move the point alignment of ScanNet from data pre-processing to pipeline (#439, #470)

+- Add compatibility document to provide detailed descriptions of BC-breaking changes (#504)

+- Add MMSegmentation installation requirement (#535)

+- Support points rotation even without bounding box in GlobalRotScaleTrans for point cloud segmentaiton (#540)

+- Support visualization of detection results and dataset browse for nuScenes Mono-3D dataset (#542, #582)

+- Support faster implementation of KNN (#586)

+- Support RegNetX models on Lyft dataset (#589)

+- Remove a useless parameter `label_weight` from segmentation datasets including `Custom3DSegDataset`, `ScanNetSegDataset` and `S3DISSegDataset` (#607)

+

+#### Bug Fixes

+

+- Fix a corrupted lidar data file in Lyft dataset in [data_preparation](https://github.com/open-mmlab/mmdetection3d/tree/master/docs/data_preparation.md) (#546)

+- Fix evaluation bugs in nuScenes and Lyft dataset (#549)

+- Fix converting points between coordinates with specific transformation matrix in the [coord_3d_mode.py](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/core/bbox/structures/coord_3d_mode.py) (#556)

+- Support PointPillars models on Lyft dataset (#578)

+- Fix the bug of demo with pre-trained VoteNet model on ScanNet (#600)

+

+### v0.13.0 (1/5/2021)

+

+#### Highlights

+

+- Support a monocular 3D detection method [FCOS3D](https://arxiv.org/abs/2104.10956)

+- Support ScanNet and S3DIS semantic segmentation dataset

+- Enhancement of visualization tools for dataset browsing and demos, including support of visualization for multi-modality data and point cloud segmentation.

+

+#### New Features

+

+- Support ScanNet semantic segmentation dataset (#390)

+- Support monocular 3D detection on nuScenes (#392)

+- Support multi-modality visualization (#405)

+- Support nuimages visualization (#408)

+- Support monocular 3D detection on KITTI (#415)

+- Support online visualization of semantic segmentation results (#416)

+- Support ScanNet test results submission to online benchmark (#418)

+- Support S3DIS data pre-processing and dataset class (#433)

+- Support FCOS3D (#436, #442, #482, #484)

+- Support dataset browse for multiple types of datasets (#467)

+- Adding paper-with-code (PWC) metafile for each model in the model zoo (#485)

+

+#### Improvements

+

+- Support dataset browsing for SUNRGBD, ScanNet or KITTI points and detection results (#367)

+- Add the pipeline to load data using file client (#430)

+- Support to customize the type of runner (#437)

+- Make pipeline functions process points and masks simultaneously when sampling points (#444)

+- Add waymo unit tests (#455)

+- Split the visualization of projecting points onto image from that for only points (#480)

+- Efficient implementation of PointSegClassMapping (#489)

+- Use the new model registry from mmcv (#495)

+

+#### Bug Fixes

+

+- Fix Pytorch 1.8 Compilation issue in the [scatter_points_cuda.cu](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/ops/voxel/src/scatter_points_cuda.cu) (#404)

+- Fix [dynamic_scatter](https://github.com/open-mmlab/mmdetection3d/blob/master/mmdet3d/ops/voxel/src/scatter_points_cuda.cu) errors triggered by empty point input (#417)

+- Fix the bug of missing points caused by using break incorrectly in the voxelization (#423)

+- Fix the missing `coord_type` in the waymo dataset [config](https://github.com/open-mmlab/mmdetection3d/blob/master/configs/_base_/datasets/waymoD5-3d-3class.py) (#441)

+- Fix errors in four unittest functions of [configs](https://github.com/open-mmlab/mmdetection3d/blob/master/configs/ssn/hv_ssn_secfpn_sbn-all_2x16_2x_lyft-3d.py), [test_detectors.py](https://github.com/open-mmlab/mmdetection3d/blob/master/tests/test_models/test_detectors.py), [test_heads.py](https://github.com/open-mmlab/mmdetection3d/blob/master/tests/test_models/test_heads/test_heads.py) (#453)

+- Fix 3DSSD training errors and simplify configs (#462)

+- Clamp 3D votes projections to image boundaries in ImVoteNet (#463)

+- Update out-of-date names of pipelines in the [config](https://github.com/open-mmlab/mmdetection3d/blob/master/configs/benchmark/hv_pointpillars_secfpn_3x8_100e_det3d_kitti-3d-car.py) of pointpillars benchmark (#474)