diff --git a/CITATION.bib b/CITATION.bib

index acdab6f3..85bae8e6 100644

--- a/CITATION.bib

+++ b/CITATION.bib

@@ -1,6 +1,6 @@

-@inproceedings{Alegre+2022bnaic,

- author = {Lucas N. Alegre and Florian Felten and El-Ghazali Talbi and Gr{\'e}goire Danoy and Ann Now{\'e} and Ana L. C. Bazzan and Bruno C. da Silva},

- title = {{MO-Gym}: A Library of Multi-Objective Reinforcement Learning Environments},

- booktitle = {Proceedings of the 34th Benelux Conference on Artificial Intelligence BNAIC/Benelearn 2022},

- year = {2022}

+@inproceedings{felten_toolkit_2023,

+ author = {Felten, Florian and Alegre, Lucas N. and Now{\'e}, Ann and Bazzan, Ana L. C. and Talbi, El Ghazali and Danoy, Gr{\'e}goire and Silva, Bruno C. {\relax da}},

+ title = {A Toolkit for Reliable Benchmarking and Research in Multi-Objective Reinforcement Learning},

+ booktitle = {Proceedings of the 37th Conference on Neural Information Processing Systems ({NeurIPS} 2023)},

+ year = {2023}

}

diff --git a/README.md b/README.md

index aaf999e4..fb5f7885 100644

--- a/README.md

+++ b/README.md

@@ -82,11 +82,11 @@ Maintenance for this project is also contributed by the broader Farama team: [fa

If you use this repository in your research, please cite:

```bibtex

-@inproceedings{Alegre+2022bnaic,

- author = {Lucas N. Alegre and Florian Felten and El-Ghazali Talbi and Gr{\'e}goire Danoy and Ann Now{\'e} and Ana L. C. Bazzan and Bruno C. da Silva},

- title = {{MO-Gym}: A Library of Multi-Objective Reinforcement Learning Environments},

- booktitle = {Proceedings of the 34th Benelux Conference on Artificial Intelligence BNAIC/Benelearn 2022},

- year = {2022}

+@inproceedings{felten_toolkit_2023,

+ author = {Felten, Florian and Alegre, Lucas N. and Now{\'e}, Ann and Bazzan, Ana L. C. and Talbi, El Ghazali and Danoy, Gr{\'e}goire and Silva, Bruno C. {\relax da}},

+ title = {A Toolkit for Reliable Benchmarking and Research in Multi-Objective Reinforcement Learning},

+ booktitle = {Proceedings of the 37th Conference on Neural Information Processing Systems ({NeurIPS} 2023)},

+ year = {2023}

}

```

diff --git a/docs/_static/videos/minecart-rgb.gif b/docs/_static/videos/minecart-rgb.gif

new file mode 100644

index 00000000..39ad4172

Binary files /dev/null and b/docs/_static/videos/minecart-rgb.gif differ

diff --git a/docs/_static/videos/mo-ant.gif b/docs/_static/videos/mo-ant.gif

new file mode 100644

index 00000000..9397b4ff

Binary files /dev/null and b/docs/_static/videos/mo-ant.gif differ

diff --git a/docs/_static/videos/mo-humanoid.gif b/docs/_static/videos/mo-humanoid.gif

new file mode 100644

index 00000000..625a40f8

Binary files /dev/null and b/docs/_static/videos/mo-humanoid.gif differ

diff --git a/docs/_static/videos/mo-lunar-lander-continuous.gif b/docs/_static/videos/mo-lunar-lander-continuous.gif

new file mode 100644

index 00000000..2051d754

Binary files /dev/null and b/docs/_static/videos/mo-lunar-lander-continuous.gif differ

diff --git a/docs/_static/videos/mo-swimmer.gif b/docs/_static/videos/mo-swimmer.gif

new file mode 100644

index 00000000..f1dffd63

Binary files /dev/null and b/docs/_static/videos/mo-swimmer.gif differ

diff --git a/docs/_static/videos/mo-walker2d.gif b/docs/_static/videos/mo-walker2d.gif

new file mode 100644

index 00000000..6a2a2e1e

Binary files /dev/null and b/docs/_static/videos/mo-walker2d.gif differ

diff --git a/docs/citing/citing.md b/docs/citing/citing.md

index b90bd5d1..64b21646 100644

--- a/docs/citing/citing.md

+++ b/docs/citing/citing.md

@@ -7,6 +7,17 @@ title: "Citing"

:end-before:

```

+MO-Gymnasium (formerly MO-Gym) appeared first in the following workshop publication:

+

+```bibtex

+@inproceedings{Alegre+2022bnaic,

+ author = {Lucas N. Alegre and Florian Felten and El-Ghazali Talbi and Gr{\'e}goire Danoy and Ann Now{\'e} and Ana L. C. Bazzan and Bruno C. {\relax da} Silva},

+ title = {{MO-Gym}: A Library of Multi-Objective Reinforcement Learning Environments},

+ booktitle = {Proceedings of the 34th Benelux Conference on Artificial Intelligence BNAIC/Benelearn 2022},

+ year = {2022}

+}

+```

+

```{toctree}

:hidden:

:glob:

diff --git a/docs/environments/all-environments.md b/docs/environments/all-environments.md

deleted file mode 100644

index 0975216a..00000000

--- a/docs/environments/all-environments.md

+++ /dev/null

@@ -1,39 +0,0 @@

----

-title: "Environments"

----

-

-# Available environments

-

-

-MO-Gymnasium includes environments taken from the MORL literature, as well as multi-objective version of classical environments, such as Mujoco.

-

-| Env | Obs/Action spaces | Objectives | Description |

-|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------------|---------------------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| [`deep-sea-treasure-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure/)

| Discrete / Discrete | `[treasure, time_penalty]` | Agent is a submarine that must collect a treasure while taking into account a time penalty. Treasures values taken from [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

-| [`deep-sea-treasure-concave-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure-concave/)

| Discrete / Discrete | `[treasure, time_penalty]` | Agent is a submarine that must collect a treasure while taking into account a time penalty. Treasures values taken from [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

-| [`deep-sea-treasure-concave-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure-concave/)

| Discrete / Discrete | `[treasure, time_penalty]` | Agent is a submarine that must collect a treasure while taking into account a time penalty. Treasures values taken from [Vamplew et al. 2010](https://link.springer.com/article/10.1007/s10994-010-5232-5). |

-| [`deep-sea-treasure-mirrored-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure-mirrored/)

| Discrete / Discrete | `[treasure, time_penalty]` | Agent is a submarine that must collect a treasure while taking into account a time penalty. Treasures values taken from [Vamplew et al. 2010](https://link.springer.com/article/10.1007/s10994-010-5232-5). |

-| [`deep-sea-treasure-mirrored-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure-mirrored/)

| Discrete / Discrete | `[treasure, time_penalty]` | Harder version of the concave DST [Felten et al. 2022](https://www.scitepress.org/Papers/2022/109891/109891.pdf). |

-| [`resource-gathering-v0`](https://mo-gymnasium.farama.org/environments/resource-gathering/)

| Discrete / Discrete | `[treasure, time_penalty]` | Harder version of the concave DST [Felten et al. 2022](https://www.scitepress.org/Papers/2022/109891/109891.pdf). |

-| [`resource-gathering-v0`](https://mo-gymnasium.farama.org/environments/resource-gathering/)

| Discrete / Discrete | `[enemy, gold, gem]` | Agent must collect gold or gem. Enemies have a 10% chance of killing the agent. From [Barret & Narayanan 2008](https://dl.acm.org/doi/10.1145/1390156.1390162). |

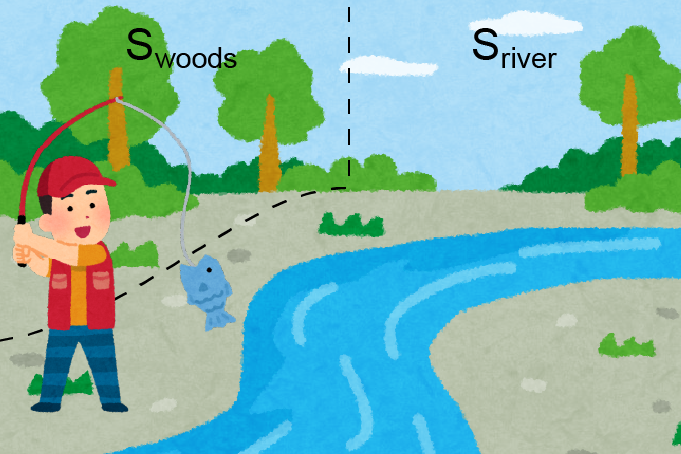

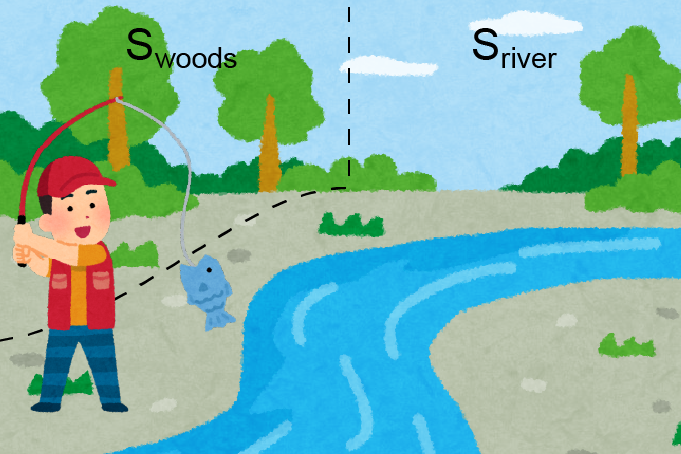

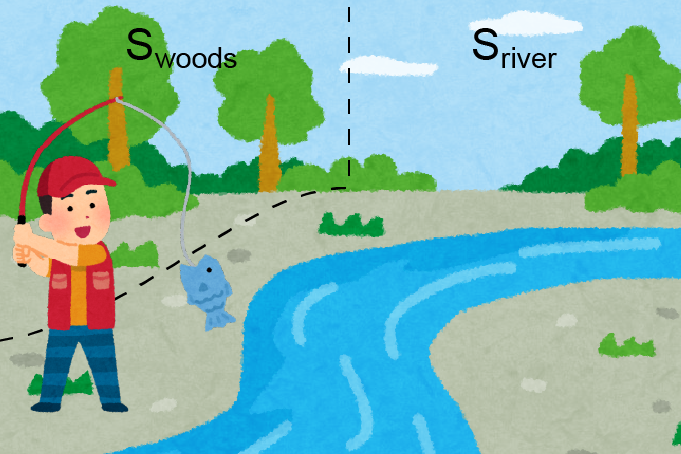

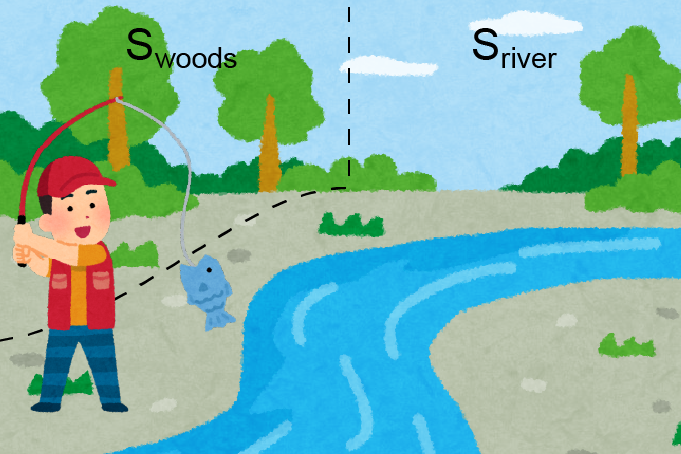

-| [`fishwood-v0`](https://mo-gymnasium.farama.org/environments/fishwood/)

| Discrete / Discrete | `[enemy, gold, gem]` | Agent must collect gold or gem. Enemies have a 10% chance of killing the agent. From [Barret & Narayanan 2008](https://dl.acm.org/doi/10.1145/1390156.1390162). |

-| [`fishwood-v0`](https://mo-gymnasium.farama.org/environments/fishwood/)

| Discrete / Discrete | `[fish_amount, wood_amount]` | ESR environment, the agent must collect fish and wood to light a fire and eat. From [Roijers et al. 2018](https://www.researchgate.net/publication/328718263_Multi-objective_Reinforcement_Learning_for_the_Expected_Utility_of_the_Return). |

-| [`breakable-bottles-v0`](https://mo-gymnasium.farama.org/environments/breakable-bottles/)

| Discrete / Discrete | `[fish_amount, wood_amount]` | ESR environment, the agent must collect fish and wood to light a fire and eat. From [Roijers et al. 2018](https://www.researchgate.net/publication/328718263_Multi-objective_Reinforcement_Learning_for_the_Expected_Utility_of_the_Return). |

-| [`breakable-bottles-v0`](https://mo-gymnasium.farama.org/environments/breakable-bottles/)

| Discrete (Dictionary) / Discrete | `[time_penalty, bottles_delivered, potential]` | Gridworld with 5 cells. The agents must collect bottles from the source location and deliver to the destination. From [Vamplew et al. 2021](https://www.sciencedirect.com/science/article/pii/S0952197621000336). |

-| [`fruit-tree-v0`](https://mo-gymnasium.farama.org/environments/fruit-tree/)

| Discrete (Dictionary) / Discrete | `[time_penalty, bottles_delivered, potential]` | Gridworld with 5 cells. The agents must collect bottles from the source location and deliver to the destination. From [Vamplew et al. 2021](https://www.sciencedirect.com/science/article/pii/S0952197621000336). |

-| [`fruit-tree-v0`](https://mo-gymnasium.farama.org/environments/fruit-tree/)

| Discrete / Discrete | `[nutri1, ..., nutri6]` | Full binary tree of depth d=5,6 or 7. Every leaf contains a fruit with a value for the nutrients Protein, Carbs, Fats, Vitamins, Minerals and Water. From [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

-| [`water-reservoir-v0`](https://mo-gymnasium.farama.org/environments/water-reservoir/)

| Discrete / Discrete | `[nutri1, ..., nutri6]` | Full binary tree of depth d=5,6 or 7. Every leaf contains a fruit with a value for the nutrients Protein, Carbs, Fats, Vitamins, Minerals and Water. From [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

-| [`water-reservoir-v0`](https://mo-gymnasium.farama.org/environments/water-reservoir/)

| Continuous / Continuous | `[cost_flooding, deficit_water]` | A Water reservoir environment. The agent executes a continuous action, corresponding to the amount of water released by the dam. From [Pianosi et al. 2013](https://iwaponline.com/jh/article/15/2/258/3425/Tree-based-fitted-Q-iteration-for-multi-objective). |

-| [`four-room-v0`](https://mo-gymnasium.farama.org/environments/four-room/)

| Continuous / Continuous | `[cost_flooding, deficit_water]` | A Water reservoir environment. The agent executes a continuous action, corresponding to the amount of water released by the dam. From [Pianosi et al. 2013](https://iwaponline.com/jh/article/15/2/258/3425/Tree-based-fitted-Q-iteration-for-multi-objective). |

-| [`four-room-v0`](https://mo-gymnasium.farama.org/environments/four-room/)

| Discrete / Discrete | `[item1, item2, item3]` | Agent must collect three different types of items in the map and reach the goal. From [Alegre et al. 2022](https://proceedings.mlr.press/v162/alegre22a.html). |

-| [`mo-mountaincar-v0`](https://mo-gymnasium.farama.org/environments/mo-mountaincar/)

| Discrete / Discrete | `[item1, item2, item3]` | Agent must collect three different types of items in the map and reach the goal. From [Alegre et al. 2022](https://proceedings.mlr.press/v162/alegre22a.html). |

-| [`mo-mountaincar-v0`](https://mo-gymnasium.farama.org/environments/mo-mountaincar/)

| Continuous / Discrete | `[time_penalty, reverse_penalty, forward_penalty]` | Classic Mountain Car env, but with extra penalties for the forward and reverse actions. From [Vamplew et al. 2011](https://www.researchgate.net/publication/220343783_Empirical_evaluation_methods_for_multiobjective_reinforcement_learning_algorithms). |

-| [`mo-mountaincarcontinuous-v0`](https://mo-gymnasium.farama.org/environments/mo-mountaincarcontinuous/)

| Continuous / Discrete | `[time_penalty, reverse_penalty, forward_penalty]` | Classic Mountain Car env, but with extra penalties for the forward and reverse actions. From [Vamplew et al. 2011](https://www.researchgate.net/publication/220343783_Empirical_evaluation_methods_for_multiobjective_reinforcement_learning_algorithms). |

-| [`mo-mountaincarcontinuous-v0`](https://mo-gymnasium.farama.org/environments/mo-mountaincarcontinuous/)

| Continuous / Continuous | `[time_penalty, fuel_consumption_penalty]` | Continuous Mountain Car env, but with penalties for fuel consumption. |

-| [`mo-lunar-lander-v2`](https://mo-gymnasium.farama.org/environments/mo-lunar-lander/)

| Continuous / Continuous | `[time_penalty, fuel_consumption_penalty]` | Continuous Mountain Car env, but with penalties for fuel consumption. |

-| [`mo-lunar-lander-v2`](https://mo-gymnasium.farama.org/environments/mo-lunar-lander/)

| Continuous / Discrete or Continuous | `[landed, shaped_reward, main_engine_fuel, side_engine_fuel]` | MO version of the `LunarLander-v2` [environment](https://gymnasium.farama.org/environments/box2d/lunar_lander/). Objectives defined similarly as in [Hung et al. 2022](https://openreview.net/forum?id=AwWaBXLIJE). |

-| [`minecart-v0`](https://mo-gymnasium.farama.org/environments/minecart/)

| Continuous / Discrete or Continuous | `[landed, shaped_reward, main_engine_fuel, side_engine_fuel]` | MO version of the `LunarLander-v2` [environment](https://gymnasium.farama.org/environments/box2d/lunar_lander/). Objectives defined similarly as in [Hung et al. 2022](https://openreview.net/forum?id=AwWaBXLIJE). |

-| [`minecart-v0`](https://mo-gymnasium.farama.org/environments/minecart/)

| Continuous or Image / Discrete | `[ore1, ore2, fuel]` | Agent must collect two types of ores and minimize fuel consumption. From [Abels et al. 2019](https://arxiv.org/abs/1809.07803v2). |

-| [`mo-highway-v0`](https://mo-gymnasium.farama.org/environments/mo-highway/) and `mo-highway-fast-v0`

| Continuous or Image / Discrete | `[ore1, ore2, fuel]` | Agent must collect two types of ores and minimize fuel consumption. From [Abels et al. 2019](https://arxiv.org/abs/1809.07803v2). |

-| [`mo-highway-v0`](https://mo-gymnasium.farama.org/environments/mo-highway/) and `mo-highway-fast-v0`

| Continuous / Discrete | `[speed, right_lane, collision]` | The agent's objective is to reach a high speed while avoiding collisions with neighbouring vehicles and staying on the rightest lane. From [highway-env](https://github.com/eleurent/highway-env). |

-| [`mo-supermario-v0`](https://mo-gymnasium.farama.org/environments/mo-supermario/)

| Continuous / Discrete | `[speed, right_lane, collision]` | The agent's objective is to reach a high speed while avoiding collisions with neighbouring vehicles and staying on the rightest lane. From [highway-env](https://github.com/eleurent/highway-env). |

-| [`mo-supermario-v0`](https://mo-gymnasium.farama.org/environments/mo-supermario/)

| Image / Discrete | `[x_pos, time, death, coin, enemy]` | [:warning: SuperMarioBrosEnv support is limited.] Multi-objective version of [SuperMarioBrosEnv](https://github.com/Kautenja/gym-super-mario-bros). Objectives are defined similarly as in [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

-| [`mo-reacher-v4`](https://mo-gymnasium.farama.org/environments/mo-reacher/)

| Image / Discrete | `[x_pos, time, death, coin, enemy]` | [:warning: SuperMarioBrosEnv support is limited.] Multi-objective version of [SuperMarioBrosEnv](https://github.com/Kautenja/gym-super-mario-bros). Objectives are defined similarly as in [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

-| [`mo-reacher-v4`](https://mo-gymnasium.farama.org/environments/mo-reacher/)

| Continuous / Discrete | `[target_1, target_2, target_3, target_4]` | Mujoco version of `mo-reacher-v0`, based on `Reacher-v4` [environment](https://gymnasium.farama.org/environments/mujoco/reacher/). |

-| [`mo-hopper-v4`](https://mo-gymnasium.farama.org/environments/mo-hopper/)

| Continuous / Discrete | `[target_1, target_2, target_3, target_4]` | Mujoco version of `mo-reacher-v0`, based on `Reacher-v4` [environment](https://gymnasium.farama.org/environments/mujoco/reacher/). |

-| [`mo-hopper-v4`](https://mo-gymnasium.farama.org/environments/mo-hopper/)

| Continuous / Continuous | `[velocity, height, energy]` | Multi-objective version of [Hopper-v4](https://gymnasium.farama.org/environments/mujoco/hopper/) env. |

-| [`mo-halfcheetah-v4`](https://mo-gymnasium.farama.org/environments/mo-halfcheetah/)

| Continuous / Continuous | `[velocity, height, energy]` | Multi-objective version of [Hopper-v4](https://gymnasium.farama.org/environments/mujoco/hopper/) env. |

-| [`mo-halfcheetah-v4`](https://mo-gymnasium.farama.org/environments/mo-halfcheetah/)

| Continuous / Continuous | `[velocity, energy]` | Multi-objective version of [HalfCheetah-v4](https://gymnasium.farama.org/environments/mujoco/half_cheetah/) env. Similar to [Xu et al. 2020](https://github.com/mit-gfx/PGMORL). |

-

-

-```{toctree}

-:hidden:

-:glob:

-:caption: MO-Gymnasium Environments

-

-./*

-

-```

diff --git a/docs/environments/classical.md b/docs/environments/classical.md

new file mode 100644

index 00000000..3f80ce85

--- /dev/null

+++ b/docs/environments/classical.md

@@ -0,0 +1,25 @@

+---

+title: "Classic Control"

+---

+

+# Classic Control

+

+Multi-objective versions of classical Gymnasium's environments.

+

+| Env | Obs/Action spaces | Objectives | Description |

+|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------------|---------------------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [`mo-mountaincar-v0`](https://mo-gymnasium.farama.org/environments/mo-mountaincar/) | Continuous / Discrete | `[time_penalty, reverse_penalty, forward_penalty]` | Classic Mountain Car env, but with extra penalties for the forward and reverse actions. From [Vamplew et al. 2011](https://www.researchgate.net/publication/220343783_Empirical_evaluation_methods_for_multiobjective_reinforcement_learning_algorithms). |

+| [`mo-mountaincarcontinuous-v0`](https://mo-gymnasium.farama.org/environments/mo-mountaincarcontinuous/) | Continuous / Continuous | `[time_penalty, fuel_consumption_penalty]` | Continuous Mountain Car env, but with penalties for fuel consumption. |

+| [`mo-lunar-lander-v2`](https://mo-gymnasium.farama.org/environments/mo-lunar-lander/) | Continuous / Discrete or Continuous | `[landed, shaped_reward, main_engine_fuel, side_engine_fuel]` | MO version of the `LunarLander-v2` [environment](https://gymnasium.farama.org/environments/box2d/lunar_lander/). Objectives defined similarly as in [Hung et al. 2022](https://openreview.net/forum?id=AwWaBXLIJE). |

+

+```{toctree}

+:hidden:

+:glob:

+:caption: MO-Gymnasium Environments

+

+./mo-mountaincar

+./mo-mountaincarcontinuous

+./mo-lunar-lander

+./mo-lunar-lander-continuous

+

+```

diff --git a/docs/environments/grid-world.md b/docs/environments/grid-world.md

new file mode 100644

index 00000000..e9d25520

--- /dev/null

+++ b/docs/environments/grid-world.md

@@ -0,0 +1,36 @@

+---

+title: "Grid-World"

+---

+

+# Grid-World

+

+Environments with discrete observation spaces, e.g., grid-worlds.

+

+| Env | Obs/Action spaces | Objectives | Description |

+|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------------|---------------------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [`deep-sea-treasure-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure/) | Discrete / Discrete | `[treasure, time_penalty]` | Agent is a submarine that must collect a treasure while taking into account a time penalty. Treasures values taken from [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

+| [`deep-sea-treasure-concave-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure-concave/) | Discrete / Discrete | `[treasure, time_penalty]` | Agent is a submarine that must collect a treasure while taking into account a time penalty. Treasures values taken from [Vamplew et al. 2010](https://link.springer.com/article/10.1007/s10994-010-5232-5). |

+| [`deep-sea-treasure-mirrored-v0`](https://mo-gymnasium.farama.org/environments/deep-sea-treasure-mirrored/) | Discrete / Discrete | `[treasure, time_penalty]` | Harder version of the concave DST [Felten et al. 2022](https://www.scitepress.org/Papers/2022/109891/109891.pdf). |

+| [`resource-gathering-v0`](https://mo-gymnasium.farama.org/environments/resource-gathering/) | Discrete / Discrete | `[enemy, gold, gem]` | Agent must collect gold or gem. Enemies have a 10% chance of killing the agent. From [Barret & Narayanan 2008](https://dl.acm.org/doi/10.1145/1390156.1390162). |

+| [`fishwood-v0`](https://mo-gymnasium.farama.org/environments/fishwood/) | Discrete / Discrete | `[fish_amount, wood_amount]` | ESR environment, the agent must collect fish and wood to light a fire and eat. From [Roijers et al. 2018](https://www.researchgate.net/publication/328718263_Multi-objective_Reinforcement_Learning_for_the_Expected_Utility_of_the_Return). |

+| [`breakable-bottles-v0`](https://mo-gymnasium.farama.org/environments/breakable-bottles/) | Discrete (Dictionary) / Discrete | `[time_penalty, bottles_delivered, potential]` | Gridworld with 5 cells. The agents must collect bottles from the source location and deliver to the destination. From [Vamplew et al. 2021](https://www.sciencedirect.com/science/article/pii/S0952197621000336). |

+| [`fruit-tree-v0`](https://mo-gymnasium.farama.org/environments/fruit-tree/) | Discrete / Discrete | `[nutri1, ..., nutri6]` | Full binary tree of depth d=5,6 or 7. Every leaf contains a fruit with a value for the nutrients Protein, Carbs, Fats, Vitamins, Minerals and Water. From [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

+| [`four-room-v0`](https://mo-gymnasium.farama.org/environments/four-room/) | Discrete / Discrete | `[item1, item2, item3]` | Agent must collect three different types of items in the map and reach the goal. From [Alegre et al. 2022](https://proceedings.mlr.press/v162/alegre22a.html). |

+

+

+```{toctree}

+:hidden:

+:glob:

+:caption: MO-Gymnasium Environments

+

+./deep-sea-treasure

+./deep-sea-treasure-concave

+./deep-sea-treasure-mirrored

+./resource-gathering

+./four-room

+./fruit-tree

+./breakable-bottles

+./fishwood

+

+

+```

diff --git a/docs/environments/misc.md b/docs/environments/misc.md

new file mode 100644

index 00000000..d9cc2295

--- /dev/null

+++ b/docs/environments/misc.md

@@ -0,0 +1,28 @@

+---

+title: "Misc"

+---

+

+# Miscellaneous

+

+MO-Gymnasium also includes other miscellaneous multi-objective environments:

+

+| Env | Obs/Action spaces | Objectives | Description |

+|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------------|---------------------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [`water-reservoir-v0`](https://mo-gymnasium.farama.org/environments/water-reservoir/) | Continuous / Continuous | `[cost_flooding, deficit_water]` | A Water reservoir environment. The agent executes a continuous action, corresponding to the amount of water released by the dam. From [Pianosi et al. 2013](https://iwaponline.com/jh/article/15/2/258/3425/Tree-based-fitted-Q-iteration-for-multi-objective). |

+| [`minecart-v0`](https://mo-gymnasium.farama.org/environments/minecart/) | Continuous or Image / Discrete | `[ore1, ore2, fuel]` | Agent must collect two types of ores and minimize fuel consumption. From [Abels et al. 2019](https://arxiv.org/abs/1809.07803v2). |

+| [`mo-highway-v0`](https://mo-gymnasium.farama.org/environments/mo-highway/) and `mo-highway-fast-v0` | Continuous / Discrete | `[speed, right_lane, collision]` | The agent's objective is to reach a high speed while avoiding collisions with neighbouring vehicles and staying on the rightest lane. From [highway-env](https://github.com/eleurent/highway-env). |

+| [`mo-supermario-v0`](https://mo-gymnasium.farama.org/environments/mo-supermario/) | Image / Discrete | `[x_pos, time, death, coin, enemy]` | [:warning: SuperMarioBrosEnv support is limited.] Multi-objective version of [SuperMarioBrosEnv](https://github.com/Kautenja/gym-super-mario-bros). Objectives are defined similarly as in [Yang et al. 2019](https://arxiv.org/pdf/1908.08342.pdf). |

+

+

+```{toctree}

+:hidden:

+:glob:

+:caption: MO-Gymnasium Environments

+

+./water-reservoir

+./minecart

+./minecart-deterministic

+./minecart-rgb

+./mo-highway

+./mo-supermario

+```

diff --git a/docs/environments/mujoco.md b/docs/environments/mujoco.md

new file mode 100644

index 00000000..70272d24

--- /dev/null

+++ b/docs/environments/mujoco.md

@@ -0,0 +1,32 @@

+---

+title: "MuJoCo"

+---

+

+# MuJoCo

+

+Multi-objective versions of MuJoCo environments.

+

+| Env | Obs/Action spaces | Objectives | Description |

+|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------------|---------------------------------------------------------------|-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

+| [`mo-reacher-v4`](https://mo-gymnasium.farama.org/environments/mo-reacher/) | Continuous / Discrete | `[target_1, target_2, target_3, target_4]` | MuJoCo version of `mo-reacher-v0`, based on `Reacher-v4` [environment](https://gymnasium.farama.org/environments/mujoco/reacher/). |

+| [`mo-hopper-v4`](https://mo-gymnasium.farama.org/environments/mo-hopper/) | Continuous / Continuous | `[velocity, height, energy]` | Multi-objective version of [Hopper-v4](https://gymnasium.farama.org/environments/mujoco/hopper/) env. |

+| [`mo-halfcheetah-v4`](https://mo-gymnasium.farama.org/environments/mo-halfcheetah/) | Continuous / Continuous | `[velocity, energy]` | Multi-objective version of [HalfCheetah-v4](https://gymnasium.farama.org/environments/mujoco/half_cheetah/) env. Similar to [Xu et al. 2020](https://github.com/mit-gfx/PGMORL). |

+| [`mo-walker2d-v4`](https://mo-gymnasium.farama.org/environments/mo-walker2d/) | Continuous / Continuous | `[velocity, energy]` | Multi-objective version of [Walker2d-v4](https://gymnasium.farama.org/environments/mujoco/walker2d/) env. |

+| [`mo-ant-v4`](https://mo-gymnasium.farama.org/environments/mo-ant/) | Continuous / Continuous | `[x_velocity, y_velocity, energy]` | Multi-objective version of [Ant-v4](https://gymnasium.farama.org/environments/mujoco/ant/) env. |

+| [`mo-swimmer-v4`](https://mo-gymnasium.farama.org/environments/mo-swimmer/) | Continuous / Continuous | `[velocity, energy]` | Multi-objective version of [Swimmer-v4](https://gymnasium.farama.org/environments/mujoco/swimmer/) env. |

+| [`mo-humanoid-v4`](https://mo-gymnasium.farama.org/environments/mo-humanoid/) | Continuous / Continuous | `[velocity, energy]` | Multi-objective version of [Humanoid-v4](https://gymnasium.farama.org/environments/mujoco/humanoid/) env. |

+

+

+```{toctree}

+:hidden:

+:glob:

+:caption: MO-Gymnasium Environments

+

+./mo-reacher

+./mo-hopper

+./mo-halfcheetah

+./mo-walker2d

+./mo-ant

+./mo-swimmer

+./mo-humanoid

+```

diff --git a/docs/examples/publications.md b/docs/examples/publications.md

index c2739d12..23176746 100644

--- a/docs/examples/publications.md

+++ b/docs/examples/publications.md

@@ -10,7 +10,14 @@ MO-Gymnasium (formerly MO-Gym) was first published in:

List of publications & submissions using MO-Gymnasium (please open a pull request to add missing entries):

+

- [Sample-Efficient Multi-Objective Learning via Generalized Policy Improvement Prioritization](https://arxiv.org/abs/2301.07784) (Alegre et al., AAMAS 2023)

- [Hyperparameter Optimization for Multi-Objective Reinforcement Learning](https://arxiv.org/abs/2310.16487v1) (Felten et al., MODeM Workshop 2023)

- [Multi-Step Generalized Policy Improvement by Leveraging Approximate Models](https://openreview.net/forum?id=KFj0Q1EXvU) (Alegre et al., NeurIPS 2023)

- [A Toolkit for Reliable Benchmarking and Research in Multi-Objective Reinforcement Learning](https://openreview.net/forum?id=jfwRLudQyj) (Felten et al., NeurIPS 2023)

+- [Distributional Pareto-Optimal Multi-Objective Reinforcement Learning](https://proceedings.neurips.cc/paper_files/paper/2023/hash/32285dd184dbfc33cb2d1f0db53c23c5-Abstract-Conference.html) (Cai et al., NeurIPS 2023)

+- [Welfare and Fairness in Multi-objective Reinforcement Learning](https://arxiv.org/abs/2212.01382) (Fan et al., AAMAS 2023)

+- [Personalized Reinforcement Learning with a Budget of Policies](https://arxiv.org/abs/2401.06514) (Ivanov et al., 2024)

+- [Multi-Objective Reinforcement Learning Based on Decomposition: A Taxonomy and Framework](https://arxiv.org/abs/2311.12495) (Felten et al., 2024)

+- [Multi-objective reinforcement learning for guaranteeing alignment with multiple values](https://alaworkshop2023.github.io/papers/ALA2023_paper_15.pdf) (Rodriguez-Soto et al., 2023)

+- [MOFL/D: A Federated Multi-objective Learning Framework with Decomposition](https://neurips.cc/virtual/2023/79018) (Hartmann et al., 2023)

diff --git a/docs/index.md b/docs/index.md

index a5069a60..fb6d56ff 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -10,11 +10,21 @@ lastpage:

introduction/install

introduction/api

-environments/all-environments

wrappers/wrappers

examples/morl_baselines

```

+```{toctree}

+:hidden:

+:caption: Environments

+

+environments/grid-world

+environments/classical

+environments/misc

+environments/mujoco

+```

+

+

```{toctree}

:hidden:

:caption: Tutorials

diff --git a/mo_gymnasium/__init__.py b/mo_gymnasium/__init__.py

index bb596e25..23201d0c 100644

--- a/mo_gymnasium/__init__.py

+++ b/mo_gymnasium/__init__.py

@@ -14,4 +14,4 @@

)

-__version__ = "1.0.1"

+__version__ = "1.1.0"

diff --git a/mo_gymnasium/envs/breakable_bottles/breakable_bottles.py b/mo_gymnasium/envs/breakable_bottles/breakable_bottles.py

index 0a6cbe72..93c33821 100644

--- a/mo_gymnasium/envs/breakable_bottles/breakable_bottles.py

+++ b/mo_gymnasium/envs/breakable_bottles/breakable_bottles.py

@@ -25,9 +25,13 @@ class BreakableBottles(Env, EzPickle):

The observation space is a dictionary with 4 keys:

- location: the current location of the agent

- bottles_carrying: the number of bottles the agent is currently carrying (0, 1 or 2)

- - bottles_delivered: the number of bottles the agent has delivered (0 or 1)

+ - bottles_delivered: the number of bottles the agent has delivered (0, 1 or 2)

- bottles_dropped: for each location, a boolean flag indicating if that location currently contains a bottle

+ Note that this observation space is different from that listed in the paper above. In the paper, bottles_delivered's possible values are listed as (0 or 1),

+ rather than (0, 1 or 2). This is because the paper did not take the terminal state, in which 2 bottles have been delivered, into account when calculating

+ the observation space. As such, the observation space of this implementation is larger than specified in the paper, having 360 possible states instead of 240.

+

## Reward Space

The reward space has 3 dimensions:

- time penalty: -1 for each time step

@@ -96,11 +100,11 @@ def __init__(

{

"location": Discrete(self.size),

"bottles_carrying": Discrete(3),

- "bottles_delivered": Discrete(2),

+ "bottles_delivered": Discrete(3),

"bottles_dropped": MultiBinary(self.size - 2),

}

)

- self.num_observations = 240

+ self.num_observations = 360

self.action_space = Discrete(3) # LEFT, RIGHT, PICKUP

self.num_actions = 3

@@ -220,7 +224,7 @@ def get_obs_idx(self, obs):

*[[bd > 0] for bd in obs["bottles_dropped"]],

]

)

- return np.ravel_multi_index(multi_index, tuple([self.size, 3, 2, *([2] * (self.size - 2))]))

+ return np.ravel_multi_index(multi_index, tuple([self.size, 3, 3, *([2] * (self.size - 2))]))

def _get_obs(self):

return {
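
Reviewer note: the change from 240 to 360 observations follows from the mixed-radix layout that `get_obs_idx` hands to `np.ravel_multi_index`. The sketch below reproduces that arithmetic in plain Python. It assumes `size = 5` (the value for which both counts in this diff work out), and `obs_dims` and `ravel_index` are hypothetical helpers written for illustration; `ravel_index` is meant to mirror NumPy's default row-major ordering and is not part of the environment.

```python
from math import prod


def obs_dims(size, delivered_values):
    """Radix of each component: location, carrying, delivered, one flag per droppable cell."""
    return (size, 3, delivered_values, *([2] * (size - 2)))


def ravel_index(multi_index, dims):
    """Row-major flattening (illustrative stand-in for np.ravel_multi_index)."""
    idx = 0
    for value, radix in zip(multi_index, dims):
        assert 0 <= value < radix, "component out of range"
        idx = idx * radix + value
    return idx


old_dims = obs_dims(size=5, delivered_values=2)  # paper: bottles_delivered in {0, 1}
new_dims = obs_dims(size=5, delivered_values=3)  # this diff: bottles_delivered in {0, 1, 2}

print(prod(old_dims), prod(new_dims))  # 240 360

# A terminal state with 2 delivered bottles only fits in the new layout.
terminal = (4, 0, 2, 0, 0, 0)
print(ravel_index(terminal, new_dims))
```

With the old layout, the same `terminal` tuple trips the range check, which is the gap the patch closes.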

diff --git a/mo_gymnasium/envs/lunar_lander/lunar_lander.py b/mo_gymnasium/envs/lunar_lander/lunar_lander.py

index 091190a9..c8d94d6a 100644

--- a/mo_gymnasium/envs/lunar_lander/lunar_lander.py

+++ b/mo_gymnasium/envs/lunar_lander/lunar_lander.py

@@ -6,6 +6,7 @@

FPS,

LEG_DOWN,

MAIN_ENGINE_POWER,

+ MAIN_ENGINE_Y_LOCATION,

SCALE,

SIDE_ENGINE_AWAY,

SIDE_ENGINE_HEIGHT,

@@ -46,7 +47,7 @@ def __init__(self, *args, **kwargs):

def step(self, action):

assert self.lander is not None

- # Update wind

+ # Update wind and apply to the lander

assert self.lander is not None, "You forgot to call reset()"

if self.enable_wind and not (self.legs[0].ground_contact or self.legs[1].ground_contact):

# the function used for wind is tanh(sin(2 k x) + sin(pi k x)),

@@ -60,12 +61,13 @@ def step(self, action):

# the function used for torque is tanh(sin(2 k x) + sin(pi k x)),

# which is proven to never be periodic, k = 0.01

- torque_mag = math.tanh(math.sin(0.02 * self.torque_idx) + (math.sin(math.pi * 0.01 * self.torque_idx))) * (

- self.turbulence_power

+ torque_mag = (

+ math.tanh(math.sin(0.02 * self.torque_idx) + (math.sin(math.pi * 0.01 * self.torque_idx)))

+ * self.turbulence_power

)

self.torque_idx += 1

self.lander.ApplyTorque(

- (torque_mag),

+ torque_mag,

True,

)

@@ -74,9 +76,15 @@ def step(self, action):

else:

assert self.action_space.contains(action), f"{action!r} ({type(action)}) invalid "

- # Engines

+ # Apply Engine Impulses

+

+ # Tip is the (X and Y) components of the rotation of the lander.

tip = (math.sin(self.lander.angle), math.cos(self.lander.angle))

+

+ # Side is the (-Y and X) components of the rotation of the lander.

side = (-tip[1], tip[0])

+

+ # Generate two random numbers between -1/SCALE and 1/SCALE.

dispersion = [self.np_random.uniform(-1.0, +1.0) / SCALE for _ in range(2)]

m_power = 0.0

@@ -87,21 +95,29 @@ def step(self, action):

assert m_power >= 0.5 and m_power <= 1.0

else:

m_power = 1.0

+

# 4 is move a bit downwards, +-2 for randomness

- ox = tip[0] * (4 / SCALE + 2 * dispersion[0]) + side[0] * dispersion[1]

- oy = -tip[1] * (4 / SCALE + 2 * dispersion[0]) - side[1] * dispersion[1]

+ # The components of the impulse to be applied by the main engine.

+ ox = tip[0] * (MAIN_ENGINE_Y_LOCATION / SCALE + 2 * dispersion[0]) + side[0] * dispersion[1]

+ oy = -tip[1] * (MAIN_ENGINE_Y_LOCATION / SCALE + 2 * dispersion[0]) - side[1] * dispersion[1]

+

impulse_pos = (self.lander.position[0] + ox, self.lander.position[1] + oy)

- p = self._create_particle(

- 3.5, # 3.5 is here to make particle speed adequate

- impulse_pos[0],

- impulse_pos[1],

- m_power,

- ) # particles are just a decoration

- p.ApplyLinearImpulse(

- (ox * MAIN_ENGINE_POWER * m_power, oy * MAIN_ENGINE_POWER * m_power),

- impulse_pos,

- True,

- )

+ if self.render_mode is not None:

+ # particles are just a decoration, with no impact on the physics, so don't add them when not rendering

+ p = self._create_particle(

+ 3.5, # 3.5 is here to make particle speed adequate

+ impulse_pos[0],

+ impulse_pos[1],

+ m_power,

+ )

+ p.ApplyLinearImpulse(

+ (

+ ox * MAIN_ENGINE_POWER * m_power,

+ oy * MAIN_ENGINE_POWER * m_power,

+ ),

+ impulse_pos,

+ True,

+ )

self.lander.ApplyLinearImpulse(

(-ox * MAIN_ENGINE_POWER * m_power, -oy * MAIN_ENGINE_POWER * m_power),

impulse_pos,

@@ -110,26 +126,39 @@ def step(self, action):

s_power = 0.0

if (self.continuous and np.abs(action[1]) > 0.5) or (not self.continuous and action in [1, 3]):

- # Orientation engines

+ # Orientation/Side engines

if self.continuous:

direction = np.sign(action[1])

s_power = np.clip(np.abs(action[1]), 0.5, 1.0)

assert s_power >= 0.5 and s_power <= 1.0

else:

+ # action = 1 is left, action = 3 is right

direction = action - 2

s_power = 1.0

+

+ # The components of the impulse to be applied by the side engines.

ox = tip[0] * dispersion[0] + side[0] * (3 * dispersion[1] + direction * SIDE_ENGINE_AWAY / SCALE)

oy = -tip[1] * dispersion[0] - side[1] * (3 * dispersion[1] + direction * SIDE_ENGINE_AWAY / SCALE)

+

+ # The literal 17 below is presumably meant to be SIDE_ENGINE_HEIGHT.

+ # However, SIDE_ENGINE_HEIGHT is defined as 14.

+ # This mismatch causes the position of the thrust on the body of the lander to change, depending on the orientation of the lander.

+ # This in turn results in an orientation-dependent torque being applied to the lander.

impulse_pos = (

self.lander.position[0] + ox - tip[0] * 17 / SCALE,

self.lander.position[1] + oy + tip[1] * SIDE_ENGINE_HEIGHT / SCALE,

)

- p = self._create_particle(0.7, impulse_pos[0], impulse_pos[1], s_power)

- p.ApplyLinearImpulse(

- (ox * SIDE_ENGINE_POWER * s_power, oy * SIDE_ENGINE_POWER * s_power),

- impulse_pos,

- True,

- )

+ if self.render_mode is not None:

+ # particles are just a decoration, with no impact on the physics, so don't add them when not rendering

+ p = self._create_particle(0.7, impulse_pos[0], impulse_pos[1], s_power)

+ p.ApplyLinearImpulse(

+ (

+ ox * SIDE_ENGINE_POWER * s_power,

+ oy * SIDE_ENGINE_POWER * s_power,

+ ),

+ impulse_pos,

+ True,

+ )

self.lander.ApplyLinearImpulse(

(-ox * SIDE_ENGINE_POWER * s_power, -oy * SIDE_ENGINE_POWER * s_power),

impulse_pos,

@@ -140,6 +169,7 @@ def step(self, action):

pos = self.lander.position

vel = self.lander.linearVelocity

+

state = [

(pos.x - VIEWPORT_W / SCALE / 2) / (VIEWPORT_W / SCALE / 2),

(pos.y - (self.helipad_y + LEG_DOWN / SCALE)) / (VIEWPORT_H / SCALE / 2),
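
Reviewer note: the reformatted `torque_mag` expression in this file is easier to check in isolation. The sketch below restates it as a standalone function. The name `turbulence_torque` and the sample value `turbulence_power=1.5` are assumptions made for illustration only; `k = 0.01` comes from the comment in the diff.

```python
import math


def turbulence_torque(torque_idx, turbulence_power, k=0.01):
    """tanh(sin(2 k x) + sin(pi k x)), scaled by the turbulence power."""
    wave = math.sin(2 * k * torque_idx) + math.sin(math.pi * k * torque_idx)
    return math.tanh(wave) * turbulence_power


# Sample the signal over 1000 steps, as the environment would by incrementing torque_idx.
samples = [turbulence_torque(i, turbulence_power=1.5) for i in range(1000)]
print(min(samples), max(samples))
```

Because `tanh` is bounded by 1 in magnitude, the torque never exceeds `turbulence_power`, whatever the step index.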

diff --git a/mo_gymnasium/envs/mujoco/__init__.py b/mo_gymnasium/envs/mujoco/__init__.py

index 5b605ff5..4415d577 100644

--- a/mo_gymnasium/envs/mujoco/__init__.py

+++ b/mo_gymnasium/envs/mujoco/__init__.py

@@ -39,6 +39,37 @@

kwargs={"cost_objective": False},

)

+register(

+ id="mo-walker2d-v4",

+ entry_point="mo_gymnasium.envs.mujoco.walker2d:MOWalker2dEnv",

+ max_episode_steps=1000,

+)

+

+register(

+ id="mo-ant-v4",

+ entry_point="mo_gymnasium.envs.mujoco.ant:MOAntEnv",

+ max_episode_steps=1000,

+)

+

+register(

+ id="mo-ant-2d-v4",

+ entry_point="mo_gymnasium.envs.mujoco.ant:MOAntEnv",

+ max_episode_steps=1000,

+ kwargs={"cost_objective": False},

+)

+

+register(

+ id="mo-swimmer-v4",

+ entry_point="mo_gymnasium.envs.mujoco.swimmer:MOSwimmerEnv",

+ max_episode_steps=1000,

+)

+

+register(

+ id="mo-humanoid-v4",

+ entry_point="mo_gymnasium.envs.mujoco.humanoid:MOHumanoidEnv",

+ max_episode_steps=1000,

+)

+

register(

id="mo-reacher-v4",

entry_point="mo_gymnasium.envs.mujoco.reacher_v4:MOReacherEnv",

diff --git a/mo_gymnasium/envs/mujoco/ant.py b/mo_gymnasium/envs/mujoco/ant.py

new file mode 100644

index 00000000..cc2ba7ed

--- /dev/null

+++ b/mo_gymnasium/envs/mujoco/ant.py

@@ -0,0 +1,51 @@

+import numpy as np

+from gymnasium.envs.mujoco.ant_v4 import AntEnv

+from gymnasium.spaces import Box

+from gymnasium.utils import EzPickle

+

+

+class MOAntEnv(AntEnv, EzPickle):

+ """

+ ## Description

+ Multi-objective version of the AntEnv environment.

+

+ See [Gymnasium's env](https://gymnasium.farama.org/environments/mujoco/ant/) for more information.

+

+ The original Gymnasium's 'Ant-v4' is recovered by the following linear scalarization:

+

+ env = mo_gym.make('mo-ant-v4', cost_objective=False)

+ LinearReward(env, weight=np.array([1.0, 0.0]))

+

+ ## Reward Space

+ The reward is 2- or 3-dimensional:

+ - 0: x-velocity

+ - 1: y-velocity

+ - 2: Control cost of the action

+ If the cost_objective flag is set to False, the reward is 2-dimensional, and the cost is added to other objectives.

+ A healthy reward is added to all objectives.

+ """

+

+ def __init__(self, cost_objective=True, **kwargs):

+ super().__init__(**kwargs)

+ EzPickle.__init__(self, cost_objective, **kwargs)

+ self.cost_objetive = cost_objective

+ self.reward_dim = 3 if cost_objective else 2

+ self.reward_space = Box(low=-np.inf, high=np.inf, shape=(self.reward_dim,))

+

+ def step(self, action):

+ observation, reward, terminated, truncated, info = super().step(action)

+ x_velocity = info["x_velocity"]

+ y_velocity = info["y_velocity"]

+ cost = info["reward_ctrl"]

+ healthy_reward = info["reward_survive"]

+

+ if self.cost_objetive:

+ cost /= self._ctrl_cost_weight # Ignore the weight in the original AntEnv

+ vec_reward = np.array([x_velocity, y_velocity, cost], dtype=np.float32)

+ else:

+ vec_reward = np.array([x_velocity, y_velocity], dtype=np.float32)

+ vec_reward += cost

+

+ vec_reward += healthy_reward

+

+ return observation, vec_reward, terminated, truncated, info
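
Reviewer note: the two reward layouts in `MOAntEnv.step` can be compared without MuJoCo installed. The sketch below restates the vector construction as a pure function. `ant_vec_reward` and the numeric inputs are hypothetical and stand in for the `info` entries read in the diff; plain lists replace the `np.float32` array.

```python
def ant_vec_reward(x_velocity, y_velocity, reward_ctrl, reward_survive,
                   ctrl_cost_weight, cost_objective=True):
    """Mirror of the vector reward built in MOAntEnv.step (illustrative only)."""
    if cost_objective:
        # The control cost is its own objective, with the original weight divided out.
        vec = [x_velocity, y_velocity, reward_ctrl / ctrl_cost_weight]
    else:
        # The (weighted) control cost is folded into every velocity objective.
        vec = [x_velocity + reward_ctrl, y_velocity + reward_ctrl]
    # The healthy reward is added to all objectives in both layouts.
    return [v + reward_survive for v in vec]


three_dim = ant_vec_reward(1.0, 0.5, -0.25, 1.0, ctrl_cost_weight=0.5)
two_dim = ant_vec_reward(1.0, 0.5, -0.25, 1.0, ctrl_cost_weight=0.5, cost_objective=False)
print(three_dim, two_dim)

# Weight [1.0, 0.0] on the 2-dimensional reward keeps only the first objective.
scalar = 1.0 * two_dim[0] + 0.0 * two_dim[1]
print(scalar)
```

In the 2-dimensional layout the first objective is `x_velocity + reward_ctrl + reward_survive`, which is why the docstring pairs `cost_objective=False` with the weight `[1.0, 0.0]`.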

diff --git a/mo_gymnasium/envs/mujoco/half_cheetah_v4.py b/mo_gymnasium/envs/mujoco/half_cheetah_v4.py

index 8427cc52..0dac5308 100644

--- a/mo_gymnasium/envs/mujoco/half_cheetah_v4.py

+++ b/mo_gymnasium/envs/mujoco/half_cheetah_v4.py

@@ -11,6 +11,11 @@ class MOHalfCheehtahEnv(HalfCheetahEnv, EzPickle):

See [Gymnasium's env](https://gymnasium.farama.org/environments/mujoco/half_cheetah/) for more information.

+ The original Gymnasium's 'HalfCheetah-v4' is recovered by the following linear scalarization:

+

+ env = mo_gym.make('mo-halfcheetah-v4')

+ LinearReward(env, weight=np.array([1.0, 1.0]))

+

## Reward Space

The reward is 2-dimensional:

- 0: Reward for running forward

diff --git a/mo_gymnasium/envs/mujoco/hopper_v4.py b/mo_gymnasium/envs/mujoco/hopper_v4.py

index 7d35b408..6fe0ed3c 100644

--- a/mo_gymnasium/envs/mujoco/hopper_v4.py

+++ b/mo_gymnasium/envs/mujoco/hopper_v4.py

@@ -11,6 +11,11 @@ class MOHopperEnv(HopperEnv, EzPickle):

See [Gymnasium's env](https://gymnasium.farama.org/environments/mujoco/hopper/) for more information.

+ The original Gymnasium's 'Hopper-v4' is recovered by the following linear scalarization:

+

+ env = mo_gym.make('mo-hopper-v4', cost_objective=False)

+ LinearReward(env, weight=np.array([1.0, 0.0]))

+

## Reward Space

The reward is 3-dimensional:

- 0: Reward for going forward on the x-axis

diff --git a/mo_gymnasium/envs/mujoco/humanoid.py b/mo_gymnasium/envs/mujoco/humanoid.py

new file mode 100644

index 00000000..12518cd8

--- /dev/null

+++ b/mo_gymnasium/envs/mujoco/humanoid.py

@@ -0,0 +1,34 @@

+import numpy as np

+from gymnasium.envs.mujoco.humanoid_v4 import HumanoidEnv

+from gymnasium.spaces import Box

+from gymnasium.utils import EzPickle

+

+

+class MOHumanoidEnv(HumanoidEnv, EzPickle):

+ """

+ ## Description

+ Multi-objective version of the HumanoidEnv environment.

+

+ See [Gymnasium's env](https://gymnasium.farama.org/environments/mujoco/humanoid/) for more information.

+

+ ## Reward Space

+ The reward is 2-dimensional:

+ - 0: Reward for running forward (x-velocity)

+ - 1: Control cost of the action

+ """

+

+ def __init__(self, **kwargs):

+ super().__init__(**kwargs)

+ EzPickle.__init__(self, **kwargs)

+ self.reward_space = Box(low=-np.inf, high=np.inf, shape=(2,))

+ self.reward_dim = 2

+

+ def step(self, action):

+ observation, reward, terminated, truncated, info = super().step(action)

+ velocity = info["x_velocity"]

+ negative_cost = 10 * info["reward_quadctrl"]

+ vec_reward = np.array([velocity, negative_cost], dtype=np.float32)

+

+ vec_reward += self.healthy_reward # All objectives are penalized when the agent falls

+

+ return observation, vec_reward, terminated, truncated, info

diff --git a/mo_gymnasium/envs/mujoco/swimmer.py b/mo_gymnasium/envs/mujoco/swimmer.py

new file mode 100644

index 00000000..72e5b59e

--- /dev/null

+++ b/mo_gymnasium/envs/mujoco/swimmer.py

@@ -0,0 +1,38 @@

+import numpy as np

+from gymnasium.envs.mujoco.swimmer_v4 import SwimmerEnv

+from gymnasium.spaces import Box

+from gymnasium.utils import EzPickle

+

+

+class MOSwimmerEnv(SwimmerEnv, EzPickle):

+ """

+ ## Description

+ Multi-objective version of the SwimmerEnv environment.

+

+ See [Gymnasium's env](https://gymnasium.farama.org/environments/mujoco/swimmer/) for more information.

+

+ The original Gymnasium's 'Swimmer-v4' is recovered by the following linear scalarization:

+

+ env = mo_gym.make('mo-swimmer-v4')

+ LinearReward(env, weight=np.array([1.0, 1e-4]))

+

+ ## Reward Space

+ The reward is 2-dimensional:

+ - 0: Reward for moving forward (x-velocity)

+ - 1: Control cost of the action

+ """

+

+ def __init__(self, **kwargs):

+ super().__init__(**kwargs)

+ EzPickle.__init__(self, **kwargs)

+ self.reward_space = Box(low=-np.inf, high=np.inf, shape=(2,))

+ self.reward_dim = 2

+

+ def step(self, action):

+ observation, reward, terminated, truncated, info = super().step(action)

+ velocity = info["x_velocity"]

+ energy = -np.sum(np.square(action))

+

+ vec_reward = np.array([velocity, energy], dtype=np.float32)

+

+ return observation, vec_reward, terminated, truncated, info
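
Reviewer note: the docstring's weight vector `[1.0, 1e-4]` can be sanity-checked by hand. The sketch below assumes that the scalar Swimmer reward is the forward velocity minus `1e-4` times the squared action norm, which is what that weight vector suggests. `swimmer_vec_reward` and `linear_scalarize` are hypothetical helpers, not the library's `LinearReward` wrapper.

```python
def swimmer_vec_reward(x_velocity, action):
    """Vector reward as built in MOSwimmerEnv.step: [velocity, -sum(action^2)]."""
    energy = -sum(a * a for a in action)
    return [x_velocity, energy]


def linear_scalarize(vec_reward, weight):
    """Weighted sum of the objectives (what a linear scalarization computes)."""
    return sum(w * r for w, r in zip(weight, vec_reward))


vec = swimmer_vec_reward(0.3, [0.5, -0.5])
scalar = linear_scalarize(vec, [1.0, 1e-4])
print(vec, scalar)
```

Under that assumption, the scalarized value equals the forward reward minus the weighted control cost for the same step.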

diff --git a/mo_gymnasium/envs/mujoco/walker2d.py b/mo_gymnasium/envs/mujoco/walker2d.py

new file mode 100644

index 00000000..e3806810

--- /dev/null

+++ b/mo_gymnasium/envs/mujoco/walker2d.py

@@ -0,0 +1,35 @@

+import numpy as np

+from gymnasium.envs.mujoco.walker2d_v4 import Walker2dEnv

+from gymnasium.spaces import Box

+from gymnasium.utils import EzPickle

+

+

+class MOWalker2dEnv(Walker2dEnv, EzPickle):

+ """

+ ## Description

+ Multi-objective version of the Walker2dEnv environment.

+

+ See [Gymnasium's env](https://gymnasium.farama.org/environments/mujoco/walker2d/) for more information.

+

+ ## Reward Space

+ The reward is 2-dimensional:

+ - 0: Reward for running forward (x-velocity)

+ - 1: Control cost of the action

+ """

+

+ def __init__(self, **kwargs):

+ super().__init__(**kwargs)

+ EzPickle.__init__(self, **kwargs)

+ self.reward_space = Box(low=-np.inf, high=np.inf, shape=(2,))

+ self.reward_dim = 2

+

+ def step(self, action):

+ observation, reward, terminated, truncated, info = super().step(action)

+ velocity = info["x_velocity"]

+ energy = -np.sum(np.square(action))

+

+ vec_reward = np.array([velocity, energy], dtype=np.float32)

+

+ vec_reward += self.healthy_reward # All objectives are penalized when the agent falls

+

+ return observation, vec_reward, terminated, truncated, info

diff --git a/tests/test_envs.py b/tests/test_envs.py

index 7e338be4..28af4b0c 100644

--- a/tests/test_envs.py

+++ b/tests/test_envs.py

@@ -5,6 +5,7 @@

import pytest

from gymnasium.envs.registration import EnvSpec

from gymnasium.utils.env_checker import check_env, data_equivalence

+from gymnasium.utils.env_match import check_environments_match

import mo_gymnasium as mo_gym

@@ -40,6 +41,32 @@ def test_all_env_passive_env_checker(spec):

env.close()

+@pytest.mark.parametrize(

+ "gym_id, mo_gym_id",

+ [

+ ("MountainCar-v0", "mo-mountaincar-v0"),

+ ("MountainCarContinuous-v0", "mo-mountaincarcontinuous-v0"),

+ ("LunarLander-v2", "mo-lunar-lander-v2"),

+ # ("Reacher-v4", "mo-reacher-v4"), # use a different model and action space

+ ("Hopper-v4", "mo-hopper-v4"),

+ ("HalfCheetah-v4", "mo-halfcheetah-v4"),

+ ("Walker2d-v4", "mo-walker2d-v4"),

+ ("Ant-v4", "mo-ant-v4"),

+ ("Swimmer-v4", "mo-swimmer-v4"),

+ ("Humanoid-v4", "mo-humanoid-v4"),

+ ],

+)

+def test_gymnasium_equivalence(gym_id, mo_gym_id, num_steps=100, seed=123):

+ env = gym.make(gym_id)

+ mo_env = mo_gym.LinearReward(mo_gym.make(mo_gym_id))

+

+ # With float rewards, precision becomes an issue, so round them to 4 decimals

+ env = gym.wrappers.TransformReward(env, lambda reward: round(reward, 4))

+ mo_env = gym.wrappers.TransformReward(mo_env, lambda reward: round(reward, 4))

+

+ check_environments_match(env, mo_env, num_steps=num_steps, seed=seed, skip_rew=True, info_comparison="keys-superset")

+

+

# Note that this precludes running this test in multiple threads.

# However, we probably already can't do multithreading due to some environments.

SEED = 0


@@ -220,7 +224,7 @@ def get_obs_idx(self, obs):

*[[bd > 0] for bd in obs["bottles_dropped"]],

]

)

- return np.ravel_multi_index(multi_index, tuple([self.size, 3, 2, *([2] * (self.size - 2))]))

+ return np.ravel_multi_index(multi_index, tuple([self.size, 3, 3, *([2] * (self.size - 2))]))

def _get_obs(self):

return {
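
Reviewer note on the hunks above: the jump from 240 to 360 observations follows directly from widening `bottles_delivered` from 2 to 3 values. The sketch below redoes that arithmetic and shows why the old shape could not index the terminal state. It assumes a corridor of `size = 5` locations, which is inferred from the 240/360 figures rather than shown in this diff; `num_states` is a hypothetical helper, not part of the environment.

```python
import numpy as np

# Assumption: size = 5, inferred from 5 * 3 * 2 * 2**3 == 240 in the old code.
size = 5


def num_states(size, delivered_values):
    """Hypothetical helper: shape and size of the flattened observation index."""
    dims = (size, 3, delivered_values, *([2] * (size - 2)))
    return int(np.prod(dims)), dims


old_count, old_dims = num_states(size, 2)  # bottles_delivered in {0, 1}
new_count, new_dims = num_states(size, 3)  # bottles_delivered in {0, 1, 2}

# Terminal observation: location 4, carrying 0, delivered 2, nothing dropped.
multi_index = (4, 0, 2, 0, 0, 0)
idx = int(np.ravel_multi_index(multi_index, new_dims))

# With the old shape the same observation is out of bounds.
try:
    np.ravel_multi_index(multi_index, old_dims)
    old_shape_ok = True
except ValueError:
    old_shape_ok = False

print(old_count, new_count, idx, old_shape_ok)
```

Under these assumptions the terminal observation only maps to a valid flat index once the third dimension is 3, which is consistent with the change to `get_obs_idx`.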

diff --git a/mo_gymnasium/envs/lunar_lander/lunar_lander.py b/mo_gymnasium/envs/lunar_lander/lunar_lander.py

index 091190a9..c8d94d6a 100644

--- a/mo_gymnasium/envs/lunar_lander/lunar_lander.py

+++ b/mo_gymnasium/envs/lunar_lander/lunar_lander.py

@@ -6,6 +6,7 @@

FPS,

LEG_DOWN,

MAIN_ENGINE_POWER,

+ MAIN_ENGINE_Y_LOCATION,

SCALE,

SIDE_ENGINE_AWAY,

SIDE_ENGINE_HEIGHT,

@@ -46,7 +47,7 @@ def __init__(self, *args, **kwargs):

def step(self, action):

assert self.lander is not None

- # Update wind

+ # Update wind and apply to the lander

assert self.lander is not None, "You forgot to call reset()"

if self.enable_wind and not (self.legs[0].ground_contact or self.legs[1].ground_contact):

# the function used for wind is tanh(sin(2 k x) + sin(pi k x)),

@@ -60,12 +61,13 @@ def step(self, action):

# the function used for torque is tanh(sin(2 k x) + sin(pi k x)),

# which is proven to never be periodic, k = 0.01

- torque_mag = math.tanh(math.sin(0.02 * self.torque_idx) + (math.sin(math.pi * 0.01 * self.torque_idx))) * (

- self.turbulence_power

+ torque_mag = (

+ math.tanh(math.sin(0.02 * self.torque_idx) + (math.sin(math.pi * 0.01 * self.torque_idx)))

+ * self.turbulence_power

)

self.torque_idx += 1

self.lander.ApplyTorque(

- (torque_mag),

+ torque_mag,

True,

)

@@ -74,9 +76,15 @@ def step(self, action):

else:

assert self.action_space.contains(action), f"{action!r} ({type(action)}) invalid "

- # Engines

+ # Apply Engine Impulses

+

+ # Tip is the (X and Y) components of the rotation of the lander.

tip = (math.sin(self.lander.angle), math.cos(self.lander.angle))

+

+ # Side is the (-Y and X) components of the rotation of the lander.

side = (-tip[1], tip[0])

+

+ # Generate two random numbers between -1/SCALE and 1/SCALE.

dispersion = [self.np_random.uniform(-1.0, +1.0) / SCALE for _ in range(2)]

m_power = 0.0

@@ -87,21 +95,29 @@ def step(self, action):

assert m_power >= 0.5 and m_power <= 1.0

else:

m_power = 1.0

+

# 4 is move a bit downwards, +-2 for randomness

- ox = tip[0] * (4 / SCALE + 2 * dispersion[0]) + side[0] * dispersion[1]

- oy = -tip[1] * (4 / SCALE + 2 * dispersion[0]) - side[1] * dispersion[1]

+ # The components of the impulse to be applied by the main engine.

+ ox = tip[0] * (MAIN_ENGINE_Y_LOCATION / SCALE + 2 * dispersion[0]) + side[0] * dispersion[1]

+ oy = -tip[1] * (MAIN_ENGINE_Y_LOCATION / SCALE + 2 * dispersion[0]) - side[1] * dispersion[1]

+

impulse_pos = (self.lander.position[0] + ox, self.lander.position[1] + oy)

- p = self._create_particle(

- 3.5, # 3.5 is here to make particle speed adequate

- impulse_pos[0],

- impulse_pos[1],

- m_power,

- ) # particles are just a decoration

- p.ApplyLinearImpulse(

- (ox * MAIN_ENGINE_POWER * m_power, oy * MAIN_ENGINE_POWER * m_power),

- impulse_pos,

- True,

- )

+ if self.render_mode is not None:

+ # particles are just a decoration, with no impact on the physics, so don't add them when not rendering

+ p = self._create_particle(

+ 3.5, # 3.5 is here to make particle speed adequate

+ impulse_pos[0],

+ impulse_pos[1],

+ m_power,

+ )

+ p.ApplyLinearImpulse(

+ (

+ ox * MAIN_ENGINE_POWER * m_power,

+ oy * MAIN_ENGINE_POWER * m_power,

+ ),

+ impulse_pos,

+ True,

+ )

self.lander.ApplyLinearImpulse(

(-ox * MAIN_ENGINE_POWER * m_power, -oy * MAIN_ENGINE_POWER * m_power),

impulse_pos,

@@ -110,26 +126,39 @@ def step(self, action):

s_power = 0.0

if (self.continuous and np.abs(action[1]) > 0.5) or (not self.continuous and action in [1, 3]):

- # Orientation engines

+ # Orientation/Side engines

if self.continuous:

direction = np.sign(action[1])

s_power = np.clip(np.abs(action[1]), 0.5, 1.0)

assert s_power >= 0.5 and s_power <= 1.0

else:

+ # action = 1 is left, action = 3 is right

direction = action - 2

s_power = 1.0

+

+ # The components of the impulse to be applied by the side engines.

ox = tip[0] * dispersion[0] + side[0] * (3 * dispersion[1] + direction * SIDE_ENGINE_AWAY / SCALE)

oy = -tip[1] * dispersion[0] - side[1] * (3 * dispersion[1] + direction * SIDE_ENGINE_AWAY / SCALE)

+

+ # The literal 17 below is presumably meant to be SIDE_ENGINE_HEIGHT.

+ # However, SIDE_ENGINE_HEIGHT is defined as 14.

+ # Because of this mismatch, the position of the thrust on the body of the lander changes with the lander's orientation.

+ # This in turn results in an orientation-dependent torque being applied to the lander.

impulse_pos = (

self.lander.position[0] + ox - tip[0] * 17 / SCALE,

self.lander.position[1] + oy + tip[1] * SIDE_ENGINE_HEIGHT / SCALE,

)

- p = self._create_particle(0.7, impulse_pos[0], impulse_pos[1], s_power)

- p.ApplyLinearImpulse(

- (ox * SIDE_ENGINE_POWER * s_power, oy * SIDE_ENGINE_POWER * s_power),

- impulse_pos,

- True,

- )

+ if self.render_mode is not None:

+ # particles are just a decoration, with no impact on the physics, so don't add them when not rendering

+ p = self._create_particle(0.7, impulse_pos[0], impulse_pos[1], s_power)

+ p.ApplyLinearImpulse(

+ (

+ ox * SIDE_ENGINE_POWER * s_power,

+ oy * SIDE_ENGINE_POWER * s_power,

+ ),

+ impulse_pos,

+ True,

+ )

self.lander.ApplyLinearImpulse(

(-ox * SIDE_ENGINE_POWER * s_power, -oy * SIDE_ENGINE_POWER * s_power),

impulse_pos,

@@ -140,6 +169,7 @@ def step(self, action):

pos = self.lander.position

vel = self.lander.linearVelocity

+

state = [

(pos.x - VIEWPORT_W / SCALE / 2) / (VIEWPORT_W / SCALE / 2),

(pos.y - (self.helipad_y + LEG_DOWN / SCALE)) / (VIEWPORT_H / SCALE / 2),
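
Reviewer note on the turbulence hunk above: the reformatted `torque_mag` expression is behaviorally unchanged. A minimal standalone sketch of the same formula is given below. The function name `turbulence_torque` and the value 1.5 for the turbulence power are illustrative assumptions, not taken from this diff.

```python
import math


def turbulence_torque(idx, turbulence_power, k=0.01):
    """tanh(sin(2 k x) + sin(pi k x)) scaled by turbulence_power, as in step()."""
    return math.tanh(math.sin(2 * k * idx) + math.sin(math.pi * k * idx)) * turbulence_power


# Illustrative value only; the real environment takes this from its constructor.
power = 1.5
samples = [turbulence_torque(i, power) for i in range(1000)]

# tanh is bounded by 1 in magnitude, so the torque never exceeds turbulence_power.
print(min(samples), max(samples))
```

Since `tanh` keeps the result strictly inside (-1, 1), the applied torque is always smaller in magnitude than `turbulence_power`, whichever step index is reached.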

diff --git a/mo_gymnasium/envs/mujoco/__init__.py b/mo_gymnasium/envs/mujoco/__init__.py

index 5b605ff5..4415d577 100644

--- a/mo_gymnasium/envs/mujoco/__init__.py

+++ b/mo_gymnasium/envs/mujoco/__init__.py

@@ -39,6 +39,37 @@

kwargs={"cost_objective": False},

)

+register(

+ id="mo-walker2d-v4",

+ entry_point="mo_gymnasium.envs.mujoco.walker2d:MOWalker2dEnv",

+ max_episode_steps=1000,

+)

+

+register(

+ id="mo-ant-v4",

+ entry_point="mo_gymnasium.envs.mujoco.ant:MOAntEnv",

+ max_episode_steps=1000,

+)

+

+register(

+ id="mo-ant-2d-v4",

+ entry_point="mo_gymnasium.envs.mujoco.ant:MOAntEnv",

+ max_episode_steps=1000,

+ kwargs={"cost_objective": False},

+)

+

+register(

+ id="mo-swimmer-v4",

+ entry_point="mo_gymnasium.envs.mujoco.swimmer:MOSwimmerEnv",

+ max_episode_steps=1000,

+)

+

+register(

+ id="mo-humanoid-v4",

+ entry_point="mo_gymnasium.envs.mujoco.humanoid:MOHumanoidEnv",

+ max_episode_steps=1000,

+)

+

register(

id="mo-reacher-v4",

entry_point="mo_gymnasium.envs.mujoco.reacher_v4:MOReacherEnv",

diff --git a/mo_gymnasium/envs/mujoco/ant.py b/mo_gymnasium/envs/mujoco/ant.py

new file mode 100644

index 00000000..cc2ba7ed

--- /dev/null