diff --git a/README.md b/README.md

index 014092c..531e730 100644

--- a/README.md

+++ b/README.md

@@ -20,10 +20,11 @@

- Built-in API **Caching** System (In Memory, **Redis**)

- Built-in **Authentication** Classes

- Built-in **Permission** Classes

+- Built-in Visual API **Monitoring** (In Terminal)

- Support Custom **Background Tasks**

- Support Custom **Middlewares**

- Support Custom **Throttling**

-- Visual API **Monitoring** (In Terminal)

+- Support **Function-Based** and **Class-Based** APIs

- It's One Of The **Fastest Python Frameworks**

---

@@ -176,6 +177,12 @@ $ pip install panther

---

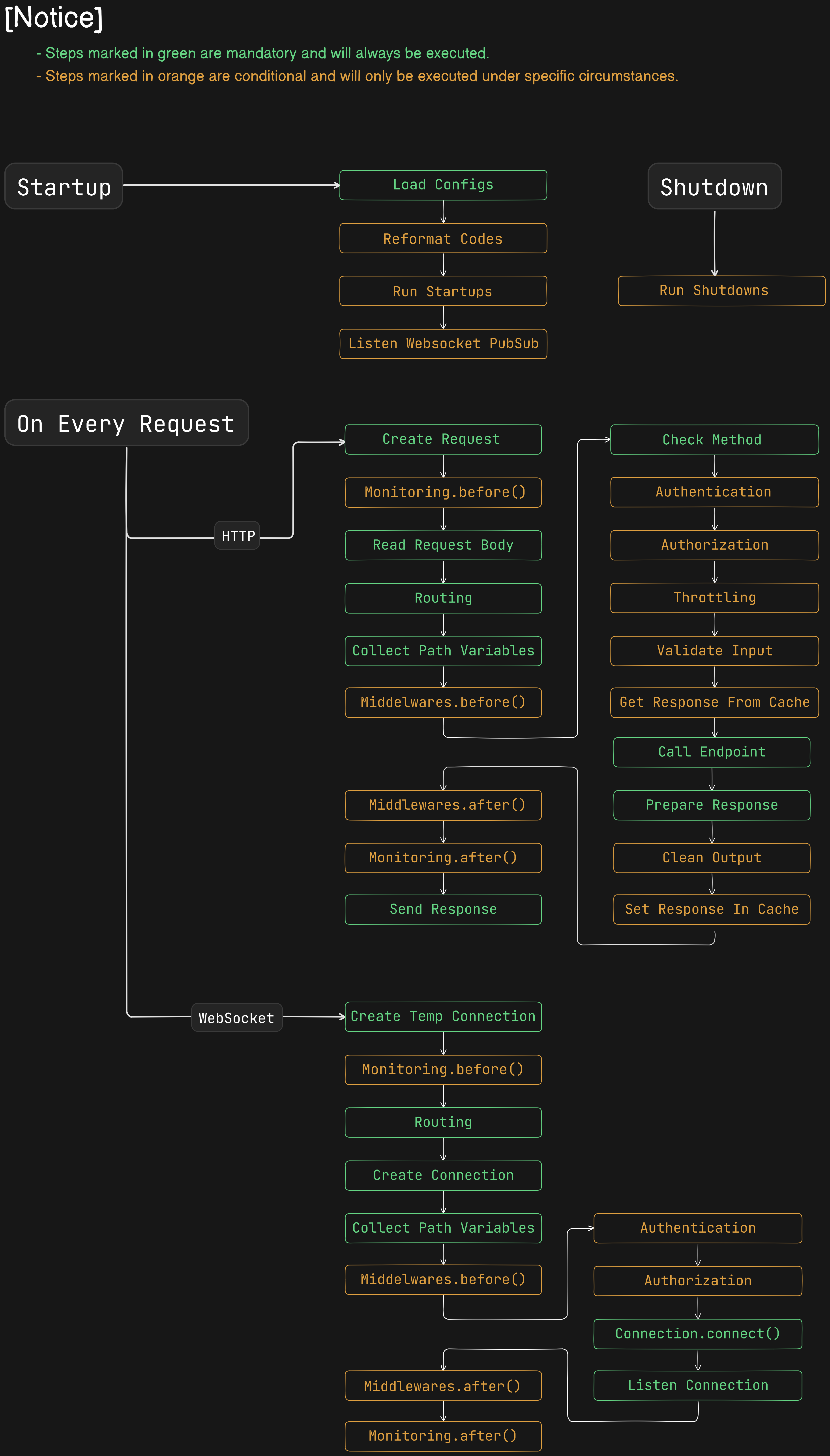

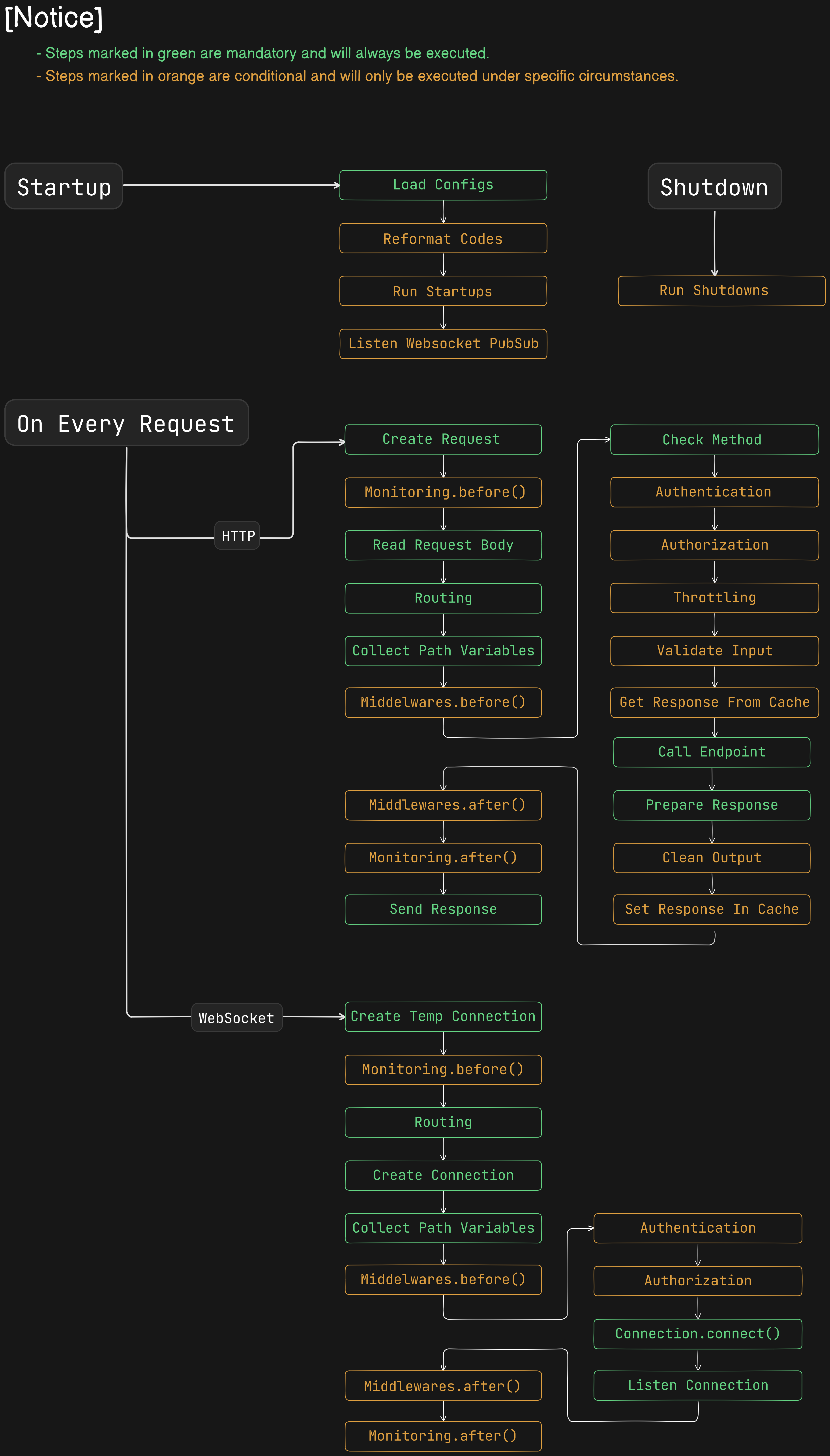

+### How Panther Works!

+

+

+

+---

+

### Roadmap

diff --git a/docs/docs/authentications.md b/docs/docs/authentications.md

index 3b2efe6..6563086 100644

--- a/docs/docs/authentications.md

+++ b/docs/docs/authentications.md

@@ -3,12 +3,20 @@

> Type: `str`

>

> Default: `None`

-

-You can set your Authentication class in `configs`, now, if you set `auth=True` in `@API()`, Panther will use this class for authentication of every `API`, and put the `user` in `request.user` or `raise HTTP_401_UNAUTHORIZED`

-We implemented a built-in authentication class which used `JWT` for authentication.

+You can set the Authentication class in your `configs`

+

+Panther uses it to authenticate every API/WS if `auth=True`, and gives you the user or raises `HTTP_401_UNAUTHORIZED`

+

+The `user` will be in `request.user` in APIs and in `self.user` in WSs

+

+---

+

+We implemented 2 built-in authentication classes which use `JWT` for authentication.

+

But, You can use your own custom authentication class too.

+---

### JWTAuthentication

@@ -17,7 +25,7 @@ This class will

- Get the `token` from `Authorization` header of request.

- Check the `Bearer`

- `decode` the `token`

-- Find the matched `user` (It uses the `USER_MODEL`)

+- Find the matched `user`

> `JWTAuthentication` is going to use `panther.db.models.BaseUser` if you didn't set the `USER_MODEL` in your `configs`

@@ -59,9 +67,7 @@ JWTConfig = {

#### Websocket Authentication

-This class is very useful when you are trying to authenticate the user in websocket

-

-Add this into your `configs`:

+`QueryParamJWTAuthentication` is very useful when you need to authenticate the user in a websocket. Just add this to your `configs`:

```python

WS_AUTHENTICATION = 'panther.authentications.QueryParamJWTAuthentication'

```

@@ -77,7 +83,9 @@ WS_AUTHENTICATION = 'panther.authentications.QueryParamJWTAuthentication'

- Or raise `panther.exceptions.AuthenticationAPIError`

-- Address it in `configs`

- - `AUTHENTICATION = 'project_name.core.authentications.CustomAuthentication'`

+- Add it into your `configs`

+ ```python

+ AUTHENTICATION = 'project_name.core.authentications.CustomAuthentication'

+ ```

-> You can see the source code of JWTAuthentication [[here]](https://github.com/AliRn76/panther/blob/da2654ccdd83ebcacda91a1aaf51d5aeb539eff5/panther/authentications.py#L38)

\ No newline at end of file

+> You can see the source code of JWTAuthentication [[here]](https://github.com/AliRn76/panther/blob/da2654ccdd83ebcacda91a1aaf51d5aeb539eff5/panther/authentications.py)

\ No newline at end of file
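The first two steps listed for `JWTAuthentication` in authentications.md (read the `Authorization` header, check the `Bearer` prefix) can be sketched in plain Python. This is only an illustration under stated assumptions, not Panther's implementation: `extract_bearer_token` is a hypothetical helper, and the case-insensitive prefix check is an assumption. Decoding the token and finding the user are left out.

```python
from typing import Optional


def extract_bearer_token(authorization_header: Optional[str]) -> str:
    """Return the token from an 'Authorization: Bearer <token>' header value.

    Hypothetical helper for illustration; it is not part of Panther.
    """
    if not authorization_header:
        raise ValueError('Authorization header is missing')

    # Expect exactly two whitespace-separated parts: the scheme and the token.
    parts = authorization_header.split()
    if len(parts) != 2 or parts[0].lower() != 'bearer':
        raise ValueError('Authorization header must look like "Bearer <token>"')

    return parts[1]


token = extract_bearer_token('Bearer abc.def.ghi')
```

A custom authentication class could perform a check like this before decoding the token, and raise `panther.exceptions.AuthenticationAPIError` instead of `ValueError`.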

diff --git a/docs/docs/background_tasks.md b/docs/docs/background_tasks.md

index 670f455..b5057f3 100644

--- a/docs/docs/background_tasks.md

+++ b/docs/docs/background_tasks.md

@@ -110,7 +110,7 @@ Panther is going to run the `background tasks` as a thread in the background on

task = BackgroundTask(do_something, 'Ali', 26)

```

-- > Default interval is 1.

+- > Default value of `interval()` is 1.

- > The -1 interval means infinite,

diff --git a/docs/docs/class_first_crud.md b/docs/docs/class_first_crud.md

index 9c81906..274cc28 100644

--- a/docs/docs/class_first_crud.md

+++ b/docs/docs/class_first_crud.md

@@ -1,6 +1,6 @@

We assume you could run the project with [Introduction](https://pantherpy.github.io/#installation)

-Now let's write custom API `Create`, `Retrieve`, `Update` and `Delete` for a `Book`:

+Now let's write custom APIs to `Create`, `Retrieve`, `Update` and `Delete` a `Book`:

## Structure & Requirements

### Create Model

@@ -82,7 +82,7 @@ Now we are going to create a book on `post` request, We need to:

class BookAPI(GenericAPI):

- def post(self):

+ async def post(self):

...

```

@@ -95,7 +95,7 @@ Now we are going to create a book on `post` request, We need to:

class BookAPI(GenericAPI):

- def post(self, request: Request):

+ async def post(self, request: Request):

...

```

@@ -122,7 +122,7 @@ Now we are going to create a book on `post` request, We need to:

class BookAPI(GenericAPI):

input_model = BookSerializer

- def post(self, request: Request):

+ async def post(self, request: Request):

...

```

@@ -138,7 +138,7 @@ Now we are going to create a book on `post` request, We need to:

class BookAPI(GenericAPI):

input_model = BookSerializer

- def post(self, request: Request):

+ async def post(self, request: Request):

body: BookSerializer = request.validated_data

...

```

@@ -156,9 +156,9 @@ Now we are going to create a book on `post` request, We need to:

class BookAPI(GenericAPI):

input_model = BookSerializer

- def post(self, request: Request):

+ async def post(self, request: Request):

body: BookSerializer = request.validated_data

- Book.insert_one(

+ await Book.insert_one(

name=body.name,

author=body.author,

pages_count=body.pages_count,

@@ -180,15 +180,14 @@ Now we are going to create a book on `post` request, We need to:

class BookAPI(GenericAPI):

input_model = BookSerializer

- def post(self, request: Request):

+ async def post(self, request: Request):

body: BookSerializer = request.validated_data

- book = Book.insert_one(

+ book = await Book.insert_one(

name=body.name,

author=body.author,

pages_count=body.pages_count,

)

return Response(data=book, status_code=status.HTTP_201_CREATED)

-

```

> The response.data can be `Instance of Models`, `dict`, `str`, `tuple`, `list`, `str` or `None`

@@ -213,11 +212,11 @@ from app.models import Book

class BookAPI(GenericAPI):

input_model = BookSerializer

- def post(self, request: Request):

+ async def post(self, request: Request):

...

- def get(self):

- books: list[Book] = Book.find()

+ async def get(self):

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

```

@@ -256,11 +255,11 @@ Assume we don't want to return field `author` in response:

input_model = BookSerializer

output_model = BookOutputSerializer

- def post(self, request: Request):

+ async def post(self, request: Request):

...

- def get(self):

- books: list[Book] = Book.find()

+ async def get(self):

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

```

@@ -292,11 +291,11 @@ class BookAPI(GenericAPI):

cache = True

cache_exp_time = timedelta(seconds=10)

- def post(self, request: Request):

+ async def post(self, request: Request):

...

- def get(self):

- books: list[Book] = Book.find()

+ async def get(self):

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

```

@@ -329,11 +328,11 @@ class BookAPI(GenericAPI):

cache_exp_time = timedelta(seconds=10)

throttling = Throttling(rate=10, duration=timedelta(minutes=1))

- def post(self, request: Request):

+ async def post(self, request: Request):

...

- def get(self):

- books: list[Book] = Book.find()

+ async def get(self):

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

```

@@ -383,8 +382,8 @@ For `retrieve`, `update` and `delete` API, we are going to

class SingleBookAPI(GenericAPI):

- def get(self, book_id: int):

- if book := Book.find_one(id=book_id):

+ async def get(self, book_id: int):

+ if book := await Book.find_one(id=book_id):

return Response(data=book, status_code=status.HTTP_200_OK)

else:

return Response(status_code=status.HTTP_404_NOT_FOUND)

@@ -406,11 +405,11 @@ from app.serializers import BookSerializer

class SingleBookAPI(GenericAPI):

input_model = BookSerializer

- def get(self, book_id: int):

+ async def get(self, book_id: int):

...

- def put(self, request: Request, book_id: int):

- is_updated: bool = Book.update_one({'id': book_id}, request.validated_data.model_dump())

+ async def put(self, request: Request, book_id: int):

+ is_updated: bool = await Book.update_one({'id': book_id}, request.validated_data.model_dump())

data = {'is_updated': is_updated}

return Response(data=data, status_code=status.HTTP_202_ACCEPTED)

```

@@ -431,14 +430,14 @@ from app.models import Book

class SingleBookAPI(GenericAPI):

input_model = BookSerializer

- def get(self, book_id: int):

+ async def get(self, book_id: int):

...

- def put(self, request: Request, book_id: int):

+ async def put(self, request: Request, book_id: int):

...

- def delete(self, book_id: int):

- is_deleted: bool = Book.delete_one(id=book_id)

+ async def delete(self, book_id: int):

+ is_deleted: bool = await Book.delete_one(id=book_id)

if is_deleted:

return Response(status_code=status.HTTP_204_NO_CONTENT)

else:

diff --git a/docs/docs/configs.md b/docs/docs/configs.md

index 294ef32..3a37775 100644

--- a/docs/docs/configs.md

+++ b/docs/docs/configs.md

@@ -7,12 +7,14 @@ Panther collect all the configs from your `core/configs.py` or the module you pa

> Type: `bool` (Default: `False`)

It should be `True` if you want to use `panther monitor` command

-and see the monitoring logs

+and watch the monitoring

If `True`:

- Log every request in `logs/monitoring.log`

+_Requires [watchfiles](https://watchfiles.helpmanual.io) package._

+

---

### [LOG_QUERIES](https://pantherpy.github.io/log_queries)

> Type: `bool` (Default: `False`)

@@ -33,6 +35,8 @@ List of middlewares you want to use

Every request goes through `authentication()` method of this `class` (if `auth = True`)

+_Requires [python-jose](https://python-jose.readthedocs.io/en/latest/) package._

+

_Example:_ `AUTHENTICATION = 'panther.authentications.JWTAuthentication'`

---

@@ -119,6 +123,8 @@ It will reformat your code on every reload (on every change if you run the proje

You may want to write your custom `ruff.toml` in root of your project.

+_Requires [ruff](https://docs.astral.sh/ruff/) package._

+

Reference: [https://docs.astral.sh/ruff/formatter/](https://docs.astral.sh/ruff/formatter/)

_Example:_ `AUTO_REFORMAT = True`

@@ -133,4 +139,16 @@ We use it to create `database` connection

### [REDIS](https://pantherpy.github.io/redis)

> Type: `dict` (Default: `{}`)

+_Requires [redis](https://redis-py.readthedocs.io/en/stable/) package._

+

We use it to create `redis` connection

+

+

+---

+### [TIMEZONE](https://pantherpy.github.io/timezone)

+> Type: `str` (Default: `'UTC'`)

+

+Used in `panther.utils.timezone_now()` which returns a `datetime` based on your `timezone`

+

+`panther.utils.timezone_now()` is in turn used in `BaseUser.date_created` and `BaseUser.last_login`

+

diff --git a/docs/docs/events.md b/docs/docs/events.md

new file mode 100644

index 0000000..bf7a21e

--- /dev/null

+++ b/docs/docs/events.md

@@ -0,0 +1,29 @@

+## Startup Event

+

+Use `Event.startup` decorator

+

+```python

+from panther.events import Event

+

+

+@Event.startup

+def do_something_on_startup():

+ print('Hello, I am at startup')

+```

+

+## Shutdown Event

+

+```python

+from panther.events import Event

+

+

+@Event.shutdown

+def do_something_on_shutdown():

+ print('Good Bye, I am at shutdown')

+```

+

+

+## Notice

+

+- You can have **multiple** events on `startup` and `shutdown`

+- Events can be `sync` or `async`
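The two notices in events.md (multiple handlers per event, `sync` or `async`) can be illustrated with a small registry. This is a minimal sketch assuming nothing about Panther's internals: `EventRegistry`, `run_startups` and `run_shutdowns` are hypothetical names, and only the decorator style mirrors `Event.startup` and `Event.shutdown`.

```python
import asyncio
import inspect
from typing import Callable, List


class EventRegistry:
    """Hypothetical stand-in for an event registry; not Panther's own class."""

    def __init__(self) -> None:
        self.startups: List[Callable] = []
        self.shutdowns: List[Callable] = []

    def startup(self, func: Callable) -> Callable:
        # Register the handler and return it unchanged, so it works as a decorator.
        self.startups.append(func)
        return func

    def shutdown(self, func: Callable) -> Callable:
        self.shutdowns.append(func)
        return func

    @staticmethod
    async def _run_all(handlers: List[Callable]) -> None:
        for handler in handlers:
            result = handler()
            # Async handlers return an awaitable; sync handlers have already run.
            if inspect.isawaitable(result):
                await result

    async def run_startups(self) -> None:
        await self._run_all(self.startups)

    async def run_shutdowns(self) -> None:
        await self._run_all(self.shutdowns)


events = EventRegistry()
calls: List[str] = []


@events.startup
def sync_startup():
    calls.append('sync startup')


@events.startup
async def async_startup():
    calls.append('async startup')


@events.shutdown
def on_shutdown():
    calls.append('shutdown')


async def main() -> None:
    await events.run_startups()
    await events.run_shutdowns()


asyncio.run(main())
```

In this sketch, handlers run in registration order, and the `inspect.isawaitable` check is what lets sync and async handlers share one list.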

diff --git a/docs/docs/function_first_crud.md b/docs/docs/function_first_crud.md

index 3c83565..dd97e3b 100644

--- a/docs/docs/function_first_crud.md

+++ b/docs/docs/function_first_crud.md

@@ -1,6 +1,6 @@

We assume you could run the project with [Introduction](https://pantherpy.github.io/#installation)

-Now let's write custom API `Create`, `Retrieve`, `Update` and `Delete` for a `Book`:

+Now let's write custom APIs to `Create`, `Retrieve`, `Update` and `Delete` a `Book`:

## Structure & Requirements

### Create Model

@@ -142,7 +142,7 @@ Now we are going to create a book on `post` request, We need to:

async def book_api(request: Request):

body: BookSerializer = request.validated_data

- Book.insert_one(

+ await Book.insert_one(

name=body.name,

author=body.author,

pages_count=body.pages_count,

@@ -165,7 +165,7 @@ Now we are going to create a book on `post` request, We need to:

if request.method == 'POST':

body: BookSerializer = request.validated_data

- Book.create(

+ await Book.insert_one(

name=body.name,

author=body.author,

pages_count=body.pages_count,

@@ -189,7 +189,7 @@ Now we are going to create a book on `post` request, We need to:

if request.method == 'POST':

body: BookSerializer = request.validated_data

- book: Book = Book.create(

+ book: Book = await Book.insert_one(

name=body.name,

author=body.author,

pages_count=body.pages_count,

@@ -224,7 +224,7 @@ async def book_api(request: Request):

...

elif request.method == 'GET':

- books: list[Book] = Book.find()

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

return Response(status_code=status.HTTP_501_NOT_IMPLEMENTED)

@@ -265,7 +265,7 @@ Assume we don't want to return field `author` in response:

...

elif request.method == 'GET':

- books: list[Book] = Book.find()

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

return Response(status_code=status.HTTP_501_NOT_IMPLEMENTED)

@@ -299,7 +299,7 @@ async def book_api(request: Request):

...

elif request.method == 'GET':

- books: list[Book] = Book.find()

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

return Response(status_code=status.HTTP_501_NOT_IMPLEMENTED)

@@ -338,7 +338,7 @@ async def book_api(request: Request):

...

elif request.method == 'GET':

- books: list[Book] = Book.find()

+ books = await Book.find()

return Response(data=books, status_code=status.HTTP_200_OK)

return Response(status_code=status.HTTP_501_NOT_IMPLEMENTED)

@@ -393,7 +393,7 @@ For `retrieve`, `update` and `delete` API, we are going to

@API()

async def single_book_api(request: Request, book_id: int):

if request.method == 'GET':

- if book := Book.find_one(id=book_id):

+ if book := await Book.find_one(id=book_id):

return Response(data=book, status_code=status.HTTP_200_OK)

else:

return Response(status_code=status.HTTP_404_NOT_FOUND)

@@ -420,12 +420,12 @@ For `retrieve`, `update` and `delete` API, we are going to

if request.method == 'GET':

...

elif request.method == 'PUT':

- book: Book = Book.find_one(id=book_id)

- book.update(

+ book: Book = await Book.find_one(id=book_id)

+ await book.update(

name=body.name,

author=body.author,

pages_count=body.pages_count

- )

+ )

return Response(status_code=status.HTTP_202_ACCEPTED)

```

@@ -446,7 +446,7 @@ For `retrieve`, `update` and `delete` API, we are going to

if request.method == 'GET':

...

elif request.method == 'PUT':

- is_updated: bool = Book.update_one({'id': book_id}, request.validated_data.model_dump())

+ is_updated: bool = await Book.update_one({'id': book_id}, request.validated_data.model_dump())

data = {'is_updated': is_updated}

return Response(data=data, status_code=status.HTTP_202_ACCEPTED)

```

@@ -468,7 +468,7 @@ For `retrieve`, `update` and `delete` API, we are going to

if request.method == 'GET':

...

elif request.method == 'PUT':

- updated_count: int = Book.update_many({'id': book_id}, request.validated_data.model_dump())

+ updated_count: int = await Book.update_many({'id': book_id}, request.validated_data.model_dump())

data = {'updated_count': updated_count}

return Response(data=data, status_code=status.HTTP_202_ACCEPTED)

```

@@ -496,7 +496,7 @@ For `retrieve`, `update` and `delete` API, we are going to

elif request.method == 'PUT':

...

elif request.method == 'DELETE':

- is_deleted: bool = Book.delete_one(id=book_id)

+ is_deleted: bool = await Book.delete_one(id=book_id)

if is_deleted:

return Response(status_code=status.HTTP_204_NO_CONTENT)

else:

@@ -520,7 +520,7 @@ For `retrieve`, `update` and `delete` API, we are going to

elif request.method == 'PUT':

...

elif request.method == 'DELETE':

- is_deleted: bool = Book.delete_one(id=book_id)

+ is_deleted: bool = await Book.delete_one(id=book_id)

return Response(status_code=status.HTTP_204_NO_CONTENT)

```

@@ -542,6 +542,6 @@ For `retrieve`, `update` and `delete` API, we are going to

elif request.method == 'PUT':

...

elif request.method == 'DELETE':

- deleted_count: int = Book.delete_many(id=book_id)

+ deleted_count: int = await Book.delete_many(id=book_id)

return Response(status_code=status.HTTP_204_NO_CONTENT)

```

\ No newline at end of file

diff --git a/docs/docs/generic_crud.md b/docs/docs/generic_crud.md

new file mode 100644

index 0000000..ab6ed28

--- /dev/null

+++ b/docs/docs/generic_crud.md

@@ -0,0 +1,313 @@

+We assume you could run the project with [Introduction](https://pantherpy.github.io/#installation)

+

+Now let's write custom APIs to `Create`, `Retrieve`, `Update` and `Delete` a `Book`:

+

+## Structure & Requirements

+### Create Model

+

+Create a model named `Book` in `app/models.py`:

+

+```python

+from panther.db import Model

+

+

+class Book(Model):

+ name: str

+ author: str

+ pages_count: int

+```

+

+### Create API Class

+

+Create the `BookAPI()` in `app/apis.py`:

+

+```python

+from panther.app import GenericAPI

+

+

+class BookAPI(GenericAPI):

+ ...

+```

+

+> We are going to complete it later ...

+

+### Update URLs

+

+Add the `BookAPI` in `app/urls.py`:

+

+```python

+from app.apis import BookAPI

+

+

+urls = {

+ 'book/': BookAPI,

+}

+```

+

+We assume that the `urls` in `core/urls.py` points to `app/urls.py`, like below:

+

+```python

+from app.urls import urls as app_urls

+

+

+urls = {

+ '/': app_urls,

+}

+```

+

+### Add Database

+

+Add `DATABASE` in `configs`; we are going to use `pantherdb`

+> [PantherDB](https://github.com/PantherPy/PantherDB/#readme) is a Simple, File-Based and Document-Oriented database

+

+```python

+...

+DATABASE = {

+ 'engine': {

+ 'class': 'panther.db.connections.PantherDBConnection',

+ }

+}

+...

+```

+

+## APIs

+### API - Create a Book

+

+Now we are going to create a book on `POST` request. We need to:

+

+1. Inherit from `CreateAPI`:

+ ```python

+ from panther.generics import CreateAPI

+

+

+ class BookAPI(CreateAPI):

+ ...

+ ```

+

+2. Create a ModelSerializer in `app/serializers.py`, for `validation` of the `request.data`:

+

+ ```python

+ from panther.serializer import ModelSerializer

+ from app.models import Book

+

+ class BookSerializer(ModelSerializer):

+ class Config:

+ model = Book

+ fields = ['name', 'author', 'pages_count']

+ ```

+

+3. Set the created serializer in `BookAPI` as `input_model`:

+

+ ```python

+ from panther.generics import CreateAPI

+ from app.serializers import BookSerializer

+

+

+ class BookAPI(CreateAPI):

+ input_model = BookSerializer

+ ```

+

+It is going to create a `Book` with the incoming `request.data` and return that instance to the user with a status code of `201`

+

+

+### API - List of Books

+

+Let's return a list of books on `GET` request. We need to:

+

+1. Inherit from `ListAPI`

+ ```python

+ from panther.generics import CreateAPI, ListAPI

+ from app.serializers import BookSerializer

+

+ class BookAPI(CreateAPI, ListAPI):

+ input_model = BookSerializer

+ ...

+ ```

+

+2. Define the `objects` method, so the `ListAPI` knows which books to return

+

+ ```python

+ from panther.generics import CreateAPI, ListAPI

+ from panther.request import Request

+

+ from app.models import Book

+ from app.serializers import BookSerializer

+

+ class BookAPI(CreateAPI, ListAPI):

+ input_model = BookSerializer

+

+ async def objects(self, request: Request, **kwargs):

+ return await Book.find()

+ ```

+

+### Pagination, Search, Filter, Sort

+

+#### Pagination

+

+Use `panther.pagination.Pagination` as `pagination`

+

+**Usage:** It will look for the `limit` and `skip` in the `query params` and return its own response template

+

+**Example:** `?limit=10&skip=20`

+

+---

+

+#### Search

+

+Define the fields you want the search to query on in `search_fields`

+

+The value of `search_fields` should be `list`

+

+**Usage:** It will look for the `search` query param and query the `search_fields` with its value

+

+**Example:** `?search=maybe_name_of_the_book_or_author`

+

+---

+

+#### Filter

+

+Define the fields you want to be filterable in `filter_fields`

+

+The value of `filter_fields` should be `list`

+

+**Usage:** It will look for each value of the `filter_fields` in the `query params` and query on them

+

+**Example:** `?name=name_of_the_book&author=author_of_the_book`

+

+---

+

+

+#### Sort

+

+Define the fields you want to be sortable in `sort_fields`

+

+The value of `sort_fields` should be `list`

+

+

+

+**Usage:** It will look for the `sort` query param and sort by the listed fields that are in `sort_fields`

+

+**Example:** `?sort=pages_count,-name`

+

+**Notice:**

+ - fields should be separated with a comma `,`

+ - use `field_name` for ascending sort

+ - use `-field_name` for descending sort

+---

+

+#### Example

+```python

+from panther.generics import CreateAPI, ListAPI

+from panther.pagination import Pagination

+from panther.request import Request

+

+from app.models import Book

+from app.serializers import BookSerializer, BookOutputSerializer

+

+class BookAPI(CreateAPI, ListAPI):

+ input_model = BookSerializer

+ output_model = BookOutputSerializer

+ pagination = Pagination

+ search_fields = ['name', 'author']

+ filter_fields = ['name', 'author']

+ sort_fields = ['name', 'pages_count']

+

+ async def objects(self, request: Request, **kwargs):

+ return await Book.find()

+```

+

+

+### API - Retrieve a Book

+

+Now we are going to retrieve a book on `GET` request. We need to:

+

+1. Inherit from `RetrieveAPI`:

+ ```python

+ from panther.generics import RetrieveAPI

+

+

+ class SingleBookAPI(RetrieveAPI):

+ ...

+ ```

+

+2. Define the `object` method, so the `RetrieveAPI` knows which book to return

+

+ ```python

+ from panther.generics import RetrieveAPI

+ from panther.request import Request

+ from app.models import Book

+

+

+ class SingleBookAPI(RetrieveAPI):

+ async def object(self, request: Request, **kwargs):

+ return await Book.find_one_or_raise(id=kwargs['book_id'])

+ ```

+

+3. Add it in `app/urls.py`:

+

+ ```python

+ from app.apis import BookAPI, SingleBookAPI

+

+

+ urls = {

+ 'book/': BookAPI,

+ 'book/<book_id>/': SingleBookAPI,

+ }

+ ```

+

+ > You should write the [Path Variable](https://pantherpy.github.io/urls/#path-variables-are-handled-like-below) in `<` and `>`

+

+

+### API - Update a Book

+

+1. Inherit from `UpdateAPI`

+

+ ```python

+ from panther.generics import RetrieveAPI, UpdateAPI

+ from panther.request import Request

+ from app.models import Book

+

+

+ class SingleBookAPI(RetrieveAPI, UpdateAPI):

+ ...

+

+ async def object(self, request: Request, **kwargs):

+ return await Book.find_one_or_raise(id=kwargs['book_id'])

+ ```

+

+2. Add `input_model` so the `UpdateAPI` knows how to validate the `request.data`

+> We use the same serializer we defined above for `CreateAPI`

+

+ ```python

+ from panther.generics import RetrieveAPI, UpdateAPI

+ from panther.request import Request

+ from app.models import Book

+ from app.serializers import BookSerializer

+

+

+ class SingleBookAPI(RetrieveAPI, UpdateAPI):

+ input_model = BookSerializer

+

+ async def object(self, request: Request, **kwargs):

+ return await Book.find_one_or_raise(id=kwargs['book_id'])

+ ```

+

+### API - Delete a Book

+

+1. Inherit from `DeleteAPI`

+

+ ```python

+ from panther.generics import RetrieveAPI, UpdateAPI, DeleteAPI

+ from panther.request import Request

+ from app.models import Book

+ from app.serializers import BookSerializer

+

+

+ class SingleBookAPI(RetrieveAPI, UpdateAPI, DeleteAPI):

+ input_model = BookSerializer

+

+ async def object(self, request: Request, **kwargs):

+ return await Book.find_one_or_raise(id=kwargs['book_id'])

+ ```

+

+2. It requires `object` method which we defined before, so it's done.
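The `sort` query param described in the Sort section of generic_crud.md (`?sort=pages_count,-name`) can be parsed as sketched below. This is an illustration only, not the code Panther runs: `parse_sort_param` is a hypothetical helper, and silently ignoring fields that are not in `sort_fields` is an assumption.

```python
from typing import List, Tuple


def parse_sort_param(sort_value: str, sort_fields: List[str]) -> List[Tuple[str, int]]:
    """Turn 'pages_count,-name' into [('pages_count', 1), ('name', -1)].

    Hypothetical helper for illustration; it is not part of Panther.
    """
    result: List[Tuple[str, int]] = []
    for raw in sort_value.split(','):
        raw = raw.strip()
        if not raw:
            continue
        # A leading '-' means descending (-1); otherwise ascending (1).
        if raw.startswith('-'):
            field, direction = raw[1:], -1
        else:
            field, direction = raw, 1
        # Assumption: fields that are not declared as sortable are skipped.
        if field in sort_fields:
            result.append((field, direction))
    return result


sort_spec = parse_sort_param('pages_count,-name', ['name', 'pages_count'])
```

The `(field, direction)` pairs have the same shape as the list passed to `.sort()` in the `skip, limit and sort` example of panther_odm.md.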

diff --git a/docs/docs/images/diagram.png b/docs/docs/images/diagram.png

new file mode 100644

index 0000000..2c60eaa

Binary files /dev/null and b/docs/docs/images/diagram.png differ

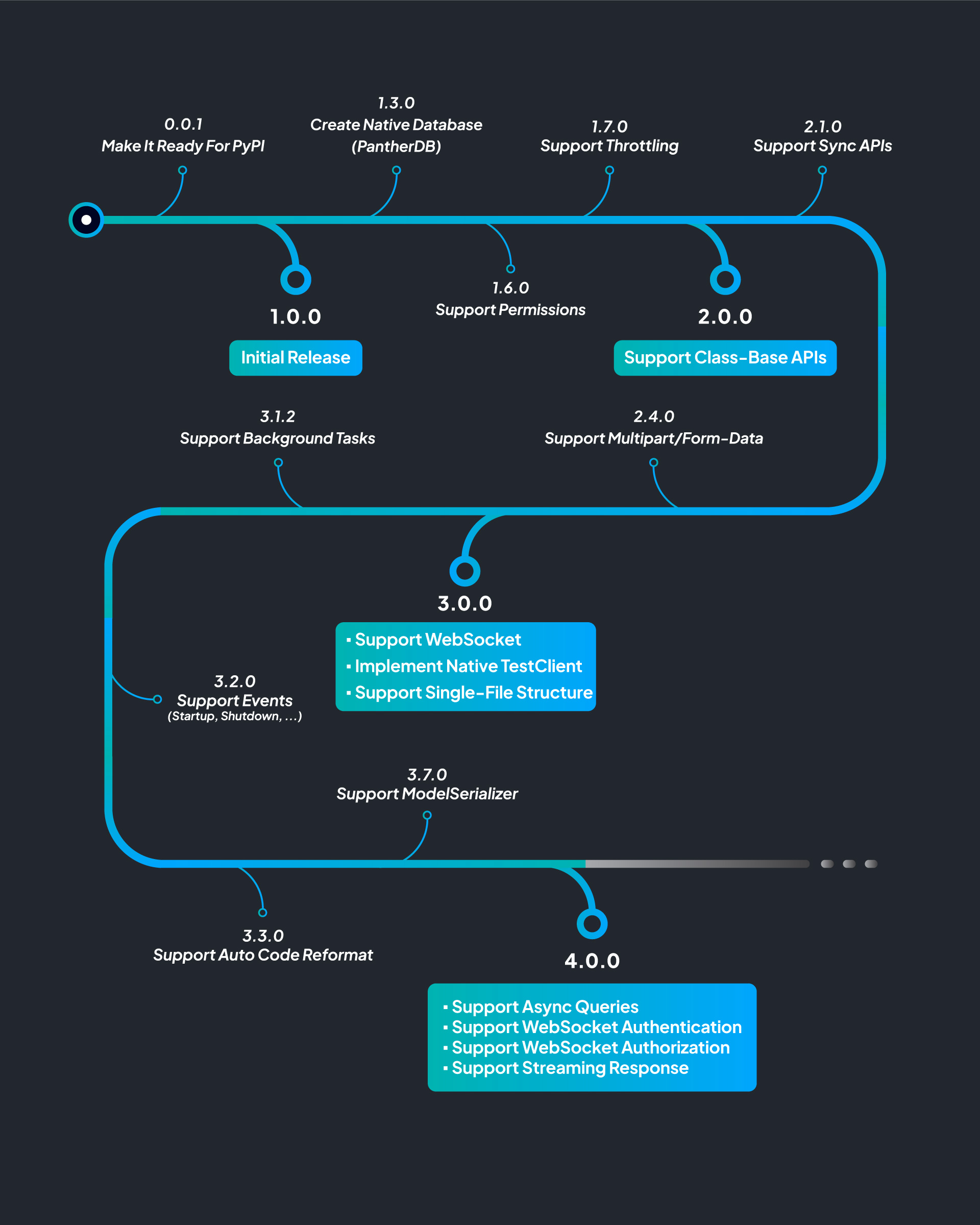

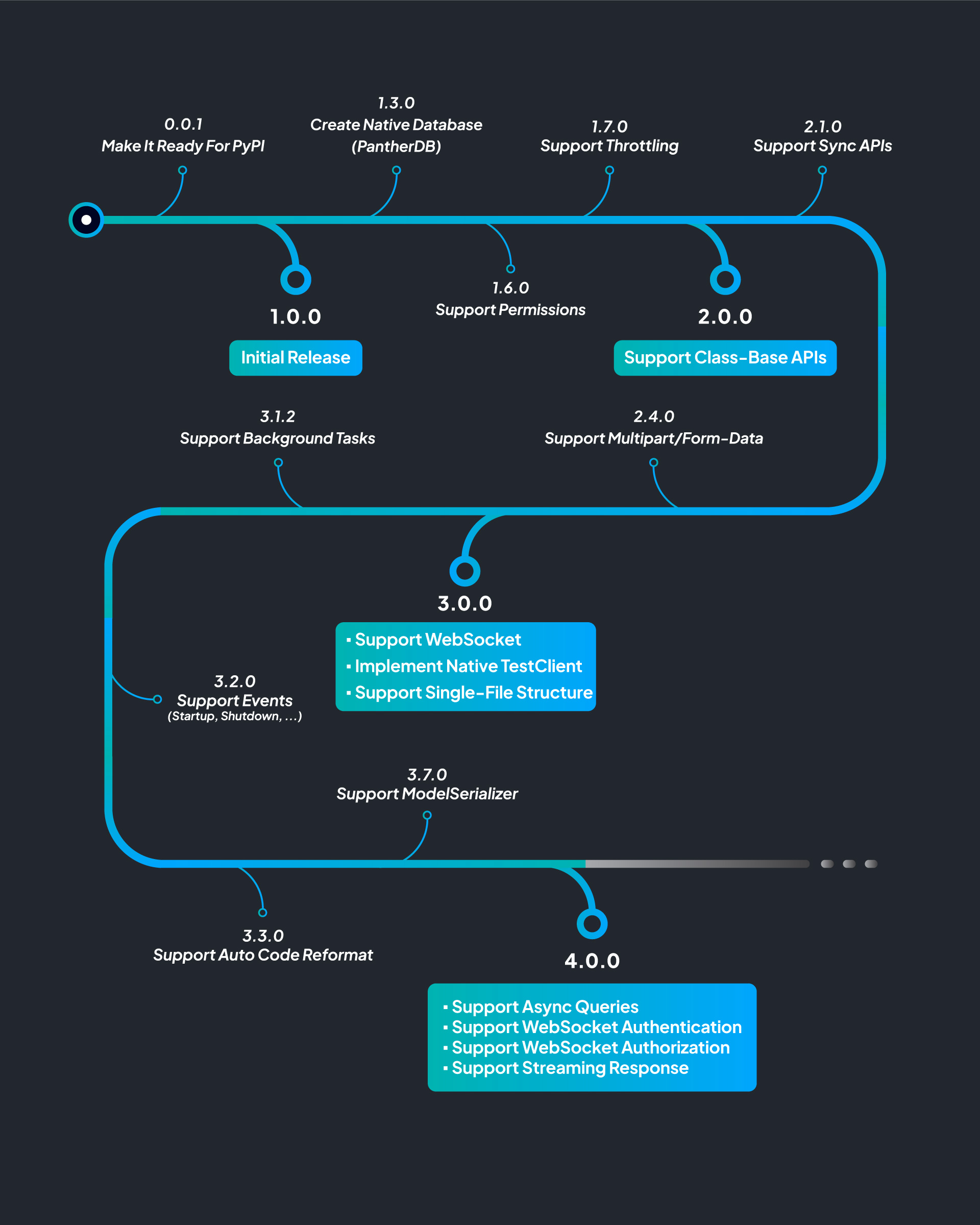

diff --git a/docs/docs/images/roadmap.jpg b/docs/docs/images/roadmap.jpg

index 5eb7378..cf376ac 100644

Binary files a/docs/docs/images/roadmap.jpg and b/docs/docs/images/roadmap.jpg differ

diff --git a/docs/docs/index.md b/docs/docs/index.md

index d59067b..03b4f38 100644

--- a/docs/docs/index.md

+++ b/docs/docs/index.md

@@ -21,10 +21,11 @@

- Built-in API **Caching** System (In Memory, **Redis**)

- Built-in **Authentication** Classes

- Built-in **Permission** Classes

+- Built-in Visual API **Monitoring** (In Terminal)

- Support Custom **Background Tasks**

- Support Custom **Middlewares**

- Support Custom **Throttling**

-- Visual API **Monitoring** (In Terminal)

+- Support **Function-Based** and **Class-Based** APIs

- It's One Of The **Fastest Python Frameworks**

---

@@ -198,6 +199,12 @@

---

+### How Panther Works!

+

+

+

+---

+

### Roadmap

diff --git a/docs/docs/middlewares.md b/docs/docs/middlewares.md

index e745153..5fd2a1d 100644

--- a/docs/docs/middlewares.md

+++ b/docs/docs/middlewares.md

@@ -14,8 +14,11 @@

## Custom Middleware

### Middleware Types

We have 3 type of Middlewares, make sure that you are inheriting from the correct one:

+

- `Base Middleware`: which is used for both `websocket` and `http` requests

+

- `HTTP Middleware`: which is only used for `http` requests

+

- `Websocket Middleware`: which is only used for `websocket` requests

### Write Custom Middleware

diff --git a/docs/docs/panther_odm.md b/docs/docs/panther_odm.md

index 13f3e80..ee849ba 100644

--- a/docs/docs/panther_odm.md

+++ b/docs/docs/panther_odm.md

@@ -16,18 +16,36 @@ user: User = await User.find_one({'id': 1}, name='Ali')

Get documents from the database.

```python

-users: list[User] = await User.find(age=18, name='Ali')

+users: Cursor = await User.find(age=18, name='Ali')

or

-users: list[User] = await User.find({'age': 18, 'name': 'Ali'})

+users: Cursor = await User.find({'age': 18, 'name': 'Ali'})

or

-users: list[User] = await User.find({'age': 18}, name='Ali')

+users: Cursor = await User.find({'age': 18}, name='Ali')

```

+#### skip, limit and sort

+

+`skip()`, `limit()` and `sort()` are also available as chain methods

+

+```python

+users: Cursor = await User.find(age=18, name='Ali').skip(10).limit(10).sort([('age', -1), ('name', 1)])

+```

+

+#### cursor

+

+The `find()` method returns a `Cursor`, depending on the database

+- `from panther.db.cursor import Cursor` for `MongoDB`

+- `from pantherdb import Cursor` for `PantherDB`

+

+You can work with these cursors as lists and pass them directly to `Response(data=cursor)`

+

+They are designed to return an instance of your model when iterating (`__next__()`) or when accessing by index (`__getitem__()`)

+

### all

Get all documents from the database. (Alias of `.find()`)

```python

-users: list[User] = User.all()

+users: Cursor = await User.all()

```

### first

@@ -73,19 +91,7 @@ pipeline = [

...

]

users: Iterable[dict] = await User.aggregate(pipeline)

-```

-

-### find with skip, limit, sort

-Get limited documents from the database from offset and sorted by something

-> Only available in mongodb

-

-```python

-users: list[User] = await User.find(age=18, name='Ali').limit(10).skip(10).sort('_id', -1)

-or

-users: list[User] = await User.find({'age': 18, 'name': 'Ali'}).limit(10).skip(10).sort('_id', -1)

-or

-users: list[User] = await User.find({'age': 18}, name='Ali').limit(10).skip(10).sort('_id', -1)

-```

+```

### count

Count the number of documents in this collection.

@@ -234,7 +240,7 @@ is_inserted, user = await User.find_one_or_insert({'age': 18}, name='Ali')

### find_one_or_raise

- Get a single document from the database]

- **or**

-- Raise an `APIError(f'{Model} Does Not Exist')`

+- Raise a `NotFoundAPIError(f'{Model} Does Not Exist')`

```python

from app.models import User

@@ -248,6 +254,7 @@ user: User = await User.find_one_or_raise({'age': 18}, name='Ali')

### save

Save the document.

+

- If it has id --> `Update` It

- else --> `Insert` It
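The cursor behaviour described for `find()` in panther_odm.md (model instances on iteration via `__next__()` and on index access via `__getitem__()`) can be sketched with an in-memory class. This is a simplified illustration, not the `Cursor` from `panther.db.cursor` or `pantherdb`: `ListCursor` and the dataclass `User` are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Type


@dataclass
class User:
    """Hypothetical model stand-in; a real Panther model inherits from `Model`."""
    name: str
    age: int


class ListCursor:
    """Hypothetical cursor over raw documents that yields model instances."""

    def __init__(self, documents: List[Dict[str, Any]], model: Type) -> None:
        self._documents = documents
        self._model = model
        self._position = 0

    def __iter__(self) -> 'ListCursor':
        # Like many database cursors, this sketch supports a single pass.
        return self

    def __next__(self) -> Any:
        if self._position >= len(self._documents):
            raise StopIteration
        document = self._documents[self._position]
        self._position += 1
        # Convert the raw document into a model instance on the way out.
        return self._model(**document)

    def __getitem__(self, index: int) -> Any:
        return self._model(**self._documents[index])


cursor = ListCursor([{'name': 'Ali', 'age': 18}, {'name': 'Sara', 'age': 20}], User)
second_user = cursor[1]
names = [user.name for user in cursor]
```

Converting documents lazily, one at a time, avoids building every model instance up front when only a few are read.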

diff --git a/docs/docs/redis.md b/docs/docs/redis.md

index 22dade6..eb2a44d 100644

--- a/docs/docs/redis.md

+++ b/docs/docs/redis.md

@@ -31,7 +31,7 @@ REDIS = {

### How it works?

-- Panther creates a redis connection depends on `REDIS` block you defined in `configs`

+- Panther creates an async redis connection based on the `REDIS` block you defined in `configs`

- You can access to it from `from panther.db.connections import redis`

@@ -39,7 +39,7 @@ REDIS = {

```python

from panther.db.connections import redis

- redis.set('name', 'Ali')

- result = redis.get('name')

+ await redis.set('name', 'Ali')

+ result = await redis.get('name')

print(result)

```

\ No newline at end of file

diff --git a/docs/docs/release_notes.md b/docs/docs/release_notes.md

index 12df57d..47a49f3 100644

--- a/docs/docs/release_notes.md

+++ b/docs/docs/release_notes.md

@@ -1,10 +1,15 @@

### 4.0.0

- Move `database` and `redis` connections from `MIDDLEWARES` to their own block, `DATABASE` and `REDIS`

-- Make queries `async`

+- Make `Database` queries `async`

+- Make `Redis` queries `async`

+- Add `StreamingResponse`

+- Add `generics` API classes

- Add `login()` & `logout()` to `JWTAuthentication` and used it in `BaseUser`

- Support `Authentication` & `Authorization` in `Websocket`

- Rename all exceptions suffix from `Exception` to `Error` (https://peps.python.org/pep-0008/#exception-names)

-- Support `pantherdb 1.4`

+- Support `pantherdb 2.0.0` (`Cursor` Added)

+- Remove `watchfiles` from required dependencies

+- Support `exclude` and `optional_fields` in `ModelSerializer`

- Minor Improvements

### 3.9.0

diff --git a/docs/docs/serializer.md b/docs/docs/serializer.md

index 7d4852a..5afec4b 100644

--- a/docs/docs/serializer.md

+++ b/docs/docs/serializer.md

@@ -45,13 +45,21 @@ Use panther `ModelSerializer` to write your serializer which will use your `mode

first_name: str = Field(default='', min_length=2)

last_name: str = Field(default='', min_length=4)

-

+ # type 1 - using fields

class UserModelSerializer(ModelSerializer):

class Config:

model = User

fields = ['username', 'first_name', 'last_name']

required_fields = ['first_name']

+ # type 2 - using exclude

+ class UserModelSerializer(ModelSerializer):

+ class Config:

+ model = User

+ fields = '*'

+ required_fields = ['first_name']

+ exclude = ['id', 'password']

+

@API(input_model=UserModelSerializer)

async def model_serializer_example(request: Request):

@@ -59,9 +67,14 @@ Use panther `ModelSerializer` to write your serializer which will use your `mode

```

### Notes

-1. In the example above, `ModelSerializer` will look up for the value of `Config.fields` in the `User.fields` and use their `type` and `value` for the `validation`.

+1. In the example above, `ModelSerializer` will look up for the value of `Config.fields` in the `User.model_fields` and use their `type` and `value` for the `validation`.

2. `Config.model` and `Config.fields` are `required` when you are using `ModelSerializer`.

-3. If you want to use `Config.required_fields`, you have to put its value in `Config.fields` too.

+3. You can force a field to be required with `Config.required_fields`

+4. You can force a field to be optional with `Config.optional_fields`

+5. `Config.required_fields` and `Config.optional_fields` can't include the same fields

+6. If you want to use `Config.required_fields` or `Config.optional_fields`, you have to put their values in `Config.fields` too.

+7. `Config.fields` & `Config.required_fields` & `Config.optional_fields` can be `*` too (Include all the model fields)

+8. `Config.exclude` is mostly used when `Config.fields` is `'*'`
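The notes above describe how `fields`, `required_fields`, `optional_fields` and `exclude` interact. A minimal sketch of those rules in plain Python follows. `resolve_fields` is a hypothetical helper written for illustration only; it is not Panther's implementation, and the `'required'` / `'optional'` / `'default'` labels are assumptions.

```python
def resolve_fields(model_fields, fields, required_fields=(), optional_fields=(), exclude=()):
    """Hypothetical helper: map each selected field name to how it would be treated."""

    def expand(value):
        # Note 7: '*' stands for "all the model fields"
        return list(model_fields) if value == '*' else list(value)

    declared = expand(fields)
    required = expand(required_fields)
    optional = expand(optional_fields)

    # Note 5: a field can't be forced to be both required and optional
    if overlap := set(required) & set(optional):
        raise ValueError(f'{sorted(overlap)} are in both required_fields and optional_fields')

    # Note 6: required/optional fields have to be listed in `fields` too
    for name in required + optional:
        if name not in declared:
            raise ValueError(f'{name!r} is not in fields')

    # Note 8: `exclude` drops names from the final selection
    result = {}
    for name in declared:
        if name in exclude:
            continue
        if name in required:
            result[name] = 'required'
        elif name in optional:
            result[name] = 'optional'
        else:
            result[name] = 'default'  # keep whatever the model itself declares
    return result
```

Called with `fields='*'` and `exclude=['id', 'password']`, the sketch returns every other model field, which mirrors the "type 2 - using exclude" serializer shown earlier in this file.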

@@ -94,8 +107,9 @@ You can use `pydantic.BaseModel` features in `ModelSerializer` too.

class Config:

model = User

- fields = ['username', 'first_name', 'last_name']

+ fields = ['first_name', 'last_name']

required_fields = ['first_name']

+ optional_fields = ['last_name']

@field_validator('username')

def validate_username(cls, username):

diff --git a/docs/docs/single_file.md b/docs/docs/single_file.md

index 8676c8f..62f2b71 100644

--- a/docs/docs/single_file.md

+++ b/docs/docs/single_file.md

@@ -42,15 +42,16 @@ If you want to work with `Panther` in a `single-file` structure, follow the step

app = Panther(__name__, configs=__name__, urls=url_routing)

```

4. Run the project

- ```bash

- panther run

- ```

+

+ - If the name of your file is `main.py` -->

+ ```panther run```

+ - else use `uvicorn` -->

+ ```uvicorn file_name:app```

### Notes

- `URLs` is a required config unless you pass the `urls` directly to the `Panther`

- When you pass the `configs` to the `Panther(configs=...)`, Panther is going to load the configs from this file,

else it is going to load `core/configs.py` file

-- You can pass the `startup` and `shutdown` functions to the `Panther()` too.

```python

from panther import Panther

@@ -64,11 +65,5 @@ else it is going to load `core/configs.py` file

'/': hello_world_api,

}

- def startup():

- pass

-

- def shutdown():

- pass

-

- app = Panther(__name__, configs=__name__, urls=url_routing, startup=startup, shutdown=shutdown)

+ app = Panther(__name__, configs=__name__, urls=url_routing)

```

\ No newline at end of file

diff --git a/docs/docs/urls.md b/docs/docs/urls.md

index a497d24..028d42c 100644

--- a/docs/docs/urls.md

+++ b/docs/docs/urls.md

@@ -14,7 +14,7 @@

- Example: `user//blog//`

- The `endpoint` should have parameters with those names too

- Example Function-Base: `async def profile_api(user_id: int, title: str):`

- - Example Class-Base: `async def get(user_id: int, title: str):`

+ - Example Class-Base: `async def get(self, user_id: int, title: str):`

## Example

diff --git a/docs/docs/websocket.md b/docs/docs/websocket.md

index 385bc73..ec21692 100644

--- a/docs/docs/websocket.md

+++ b/docs/docs/websocket.md

@@ -15,7 +15,7 @@ class BookWebsocket(GenericWebsocket):

await self.accept()

print(f'{self.connection_id=}')

- async def receive(self, data: str | bytes = None):

+ async def receive(self, data: str | bytes):

# Just Echo The Message

await self.send(data=data)

```

@@ -64,7 +64,7 @@ you have to use `--preload`, like below:

from panther import status

await self.close(code=status.WS_1000_NORMAL_CLOSURE, reason='I just want to close it')

```

- - Out of websocket class scope **(Not Recommended)**: You can close it with `close_websocket_connection()` from `panther.websocket`, it's `async` function with takes 3 args, `connection_id`, `code` and `reason`, like below:

+ - Out of websocket class scope: You can close it with `close_websocket_connection()` from `panther.websocket`. It is an `async` function that takes 3 args, `connection_id`, `code` and `reason`, like below:

```python

from panther import status

from panther.websocket import close_websocket_connection

@@ -83,6 +83,5 @@ you have to use `--preload`, like below:

'/ws/<user_id>/<room_id>/': UserWebsocket

}

```

-12. WebSocket Echo Example -> [Https://GitHub.com/PantherPy/echo_websocket](https://github.com/PantherPy/echo_websocket)

-13. Enjoy.

+12. Enjoy.

diff --git a/docs/mkdocs.yml b/docs/mkdocs.yml

index 10de3bb..67db86a 100644

--- a/docs/mkdocs.yml

+++ b/docs/mkdocs.yml

@@ -26,9 +26,10 @@ edit_uri: edit/master/docs/

nav:

- Introduction: 'index.md'

- - First Crud:

+ - How To CRUD:

- Function Base: 'function_first_crud.md'

- Class Base: 'class_first_crud.md'

+ - Generic: 'generic_crud.md'

- Database: 'database.md'

- Panther ODM: 'panther_odm.md'

- Configs: 'configs.md'

@@ -37,6 +38,7 @@ nav:

- WebSocket: 'websocket.md'

- Middlewares: 'middlewares.md'

- Authentications: 'authentications.md'

+ - Events: 'events.md'

- URLs: 'urls.md'

- Throttling: 'throttling.md'

- Background Tasks: 'background_tasks.md'

diff --git a/example/app/apis.py b/example/app/apis.py

index 140ed70..45dac10 100644

--- a/example/app/apis.py

+++ b/example/app/apis.py

@@ -16,9 +16,13 @@

from panther.app import API, GenericAPI

from panther.authentications import JWTAuthentication

from panther.background_tasks import BackgroundTask, background_tasks

+from pantherdb import Cursor as PantherDBCursor

from panther.db.connections import redis

+from panther.db.cursor import Cursor

+from panther.generics import ListAPI

+from panther.pagination import Pagination

from panther.request import Request

-from panther.response import HTMLResponse, Response

+from panther.response import HTMLResponse, Response, StreamingResponse

from panther.throttling import Throttling

from panther.websocket import close_websocket_connection, send_message_to_websocket

@@ -85,8 +89,8 @@ async def res_request_data_with_output_model(request: Request):

@API(input_model=UserInputSerializer)

async def using_redis(request: Request):

- redis.set('ali', '1')

- logger.debug(f"{redis.get('ali') = }")

+ await redis.set('ali', '1')

+ logger.debug(f"{await redis.get('ali') = }")

return Response()

@@ -213,3 +217,30 @@ async def login_api():

@API(auth=True)

def logout_api(request: Request):

return request.user.logout()

+

+

+def reader():

+ from faker import Faker

+ import time

+ f = Faker()

+ for _ in range(5):

+ name = f.name()

+ print(f'{name=}')

+ yield name

+ time.sleep(1)

+

+

+@API()

+def stream_api():

+ # Test --> curl http://127.0.0.1:8000/stream/ --no-buffer

+ return StreamingResponse(reader())

+

+

+class PaginationAPI(ListAPI):

+ pagination = Pagination

+ sort_fields = ['username', 'id']

+ filter_fields = ['username']

+ search_fields = ['username']

+

+ async def objects(self, request: Request, **kwargs) -> Cursor | PantherDBCursor:

+ return await User.find()

diff --git a/example/app/urls.py b/example/app/urls.py

index b6defc1..7adb05c 100644

--- a/example/app/urls.py

+++ b/example/app/urls.py

@@ -39,4 +39,6 @@ async def test(*args, **kwargs):

'custom-response/': custom_response_class_api,

'image/': ImageAPI,

'logout/': logout_api,

+ 'stream/': stream_api,

+ 'pagination/': PaginationAPI,

}

diff --git a/example/core/configs.py b/example/core/configs.py

index d6ee78e..cff954d 100644

--- a/example/core/configs.py

+++ b/example/core/configs.py

@@ -50,9 +50,9 @@

DATABASE = {

'engine': {

- 'class': 'panther.db.connections.MongoDBConnection',

- # 'class': 'panther.db.connections.PantherDBConnection',

- 'host': f'mongodb://{DB_HOST}:27017/{DB_NAME}'

+ # 'class': 'panther.db.connections.MongoDBConnection',

+ 'class': 'panther.db.connections.PantherDBConnection',

+ # 'host': f'mongodb://{DB_HOST}:27017/{DB_NAME}'

},

# 'query': ...,

}

@@ -78,3 +78,5 @@ async def shutdown():

SHUTDOWN = 'core.configs.shutdown'

AUTO_REFORMAT = False

+

+TIMEZONE = 'UTC'

diff --git a/panther/_load_configs.py b/panther/_load_configs.py

index abdfea6..62b3e7d 100644

--- a/panther/_load_configs.py

+++ b/panther/_load_configs.py

@@ -21,6 +21,7 @@

'load_redis',

'load_startup',

'load_shutdown',

+ 'load_timezone',

'load_database',

'load_secret_key',

'load_monitoring',

@@ -39,10 +40,10 @@

logger = logging.getLogger('panther')

-def load_configs_module(_configs, /) -> dict:

+def load_configs_module(module_name: str, /) -> dict:

"""Read the config file as dict"""

- if _configs:

- _module = sys.modules[_configs]

+ if module_name:

+ _module = sys.modules[module_name]

else:

try:

_module = import_module('core.configs')

@@ -55,10 +56,10 @@ def load_redis(_configs: dict, /) -> None:

if redis_config := _configs.get('REDIS'):

# Check redis module installation

try:

- from redis import Redis as _Redis

- except ModuleNotFoundError as e:

+ from redis.asyncio import Redis

+ except ImportError as e:

raise import_error(e, package='redis')

- redis_class_path = redis_config.get('class', 'panther.db.connections.Redis')

+ redis_class_path = redis_config.get('class', 'panther.db.connections.RedisConnection')

redis_class = import_class(redis_class_path)

# We have to create another dict then pop the 'class' else we can't pass the tests

args = redis_config.copy()

@@ -68,12 +69,17 @@ def load_redis(_configs: dict, /) -> None:

def load_startup(_configs: dict, /) -> None:

if startup := _configs.get('STARTUP'):

- config['startup'] = import_class(startup)

+ config.STARTUP = import_class(startup)

def load_shutdown(_configs: dict, /) -> None:

if shutdown := _configs.get('SHUTDOWN'):

- config['shutdown'] = import_class(shutdown)

+ config.SHUTDOWN = import_class(shutdown)

+

+

+def load_timezone(_configs: dict, /) -> None:

+ if timezone := _configs.get('TIMEZONE'):

+ config.TIMEZONE = timezone

def load_database(_configs: dict, /) -> None:

@@ -87,42 +93,42 @@ def load_database(_configs: dict, /) -> None:

# We have to create another dict then pop the 'class' else we can't pass the tests

args = database_config['engine'].copy()

args.pop('class')

- config['database'] = engine_class(**args)

+ config.DATABASE = engine_class(**args)

if engine_class_path == 'panther.db.connections.PantherDBConnection':

- config['query_engine'] = BasePantherDBQuery

+ config.QUERY_ENGINE = BasePantherDBQuery

elif engine_class_path == 'panther.db.connections.MongoDBConnection':

- config['query_engine'] = BaseMongoDBQuery

+ config.QUERY_ENGINE = BaseMongoDBQuery

if 'query' in database_config:

- if config['query_engine']:

+ if config.QUERY_ENGINE:

logger.warning('`DATABASE.query` has already been filled.')

- config['query_engine'] = import_class(database_config['query'])

+ config.QUERY_ENGINE = import_class(database_config['query'])

def load_secret_key(_configs: dict, /) -> None:

if secret_key := _configs.get('SECRET_KEY'):

- config['secret_key'] = secret_key.encode()

+ config.SECRET_KEY = secret_key.encode()

def load_monitoring(_configs: dict, /) -> None:

if _configs.get('MONITORING'):

- config['monitoring'] = True

+ config.MONITORING = True

def load_throttling(_configs: dict, /) -> None:

if throttling := _configs.get('THROTTLING'):

- config['throttling'] = throttling

+ config.THROTTLING = throttling

def load_user_model(_configs: dict, /) -> None:

- config['user_model'] = import_class(_configs.get('USER_MODEL', 'panther.db.models.BaseUser'))

- config['models'].append(config['user_model'])

+ config.USER_MODEL = import_class(_configs.get('USER_MODEL', 'panther.db.models.BaseUser'))

+ config.MODELS.append(config.USER_MODEL)

def load_log_queries(_configs: dict, /) -> None:

if _configs.get('LOG_QUERIES'):

- config['log_queries'] = True

+ config.LOG_QUERIES = True

def load_middlewares(_configs: dict, /) -> None:

@@ -153,42 +159,39 @@ def load_middlewares(_configs: dict, /) -> None:

if issubclass(middleware_class, BaseMiddleware) is False:

raise _exception_handler(field='MIDDLEWARES', error='is not a sub class of BaseMiddleware')

- middleware_instance = middleware_class(**data)

- if isinstance(middleware_instance, BaseMiddleware | HTTPMiddleware):

- middlewares['http'].append(middleware_instance)

+ if middleware_class.__bases__[0] in (BaseMiddleware, HTTPMiddleware):

+ middlewares['http'].append((middleware_class, data))

- if isinstance(middleware_instance, BaseMiddleware | WebsocketMiddleware):

- middlewares['ws'].append(middleware_instance)

+ if middleware_class.__bases__[0] in (BaseMiddleware, WebsocketMiddleware):

+ middlewares['ws'].append((middleware_class, data))

- config['http_middlewares'] = middlewares['http']

- config['ws_middlewares'] = middlewares['ws']

- config['reversed_http_middlewares'] = middlewares['http'][::-1]

- config['reversed_ws_middlewares'] = middlewares['ws'][::-1]

+ config.HTTP_MIDDLEWARES = middlewares['http']

+ config.WS_MIDDLEWARES = middlewares['ws']

def load_auto_reformat(_configs: dict, /) -> None:

if _configs.get('AUTO_REFORMAT'):

- config['auto_reformat'] = True

+ config.AUTO_REFORMAT = True

def load_background_tasks(_configs: dict, /) -> None:

if _configs.get('BACKGROUND_TASKS'):

- config['background_tasks'] = True

+ config.BACKGROUND_TASKS = True

background_tasks.initialize()

def load_default_cache_exp(_configs: dict, /) -> None:

if default_cache_exp := _configs.get('DEFAULT_CACHE_EXP'):

- config['default_cache_exp'] = default_cache_exp

+ config.DEFAULT_CACHE_EXP = default_cache_exp

def load_authentication_class(_configs: dict, /) -> None:

"""Should be after `load_secret_key()`"""

if authentication := _configs.get('AUTHENTICATION'):

- config['authentication'] = import_class(authentication)

+ config.AUTHENTICATION = import_class(authentication)

if ws_authentication := _configs.get('WS_AUTHENTICATION'):

- config['ws_authentication'] = import_class(ws_authentication)

+ config.WS_AUTHENTICATION = import_class(ws_authentication)

load_jwt_config(_configs)

@@ -196,16 +199,16 @@ def load_authentication_class(_configs: dict, /) -> None:

def load_jwt_config(_configs: dict, /) -> None:

"""Only Collect JWT Config If Authentication Is JWTAuthentication"""

auth_is_jwt = (

- getattr(config['authentication'], '__name__', None) == 'JWTAuthentication' or

- getattr(config['ws_authentication'], '__name__', None) == 'QueryParamJWTAuthentication'

+ getattr(config.AUTHENTICATION, '__name__', None) == 'JWTAuthentication' or

+ getattr(config.WS_AUTHENTICATION, '__name__', None) == 'QueryParamJWTAuthentication'

)

jwt = _configs.get('JWTConfig', {})

if auth_is_jwt or jwt:

if 'key' not in jwt:

- if config['secret_key'] is None:

+ if config.SECRET_KEY is None:

raise _exception_handler(field='JWTConfig', error='`JWTConfig.key` or `SECRET_KEY` is required.')

- jwt['key'] = config['secret_key'].decode()

- config['jwt_config'] = JWTConfig(**jwt)

+ jwt['key'] = config.SECRET_KEY.decode()

+ config.JWT_CONFIG = JWTConfig(**jwt)

def load_urls(_configs: dict, /, urls: dict | None) -> None:

@@ -237,14 +240,14 @@ def load_urls(_configs: dict, /, urls: dict | None) -> None:

if not isinstance(urls, dict):

raise _exception_handler(field='URLs', error='should point to a dict.')

- config['flat_urls'] = flatten_urls(urls)

- config['urls'] = finalize_urls(config['flat_urls'])

- config['urls']['_panel'] = finalize_urls(flatten_urls(panel_urls))

+ config.FLAT_URLS = flatten_urls(urls)

+ config.URLS = finalize_urls(config.FLAT_URLS)

+ config.URLS['_panel'] = finalize_urls(flatten_urls(panel_urls))

def load_websocket_connections():

"""Should be after `load_redis()`"""

- if config['has_ws']:

+ if config.HAS_WS:

# Check `websockets`

try:

import websockets

@@ -253,7 +256,7 @@ def load_websocket_connections():

# Use the redis pubsub if `redis.is_connected`, else use the `multiprocessing.Manager`

pubsub_connection = redis.create_connection_for_websocket() if redis.is_connected else Manager()

- config['websocket_connections'] = WebsocketConnections(pubsub_connection=pubsub_connection)

+ config.WEBSOCKET_CONNECTIONS = WebsocketConnections(pubsub_connection=pubsub_connection)

def _exception_handler(field: str, error: str | Exception) -> PantherError:

diff --git a/panther/_utils.py b/panther/_utils.py

index e1371ba..e7fffcb 100644

--- a/panther/_utils.py

+++ b/panther/_utils.py

@@ -1,57 +1,20 @@

+import asyncio

import importlib

import logging

import re

import subprocess

import types

-import typing

+from typing import Any, Generator, Iterator, AsyncGenerator

from collections.abc import Callable

from traceback import TracebackException

-import orjson as json

-

-from panther import status

from panther.exceptions import PantherError

from panther.file_handler import File

logger = logging.getLogger('panther')

-async def _http_response_start(send: Callable, /, headers: dict, status_code: int) -> None:

- bytes_headers = [[k.encode(), str(v).encode()] for k, v in (headers or {}).items()]

- await send({

- 'type': 'http.response.start',

- 'status': status_code,

- 'headers': bytes_headers,

- })

-

-

-async def _http_response_body(send: Callable, /, body: bytes) -> None:

- if body:

- await send({'type': 'http.response.body', 'body': body})

- else: # body = b''

- await send({'type': 'http.response.body'})

-

-

-async def http_response(

- send: Callable,

- /,

- *,

- monitoring, # type: MonitoringMiddleware

- status_code: int,

- headers: dict,

- body: bytes = b'',

- exception: bool = False,

-) -> None:

- if exception:

- body = json.dumps({'detail': status.status_text[status_code]})

-

- await monitoring.after(status_code)

-

- await _http_response_start(send, headers=headers, status_code=status_code)

- await _http_response_body(send, body=body)

-

-

-def import_class(dotted_path: str, /) -> type[typing.Any]:

+def import_class(dotted_path: str, /) -> type[Any]:

"""

Example:

-------

@@ -148,4 +111,25 @@ def check_class_type_endpoint(endpoint: Callable) -> Callable:

logger.critical(f'You may have forgotten to inherit from GenericAPI on the {endpoint.__name__}()')

raise TypeError

- return endpoint.call_method

+ return endpoint().call_method

+

+

+def async_next(iterator: Iterator):

+ """

+ `StopIteration` cannot be propagated out of `asyncio.to_thread()`:

+ the result of the thread travels through a `Future`, and a `Future` refuses to carry

+ `StopIteration` because Python uses it internally to signal the end of an iteration.

+ So we translate it to `StopAsyncIteration`, which the caller can catch safely.

+ """

+ try:

+ return next(iterator)

+ except StopIteration:

+ raise StopAsyncIteration

+

+

+async def to_async_generator(generator: Generator) -> AsyncGenerator:

+ while True:

+ try:

+ yield await asyncio.to_thread(async_next, iter(generator))

+ except StopAsyncIteration:

+ break
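The two helpers added above wrap a blocking generator so it can be consumed with `async for`. A self-contained sketch of the same idea, using only the standard library, might look like this. The names `_next_or_stop_async`, `numbers` and `collect` are illustrative and not part of Panther.

```python
import asyncio
from collections.abc import AsyncGenerator, Iterable, Iterator


def _next_or_stop_async(iterator: Iterator):
    # Runs in a worker thread: translate StopIteration, which cannot
    # travel through a Future, into StopAsyncIteration.
    try:
        return next(iterator)
    except StopIteration:
        raise StopAsyncIteration from None


async def to_async_generator(iterable: Iterable) -> AsyncGenerator:
    # Create the iterator once so that plain iterables (e.g. lists) work too
    iterator = iter(iterable)
    while True:
        try:
            # Each (possibly blocking) `next()` call runs off the event loop
            yield await asyncio.to_thread(_next_or_stop_async, iterator)
        except StopAsyncIteration:
            break


def numbers():
    yield from (1, 2, 3)


async def collect() -> list:
    return [item async for item in to_async_generator(numbers())]


if __name__ == '__main__':
    print(asyncio.run(collect()))
```

Because every `next()` call is pushed to a thread, a generator that sleeps or does blocking I/O between items (like `reader()` in `example/app/apis.py`) should not stall other coroutines while it is being streamed.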

diff --git a/panther/app.py b/panther/app.py

index 2f4282f..8dff292 100644

--- a/panther/app.py

+++ b/panther/app.py

@@ -1,13 +1,18 @@

import functools

import logging

-from datetime import datetime, timedelta

+from datetime import timedelta

from typing import Literal

from orjson import JSONDecodeError

-from pydantic import ValidationError

+from pydantic import ValidationError, BaseModel

from panther._utils import is_function_async

-from panther.caching import cache_key, get_cached_response_data, set_cache_response

+from panther.caching import (

+ get_response_from_cache,

+ set_response_in_cache,

+ get_throttling_from_cache,

+ increment_throttling_in_cache

+)

from panther.configs import config

from panther.exceptions import (

APIError,

@@ -19,8 +24,8 @@

)

from panther.request import Request

from panther.response import Response

-from panther.throttling import Throttling, throttling_storage

-from panther.utils import round_datetime

+from panther.serializer import ModelSerializer

+from panther.throttling import Throttling

__all__ = ('API', 'GenericAPI')

@@ -31,11 +36,11 @@ class API:

def __init__(

self,

*,

- input_model=None,

- output_model=None,

+ input_model: type[ModelSerializer] | type[BaseModel] | None = None,

+ output_model: type[ModelSerializer] | type[BaseModel] | None = None,

auth: bool = False,

permissions: list | None = None,

- throttling: Throttling = None,

+ throttling: Throttling | None = None,

cache: bool = False,

cache_exp_time: timedelta | int | None = None,

methods: list[Literal['GET', 'POST', 'PUT', 'PATCH', 'DELETE']] | None = None,

@@ -53,20 +58,20 @@ def __init__(

def __call__(self, func):

@functools.wraps(func)

async def wrapper(request: Request) -> Response:

- self.request: Request = request # noqa: Non-self attribute could not be type hinted

+ self.request = request

# 1. Check Method

if self.methods and self.request.method not in self.methods:

raise MethodNotAllowedAPIError

# 2. Authentication

- await self.handle_authentications()

+ await self.handle_authentication()

- # 3. Throttling

- self.handle_throttling()

+ # 3. Permissions

+ await self.handle_permission()

- # 4. Permissions

- await self.handle_permissions()

+ # 4. Throttling

+ await self.handle_throttling()

# 5. Validate Input

if self.request.method in ['POST', 'PUT', 'PATCH']:

@@ -74,7 +79,7 @@ async def wrapper(request: Request) -> Response:

# 6. Get Cached Response

if self.cache and self.request.method == 'GET':

- if cached := get_cached_response_data(request=self.request, cache_exp_time=self.cache_exp_time):

+ if cached := await get_response_from_cache(request=self.request, cache_exp_time=self.cache_exp_time):

return Response(data=cached.data, status_code=cached.status_code)

# 7. Put PathVariables and Request(If User Wants It) In kwargs

@@ -89,11 +94,12 @@ async def wrapper(request: Request) -> Response:

# 9. Clean Response

if not isinstance(response, Response):

response = Response(data=response)

- response._clean_data_with_output_model(output_model=self.output_model) # noqa: SLF001

+ if self.output_model and response.data:

+ response.data = response.apply_output_model(response.data, output_model=self.output_model)

# 10. Set New Response To Cache

if self.cache and self.request.method == 'GET':

- set_cache_response(

+ await set_response_in_cache(

request=self.request,

response=response,

cache_exp_time=self.cache_exp_time

@@ -107,26 +113,21 @@ async def wrapper(request: Request) -> Response:

return wrapper

- async def handle_authentications(self) -> None:

- auth_class = config['authentication']

+ async def handle_authentication(self) -> None:

if self.auth:

- if not auth_class:

+ if not config.AUTHENTICATION:

logger.critical('"AUTHENTICATION" has not been set in configs')

raise APIError

- user = await auth_class.authentication(self.request)

- self.request.user = user

-

- def handle_throttling(self) -> None:

- if throttling := self.throttling or config['throttling']:

- key = cache_key(self.request)

- time = round_datetime(datetime.now(), throttling.duration)

- throttling_key = f'{time}-{key}'

- if throttling_storage[throttling_key] > throttling.rate:

+ self.request.user = await config.AUTHENTICATION.authentication(self.request)

+

+ async def handle_throttling(self) -> None:

+ if throttling := self.throttling or config.THROTTLING:

+ if await get_throttling_from_cache(self.request, duration=throttling.duration) + 1 > throttling.rate:

raise ThrottlingAPIError

- throttling_storage[throttling_key] += 1

+ await increment_throttling_in_cache(self.request, duration=throttling.duration)

- async def handle_permissions(self) -> None:

+ async def handle_permission(self) -> None:

for perm in self.permissions:

if type(perm.authorization).__name__ != 'method':

logger.error(f'{perm.__name__}.authorization should be "classmethod"')

@@ -136,15 +137,17 @@ async def handle_permissions(self) -> None:

def handle_input_validation(self):

if self.input_model:

- validated_data = self.validate_input(model=self.input_model, request=self.request)

- self.request.set_validated_data(validated_data)

+ self.request.validated_data = self.validate_input(model=self.input_model, request=self.request)

@classmethod

def validate_input(cls, model, request: Request):

+ if isinstance(request.data, bytes):

+ raise BadRequestAPIError(detail='Content-Type is not valid')

+ if request.data is None:

+ raise BadRequestAPIError(detail='Request body is required')

try:

- if isinstance(request.data, bytes):

- raise BadRequestAPIError(detail='Content-Type is not valid')

- return model(**request.data)

+ # `request` will be ignored in regular `BaseModel`

+ return model(**request.data, request=request)

except ValidationError as validation_error:

error = {'.'.join(loc for loc in e['loc']): e['msg'] for e in validation_error.errors()}

raise BadRequestAPIError(detail=error)

@@ -153,8 +156,8 @@ def validate_input(cls, model, request: Request):

class GenericAPI:

- input_model = None

- output_model = None

+ input_model: type[ModelSerializer] | type[BaseModel] = None

+ output_model: type[ModelSerializer] | type[BaseModel] = None

auth: bool = False

permissions: list | None = None

throttling: Throttling | None = None

@@ -176,26 +179,27 @@ async def patch(self, *args, **kwargs):

async def delete(self, *args, **kwargs):

raise MethodNotAllowedAPIError

- @classmethod

- async def call_method(cls, *args, **kwargs):

- match kwargs['request'].method:

+ async def call_method(self, request: Request):

+ match request.method:

case 'GET':

- func = cls().get

+ func = self.get

case 'POST':

- func = cls().post

+ func = self.post

case 'PUT':

- func = cls().put

+ func = self.put

case 'PATCH':

- func = cls().patch

+ func = self.patch

case 'DELETE':

- func = cls().delete

+ func = self.delete

+ case _:

+ raise MethodNotAllowedAPIError

return await API(

- input_model=cls.input_model,

- output_model=cls.output_model,

- auth=cls.auth,

- permissions=cls.permissions,

- throttling=cls.throttling,

- cache=cls.cache,

- cache_exp_time=cls.cache_exp_time,

- )(func)(*args, **kwargs)

+ input_model=self.input_model,

+ output_model=self.output_model,

+ auth=self.auth,

+ permissions=self.permissions,

+ throttling=self.throttling,

+ cache=self.cache,

+ cache_exp_time=self.cache_exp_time,

+ )(func)(request=request)
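In this file, `handle_throttling()` now rejects a request when `count + 1 > rate` and only increments the counter afterwards, while the removed version keyed its counters by a rounded timestamp. A rough in-memory sketch of that fixed-window pattern is shown below. It assumes `get_throttling_from_cache()` and `increment_throttling_in_cache()` behave like a per-window counter; their bodies are not part of this diff, and `FixedWindowThrottle` is a hypothetical name.

```python
import time
from collections import defaultdict


class FixedWindowThrottle:
    """Hypothetical in-memory stand-in for the cache-backed throttling counters."""

    def __init__(self, rate: int, duration: float, clock=time.time):
        self.rate = rate          # allowed requests per window
        self.duration = duration  # window length in seconds
        self.clock = clock        # injectable for testing
        self._counters = defaultdict(int)

    def _key(self, client: str) -> str:
        # All requests inside the same window share one counter
        window = int(self.clock() // self.duration)
        return f'{window}-{client}'

    def allow(self, client: str) -> bool:
        key = self._key(client)
        # Same comparison as `handle_throttling()`: check first, then increment
        if self._counters[key] + 1 > self.rate:
            return False
        self._counters[key] += 1
        return True
```

With `rate=2` and `duration=60`, the third call inside one minute is refused and the counter starts over once the next window begins.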

diff --git a/panther/authentications.py b/panther/authentications.py

index 1861714..27454ea 100644

--- a/panther/authentications.py

+++ b/panther/authentications.py

@@ -67,7 +67,7 @@ async def authentication(cls, request: Request | Websocket) -> Model:

msg = 'Authorization keyword is not valid'

raise cls.exception(msg) from None

- if redis.is_connected and cls._check_in_cache(token=token):

+ if redis.is_connected and await cls._check_in_cache(token=token):

msg = 'User logged out'

raise cls.exception(msg) from None

@@ -82,8 +82,8 @@ def decode_jwt(cls, token: str) -> dict:

try:

return jwt.decode(

token=token,

- key=config['jwt_config'].key,

- algorithms=[config['jwt_config'].algorithm],

+ key=config.JWT_CONFIG.key,

+ algorithms=[config.JWT_CONFIG.algorithm],

)

except JWTError as e:

raise cls.exception(e) from None

@@ -95,7 +95,7 @@ async def get_user(cls, payload: dict) -> Model:

msg = 'Payload does not have `user_id`'

raise cls.exception(msg)

- user_model = config['user_model'] or cls.model

+ user_model = config.USER_MODEL or cls.model

if user := await user_model.find_one(id=user_id):

return user

@@ -107,9 +107,9 @@ def encode_jwt(cls, user_id: str, token_type: Literal['access', 'refresh'] = 'ac

"""Encode JWT from user_id."""

issued_at = datetime.now(timezone.utc).timestamp()

if token_type == 'access':

- expire = issued_at + config['jwt_config'].life_time

+ expire = issued_at + config.JWT_CONFIG.life_time

else:

- expire = issued_at + config['jwt_config'].refresh_life_time

+ expire = issued_at + config.JWT_CONFIG.refresh_life_time

claims = {

'token_type': token_type,

@@ -119,8 +119,8 @@ def encode_jwt(cls, user_id: str, token_type: Literal['access', 'refresh'] = 'ac

}

return jwt.encode(

claims,

- key=config['jwt_config'].key,

- algorithm=config['jwt_config'].algorithm,

+ key=config.JWT_CONFIG.key,

+ algorithm=config.JWT_CONFIG.algorithm,

)

@classmethod

@@ -132,24 +132,24 @@ def login(cls, user_id: str) -> dict:

}

@classmethod

- def logout(cls, raw_token: str) -> None:

+ async def logout(cls, raw_token: str) -> None:

*_, token = raw_token.split()

if redis.is_connected:

payload = cls.decode_jwt(token=token)

remaining_exp_time = payload['exp'] - time.time()

- cls._set_in_cache(token=token, exp=int(remaining_exp_time))

+ await cls._set_in_cache(token=token, exp=int(remaining_exp_time))

else:

logger.error('`redis` middleware is required for `logout()`')

@classmethod

- def _set_in_cache(cls, token: str, exp: int) -> None:

+ async def _set_in_cache(cls, token: str, exp: int) -> None:

key = generate_hash_value_from_string(token)

- redis.set(key, b'', ex=exp)

+ await redis.set(key, b'', ex=exp)

@classmethod

- def _check_in_cache(cls, token: str) -> bool:

+ async def _check_in_cache(cls, token: str) -> bool:

key = generate_hash_value_from_string(token)

- return bool(redis.exists(key))

+ return bool(await redis.exists(key))

@staticmethod

def exception(message: str | JWTError | UnicodeEncodeError, /) -> type[AuthenticationAPIError]:

diff --git a/panther/background_tasks.py b/panther/background_tasks.py

index c925375..9165b04 100644

--- a/panther/background_tasks.py

+++ b/panther/background_tasks.py

@@ -9,7 +9,6 @@

from panther._utils import is_function_async

from panther.utils import Singleton

-

__all__ = (

'BackgroundTask',

'background_tasks',

diff --git a/panther/base_request.py b/panther/base_request.py

index 314e44a..5e012f7 100644

--- a/panther/base_request.py

+++ b/panther/base_request.py

@@ -60,8 +60,6 @@ def __init__(self, scope: dict, receive: Callable, send: Callable):

self.scope = scope

self.asgi_send = send

self.asgi_receive = receive

- self._data = ...

- self._validated_data = None

self._headers: Headers | None = None

self._params: dict | None = None

self.user: Model | None = None

@@ -116,25 +114,22 @@ def collect_path_variables(self, found_path: str):

}

def clean_parameters(self, func: Callable) -> dict:

- kwargs = {}

+ kwargs = self.path_variables.copy()

+

for variable_name, variable_type in func.__annotations__.items():

# Put Request/ Websocket In kwargs (If User Wants It)

if issubclass(variable_type, BaseRequest):

kwargs[variable_name] = self

- continue

-

- for name, value in self.path_variables.items():

- if name == variable_name:

- # Check the type and convert the value

- if variable_type is bool:

- kwargs[name] = value.lower() not in ['false', '0']

-

- elif variable_type is int:

- try:

- kwargs[name] = int(value)

- except ValueError:

- raise InvalidPathVariableAPIError(value=value, variable_type=variable_type)

- else:

- kwargs[name] = value

- return kwargs

+ elif variable_name in kwargs:

+ # Cast To Boolean

+ if variable_type is bool:

+ kwargs[variable_name] = kwargs[variable_name].lower() not in ['false', '0']

+

+ # Cast To Int

+ elif variable_type is int:

+ try:

+ kwargs[variable_name] = int(kwargs[variable_name])

+ except ValueError:

+ raise InvalidPathVariableAPIError(value=kwargs[variable_name], variable_type=variable_type)

+ return kwargs
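The rewritten `clean_parameters()` starts from a copy of the path variables and casts only the ones whose annotation is `bool` or `int`. The standalone sketch below shows that casting step in isolation. `cast_path_variables` is a hypothetical function, it raises a plain `ValueError` instead of `InvalidPathVariableAPIError`, and it leaves out the `Request`/`Websocket` injection.

```python
from collections.abc import Callable


def cast_path_variables(func: Callable, path_variables: dict[str, str]) -> dict:
    # Every path variable is passed through; annotations only decide the cast
    kwargs = dict(path_variables)

    for name, annotation in func.__annotations__.items():
        if name not in kwargs:
            continue

        # Cast to boolean: anything except 'false' / '0' counts as True
        if annotation is bool:
            kwargs[name] = kwargs[name].lower() not in ['false', '0']

        # Cast to int, reporting the offending value on failure
        elif annotation is int:
            try:
                kwargs[name] = int(kwargs[name])
            except ValueError:
                raise ValueError(f'{kwargs[name]!r} is not a valid int for {name!r}') from None

    return kwargs


async def profile_api(user_id: int, active: bool, title: str):
    ...
```

For example, `cast_path_variables(profile_api, {'user_id': '12', 'active': 'False', 'title': 'intro'})` converts `user_id` to `12` and `active` to `False`, and leaves `title` as a string.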

diff --git a/panther/base_websocket.py b/panther/base_websocket.py

index 7da3b74..5ba79e6 100644

--- a/panther/base_websocket.py

+++ b/panther/base_websocket.py

@@ -1,11 +1,8 @@

from __future__ import annotations

import asyncio

-import contextlib

import logging

-from multiprocessing import Manager

from multiprocessing.managers import SyncManager

-from threading import Thread

from typing import TYPE_CHECKING, Literal

import orjson as json

@@ -15,16 +12,17 @@

from panther.configs import config

from panther.db.connections import redis

from panther.exceptions import AuthenticationAPIError, InvalidPathVariableAPIError

+from panther.monitoring import Monitoring

from panther.utils import Singleton, ULID

if TYPE_CHECKING:

- from redis import Redis

+ from redis.asyncio import Redis

logger = logging.getLogger('panther')

class PubSub:

- def __init__(self, manager):

+ def __init__(self, manager: SyncManager):

self._manager = manager

self._subscribers = self._manager.list()

@@ -38,17 +36,8 @@ def publish(self, msg):

queue.put(msg)

-class WebsocketListener(Thread):

- def __init__(self):

- super().__init__(target=config['websocket_connections'], daemon=True)

-

- def run(self):

- with contextlib.suppress(Exception):

- super().run()

-

-

class WebsocketConnections(Singleton):

- def __init__(self, pubsub_connection: Redis | Manager):

+ def __init__(self, pubsub_connection: Redis | SyncManager):

self.connections = {}

self.connections_count = 0

self.pubsub_connection = pubsub_connection

@@ -56,20 +45,21 @@ def __init__(self, pubsub_connection: Redis | Manager):

if isinstance(self.pubsub_connection, SyncManager):

self.pubsub = PubSub(manager=self.pubsub_connection)

- def __call__(self):

+ async def __call__(self):

if isinstance(self.pubsub_connection, SyncManager):

- # We don't have redis connection, so use the `multiprocessing.PubSub`

+ # We don't have redis connection, so use the `multiprocessing.Manager`

+ self.pubsub: PubSub

queue = self.pubsub.subscribe()

logger.info("Subscribed to 'websocket_connections' queue")

while True:

received_message = queue.get()

- self._handle_received_message(received_message=received_message)

+ await self._handle_received_message(received_message=received_message)

else:

# We have a redis connection, so use it for pubsub

self.pubsub = self.pubsub_connection.pubsub()

- self.pubsub.subscribe('websocket_connections')

+ await self.pubsub.subscribe('websocket_connections')

logger.info("Subscribed to 'websocket_connections' channel")

- for channel_data in self.pubsub.listen():

+ async for channel_data in self.pubsub.listen():

match channel_data['type']:

# Subscribed

case 'subscribe':

@@ -78,12 +68,12 @@ def __call__(self):

# Message Received

case 'message':

loaded_data = json.loads(channel_data['data'].decode())

- self._handle_received_message(received_message=loaded_data)

+ await self._handle_received_message(received_message=loaded_data)

case unknown_type:

- logger.debug(f'Unknown Channel Type: {unknown_type}')

+ logger.error(f'Unknown Channel Type: {unknown_type}')

- def _handle_received_message(self, received_message):

+ async def _handle_received_message(self, received_message):

if (

isinstance(received_message, dict)

and (connection_id := received_message.get('connection_id'))

@@ -94,120 +84,156 @@ def _handle_received_message(self, received_message):

# Check Action of WS

match received_message['action']:

case 'send':

- asyncio.run(self.connections[connection_id].send(data=received_message['data']))

+ await self.connections[connection_id].send(data=received_message['data'])

case 'close':

- with contextlib.suppress(RuntimeError):

- asyncio.run(self.connections[connection_id].close(

- code=received_message['data']['code'],

- reason=received_message['data']['reason']

- ))

- # We are trying to disconnect the connection between a thread and a user

- # from another thread, it's working, but we have to find another solution for it

- #

- # Error:

- # Task <Task pending coro=<Websocket.close()>> got Future

- # <Task pending coro=<WebSocketCommonProtocol.transfer_data()>>

- # attached to a different loop

+ await self.connections[connection_id].close(

+ code=received_message['data']['code'],

+ reason=received_message['data']['reason']

+ )

case unknown_action:

- logger.debug(f'Unknown Message Action: {unknown_action}')

+ logger.error(f'Unknown Message Action: {unknown_action}')

- def publish(self, connection_id: str, action: Literal['send', 'close'], data: any):

+ async def publish(self, connection_id: str, action: Literal['send', 'close'], data: any):

publish_data = {'connection_id': connection_id, 'action': action, 'data': data}

if redis.is_connected:

- redis.publish('websocket_connections', json.dumps(publish_data))

+ await redis.publish('websocket_connections', json.dumps(publish_data))

else:

self.pubsub.publish(publish_data)

- async def new_connection(self, connection: Websocket) -> None:

+ async def listen(self, connection: Websocket) -> None:

# 1. Authentication

- connection_closed = await self.handle_authentication(connection=connection)

+ if not connection.is_rejected:

+ await self.handle_authentication(connection=connection)

# 2. Permissions

- connection_closed = connection_closed or await self.handle_permissions(connection=connection)

+ if not connection.is_rejected:

+ await self.handle_permissions(connection=connection)

- if connection_closed:

- # Don't run the following code...

+ if connection.is_rejected:

+ # Connection is rejected so don't continue the flow ...

return None

# 3. Put PathVariables and Request(If User Wants It) In kwargs

try:

kwargs = connection.clean_parameters(connection.connect)

except InvalidPathVariableAPIError as e:

- return await connection.close(status.WS_1000_NORMAL_CLOSURE, reason=str(e))

+ connection.log(e.detail)

+ return await connection.close()

# 4. Connect To Endpoint

await connection.connect(**kwargs)

- if not hasattr(connection, '_connection_id'):

- # User didn't even call the `self.accept()` so close the connection

- await connection.close()

+ # 5. Check Connection

+ if not connection.is_connected and not connection.is_rejected:

+ # User didn't call the `self.accept()` or `self.close()` so we `close()` the connection (reject)

+ return await connection.close()

- # 5. Connection Accepted

- if connection.is_connected:

- self.connections_count += 1

+ # 6. Listen Connection

+ await self.listen_connection(connection=connection)

+

+ async def listen_connection(self, connection: Websocket):

+ while True:

+ response = await connection.asgi_receive()

+ if response['type'] == 'websocket.connect':

+ continue

+

+ if response['type'] == 'websocket.disconnect':