
4. Maintainers

- Overview

- Mandala Test Network Maintainers Guide

- Start a Private Network

- Node validator

Here is a guide to set up a node and ways to participate in the Acala Network.

Mandala Test Network is a risk-free and value-free playground, purely for testing out functionalities and "explosive" experiments. There is no network value and there are no rewards. However, you are welcome to run a node and join the network to try it out, to prepare for Karura Network (which will join Kusama with network value) and Acala Mainnet, or just for the love of it.

Once you choose your cloud service provider and set up your new server, the first thing you will do is install Rust.

If you have never installed Rust, you should do this first. This command will fetch the latest version of Rust and install it.

# Install

curl https://sh.rustup.rs -sSf | sh

# Configure

source ~/.cargo/env

Otherwise, if you have already installed Rust, run the following command to make sure you are using the latest version.

rustup update

Configure the Rust toolchain to default to the latest stable version:

rustup update stable

rustup default stable

If the compilation fails, you can try switching to nightly:

rustup update nightly

rustup default nightly

The AcalaNetwork/acala repo's master branch contains the latest Acala code.

git clone https://github.com/AcalaNetwork/Acala.git

cd Acala

make init

make build

Alternatively, if you wish to use a specific release, you can check out a specific tag (v0.5.1 in the example below):

git clone https://github.com/AcalaNetwork/Acala.git

cd Acala

git checkout tags/v0.5.1

make init

make build

To type check:

make check

To purge old chain data:

make purge

To purge old chain data and run:

make restart

To update ORML:

make update

The built binary will be in the target/release folder, called acala.

./target/release/acala --chain mandala --name "My node's name"

Use the --help flag to find out which flags you can use when running the node. For example, if connecting to your node remotely, you'll probably want to use --ws-external and --rpc-cors all.

The syncing process will take a while depending on your bandwidth, processing power, disk speed and RAM. On a $10 DigitalOcean droplet, the process can complete in some 36 hours.

Congratulations, you're now syncing with Acala. Keep in mind that the process is identical when using any other Substrate chain.

When running as a simple sync node (above), only the state of the past 256 blocks will be kept. When validating, it defaults to archive mode. To keep the full state use the --pruning flag:

./target/release/acala --name "My node's name" --pruning archive --chain mandala

It is possible to almost quadruple synchronization speed by using an additional flag: --wasm-execution Compiled. Note that this uses much more CPU and RAM, so it should be turned off after the node is in sync.

Finally, you can use Docker to run your node in a container. Doing this is a bit more advanced, so it's best left to those who either already have familiarity with Docker or have completed the other set-up instructions in this guide. If you would like to connect to your node's WebSockets, ensure that you run your node with the --rpc-external and --ws-external flags.

Install Docker on Linux:

wget -qO- https://get.docker.com/ | sh

If you have Docker installed, you can use it to start your node without needing to build from source. Here is the command:

docker run -d --restart=always -p 30333:30333 -p 9933:9933 -p 9944:9944 -v node-data:/acala/data acala/acala-node:latest --chain mandala --base-path=/acala/data/01-001 --ws-port 9944 --rpc-port 9933 --port 30333 --ws-external --rpc-external --ws-max-connections 1000 --rpc-cors=all --unsafe-ws-external --unsafe-rpc-external --pruning=archive --name "Name of Telemetry"

Selecting a chain

Use the --chain <chainspec> option to select the chain. Can be mandala or a custom chain spec. By default, the client will start Acala.

Archive node

An archive node does not prune any block or state data. Use the --pruning archive flag. Certain types of nodes, like validators and sentries, must run in archive mode. Likewise, all events are cleared from state in each block, so if you want to store events then you will need an archive node.

Exporting blocks

To export blocks to a file, use export-blocks. Export in JSON (default) or binary (--binary true).

acala export-blocks --from 0 <output_file>

RPC ports

Use the --rpc-external flag to expose RPC ports and --ws-external to expose websockets. Not all RPC calls are safe to allow and you should use an RPC proxy to filter unsafe calls. Select ports with the --rpc-port and --ws-port options. To limit the hosts who can access, use the --rpc-cors and --ws-cors options.

Execution

The runtime must compile to WebAssembly and is stored on-chain. If the client's runtime is the same spec as the runtime that is stored on-chain, then the client will execute blocks using the client binary. Otherwise, the client will execute the Wasm runtime.

Therefore, when syncing the chain, the client will execute blocks from past runtimes using their associated Wasm binary. This feature also allows forkless upgrades: the client can execute a new runtime without updating the client.

Acala client has two Wasm execution methods, interpreted (default) and compiled. Set the preferred method to use when executing Wasm with --wasm-execution <Interpreted|Compiled>. Compiled execution will run much faster, especially when syncing the chain, but is experimental and may use more memory/CPU. A reasonable tradeoff would be to sync the chain with compiled execution and then restart the node with interpreted execution.

The node stores a number of files in: /home/$USER/.local/share/acala/chains/<chain name>/. You can set a custom path with --base-path <path>.

keystore

The keystore stores session keys, which are important for validator operations.

db

The database stores blocks and the state trie. If you want to start a new machine without resyncing, you can stop your node, back up the DB, and move it to a new machine.

To delete your DB and re-sync from genesis, run:

acala purge-chain
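The directory layout described above can be sketched as a small path helper, which is handy when scripting a backup of the db folder. The function name `chain_dir` is hypothetical, and the chain name is assumed to be the name as it appears on disk, which may differ from the value passed to --chain.

```sh
# chain_dir is a hypothetical helper, not part of the Acala client.
# It prints the directory that holds the keystore and db folders for a chain.
#   $1: chain name as it appears on disk (assumption: may differ from --chain)
#   $2: optional base path, as passed to --base-path
chain_dir() {
  chain=$1
  base=${2:-/home/$USER/.local/share/acala}
  printf '%s/chains/%s\n' "$base" "$chain"
}

# Example: path of the database for a node started with --base-path /tmp/node01
# echo "$(chain_dir local /tmp/node01)/db"
```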

Node status

You can check the node's health via RPC with:

curl -H "Content-Type: application/json" --data '{ "jsonrpc":"2.0", "method":"system_health", "params":[],"id":1 }' localhost:9933
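The same request shape works for any RPC method that takes no parameters. As a minimal sketch, the hypothetical helper `rpc_body` below (not part of the Acala client) prints the JSON body for a given method name so that it can be passed to curl.

```sh
# rpc_body is a hypothetical helper, not part of the Acala client.
# It prints a JSON-RPC 2.0 request body for a method that takes no parameters.
rpc_body() {
  printf '{"jsonrpc":"2.0","method":"%s","params":[],"id":1}\n' "$1"
}

# Example (needs a running node with the HTTP RPC port on 9933):
# curl -H "Content-Type: application/json" --data "$(rpc_body system_health)" localhost:9933
```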

Logs

The Acala client has a number of log targets. The most interesting to users may be:

- telemetry

- txpool

- usage

Other targets include: db, gossip, peerset, state-db, state-trace, sub-libp2p, trie, wasm-executor, wasm-heap.

The log levels, from least to most verbose, are:

- error

- warn

- info

- debug

- trace

All targets are set to info logging by default. You can adjust individual log levels using the --log (-l short) option, for example -l afg=trace,sync=debug or globally with -ldebug.
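When several targets are adjusted at once, it can help to validate the levels before starting the node. The sketch below uses a hypothetical helper, `log_flag`, which checks each target=level pair against the five levels listed above and prints a single -l argument. It does not check target names.

```sh
# log_flag is a hypothetical helper, not part of the Acala client.
# It validates the level in each target=level pair and prints one -l argument.
log_flag() {
  out=""
  for pair in "$@"; do
    level=${pair#*=}   # text after the first "="
    case "$level" in
      error|warn|info|debug|trace) ;;
      *) echo "unknown log level: $level" >&2; return 1 ;;
    esac
    out="${out:+$out,}$pair"   # join pairs with commas
  done
  printf '%s %s\n' "-l" "$out"
}

# Example: log_flag afg=trace sync=debug
```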

Telemetry & Metrics

The Acala client connects to telemetry by default. You can disable it with --no-telemetry, or connect only to specified telemetry servers with the --telemetry-url option (see the help options for instructions). Connecting to public telemetry may expose information that puts your node at higher risk of attack. You can run your own, private telemetry server or deploy a substrate-telemetry instance to a Kubernetes cluster using this Helm chart.

While the Acala Network will leverage Polkadot’s shared security and does not need dedicated validators itself, it will still require what we call Proof-of-Liveness (PoL) nodes to maintain the operation of the Acala network by performing some or all of the following actions:

- As Collator to propose new blocks to validators for verification

- As Network Operator to perform off-chain work such as liquidating unsafe positions

- Optionally as Oracle Operator

For Karura Network and Acala Mainnet, there will be incentives for becoming a PoL node. There is a reward for running a PoL node as a Collator and Network Operator, and an additional reward for also running as an Oracle Operator.

During the initial period, the Karura and Acala networks will be PoA, and they will gradually be upgraded to permissionless networks. Oracle operation will remain PoA until we have definitively secure decentralized solutions.

If you are interested in becoming a collator/liveness provider for the Acala Network, and joining the wider Acala ecosystem, please fill in this form.

Before we generate our own keys, and start a truly unique Acala network, let's learn the fundamentals by starting with a pre-defined network specification called local with two pre-defined (and definitely not private!) keys known as Alice and Bob.

Alice (or whoever is playing her) should run these commands from the directory that contains the acala binary.

./acala --base-path /tmp/alice --chain local --alice --port 30333 --ws-port 9944 --rpc-port 9933 --validator --rpc-methods=Unsafe --ws-external --rpc-external --ws-max-connections 1000 --rpc-cors=all --unsafe-ws-external --unsafe-rpc-external

Let's look at those flags in detail:

| Flags | Descriptions |
|---|---|
| --base-path | Specifies a directory where Acala should store all the data related to this chain. If this value is not specified, a default path will be used. If the directory does not exist it will be created for you. If other blockchain data already exists there you will get an error. Either clear the directory or choose a different one. |
| --chain local | Specifies which chain specification to use. There are a few prepackaged options including local, development, and staging but generally one specifies their own chain spec file. We'll specify our own file in a later step. |
| --alice | Puts the predefined Alice keys (both for block production and finalization) in the node's keystore. Generally one should generate their own keys and insert them with an RPC call. We'll generate our own keys in a later step. This flag also makes Alice a validator. |
| --port 30333 | Specifies the port that your node will listen for p2p traffic on. 30333 is the default and this flag can be omitted if you're happy with the default. If Bob's node will run on the same physical system, you will need to explicitly specify a different port for it. |
| --ws-port 9944 | Specifies the port that your node will listen for incoming WebSocket traffic on. 9944 is the default, so this parameter may be omitted. |
| --rpc-port 9933 | Specifies the port that your node will listen for incoming RPC traffic on. 9933 is the default, so this parameter may be omitted. |
| --node-key | The Ed25519 secret key to use for libp2p networking. The value is parsed as a hex-encoded Ed25519 32 byte secret key, i.e. 64 hex characters. WARNING: Secrets provided as command-line arguments are easily exposed. Use of this option should be limited to development and testing. |
| --telemetry-url | Tells the node to send telemetry data to a particular server. The one we've chosen here is hosted by Parity and is available for anyone to use. You may also host your own (beyond the scope of this article) or omit this flag entirely. |
| --validator | Means that we want to participate in block production and finalization rather than just sync the network. |

When the node starts you should see output similar to this.

2020-09-03 16:08:05.098 main INFO sc_cli::runner Acala Node

2020-09-03 16:08:05.098 main INFO sc_cli::runner ✌️ version 0.5.4-12db4ee-x86_64-linux-gnu

2020-09-03 16:08:05.098 main INFO sc_cli::runner ❤️ by Acala Developers, 2019-2020

2020-09-03 16:08:05.098 main INFO sc_cli::runner 📋 Chain specification: Local

2020-09-03 16:08:05.098 main INFO sc_cli::runner 🏷 Node name: Alice

2020-09-03 16:08:05.098 main INFO sc_cli::runner 👤 Role: AUTHORITY

2020-09-03 16:08:05.098 main INFO sc_cli::runner 💾 Database: RocksDb at /tmp/node01/chains/local/db

2020-09-03 16:08:05.098 main INFO sc_cli::runner ⛓ Native runtime: acala-504 (acala-0.tx1.au1)

2020-09-03 16:08:05.801 main WARN sc_service::builder Using default protocol ID "sup" because none is configured in the chain specs

2020-09-03 16:08:05.801 main INFO sub-libp2p 🏷 Local node identity is: 12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9 (legacy representation: Qmd49Akgjr9cLgb9MBerkWcqXiUQA7Z6Sc1WpwuwJ6Gv1p)

2020-09-03 16:08:07.117 main INFO sc_service::builder 📦 Highest known block at #3609

2020-09-03 16:08:07.119 tokio-runtime-worker INFO substrate_prometheus_endpoint::known_os 〽️ Prometheus server started at 127.0.0.1:9615

2020-09-03 16:08:07.128 main INFO babe 👶 Starting BABE Authorship worker

2020-09-03 16:08:09.834 tokio-runtime-worker INFO sub-libp2p 🔍 Discovered new external address for our node: /ip4/192.168.145.129/tcp/30333/p2p/12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9

2020-09-03 16:08:09.878 tokio-runtime-worker INFO sub-libp2p 🔍 Discovered new external address for our node: /ip4/127.0.0.1/tcp/30333/p2p/12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9

Notes

🏷 Local node identity is: 12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9... shows the Peer ID that Bob will need when booting from Alice's node. This value is derived from the node key that Alice's node uses, which can be set explicitly with --node-key.

You'll notice that no blocks are being produced yet. Blocks will start being produced once another node joins the network.

Now that Alice's node is up and running, Bob can join the network by bootstrapping from her node. His command will look very similar.

./acala --base-path /tmp/bob --chain local --bob --port 30334 --ws-port 9945 --rpc-port 9934 --validator --bootnodes /ip4/127.0.0.1/tcp/30333/p2p/12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9

- Because these two nodes are running on the same physical machine, Bob must specify different --base-path, --port, --ws-port, and --rpc-port values.

- Bob has added the --bootnodes flag and specified a single boot node, namely Alice's. He must correctly specify these three pieces of information, which Alice can supply for him:

  - Alice's IP address, probably 127.0.0.1

  - Alice's port, she specified 30333

  - Alice's Peer ID, copied from her log output.
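The three pieces of information listed above combine into the value passed to --bootnodes. As a minimal sketch, the hypothetical helper `bootnode_addr` assembles them in the same /ip4/.../tcp/.../p2p/... form used in Bob's command.

```sh
# bootnode_addr is a hypothetical helper, not part of the Acala client.
# It builds the --bootnodes value from an IPv4 address, a p2p port and a Peer ID.
bootnode_addr() {
  printf '/ip4/%s/tcp/%s/p2p/%s\n' "$1" "$2" "$3"
}

# Example with Alice's values from the log output above:
# bootnode_addr 127.0.0.1 30333 12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9
```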

If all is going well, after a few seconds, the nodes should peer together and start producing blocks. You should see some lines like the following in the console that started Alice's node.

2020-09-03 16:24:45.733 main INFO babe 👶 Starting BABE Authorship worker

2020-09-03 16:24:50.734 tokio-runtime-worker INFO substrate 💤 Idle (0 peers), best: #3807 (0x0fe1…13fa), finalized #3804 (0x9de1…1586), ⬇ 0 ⬆ 0

2020-09-03 16:24:52.667 tokio-runtime-worker INFO sub-libp2p 🔍 Discovered new external address for our node: /ip4/192.168.145.129/tcp/30334/p2p/12D3KooWNNioz32H5jygGeZLH6ZgJvcZMZR4MawjKV9FUZg6zBZd

2020-09-03 16:24:55.736 tokio-runtime-worker INFO substrate 💤 Idle (1 peers), best: #3807 (0x0fe1…13fa), finalized #3805 (0x9d23…20f1), ⬇ 1.2kiB/s ⬆ 1.4kiB/s

2020-09-03 16:24:56.077 tokio-runtime-worker INFO sc_basic_authorship::basic_authorship 🙌 Starting consensus session on top of parent 0x0fe19cbd2bae491db76b6f4ab684fcd9c98cdda70dd4a301ae659ffec4db13fa

These lines show that Bob has peered with Alice (1 peers), they have produced some blocks (best: #3807 (0x0fe1…13fa)), and blocks are being finalized (finalized #3805 (0x9d23…20f1)).

Looking at the console that started Bob's node, you should see something similar.

Subkey is a tool that generates keys specifically designed to be used with Substrate.

Begin by compiling and installing the utility. This may take up to 15 minutes or so.

git clone https://github.com/paritytech/substrate

cd substrate

cargo build -p subkey --release --target-dir=../target

cp -af ../target/release/subkey ~/.cargo/bin

We will need to generate at least two keys of each type. Every node will need to have its own keys.

Generate a mnemonic and see the sr25519 key and address associated with it. This key will be used by BABE for block production.

subkey generate --scheme sr25519

Secret phrase `infant salmon buzz patrol maple subject turtle cute legend song vital leisure` is account:

Secret seed: 0xa2b0200f9666b743402289ca4f7e79c9a4a52ce129365578521b0b75396bd242

Public key (hex): 0x0a11c9bcc81f8bd314e80bc51cbfacf30eaeb57e863196a79cccdc8bf4750d21

Account ID: 0x0a11c9bcc81f8bd314e80bc51cbfacf30eaeb57e863196a79cccdc8bf4750d21

SS58 Address: 5CHucvTwrPg8L2tjneVoemApqXcUaEdUDsCEPyE7aDwrtR8D

Now see the ed25519 key and address associated with the same mnemonic. This key will be used by grandpa for block finalization.

subkey inspect-key --scheme ed25519 "infant salmon buzz patrol maple subject turtle cute legend song vital leisure"

Secret phrase `infant salmon buzz patrol maple subject turtle cute legend song vital leisure` is account:

Secret seed: 0xa2b0200f9666b743402289ca4f7e79c9a4a52ce129365578521b0b75396bd242

Public key (hex): 0x1a0e2bf1e0195a1f5396c5fd209a620a48fe90f6f336d89c89405a0183a857a3

Account ID: 0x1a0e2bf1e0195a1f5396c5fd209a620a48fe90f6f336d89c89405a0183a857a3

SS58 Address: 5CesK3uTmn4NGfD3oyGBd1jrp4EfRyYdtqL3ERe9SXv8jUHb

The same UI that we used to see blocks being produced can also be used to generate keys. This option is convenient if you do not want to install Subkey. It can be used for production keys, but the system should not be connected to the internet when generating such keys.

On the "Accounts" tab, click "Add account". You do not need to provide a name, although you may if you would like to save this account for submitting transactions in addition to validating.

Generate an sr25519 key which will be used by BABE for block production. Take careful note of the mnemonic phrase, and the SS58 address which can be copied by clicking on the identicon in the top left.

Then generate an ed25519 key which will be used by grandpa for block finalization. Again, note the mnemonic phrase and SS58 address.

Now that each participant has their own keys generated, you're ready to create a custom chain specification. We will use this custom chain spec instead of the built-in local spec that we used previously.

In this example we will create a two-node network, but the process generalizes to more nodes in a straightforward manner.

Last time around, we used --chain local which is a predefined "chain spec" that has Alice and Bob specified as validators along with many other useful defaults.

Rather than writing our chain spec completely from scratch, we'll just make a few modifications to the one we used before. To start, we need to export the chain spec to a file named customSpec.json. Remember, further details about all of these commands are available by running ./acala --help.

./acala build-spec --disable-default-bootnode --chain local > customSpec.json

We need to change the fields under stakers and palletSession. That section looks like this:

"stakers": [

[

"5GxjN8Kn2trMFhvhNsgD5BCDKJ7z5iwRsWvJpiKY6zvxk3ij",

"5FeBfmXBdoqdTysYex8zAGinb3xLeRSG95dnWyo8zYzaH24s",

100000000000000000000000,

"Validator"

],

[

"5FeBfmXBdoqdTysYex8zAGinb3xLeRSG95dnWyo8zYzaH24s",

"5EuxUQwRcoTXuFnQkQ2NtHBiKCWVEWG1TskHcUxatbuXSnAF",

100000000000000000000000,

"Validator"

],

[

"5GNod3xkEzrUTaHeWGUMsMMEgsUb3EWEyCURzrYvYjrnah9n",

"5D4TarorfXLgDc1txxuHJnD8pCPG6emmtQETb5DKkNHJsFmt",

100000000000000000000000,

"Validator"

]

]

},

"palletSession": {

"keys": [

[

"5GxjN8Kn2trMFhvhNsgD5BCDKJ7z5iwRsWvJpiKY6zvxk3ij",

"5GxjN8Kn2trMFhvhNsgD5BCDKJ7z5iwRsWvJpiKY6zvxk3ij",

{

"grandpa": "5CpwFsV8j3k68fxJj6NLT2uFs26DfokVpqxQLXuNuQs5Wku4",

"babe": "5CFzF2tGAcqUvxTd2afZCCnhUSXyWUaa2N1KymcmXECR5Tqh"

}

],

[

"5FeBfmXBdoqdTysYex8zAGinb3xLeRSG95dnWyo8zYzaH24s",

"5FeBfmXBdoqdTysYex8zAGinb3xLeRSG95dnWyo8zYzaH24s",

{

"grandpa": "5EcKEGQAciYNtu4TKZgEbPtiUrvZEYDLARQfj6YMtqDbJ9EV",

"babe": "5EuxUQwRcoTXuFnQkQ2NtHBiKCWVEWG1TskHcUxatbuXSnAF"

}

],

[

"5GNod3xkEzrUTaHeWGUMsMMEgsUb3EWEyCURzrYvYjrnah9n",

"5GNod3xkEzrUTaHeWGUMsMMEgsUb3EWEyCURzrYvYjrnah9n",

{

"grandpa": "5EU3jqPSF5jmnTpRRiFCjh1g5TQ47CJKBkxiHTHeN4KBpJUC",

"babe": "5D4TarorfXLgDc1txxuHJnD8pCPG6emmtQETb5DKkNHJsFmt"

}

]

]

}

All we need to do is change the authority addresses listed (currently Alice and Bob) to our own addresses that we generated in the previous step. The sr25519 addresses go in the babe section, and the ed25519 addresses in the grandpa section. You may add as many validators as you like. For additional context, read about keys in Substrate.

You also need to add the babe address to ormlTokens and palletBalances.

In addition, you can change the addresses in stakers to your own validator addresses.

Once the chain spec is prepared, convert it to a "raw" chain spec. The raw chain spec contains all the same information, but it contains the encoded storage keys that the node will use to reference the data in its local storage. Distributing a raw spec ensures that each node will store the data at the proper storage keys.

./acala build-spec --chain customSpec.json --raw --disable-default-bootnode > customSpecRaw.json

Finally, share customSpecRaw.json with all the other validators in the network.

You've completed all the necessary prep work and you're now ready to launch your chain. This process is very similar to when you launched a chain earlier, as Alice and Bob. It's important to start with a clean base path, so if you plan to use the same path that you've used previously, please delete all contents from that directory.

The first participant can launch her node with:

./acala --base-path /tmp/node01 --chain ./customSpecRaw.json --name MyNode01 --port 30333 --ws-port 9944 --rpc-port 9933 --validator --rpc-methods=Unsafe --ws-external --rpc-external --ws-max-connections 1000 --rpc-cors=all --unsafe-ws-external --unsafe-rpc-external

Here are some differences from when we launched as Alice.

- I've omitted the --alice flag. Instead we will insert our own custom keys into the keystore through the RPC shortly.

- The --chain flag has changed to use our custom chain spec.

- I've added the optional --name flag. You may use it to give your node a human-readable name in the telemetry UI.

- The optional --rpc-methods=Unsafe flag has been added. As the name indicates, this flag is not safe to use in a production setting, but it allows this tutorial to stay focused on the topic at hand.

Once your node is running, you will again notice that no blocks are being produced. At this point, you need to add your keys into the keystore. Remember you will need to complete these steps for each node in your network. You will add two types of keys for each node: babe and grandpa keys. babe keys are necessary for block production; grandpa keys are necessary for block finalization.

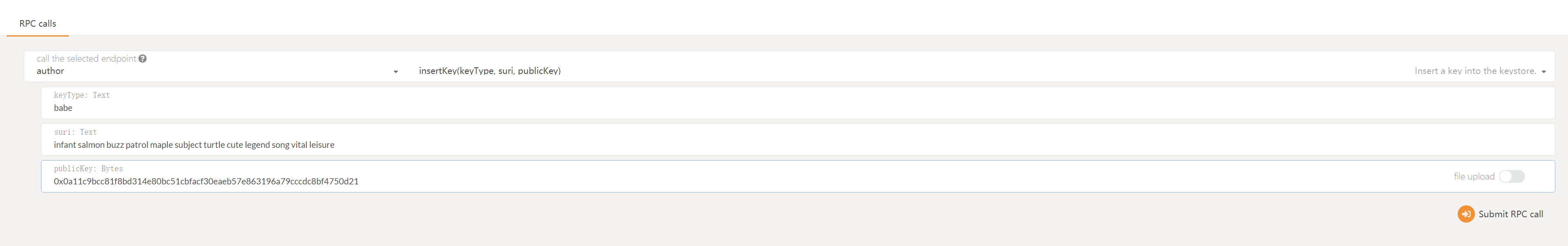

You can use the Apps UI to insert your keys into the keystore. Navigate to the "Toolbox" tab and the "RPC Call" sub-tab. Choose "author" and "insertKey". The fields can be filled like this:

keytype: babe

suri: <your mnemonic phrase>

(eg. infant salmon buzz patrol maple subject turtle cute legend song vital leisure)

publicKey: <your raw sr25519 key> (eg.0x0a11c9bcc81f8bd314e80bc51cbfacf30eaeb57e863196a79cccdc8bf4750d21)

If you generated your keys with the Apps UI you will not know your raw public key. In this case you may use your SS58 address (5CHucvTwrPg8L2tjneVoemApqXcUaEdUDsCEPyE7aDwrtR8D) instead.

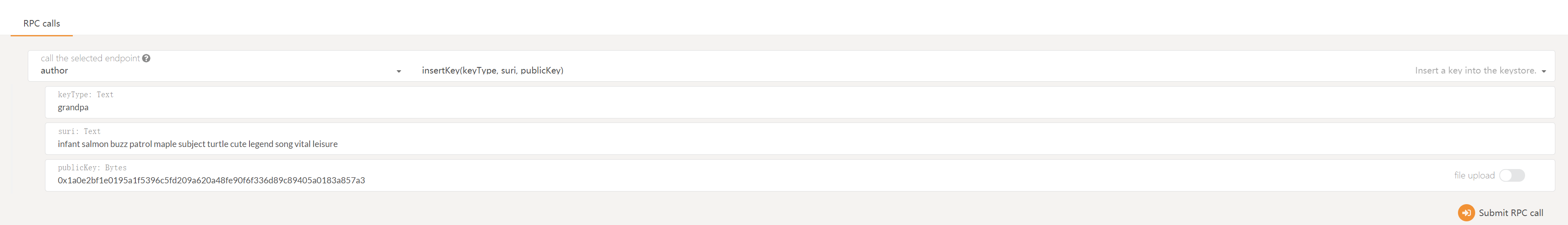

You've now successfully inserted your babe key. You can repeat those steps to insert your grandpa key (the ed25519 key)

keytype: grandpa

suri: <your mnemonic phrase>

(eg. infant salmon buzz patrol maple subject turtle cute legend song vital leisure)

publicKey: <your raw ed25519 key> (eg.0x1a0e2bf1e0195a1f5396c5fd209a620a48fe90f6f336d89c89405a0183a857a3)

If you generated your keys with the Apps UI you will not know your raw public key. In this case you may use your SS58 address (5CesK3uTmn4NGfD3oyGBd1jrp4EfRyYdtqL3ERe9SXv8jUHb) instead.

If you are following these steps for the second node in the network, you must connect the UI to the second node before inserting the keys.

You can also insert a key into the keystore by using curl from the command line. This approach may be preferable in a production setting, where you may be using a cloud-based virtual private server.

Because security is of the utmost concern in a production environment, it is important to take every precaution possible. In this case, that means taking care that you do not leave any traces of your keys behind, such as in your terminal's history. Create a file that you will use to define the body for your curl request:

{

"jsonrpc":"2.0",

"id":1,

"method":"author_insertKey",

"params": [

"<babe/grandpa>",

"<mnemonic phrase>",

"<public key>"

]

}

# Submit a new key via RPC, connect to where your `rpc-port` is listening

curl http://localhost:9933 -H "Content-Type:application/json;charset=utf-8" -d "@/path/to/file"

If you enter the command and parameters correctly, the node will return a JSON response as follows.

{ "jsonrpc": "2.0", "result": null, "id": 1 }

Make sure you delete the file that contains the keys when you are done.
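One way to create the request file without leaving it readable by other users is sketched below. The helper name `write_key_request` is hypothetical. It assumes the values contain no double quotes or backslashes, since it does no JSON escaping.

```sh
# write_key_request is a hypothetical helper, not part of the Acala client.
# It writes an author_insertKey request body to a file readable only by the
# current user. Assumes the values contain no double quotes or backslashes.
write_key_request() {
  file=$1
  key_type=$2    # babe or grandpa
  suri=$3        # mnemonic phrase
  public_key=$4  # hex-encoded public key
  rm -f -- "$file"   # start from a fresh file so the umask below applies
  (
    umask 077    # files created in this subshell get owner-only permissions
    printf '{"jsonrpc":"2.0","id":1,"method":"author_insertKey","params":["%s","%s","%s"]}\n' \
      "$key_type" "$suri" "$public_key" > "$file"
  )
}
```

To keep the mnemonic out of the shell history, the values can be read into variables with `read -r` first and then passed to the function, for example `write_key_request /path/to/file babe "$suri" "$public_key"`.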

Subsequent validators can now join the network. This can be done by specifying the --bootnodes parameter as Bob did previously.

./acala --base-path /tmp/node02 --chain ./customSpecRaw.json --name MyNode02 --port 30334 --ws-port 9945 --rpc-port 9934 --validator --bootnodes /ip4/127.0.0.1/tcp/30333/p2p/12D3KooWPcd2fQhT2HVGeUg9JSR6Ct3PqqxUjzjhvM1YZsjRo9Pu

Now you're ready to add keys to its keystore by following the process (in the previous section) just like you did for the first node.

If you're inserting keys with the UI, you must connect the UI to the second node's WebSocket endpoint before inserting the second node's keys.

A node will not be able to produce blocks if it has not added its babe key.

Block finalization can only happen if more than two-thirds of the validators have added their grandpa keys to their keystores. Since this network was configured with two validators (in the chain spec), block finalization can occur after the second node has added its keys (i.e. 50% < 66% < 100%).
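The "more than two-thirds" rule can be checked with a little integer arithmetic. The sketch below uses a hypothetical helper, `min_finalizers`, which prints the smallest number of validators that is strictly greater than two-thirds of the validator count.

```sh
# min_finalizers is a hypothetical helper for illustration only.
# It prints the smallest count strictly greater than two-thirds of $1,
# using integer division: floor(2n / 3) + 1.
min_finalizers() {
  echo $(( $1 * 2 / 3 + 1 ))
}

# Example: min_finalizers 2   (the two-validator network in this guide)
```

For the two-validator network configured here the result is 2, which matches the statement that finalization can start only after the second node has added its keys.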

Reminder: All validators must be using identical chain specifications in order to peer. You should see the same genesis block and state root hashes.

You will notice that even after you add the keys for the second node no block finalization has happened (finalized #0 (0x0ded…9b9d)). Substrate nodes require a restart after inserting a grandpa key. Kill your nodes and restart them with the same commands you used previously. Now blocks should be finalized.

The most common way for a beginner to run a validator is on a cloud server running Linux. You may choose whatever VPS provider you prefer, and whatever operating system you are comfortable with. For this guide we will be using Ubuntu 18.04, but the instructions should be similar for other platforms.

The transaction weights in Acala were benchmarked on standard hardware. It is recommended that validators run at least the standard hardware in order to ensure they are able to process all blocks in time. The following are not minimum requirements, but if you decide to run with less than this, be aware that you might have performance issues.

For the full details of the standard hardware please see here.

- CPU - Intel(R) Core(TM) i7-7700K CPU @ 4.20GHz

- Storage - An NVMe solid state drive. Should be reasonably sized to deal with blockchain growth. Starting around 80GB - 160GB will be okay for the first six months of Acala, but will need to be re-evaluated every six months.

- Memory - 64GB.

The specs posted above are by no means the minimum specs that you could use when running a validator, however you should be aware that if you are using less you may need to toggle some extra optimizations in order to be equal to other validators that are running the standard.

NTP is a networking protocol designed to synchronize the clocks of computers over a network. Currently it is required that validators' local clocks stay reasonably in sync, so you should be running NTP or a similar service.

If you are using Ubuntu 18.04 / 19.04, the NTP client should be installed by default. You can check whether you have the NTP client by running:

timedatectl

If NTP is installed and running, you should see System clock synchronized: yes (or a similar message). If you do not see it, you can install it by executing:

sudo apt-get install ntp

ntpd will be started automatically after install. You can query ntpd for status information to verify that everything is working:

sudo ntpq -p

WARNING: Skipping this can result in the validator node missing block authorship opportunities. If the clock is out of sync (even by a small amount), the blocks the validator produces may not get accepted by the network. This will result in ImOnline heartbeats making it on chain, but zero allocated blocks making it on chain.
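The clock check can be scripted, for instance as part of a pre-start script. The sketch below uses a hypothetical helper, `clock_synced`, which reads timedatectl output on standard input and succeeds only if it contains the exact line quoted above. The wording may differ between systems, so treat the pattern as an assumption.

```sh
# clock_synced is a hypothetical helper for illustration only.
# It reads timedatectl output from stdin and returns success only when the
# text reports a synchronized clock. The exact wording is an assumption.
clock_synced() {
  grep -q 'System clock synchronized: yes'
}

# Example:
# timedatectl | clock_synced && echo "clock is in sync"
```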

Note: By default, Validator nodes are in archive mode. If you've already synced the chain not in archive mode, you must first remove the database with acala purge-chain and then ensure that you run Acala with the --pruning=archive option.

You may run a validator node in non-archive mode by adding the following flags: --unsafe-pruning --pruning <NUM OF BLOCKS>. Note, however, that the databases of archive and non-archive nodes are not compatible with each other, so to switch you will need to purge the chain data.

You can begin syncing your node by running the following command:

./acala --pruning=archive

- Bond the ACA of the Stash account. These ACA will be put at stake for the security of the network and can be slashed.

- Select the Controller. This is the account that will decide when to start or stop validating.

First, go to the Staking section. Click on "Account Actions", and then the "Validator" button.

- Stash account - Select your Stash account.

- Controller account - Select the Controller account created earlier.

- Value bonded - How much ACA from the Stash account you want to bond/stake. Note that you do not need to bond all of the ACA in that account. Also note that you can always bond more ACA later. However, withdrawing any bonded amount requires waiting for the duration of the unbonding period. On Kusama, the unbonding period is 7 days. On Acala, the planned unbonding period is 28 days.

- Payment destination - The account where the rewards from validating are sent.

Note: The session keys are consensus critical, so if you are not sure whether your node has the current session keys that you submitted in the setKeys transaction, you can use one of the two available RPC methods to query your node: hasKey to check for a specific key, or hasSessionKeys to check the full session key public key string.

Once your node is fully synced, stop the process by pressing Ctrl-C. At your terminal prompt, you will now start running the node.

./acala --validator --name "name on telemetry"

You can give your validator any name that you like, but note that others will be able to see it, and it will be included in the list of all servers using the same telemetry server. Since numerous people are using telemetry, it is recommended that you choose something likely to be unique.

You need to tell the chain your Session keys by signing and submitting an extrinsic. This is what associates your validator node with your Controller account on Acala.

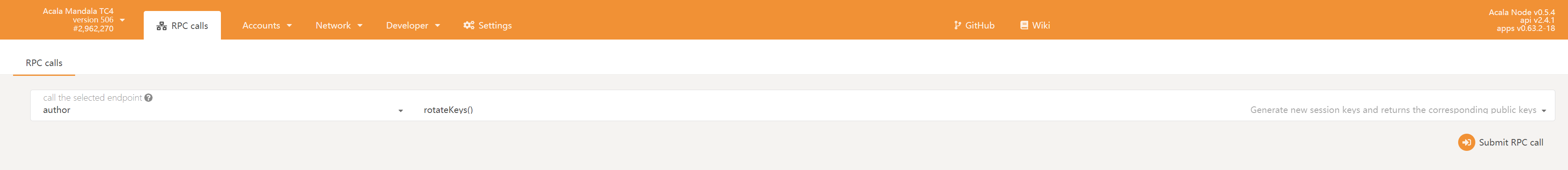

You can generate your Session keys in the client via the apps RPC. If you are doing this, make sure that you have the AcalaJS-Apps explorer attached to your validator node. You can configure the apps dashboard to connect to the endpoint of your validator in the Settings tab.

Once you have ensured that you are connected to your node, the easiest way to set session keys for your node is to call the author_rotateKeys RPC request, which creates new keys in your validator's keystore. Navigate to the Toolbox tab, select RPC Calls, then select the author > rotateKeys() option. Remember to save the output that you get back for a later step.

If you are on a remote server, it is easier to run this command on the same machine (while the node is running with the default HTTP RPC port configured):

curl -H "Content-Type: application/json" -d '{"id":1, "jsonrpc":"2.0", "method": "author_rotateKeys", "params":[]}' http://localhost:9933

The output will have a hex-encoded "result" field. The result is the concatenation of the four public keys. Save this result for a later step.
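A minimal sketch of capturing that "result" field from the command line follows. The helper names are illustrative, and the split into individual keys assumes that each public key is 32 bytes (64 hex characters), which you should confirm for your runtime.

```shell
# Print the "result" string from a JSON-RPC response read on stdin.
rpc_result() {
  sed -n 's/.*"result"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Split a 0x-prefixed session key string into 64-hex-character chunks,
# one per line. ASSUMPTION: every public key is 32 bytes long.
split_session_keys() {
  printf '%s\n' "${1#0x}" | fold -w 64
}

# Example (run on the validator host):
# keys=$(curl -s -H "Content-Type: application/json" \
#   -d '{"id":1, "jsonrpc":"2.0", "method": "author_rotateKeys", "params":[]}' \
#   http://localhost:9933 | rpc_result)
# echo "$keys" > session_keys.txt
# split_session_keys "$keys"
```

Each call to author_rotateKeys generates a fresh set of keys, so store the output of a single call rather than calling it a second time to read the value back.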

You can restart your node at this point.

You need to tell the chain your Session keys by signing and submitting an extrinsic. This is what associates your validator with your Controller account.

Go to Staking > Account Actions, and click "Set Session Key" on the bonding account you generated earlier. Enter the output from author_rotateKeys in the field and click "Set Session Key".

Submit this extrinsic and you are now ready to start validating.

To verify that your node is live and synchronized, head to Telemetry and find your node. Note that this will show all nodes on the Acala network, which is why it is important to select a unique name!

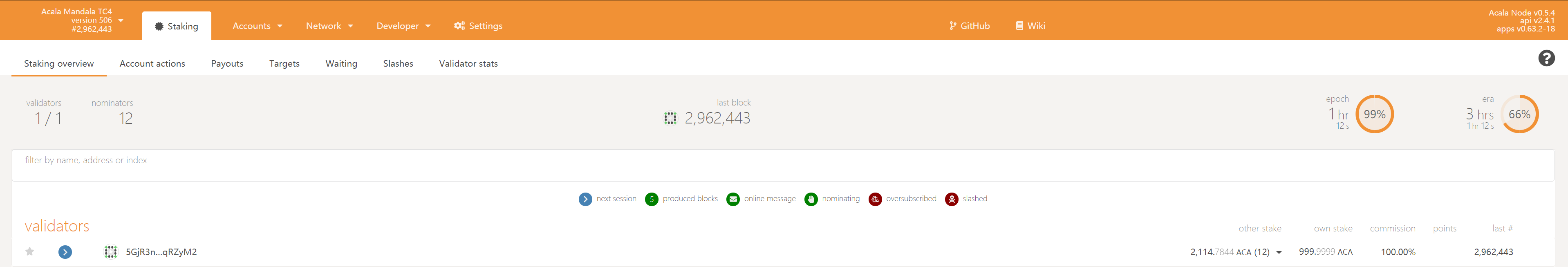

If everything looks good, go ahead and click on "Validate" in Acala UI.

- Payment preferences - You can specify the percentage of the rewards that will get paid to you. The remainder will be split among your nominators.

Click "Validate".

If you go to the "Staking" tab, you will see a list of active validators currently running on the network. At the top of the page, it shows the number of validator slots that are available as well as the number of nodes that have signaled their intention to be a validator. You can go to the "Waiting" tab to double check to see whether your node is listed there.

The validator set is refreshed every era. In the next era, if there is a slot available and your node is selected to join the validator set, your node will become an active validator. Otherwise, it will remain in the waiting queue until it is selected. There is no need to restart if you are not selected for the validator set in a particular era. However, it may be necessary to increase the amount of ACA staked or seek out nominators for your validator in order to join the validator set.

We will use Wireguard to configure the VPN. Wireguard is a fast and secure VPN that uses state-of-the-art cryptography. If you want to learn more about Wireguard, see the official Wireguard documentation. Before we move on to the next step, configure the firewall to open the required ports.

# ssh port

ufw allow 22/tcp

# wireguard port

ufw allow 51820/udp

# libp2p port (note: only required on the public node)

ufw allow 30333/tcp

ufw enable

# double check the firewall rules

ufw status verbose

# install linux headers

apt install linux-headers-$(uname -r)

add-apt-repository ppa:wireguard/wireguard

apt-get update # you can skip this on Ubuntu 18.04

apt-get install wireguard

There are two commands you will use quite a bit when setting up Wireguard: wg is the configuration utility for managing Wireguard tunnel interfaces, and wg-quick is a utility for starting and stopping the interface.

To generate the public / private keypair, execute the following commands:

cd /etc/wireguard

umask 077

wg genkey | sudo tee privatekey | wg pubkey | sudo tee publickey

You will see that two files, publickey and privatekey, have been created. As their names suggest, publickey contains the public key and privatekey contains the private key of the keypair.

Now create a wg0.conf file under the /etc/wireguard/ directory. This file will be used to configure the interface.

Here is a wg0.conf configuration template for the validator.

[Interface]

# specify the address you want to assign for this machine.

Address = 10.0.0.1/32

# the private key you just generated

PrivateKey = 8MeWtQjBrmYazzwni7s/9Ow37U8eECAfAs0AIuffFng=

# listening port of your server

ListenPort = 51820

# if you use wg to add a new peer when running, it will automatically

# save the newly added peers to your configuration file

# without requiring a restart

SaveConfig = true

# Public Node A

[Peer]

# replace this with public node A's public key

PublicKey = Vdepw3JhRKDytCwjwA0nePLFiNsfB4KxGewl4YwAFRg=

# public ip address for your public node machine

Endpoint = 112.223.334.445:51820

# replace this with public node A's interface address

AllowedIPs = 10.0.0.2/32

# keep the connection alive by sending a keepalive packet every 21 seconds

PersistentKeepalive = 21

Note: In this guide, we only set up 1 peer (public node).
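To avoid pasting the private key into the file by hand, the validator template above could be rendered from the key files generated earlier. This is only a sketch: render_wg_conf is a hypothetical helper, not part of Wireguard, and the peer values in the example are placeholders to replace with those of your public node.

```shell
# Print a wg0.conf for one interface and one peer.
# Arguments: interface address, private key, peer public key,
#            peer endpoint (host:port), peer allowed IPs.
render_wg_conf() {
  cat <<EOF
[Interface]
Address = $1
PrivateKey = $2
ListenPort = 51820
SaveConfig = true

[Peer]
PublicKey = $3
Endpoint = $4
AllowedIPs = $5
PersistentKeepalive = 21
EOF
}

# Example for the validator (run as root; all peer values are placeholders):
# umask 077
# render_wg_conf 10.0.0.1/32 "$(cat /etc/wireguard/privatekey)" \
#   PUBLIC_NODE_A_PUBLIC_KEY PUBLIC_NODE_A_IP:51820 10.0.0.2/32 \
#   > /etc/wireguard/wg0.conf
```

Keeping umask 077 in effect when writing the file means the private key stays unreadable to other users on the machine.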

Repeat the previous steps (1 and 2) on your public node. Its wg0.conf configuration file will look like this:

[Interface]

Address = 10.0.0.2/32

PrivateKey = eCii0j3IWi4w0hScc8myUj5QjXjjt5rp1VVuqlEmM24=

ListenPort = 51820

SaveConfig = true

# Validator

[Peer]

# replace this with the validator public key

PublicKey = iZeq+jm4baF3pTWR1K1YEyLPhrfpIckGjY/DfwCoKns=

# public ip address of the validator

Endpoint = 55.321.234.4:51820

# replace it with the validator interface address

AllowedIPs = 10.0.0.1/32

PersistentKeepalive = 21

If everything goes well, you are ready to test the connection.

To start the tunnel interface, execute the following command on both your validator and your public node.

wg-quick up wg0

# The console would output something like this

#[#] ip link add wg0 type wireguard

#[#] wg setconf wg0 /dev/fd/63

#[#] ip -4 address add 10.0.0.1/24 dev wg0

#[#] ip link set mtu 1420 up dev wg0

Note: If you are not able to start Wireguard, or you get errors during startup, restart the computer and run the above command again.

You can check the status of the interface by running wg:

# Output

interface: wg0

public key: iZeq+jm4baF3pTWR1K1YEyLPhrfpIckGjY/DfwCoKns=

private key: (hidden)

listening port: 51820

peer: Vdepw3JhRKDytCwjwA0nePLFiNsfB4KxGewl4YwAFRg=

endpoint: 112.223.334.445:51820

allowed ips: 10.0.0.2/32

latest handshake: 18 seconds ago

transfer: 580 B received, 460 B sent

persistent keepalive: every 25 seconds

Nodes will use libp2p as the networking layer to establish peers and gossip messages. In order to specify nodes as peers, you must do so using a multiaddress (multiaddr), which includes a node's Peer Identity (PeerId). A validator node will need to specify the multiaddr of its sentry node(s), and a sentry node will specify the multiaddr of its validator node(s).

multiaddr - A multiaddr is a flexible encoding of multiple layers of protocols into a human readable addressing scheme. For example, /ip4/127.0.0.1/udp/1234 is a valid multiaddr that specifies you want to reach the 127.0.0.1 IPv4 loopback address with UDP packets on port 1234. Addresses in Substrate based chains will often take the form:

/ip4/<IP ADDRESS>/tcp/<P2P PORT>/p2p/<PEER IDENTITY>

- IP ADDRESS - Unless the node is public, this will often be the IP address of the node within the private network.

- P2P PORT - This is the port that nodes will send p2p messages over. By default, this will be 30333, but it can be explicitly specified using the --port CLI flag.

- PEER IDENTITY - The PeerId is a unique identifier for each peer.
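As an illustration of the format above, a small helper can assemble the multiaddr from its three parts. build_multiaddr is a hypothetical name used only for this sketch; it performs no validation beyond checking that an address and a PeerId were supplied.

```shell
# Print a multiaddr of the form /ip4/<IP ADDRESS>/tcp/<P2P PORT>/p2p/<PEER IDENTITY>.
# $1: IPv4 address, $2: PeerId, $3 (optional): p2p port, default 30333.
build_multiaddr() {
  if [ -z "$1" ] || [ -z "$2" ]; then
    echo "usage: build_multiaddr IP_ADDRESS PEER_ID [P2P_PORT]" >&2
    return 1
  fi
  printf '/ip4/%s/tcp/%s/p2p/%s\n' "$1" "${3:-30333}" "$2"
}

# Example:
# build_multiaddr 10.0.0.2 SENTRY_NODE_PEER_ID
# prints /ip4/10.0.0.2/tcp/30333/p2p/SENTRY_NODE_PEER_ID
```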

You can find the PeerId by starting the node and looking for the identity printed in the logs, as follows:

./acala --validator

2020-09-03 16:08:05.098 main INFO sc_cli::runner Acala Node

2020-09-03 16:08:05.098 main INFO sc_cli::runner ✌️ version 0.5.4-12db4ee-x86_64-linux-gnu

2020-09-03 16:08:05.098 main INFO sc_cli::runner ❤️ by Acala Developers, 2019-2020

2020-09-03 16:08:05.098 main INFO sc_cli::runner 📋 Chain specification: Local

2020-09-03 16:08:05.098 main INFO sc_cli::runner 🏷 Node name: Alice

2020-09-03 16:08:05.098 main INFO sc_cli::runner 👤 Role: AUTHORITY

2020-09-03 16:08:05.098 main INFO sc_cli::runner 💾 Database: RocksDb at /tmp/node01/chains/local/db

2020-09-03 16:08:05.098 main INFO sc_cli::runner ⛓ Native runtime: acala-504 (acala-0.tx1.au1)

2020-09-03 16:08:05.801 main WARN sc_service::builder Using default protocol ID "sup" because none is configured in the chain specs

2020-09-03 16:08:05.801 main INFO sub-libp2p 🏷 Local node identity is: 12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9 (legacy representation: Qmd49Akgjr9cLgb9MBerkWcqXiUQA7Z6Sc1WpwuwJ6Gv1p)

Here we can see our PeerId is 12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9.

Lastly, we can also find the PeerId by calling the following RPC call from the same host:

curl -H "Content-Type: application/json" -d '{"id":1, "jsonrpc":"2.0", "method": "system_localPeerId", "params":[]}' http://localhost:9933

# Output

{"jsonrpc":"2.0","result":"12D3KooWNHQzppSeTxsjNjiX6NFW1VCXSJyMBHS48QBmmGs4B3B9","id":1}

Start your sentry with the --sentry flag:

# Sentry Node

acala \

--name "Sentry-A" \

--sentry /ip4/10.0.0.1/tcp/30333/p2p/VALIDATOR_NODE_PEER_ID

Start the validator with the --validator and --sentry-nodes flags:

# Validator Node

acala \

--name "Validator" \

--reserved-only \

--sentry-nodes /ip4/SENTRY_VPN_ADDRESS/tcp/30333/p2p/SENTRY_NODE_PEER_ID \

--validator

You should see that your validator has 1 peer, which is the connection from your sentry node. Repeat the above steps to spin up a few more if you think one sentry node is not enough.

Note: You may have to start the sentry node first in order for the validator node to recognize it as a peer. If it does not show up as a peer, try restarting the validator node after the sentry is already running.
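The peer count can also be read from the command line rather than from the node's log output. The sketch below assumes the validator's HTTP RPC is reachable at http://localhost:9933 and uses the standard Substrate system_health method; peer_count is an illustrative helper name.

```shell
# Print the "peers" count from a system_health JSON-RPC response on stdin.
peer_count() {
  sed -n 's/.*"peers"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Example (run on the validator host):
# curl -s -H "Content-Type: application/json" \
#   -d '{"id":1, "jsonrpc":"2.0", "method": "system_health", "params":[]}' \
#   http://localhost:9933 | peer_count
```

With a single sentry node and --reserved-only set, the expected value is 1.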

Validators perform critical functions for the network, and as such, have strict uptime requirements. Validators may have to go offline for periods of time to upgrade the client software or the host machine. This guide will walk you through upgrading your machine and keeping your validator online.

The process will take several hours, so make sure you understand the instructions first and plan accordingly.

Session keys are stored in the client and used to sign validator operations. They are what link your validator node to your Controller account. You cannot change them mid-Session.

Validators keep a database with all of their votes. If two machines have the same Session keys but different databases, they risk equivocating. For this reason, we will generate new Session keys each time we change machines.

You will need to start a second validator to operate while you upgrade your primary. Throughout these steps, we will refer to the validator that you are upgrading as "Validator A" and the second one as "Validator B."

- Start a second node and connect it to your sentry nodes. Once it is synced, use the --validator flag. This is "Validator B."

- Generate Session keys in Validator B.

- Submit a set_key extrinsic from your Controller account with your new Session keys.

- Take note of the Session that this extrinsic was executed in.

It is imperative that your Validator A keep running in this Session. set_key only takes effect in the next Session.

Validator B is now acting as your validator. You can safely take Validator A offline. See note at bottom.

- Stop Validator A.

- Perform your system or client upgrade.

- Start Validator A, sync the database, and connect it to your sentry nodes.

- Generate new Session keys in Validator A.

- Submit a set_key extrinsic from your Controller account with your new Session keys for Validator A.

- Take note of the Session that this extrinsic was executed in.

Again, it is imperative that Validator B keep running until the next Session.

Once the Session changes, Validator A will take over. You can safely stop Validator B.

NOTE: To verify that the Session has changed, make sure that a block in the new Session is finalized.
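One way to approximate this check from the command line is to compare the finalized block number with the block at which the new Session began. The sketch below assumes the node's HTTP RPC is reachable at http://localhost:9933 and uses the standard Substrate methods chain_getFinalizedHead and chain_getHeader. The helper names are illustrative, and the first block of the new Session is a value you note by hand, for example from the explorer.

```shell
# rpc METHOD PARAMS_JSON: post a JSON-RPC request to the local node
# (assumed endpoint http://localhost:9933).
rpc() {
  curl -s -H "Content-Type: application/json" \
    -d "{\"id\":1, \"jsonrpc\":\"2.0\", \"method\": \"$1\", \"params\":$2}" \
    http://localhost:9933
}

# Print the hex "number" field from a chain_getHeader response on stdin.
header_number() {
  sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\(0x[0-9a-fA-F]*\)".*/\1/p'
}

# Convert a hex block number such as 0x1a2b to decimal.
hex_to_dec() {
  printf '%d\n' "$1"
}

# Succeed if the finalized block number ($1, decimal) has reached the
# first block of the new Session ($2, decimal, noted by hand).
finalized_past() {
  [ "$1" -ge "$2" ]
}

# Example (NEW_SESSION_FIRST_BLOCK is a placeholder):
# head=$(rpc chain_getFinalizedHead '[]' | sed -n 's/.*"result":"\([^"]*\)".*/\1/p')
# number=$(rpc chain_getHeader "[\"$head\"]" | header_number)
# finalized_past "$(hex_to_dec "$number")" NEW_SESSION_FIRST_BLOCK && echo "new Session finalized"
```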